SciTech-BigDataAIML-Tensorflow-Introduction to Tensors

https://tensorflow.google.cn/guide/tensor

TensorFlow supports eager execution and graph execution, and defaults to eager execution:

- In eager execution, operations are evaluated immediately.
  Note that during eager execution, you may discover your Tensors are actually of type EagerTensor. This is an internal detail.
- In graph execution, a computational graph is constructed for later evaluation.
  In TensorFlow, tf.functions are a common way to define graph execution.
- Note: Typically, anywhere a TensorFlow function expects a Tensor as input, the function will also accept anything that can be converted to a Tensor using tf.convert_to_tensor.
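The two execution modes and the automatic conversion rule can be sketched as follows. This is a minimal illustration assuming TensorFlow 2.x with eager execution enabled; the function name `scaled_sum` and the variable names are hypothetical and not part of the original notes.

```python
import numpy as np
import tensorflow as tf

# Eager execution (the default): the op runs immediately and returns a value.
eager_sum = tf.add(1, 2)
print(type(eager_sum).__name__, eager_sum.numpy())

# Graph execution: tf.function traces the Python function into a graph
# on the first call and then runs that graph.
@tf.function
def scaled_sum(x, y):  # hypothetical helper for illustration
    return 2 * (x + y)

graph_result = scaled_sum(tf.constant(1), tf.constant(2))

# Anything convertible to a Tensor is accepted where a Tensor is expected.
from_list = tf.convert_to_tensor([1, 2, 3])           # explicit conversion
from_numpy = tf.reduce_sum(np.array([1.0, 2.0, 3.0]))  # implicit conversion
```

Both `tf.add(1, 2)` and `tf.reduce_sum(np.array(...))` receive plain Python or NumPy values; TensorFlow converts them before running the op.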

Introduction to Tensors

Tensors are multi-dimensional arrays with a uniform type (called a dtype). If you're familiar with NumPy, tensors are (kind of) like np.arrays.

tf.dtypes includes all supported dtypes:

- To inspect a tf.Tensor's data type, use the Tensor.dtype property.
- When creating a tf.Tensor from a Python object, you may optionally specify the datatype. If you don't, TensorFlow chooses a datatype that can represent your data: it converts Python integers to tf.int32 and Python floating point numbers to tf.float32. Otherwise, TensorFlow uses the same rules NumPy uses when converting to arrays.
- You can cast from type to type.
- Tensors and tf.TensorShape objects have convenient properties for accessing these attributes, such as Tensor.dtype, Tensor.ndim, and Tensor.shape.
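The dtype rules above can be checked directly. A small sketch, assuming TensorFlow 2.x; the variable names are chosen for illustration only.

```python
import numpy as np
import tensorflow as tf

ints = tf.constant([1, 2, 3])                        # Python ints   -> tf.int32
floats = tf.constant([1.0, 2.0, 3.0])                # Python floats -> tf.float32
from_np = tf.constant(np.array([1.0, 2.0, 3.0]))     # NumPy float64 array keeps float64
explicit = tf.constant([1, 2, 3], dtype=tf.float64)  # dtype given explicitly

# Casting creates a new tensor with the requested dtype.
as_int = tf.cast(floats, tf.int32)

for name, t in [("ints", ints), ("floats", floats),
                ("from_np", from_np), ("explicit", explicit), ("as_int", as_int)]:
    print(name, t.dtype)
```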

All tensors are immutable, just like Python numbers and strings: you can never update the contents of a tensor, only create a new one.

- Constants: tf.constant (a function)
  The tf.string dtype is used for all raw bytes data in TensorFlow.
  The tf.io module contains functions for converting data to and from bytes, including decoding images and parsing csv.
- tf.Tensor (a class): All eager tf.Tensor values are immutable (in contrast to tf.Variable).
  A tf.Tensor represents a multidimensional array of elements.
  All elements are of a single known data type.
  When writing a TensorFlow program, the main object that is manipulated and passed around is the tf.Tensor.
- Variables: tf.Variable (a class) objects are mutable.
  Since normal tf.Tensor objects are immutable, use a tf.Variable to store model weights (or other mutable state) in TensorFlow.
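The contrast between an immutable tf.Tensor and a mutable tf.Variable can be sketched as below. This assumes TensorFlow 2.x in eager mode; `tf.tensor_scatter_nd_update` is used here only as one way to build a modified copy, and the variable names are illustrative.

```python
import tensorflow as tf

t = tf.constant([1, 2, 3])

# Item assignment on a tensor is rejected: tensors are immutable.
try:
    t[0] = 10
    assigned = True
except TypeError:
    assigned = False

# To "change" a tensor you create a new one; the original is untouched.
updated = tf.tensor_scatter_nd_update(t, indices=[[0]], updates=[10])

# A tf.Variable holds mutable state and is updated in place with assign().
v = tf.Variable([1, 2, 3])
v.assign([10, 2, 3])

print(assigned, t.numpy(), updated.numpy(), v.numpy())
```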

import tensorflow as tf

tensorOfStrs = tf.constant(["Gray wolf", "Quick brown fox"])

# <tf.Tensor: shape=(2,), dtype=string,

# numpy=array([b'Gray wolf', b'Quick brown fox'], dtype=object)>

# It is OK for the strings in a tensor to have different lengths.

# Note: the shape is (2,). The string length is not included.

print(tf.strings.split(tensorOfStrs, sep=" "))

# <tf.RaggedTensor [[b'Gray', b'wolf'], [b'Quick', b'brown', b'fox']]>

tensorOfUnicodeStr = tf.constant("🥳👍")

# <tf.Tensor: shape=(), dtype=string,

# numpy=b'\xf0\x9f\xa5\xb3\xf0\x9f\x91\x8d'>

text = tf.constant("1 10 100")

tf.strings.to_number( tf.strings.split(text, " ") )

# <tf.Tensor: shape=(3,), dtype=float32, numpy=array([1.0, 10.0, 100.0], dtype=float32)>

byte_strings = tf.strings.bytes_split(tf.constant("Duck"))

# <tf.Tensor: shape=(4,), dtype=string, numpy=array([b'D', b'u', b'c', b'k'], dtype=object)>

tf.io.decode_raw(tf.constant("Duck"), tf.uint8)

# <tf.Tensor: shape=(4,), dtype=uint8, numpy=array([68, 117, 99, 107], dtype=uint8)>

var = tf.Variable([0.0, 0.0, 0.0])

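var.assign([1, 2, 3]) # NOT: var = [1, 2, 3], which only rebinds the Python name to a list

var.assign_add([1, 1, 1]) # NOT: var += [1, 1, 1] (not supported for tf.Variable)

var.assign_sub([1, 1, 1]) # NOT: var -= [1, 1, 1] (not supported for tf.Variable)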

# Refer to the Variables guide for details.

the_f64_tensor = tf.constant([2.2, 3.3, 4.4], dtype=tf.float64)

# Now, cast to a float16

the_f16_tensor = tf.cast(the_f64_tensor, dtype=tf.float16)

# <tf.Tensor: shape=(3,), dtype=float16, numpy=array([2.199219, 3.300781, 4.398438], dtype=float16)>

# Now, cast to a uint8 and lose the decimal precision

the_u8_tensor = tf.cast(the_f16_tensor, dtype=tf.uint8)

# <tf.Tensor: shape=(3,), dtype=uint8, numpy=array([2, 3, 4], dtype=uint8)>

About shapes

Tensors have shapes, which represent the structure of a tensor.

In effect, a tensor is a hierarchical organization of data: its axes form levels ranging from local to global, and each level has its own corresponding weights.

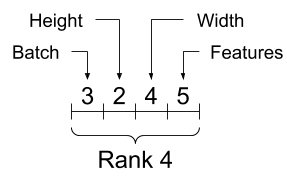

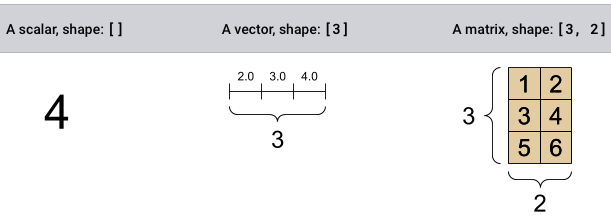

Some vocabulary:

Size: The total number of items in the tensor, the product of the shape vector's elements.

Shape: The length (number of elements) of each of the axes of a tensor.

- A Tensor's shape (that is, the rank of the Tensor and the size of each dimension) may not always be fully known. In tf.function definitions, the shape may only be partially known.
- Most operations produce tensors of fully-known shapes if the shapes of their inputs are also fully known, but in some cases it's only possible to find the shape of a tensor at execution time.
- A number of specialized tensors are available: see tf.Variable, tf.constant, tf.placeholder (tf.compat.v1.placeholder in TensorFlow 2), tf.sparse.SparseTensor, and tf.RaggedTensor.
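A partially known shape can be observed inside a tf.function. The sketch below assumes TensorFlow 2.x; the function `runtime_shape`, the list `traced_static_shapes`, and the `[None, 3]` signature are hypothetical choices made for illustration.

```python
import tensorflow as tf

traced_static_shapes = []  # filled in while the function is being traced

@tf.function(input_signature=[tf.TensorSpec(shape=[None, 3], dtype=tf.float32)])
def runtime_shape(x):
    # This Python line runs at trace time, when only the static shape
    # is available and the first dimension is still unknown (None).
    traced_static_shapes.append(x.shape.as_list())
    # tf.shape(x) is an op: it returns the full shape at execution time.
    return tf.shape(x)

dynamic = runtime_shape(tf.zeros([5, 3]))

print(traced_static_shapes)  # static shape seen during tracing
print(dynamic.numpy())       # fully known shape at execution time
```

`Tensor.shape` gives the static shape known when the graph is built, while `tf.shape` gives the shape of the actual value once the graph runs.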

Rank: Number of tensor axes:

- A scalar has rank 0,
- a vector has rank 1,
- a matrix has rank 2.

Note: Although you may see reference to a "tensor of two dimensions", a rank-2 tensor does not usually describe a 2D space.
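The vocabulary so far (rank, shape, size) maps onto tensor properties as in the following sketch, which assumes TensorFlow 2.x in eager mode; the example tensors are illustrative.

```python
import tensorflow as tf

scalar = tf.constant(4)                         # rank 0
vector = tf.constant([2.0, 3.0, 4.0])           # rank 1
matrix = tf.constant([[1, 2], [3, 4], [5, 6]])  # rank 2
rank_4 = tf.zeros([3, 2, 4, 5])                 # rank 4

ranks = [t.ndim for t in (scalar, vector, matrix, rank_4)]
shape = rank_4.shape.as_list()  # length of each axis
size = int(tf.size(rank_4))     # product of the shape: 3 * 2 * 4 * 5

print(ranks, shape, size)
```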

Axis (Dimension): A particular dimension of a tensor. The base tf.Tensor class requires tensors to be "rectangular", that is, along each axis, every element is the same size.

Context of axes:

- ORG.: Axes are often ordered from global to local: the BATCH AXIS first, followed by spatial dimensions, and FEATURES for each LOCATION LAST.
- INDEXING: While axes are often referred to by their indices, you should always keep track of the meaning of each.
  - Single-axis indexing: TensorFlow follows standard Python indexing rules, similar to indexing a list or a string in Python, and the basic rules for NumPy indexing.
    - indexes start at 0
    - negative indices count backwards from the end
    - colons (:) are used for slices: start:stop:step
  - Multi-axis indexing: Higher rank tensors are indexed by passing multiple indices. The exact same rules as in the single-axis case apply to each axis independently.

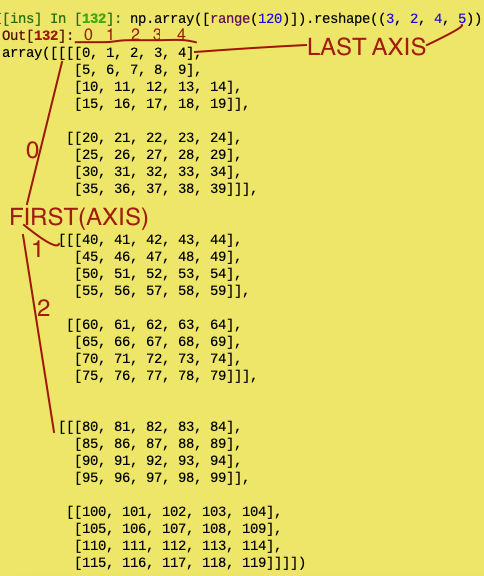
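The indexing rules and the batch-first, features-last axis order can be tried out as follows. This is a sketch assuming TensorFlow 2.x; the tensors, and the `[8, 32, 32, 3]` layout (batch, height, width, features), are illustrative assumptions.

```python
import tensorflow as tf

rank_1 = tf.constant([0, 1, 1, 2, 3, 5, 8, 13, 21, 34])
first = rank_1[0]          # indexes start at 0
last = rank_1[-1]          # negative indices count from the end
before_4 = rank_1[:4]      # slice start:stop
every_other = rank_1[::2]  # slice with a step

rank_2 = tf.constant([[1, 2], [3, 4], [5, 6]])
second_row = rank_2[1, :]     # one index per axis
second_column = rank_2[:, 1]
single_value = rank_2[1, 1]

# Assumed layout: batch, height, width, features.
images = tf.zeros([8, 32, 32, 3])
last_feature = images[..., -1]  # last feature at every location of every example

print(every_other.numpy(), second_column.numpy(), last_feature.shape)
```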

- IMPL.: This way feature vectors are contiguous regions of memory.
  The data maintains its layout in memory and a new tensor is created, with the requested shape, pointing to the same data.
  TensorFlow uses C-style "row-major" memory ordering, where incrementing the rightmost index corresponds to a single step in memory.
- Reshaping:
  - You can reshape a tensor into a new shape. The tf.reshape operation is fast and cheap as the underlying data does not need to be duplicated.
  - Typically the only reasonable use of tf.reshape is to combine or split adjacent axes (or add/remove 1s).

np.array([range(6)]).reshape([1, 2, 3])

=> array([[[0, 1, 2], [3, 4, 5]]])

np.array([range(6)]).reshape([1, 2, 3]).reshape([-1])

=> array([0, 1, 2, 3, 4, 5])
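The same ideas apply to tf.reshape. The sketch below, assuming TensorFlow 2.x, shows row-major flattening, combining adjacent axes, adding a length-1 axis, and why reshaping is not a substitute for swapping axes; the `[3, 2, 5]` shape is an arbitrary example.

```python
import tensorflow as tf

x = tf.reshape(tf.range(30), [3, 2, 5])

# Flattening reveals the row-major order: the rightmost index moves fastest.
flat = tf.reshape(x, [-1])

# Reasonable uses: combine adjacent axes, or add/remove an axis of length 1.
merged = tf.reshape(x, [3 * 2, 5])
with_unit_axis = tf.reshape(x, [3, 2, 5, 1])

# Reshaping to [2, 3, 5] runs, but it does not swap the first two axes:
# it just re-cuts the same flat sequence. Use tf.transpose to swap axes.
recut = tf.reshape(x, [2, 3, 5])
swapped = tf.transpose(x, perm=[1, 0, 2])

print(flat.numpy()[:7], merged.shape, with_unit_axis.shape)
print(recut[0, 1].numpy(), swapped[0, 1].numpy())
```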

Although the base tf.Tensor must be rectangular, there are specialized types of tensors that can handle different shapes:

- Ragged tensors (see RaggedTensor below)
- Sparse tensors (see SparseTensor below)

ragged_tensor = tf.ragged.constant( [ [0, 1, 2, 3], [4, 5], [6, 7, 8], [9] ] )

# <tf.RaggedTensor [[0, 1, 2, 3], [4, 5], [6, 7, 8], [9]]>

print(ragged_tensor.shape)

# TensorShape([4, None])

tensorOfStrs = tf.constant( ["Gray wolf", "Quick brown fox"] )

tf.strings.split( tensorOfStrs, sep=" ")

# <tf.RaggedTensor [[b'Gray', b'wolf'], [b'Quick', b'brown', b'fox']]>

# Sparse tensors store values by index in a memory-efficient manner.

# You can convert sparse tensors to dense by using tf.sparse.to_dense.

sparse_tensor = tf.sparse.SparseTensor(

indices=[ [0, 0], [1, 2] ],

values=[1, 2],

dense_shape=[3, 4]

)

print(sparse_tensor, "\n")

# SparseTensor(

#   indices=tf.Tensor([[0 0] [1 2]], shape=(2, 2), dtype=int64),

#   values=tf.Tensor([1 2], shape=(2,), dtype=int32),

#   dense_shape=tf.Tensor([3 4], shape=(2,), dtype=int64)

# )

print(tf.sparse.to_dense(sparse_tensor))

# tf.Tensor( [ [1 0 0 0], [0 0 2 0], [0 0 0 0] ], shape=(3, 4), dtype=int32)

Math on Tensors:

You can do math on tensors, including addition, element-wise multiplication, and matrix multiplication.

a = tf.constant([ [1, 2], [3, 4] ])

b = tf.ones([2,2], dtype=tf.int32) # tf.constant([ [1, 1], [1, 1] ])

tf.add(a, b) # equals: a + b # element-wise addition

tf.multiply(a, b) # equals: a * b # element-wise multiplication

tf.matmul(a, b) # equals: a @ b # matrix multiplication

Tensors are used in all kinds of operations (or "Ops").

c = tf.constant([[4.0, 5.0], [10.0, 1.0]])

print(tf.reduce_max(c)) # Find the largest value

print(tf.math.argmax(c)) # Find the index of the largest value

print(tf.nn.softmax(c)) # Compute the softmax

# tf.Tensor(10.0, shape=(), dtype=float32)

# tf.Tensor([1 0], shape=(2,), dtype=int64)

# tf.Tensor([ [2.6894143e-01 7.3105854e-01], [9.9987662e-01 1.2339458e-04] ], shape=(2, 2), dtype=float32)
