5-Using the DataLoader

1. Using the DataLoader

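① A Dataset only tells our program where the data lives and which sample each index corresponds to. For example, index 0 maps to the first sample in the dataset.

② A DataLoader feeds that data into the neural network. On each iteration it fetches samples from the Dataset. How it fetches them (batch size, order, number of worker processes, whether to drop an incomplete final batch) is controlled by the DataLoader's parameters.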
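To make this division of labor concrete, here is a minimal sketch built on a hypothetical toy dataset (`SquaresDataset` is made up for illustration and is not part of PyTorch). The Dataset only defines `__len__` and how index `i` maps to one sample. The DataLoader decides which indices to fetch and stacks the samples into batches.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class SquaresDataset(Dataset):
    """Hypothetical toy dataset: sample i is the pair (tensor([i]), i**2)."""

    def __init__(self, n):
        self.n = n

    def __len__(self):
        # Number of samples; the DataLoader uses this to know which indices exist
        return self.n

    def __getitem__(self, idx):
        # Map one index to one sample (input, target)
        x = torch.tensor([float(idx)])
        y = idx * idx
        return x, y


dataset = SquaresDataset(10)
print(dataset[0])  # the sample stored at index 0

# The DataLoader fetches dataset[i] for several indices and stacks them into one batch
loader = DataLoader(dataset, batch_size=4, shuffle=False)
for xs, ys in loader:
    print(xs.shape, ys)
```

With 10 samples and `batch_size=4`, the loop yields batches of 4, 4 and 2 samples. The last one is smaller because `drop_last` defaults to `False`.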

```python
import torchvision
from torch.utils.data import DataLoader

# Prepare the test dataset (download=True fetches CIFAR-10 into ./dataset if it is not there yet)
test_data = torchvision.datasets.CIFAR10("./dataset", train=False,
                                         transform=torchvision.transforms.ToTensor(), download=True)

img, target = test_data[0]
print(img.shape)
print(img)

# batch_size=4: the loader fetches four samples (img0, target0 = dataset[i0], ..., img3, target3 = dataset[i3])
# and returns them together as one batch. With shuffle=True the indices are drawn in random order, not 0..3.
test_loader = DataLoader(dataset=test_data, batch_size=4, shuffle=True, num_workers=0, drop_last=False)

# Iterate over the DataLoader to get the batches it has packed
for data in test_loader:
    imgs, targets = data  # imgs: [4, 3, 32, 32] (four 3-channel 32×32 images); targets: the four labels
    print(imgs.shape)
    print(targets)
```

Output (the loop prints one shape/label pair for each of the 2,500 batches; the labels differ from run to run because shuffle=True):

```
torch.Size([3, 32, 32])
tensor([[[0.6196, 0.6235, 0.6471, ..., 0.5373, 0.4941, 0.4549],
         [0.5961, 0.5922, 0.6235, ..., 0.5333, 0.4902, 0.4667],
         [0.5922, 0.5922, 0.6196, ..., 0.5451, 0.5098, 0.4706],
         ...,
         [0.2667, 0.1647, 0.1216, ..., 0.1490, 0.0510, 0.1569],
         [0.2392, 0.1922, 0.1373, ..., 0.1020, 0.1137, 0.0784],
         [0.2118, 0.2196, 0.1765, ..., 0.0941, 0.1333, 0.0824]],

        [[0.4392, 0.4353, 0.4549, ..., 0.3725, 0.3569, 0.3333],
         [0.4392, 0.4314, 0.4471, ..., 0.3725, 0.3569, 0.3451],
         [0.4314, 0.4275, 0.4353, ..., 0.3843, 0.3725, 0.3490],
         ...,
         [0.4863, 0.3922, 0.3451, ..., 0.3804, 0.2510, 0.3333],
         [0.4549, 0.4000, 0.3333, ..., 0.3216, 0.3216, 0.2510],
         [0.4196, 0.4118, 0.3490, ..., 0.3020, 0.3294, 0.2627]],

        [[0.1922, 0.1843, 0.2000, ..., 0.1412, 0.1412, 0.1294],
         [0.2000, 0.1569, 0.1765, ..., 0.1216, 0.1255, 0.1333],
         [0.1843, 0.1294, 0.1412, ..., 0.1333, 0.1333, 0.1294],
         ...,
         [0.6941, 0.5804, 0.5373, ..., 0.5725, 0.4235, 0.4980],
         [0.6588, 0.5804, 0.5176, ..., 0.5098, 0.4941, 0.4196],
         [0.6275, 0.5843, 0.5176, ..., 0.4863, 0.5059, 0.4314]]])
torch.Size([4, 3, 32, 32])
tensor([2, 6, 4, 5])
torch.Size([4, 3, 32, 32])
tensor([7, 2, 1, 6])
torch.Size([4, 3, 32, 32])
tensor([6, 7, 4, 4])
...
torch.Size([4, 3, 32, 32])
tensor([7, 4, 2, 7])
```

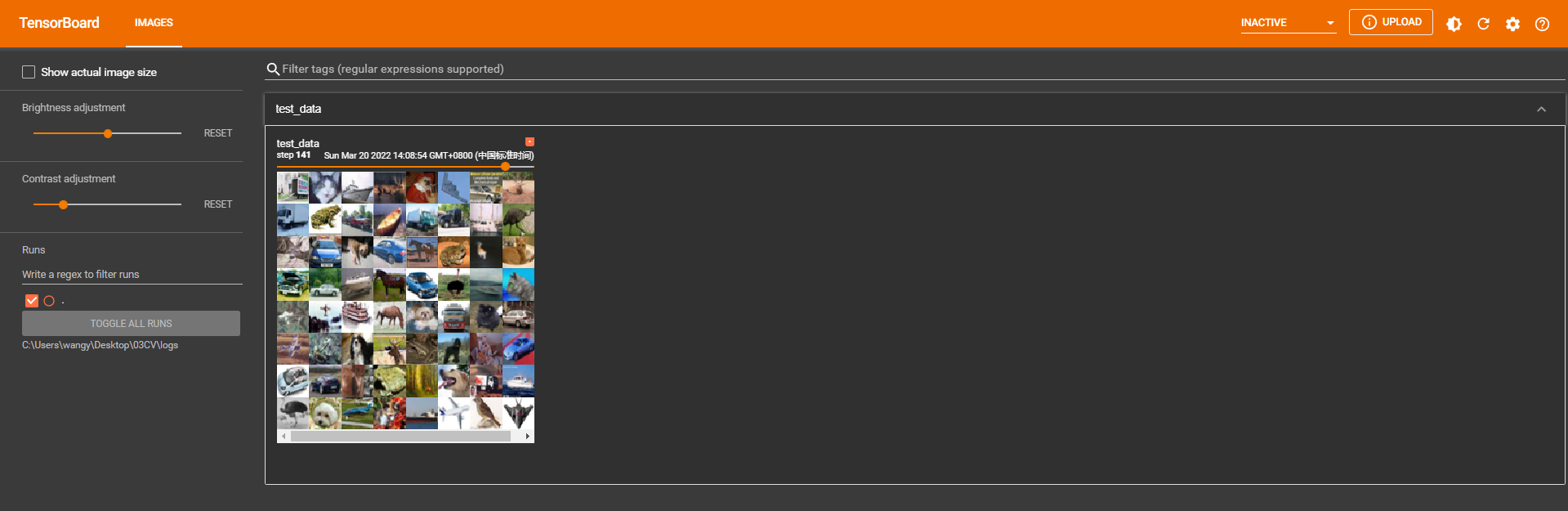
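Internally, the DataLoader first decides which indices belong to each batch and only then calls `dataset[i]` for them. With `shuffle=False` it uses a `SequentialSampler`, with `shuffle=True` a `RandomSampler`, and it wraps either one in a `BatchSampler`. The sketch below inspects that index grouping directly. It uses a plain `range(10)` as a stand-in for a 10-sample dataset, an assumption made only to keep the output short (the CIFAR-10 test set has 10,000 samples).

```python
from torch.utils.data import BatchSampler, RandomSampler, SequentialSampler

data_source = range(10)  # stand-in for a dataset with 10 samples

# shuffle=False: indices are taken in order 0, 1, 2, ...
sequential_batches = list(BatchSampler(SequentialSampler(data_source), batch_size=4, drop_last=False))
print(sequential_batches)  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]

# shuffle=True: a fresh random permutation of the indices, grouped the same way
random_batches = list(BatchSampler(RandomSampler(data_source), batch_size=4, drop_last=False))
print(random_batches)
```

Every index appears exactly once per pass in both cases; shuffling only changes which indices end up together in a batch.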

2. Displaying batches in TensorBoard

```python
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Prepare the test dataset
test_data = torchvision.datasets.CIFAR10("./dataset", train=False,
                                         transform=torchvision.transforms.ToTensor(), download=True)

# batch_size=64: each iteration returns 64 samples (in random order, since shuffle=True) packed into one batch
test_loader = DataLoader(dataset=test_data, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

writer = SummaryWriter("logs")

step = 0
# Iterate over the DataLoader and log every batch as a grid of images
for data in test_loader:
    imgs, targets = data  # imgs: [64, 3, 32, 32]; the final batch is smaller because drop_last=False
    writer.add_images("test_data", imgs, step)  # image tensors are expected in NCHW layout by default
    step = step + 1

writer.close()
```
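Each loop iteration above writes one batch under the tag "test_data" at a new `step`, so the number of steps TensorBoard shows equals the number of batches. A quick check of that count for the 10,000-image CIFAR-10 test set follows. The helper `num_batches` is hypothetical, written only for this calculation; it mirrors how `len(DataLoader)` behaves for a map-style dataset.

```python
import math


def num_batches(num_samples, batch_size, drop_last):
    """Number of batches a DataLoader yields for a map-style dataset of num_samples items."""
    if drop_last:
        return num_samples // batch_size  # the incomplete final batch is discarded
    return math.ceil(num_samples / batch_size)  # the final batch may be smaller


n = 10000  # size of the CIFAR-10 test split
print(num_batches(n, 64, drop_last=False))  # prints 157 (steps 0..156)
print(n % 64)                               # prints 16, the size of the last batch
print(num_batches(n, 64, drop_last=True))   # prints 156
print(num_batches(n, 4, drop_last=False))   # prints 2500, the batch count in section 1
```

So the run with `drop_last=False` logs 157 steps and the final step shows only 16 images. With `drop_last=True`, that partial batch is skipped and each pass has 156 steps.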

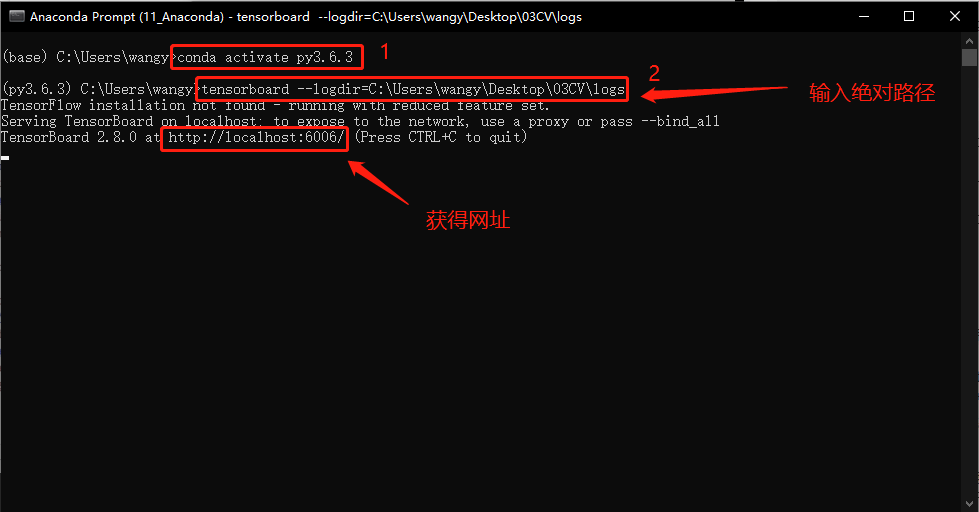

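① In an Anaconda terminal, activate the py3.6.3 environment and run

tensorboard --logdir=C:\Users\wangy\Desktop\03CV\logs

The --logdir path must point at the logs folder created by SummaryWriter("logs"), which is relative to the script's working directory. Copy the URL that TensorBoard prints into the browser's address bar and press Enter to view the logged images.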

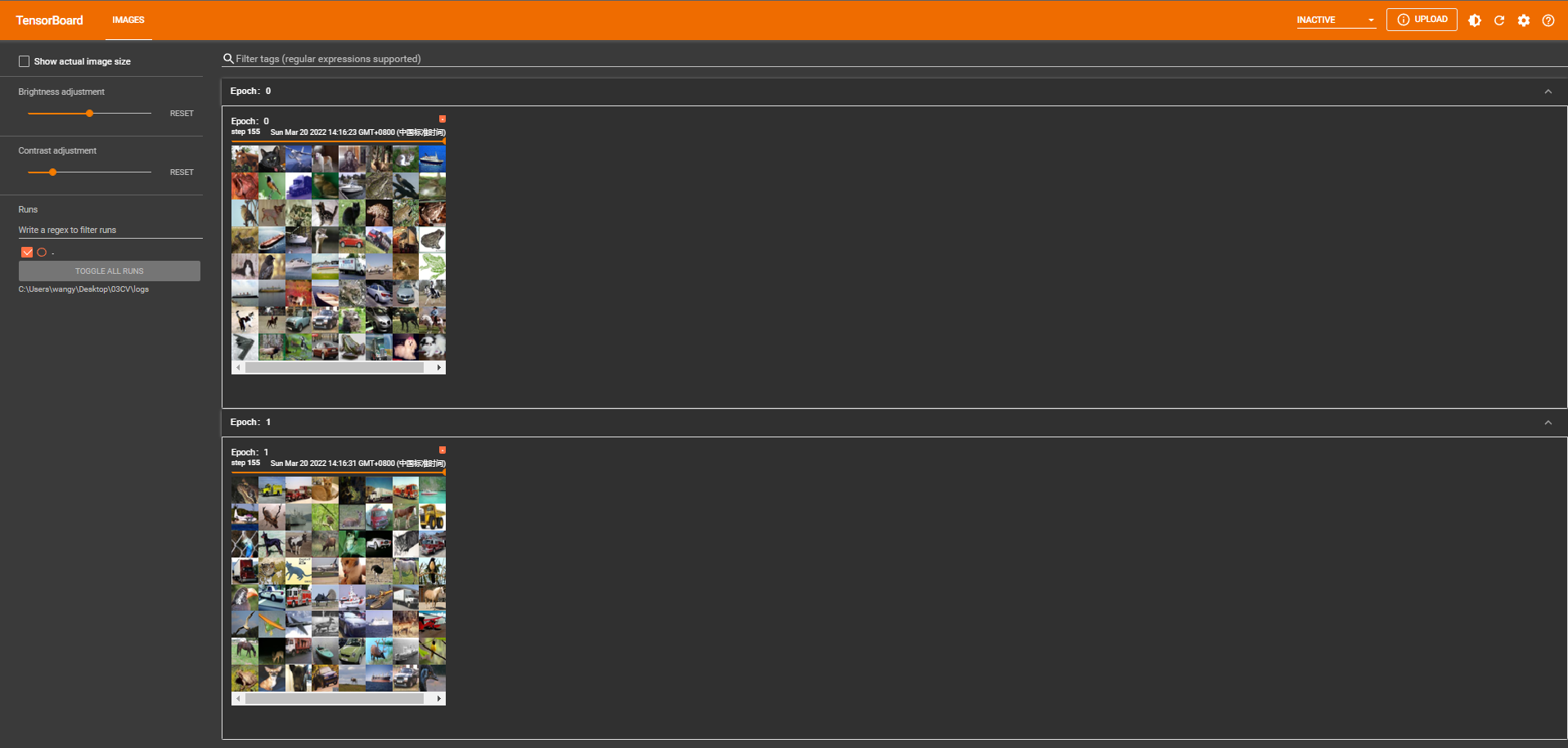

3. Running the DataLoader for multiple epochs

```python
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Prepare the test dataset
test_data = torchvision.datasets.CIFAR10("./dataset", train=False,
                                         transform=torchvision.transforms.ToTensor(), download=True)

# batch_size=64 with drop_last=True: the final incomplete batch is discarded, so every batch has exactly 64 images
test_loader = DataLoader(dataset=test_data, batch_size=64, shuffle=True, num_workers=0, drop_last=True)

writer = SummaryWriter("logs")

# Two passes (epochs) over the data; shuffle=True reorders the samples at the start of each epoch
for epoch in range(2):
    step = 0
    for data in test_loader:
        imgs, targets = data  # imgs: [64, 3, 32, 32]; targets: 64 labels
        writer.add_images("Epoch:{}".format(epoch), imgs, step)  # one tag per epoch
        step = step + 1

writer.close()
```
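Because `shuffle=True`, the DataLoader draws a new permutation at the start of every epoch, so the images logged under "Epoch:0" and "Epoch:1" at the same step generally differ. The sketch below uses a toy `TensorDataset` of 10 numbered samples as a stand-in for CIFAR-10 (an assumption for brevity) to show both effects: a fresh order per epoch, and `drop_last=True` discarding the incomplete final batch. The seeded `torch.Generator` is only there to make the run repeatable.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy dataset: sample i is just the number i (stand-in for images)
dataset = TensorDataset(torch.arange(10))

# A seeded generator makes the shuffling repeatable across runs
g = torch.Generator().manual_seed(0)
loader = DataLoader(dataset, batch_size=4, shuffle=True, drop_last=True, generator=g)

print(len(loader))  # 10 // 4 = 2 full batches per epoch; the 2 leftover samples are dropped
epochs = []
for epoch in range(2):
    seen = []
    for (batch,) in loader:  # TensorDataset samples are 1-tuples, collated into a 1-element list
        seen.append(batch.tolist())
    epochs.append(seen)
    print("epoch", epoch, seen)
```

Each epoch yields two batches of four distinct indices, and which two samples get dropped can change from epoch to epoch because the permutation is redrawn.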
