2. Installing and starting a single-node Kafka broker

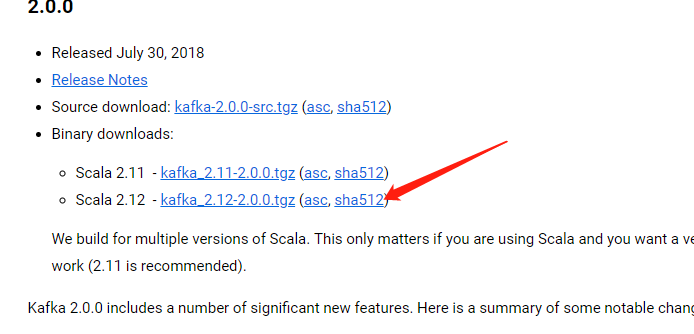

Download Kafka from http://kafka.apache.org/downloads

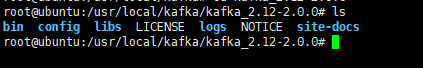

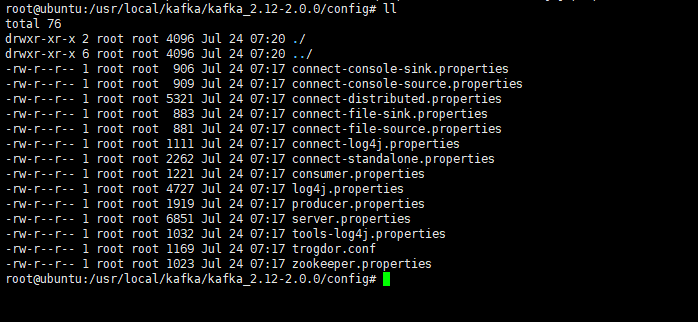

The directory layout of the Kafka distribution is as follows:

Go into the bin directory. ZooKeeper has to be started before Kafka.

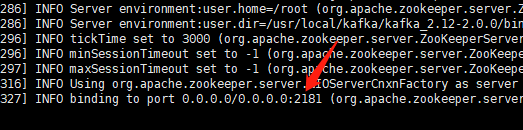

Start ZooKeeper with ./zookeeper-server-start.sh ../config/zookeeper.properties

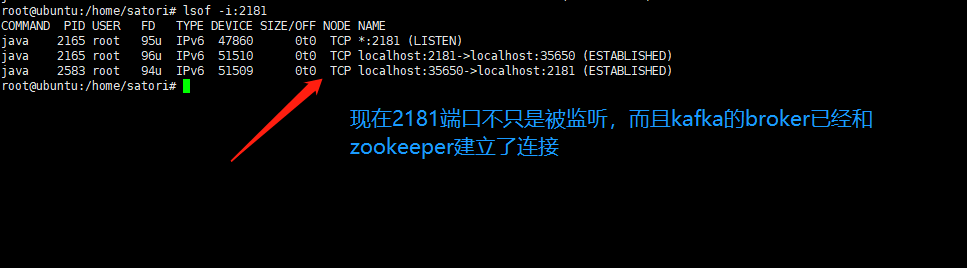

The output shows that port 2181 has been bound. Let's check it.

ZooKeeper started successfully.
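One way to do that port check from the shell is sketched below. The helper name has_listener is hypothetical, and the sketch assumes the ss tool is installed (netstat -ltn output works as input too). It only inspects the table of listening sockets and says nothing about whether ZooKeeper itself is healthy.

```shell
# has_listener PORT: reads `ss -ltn` (or `netstat -ltn`) output on stdin and
# succeeds if some address column in it ends in ":PORT".
has_listener() {
    grep -Eq ":$1([[:space:]]|\$)"
}

# 2181 is the ZooKeeper client port reported in the startup log.
if ss -ltn 2>/dev/null | has_listener 2181; then
    echo "something is listening on 2181"
else
    echo "nothing found on 2181 (or ss is not available)"
fi
```

The same helper can be reused later for the broker port, for example `ss -ltn | has_listener 9092`.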

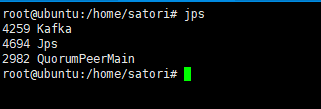

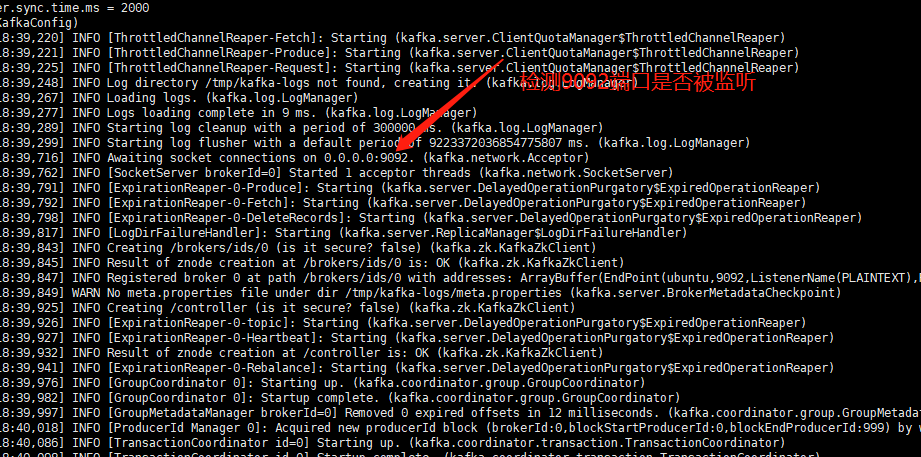

Next, start Kafka.

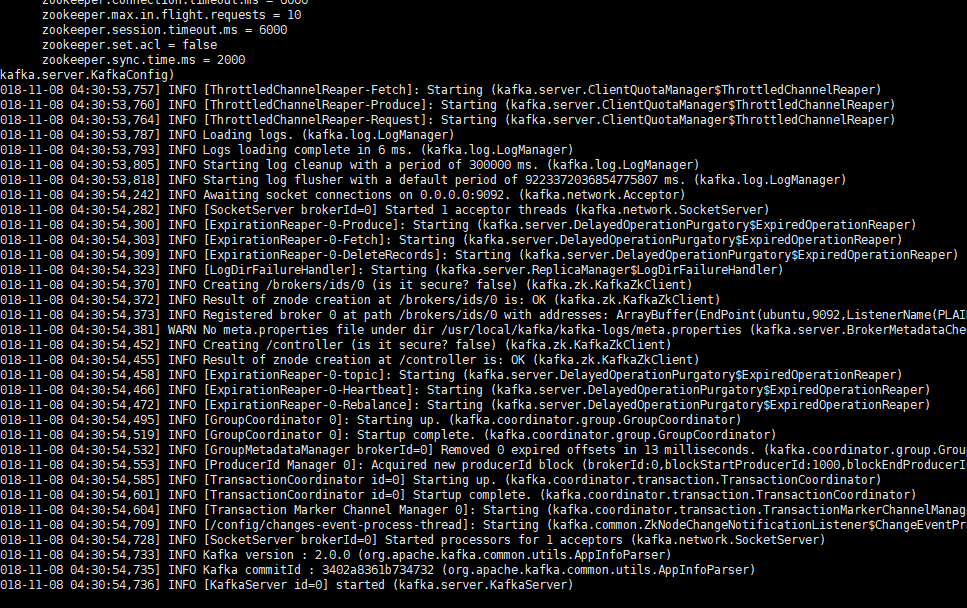

Run ./kafka-server-start.sh ../config/server.properties, which works much like starting ZooKeeper.

Kafka started successfully.

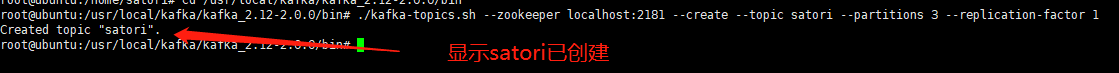

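Create a topic

As mentioned earlier, Kafka is based on the publish-subscribe model. A producer must specify which topic each message is sent to, and a consumer usually does not consume every message either; it has to specify which topic it subscribes to.

To create a topic named satori, run the following command. The parameters at the end are explained later.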

./kafka-topics.sh --zookeeper localhost:2181 --create --topic satori --partitions 3 --replication-factor 1

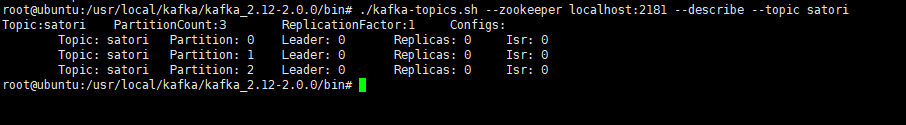

To inspect the satori topic, simply change --create to --describe.
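To make the relationship between the two commands explicit, here is a small dry-run sketch. The helper topics_cmd is hypothetical and only prints the command line instead of running it; the flags are the ones used in this post. On newer Kafka releases kafka-topics.sh takes --bootstrap-server localhost:9092 in place of --zookeeper, so treat the printed commands as matching the version used here.

```shell
# topics_cmd ACTION [TOPIC] [PARTITIONS] [REPLICATION_FACTOR]
# Hypothetical helper: prints the kafka-topics.sh command for an action
# (create, describe, list) without executing anything.
topics_cmd() {
    action=$1 topic=${2:-} partitions=${3:-1} replicas=${4:-1}
    cmd="./kafka-topics.sh --zookeeper localhost:2181 --$action"
    if [ -n "$topic" ]; then
        cmd="$cmd --topic $topic"
    fi
    # Only --create needs the partition count and the replication factor.
    if [ "$action" = "create" ]; then
        cmd="$cmd --partitions $partitions --replication-factor $replicas"
    fi
    printf '%s\n' "$cmd"
}

topics_cmd create satori 3 1
topics_cmd describe satori
```

The second call prints `./kafka-topics.sh --zookeeper localhost:2181 --describe --topic satori`, which is the create command with the action swapped and the sizing flags dropped.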

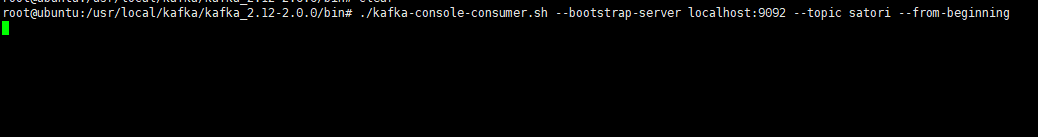

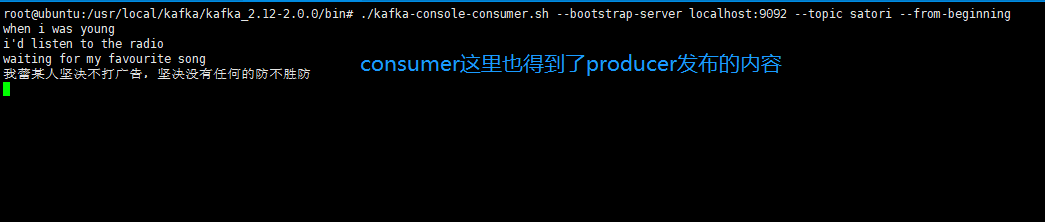

Start a consumer

./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic satori --from-beginning

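Incidentally, older Kafka versions also allowed ./kafka-console-consumer.sh --zookeeper localhost:2181 --topic satori. The --bootstrap-server form belongs to the new consumer introduced in 0.9.0; the --zookeeper option was later deprecated and was removed in Kafka 2.0.0.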

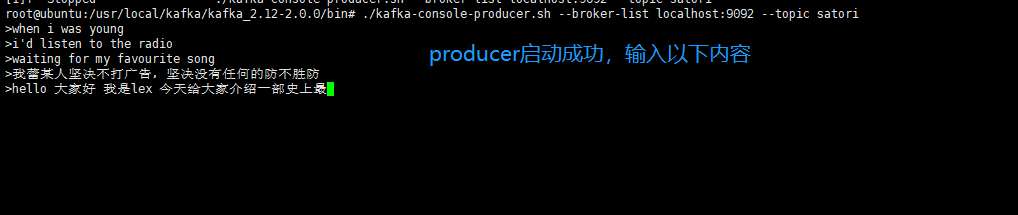

Start a producer

./kafka-console-producer.sh --broker-list localhost:9092 --topic satori

Now look at the consumer again.

-------------------------------------------------------------------

Everything above used the ZooKeeper bundled with Kafka. Next we show how to start Kafka against a standalone ZooKeeper.

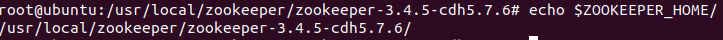

First install ZooKeeper from http://archive.cloudera.com/cdh5/cdh/5/

Then add it to the environment variables and reload the profile so that the change takes effect.

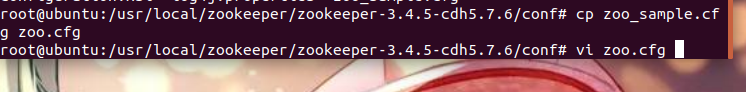

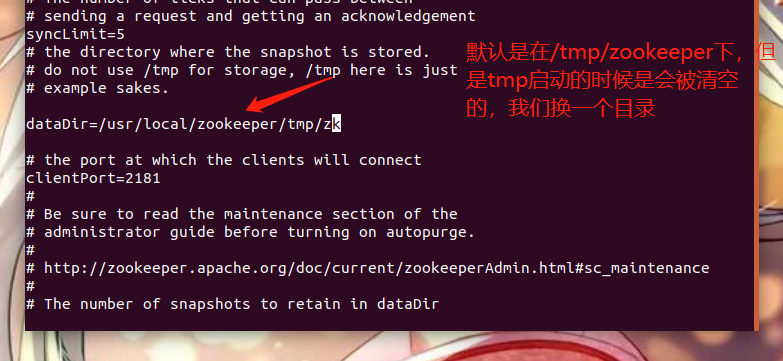

A configuration file also has to be changed; go into the conf directory.

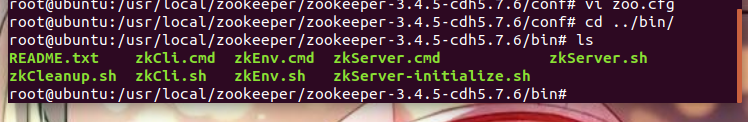

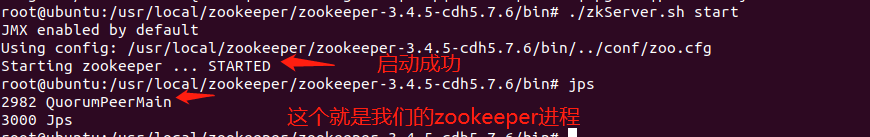

To start ZooKeeper, go into the bin directory.

zkServer.sh is the startup script.

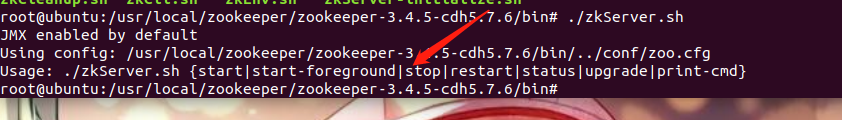

It reports that an argument is required, so we pass start.

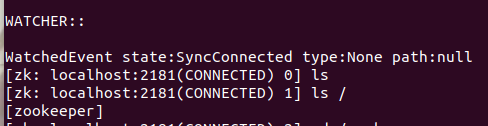

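We can also connect to it with zkCli.sh.

The connection succeeds; this part is fairly simple.

Now let's go back to Kafka's configuration files.

Let's look at server.properties.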

    # Licensed to the Apache Software Foundation (ASF) under one or more
    # contributor license agreements. See the NOTICE file distributed with
    # this work for additional information regarding copyright ownership.
    # The ASF licenses this file to You under the Apache License, Version 2.0
    # (the "License"); you may not use this file except in compliance with
    # the License. You may obtain a copy of the License at
    #
    # http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    # see kafka.server.KafkaConfig for additional details and defaults

    ############################# Server Basics #############################

    # The id of the broker. This must be set to a unique integer for each broker.
    broker.id=0

    ############################# Socket Server Settings #############################

    # The address the socket server listens on. It will get the value returned from
    # java.net.InetAddress.getCanonicalHostName() if not configured.
    # FORMAT:
    # listeners = listener_name://host_name:port
    # EXAMPLE:
    # listeners = PLAINTEXT://your.host.name:9092
    #listeners=PLAINTEXT://:9092

    # Hostname and port the broker will advertise to producers and consumers. If not set,
    # it uses the value for "listeners" if configured. Otherwise, it will use the value
    # returned from java.net.InetAddress.getCanonicalHostName().
    #advertised.listeners=PLAINTEXT://your.host.name:9092

    # Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details
    #listener.security.protocol.map=PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

    # The number of threads that the server uses for receiving requests from the network and sending responses to the network
    num.network.threads=3

    # The number of threads that the server uses for processing requests, which may include disk I/O
    num.io.threads=8

    # The send buffer (SO_SNDBUF) used by the socket server
    socket.send.buffer.bytes=102400

    # The receive buffer (SO_RCVBUF) used by the socket server
    socket.receive.buffer.bytes=102400

    # The maximum size of a request that the socket server will accept (protection against OOM)
    socket.request.max.bytes=104857600

    ############################# Log Basics #############################

    # A comma separated list of directories under which to store log files
    log.dirs=/tmp/kafka-logs

    # The default number of log partitions per topic. More partitions allow greater
    # parallelism for consumption, but this will also result in more files across
    # the brokers.
    num.partitions=1

    # The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.
    # This value is recommended to be increased for installations with data dirs located in RAID array.
    num.recovery.threads.per.data.dir=1

    ############################# Internal Topic Settings #############################

    # The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"
    # For anything other than development testing, a value greater than 1 is recommended for to ensure availability such as 3.
    offsets.topic.replication.factor=1
    transaction.state.log.replication.factor=1
    transaction.state.log.min.isr=1

    ############################# Log Flush Policy #############################

    # Messages are immediately written to the filesystem but by default we only fsync() to sync
    # the OS cache lazily. The following configurations control the flush of data to disk.
    # There are a few important trade-offs here:
    # 1. Durability: Unflushed data may be lost if you are not using replication.
    # 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.
    # 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.
    # The settings below allow one to configure the flush policy to flush data after a period of time or
    # every N messages (or both). This can be done globally and overridden on a per-topic basis.

    # The number of messages to accept before forcing a flush of data to disk
    #log.flush.interval.messages=10000

    # The maximum amount of time a message can sit in a log before we force a flush
    #log.flush.interval.ms=1000

    ############################# Log Retention Policy #############################

    # The following configurations control the disposal of log segments. The policy can
    # be set to delete segments after a period of time, or after a given size has accumulated.
    # A segment will be deleted whenever *either* of these criteria are met. Deletion always happens
    # from the end of the log.

    # The minimum age of a log file to be eligible for deletion due to age
    log.retention.hours=168

    # A size-based retention policy for logs. Segments are pruned from the log unless the remaining
    # segments drop below log.retention.bytes. Functions independently of log.retention.hours.
    #log.retention.bytes=1073741824

    # The maximum size of a log segment file. When this size is reached a new log segment will be created.
    log.segment.bytes=1073741824

    # The interval at which log segments are checked to see if they can be deleted according
    # to the retention policies
    log.retention.check.interval.ms=300000

    ############################# Zookeeper #############################

    # Zookeeper connection string (see zookeeper docs for details).
    # This is a comma separated host:port pairs, each corresponding to a zk
    # server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".
    # You can also append an optional chroot string to the urls to specify the
    # root directory for all kafka znodes.
    zookeeper.connect=localhost:2181

    # Timeout in ms for connecting to zookeeper
    zookeeper.connection.timeout.ms=6000

    ############################# Group Coordinator Settings #############################

    # The following configuration specifies the time, in milliseconds, that the GroupCoordinator will delay the initial consumer rebalance.
    # The rebalance will be further delayed by the value of group.initial.rebalance.delay.ms as new members join the group, up to a maximum of max.poll.interval.ms.
    # The default value for this is 3 seconds.
    # We override this to 0 here as it makes for a better out-of-the-box experience for development and testing.
    # However, in production environments the default value of 3 seconds is more suitable as this will help to avoid unnecessary, and potentially expensive, rebalances during application startup.
    group.initial.rebalance.delay.ms=0

Let's go through it step by step.

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=0

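As mentioned earlier, each Kafka server is a broker, and there can be several brokers. To tell them apart, every broker has an id, and the id must be unique.

There is no need to change this; we simply start from zero.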

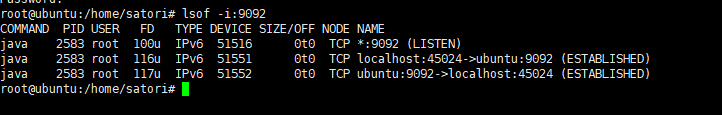

#listeners=PLAINTEXT://:9092

The port the broker listens on. The line is commented out, so the default port 9092 is used.

# A comma separated list of directories under which to store log files

log.dirs=/tmp/kafka-logs

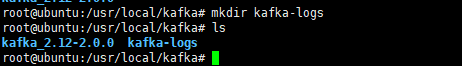

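log.dirs is the directory where Kafka stores its log files. The default path should not be used either, because the contents of /tmp can be wiped on reboot. We specify our own path and simply create a directory under the Kafka installation.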
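A minimal sketch of that edit follows. It works on a scratch copy of the relevant lines so that it is safe to run anywhere; the target directory /opt/kafka/kafka-logs is a hypothetical path, since the post only says "a directory under kafka". For the real file, point conf at $KAFKA_HOME/config/server.properties instead.

```shell
# Scratch file standing in for config/server.properties.
conf=$(mktemp)
printf 'broker.id=0\nlog.dirs=/tmp/kafka-logs\nnum.partitions=1\n' > "$conf"

# Hypothetical data directory outside /tmp.
new_dir="/opt/kafka/kafka-logs"

# Rewrite the whole log.dirs line. '|' is used as the sed delimiter because
# the value contains '/'. Writing to a second file avoids `sed -i`, whose
# syntax differs between GNU and BSD sed.
sed "s|^log\.dirs=.*|log.dirs=$new_dir|" "$conf" > "$conf.new" && mv "$conf.new" "$conf"

grep '^log\.dirs=' "$conf"
```

The final grep prints `log.dirs=/opt/kafka/kafka-logs`; the other lines of the file are left unchanged. The directory itself would still have to be created, for example with mkdir -p.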

# The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

num.partitions=1

The default number of partitions per topic. Partitions are covered later, so leave it at 1 for now.

zookeeper.connect=localhost:2181

The address of ZooKeeper.

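That is enough of the configuration file for now; let's start Kafka again. One thing was left out above: Kafka also has to be added to the environment variables.

Then run kafka-server-start.sh $KAFKA_HOME/config/server.properties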
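The start command above relies on KAFKA_HOME being set and on its bin directory being on PATH. A sketch of that setup is below; /opt/kafka is a hypothetical install location, so adjust it to wherever the archive was unpacked. The lines would typically go into a shell profile such as ~/.bash_profile, followed by sourcing that file.

```shell
# Hypothetical install location; an already exported KAFKA_HOME wins.
export KAFKA_HOME="${KAFKA_HOME:-/opt/kafka}"

# Append $KAFKA_HOME/bin to PATH only if it is not there yet, so that
# sourcing the profile repeatedly does not grow PATH.
case ":$PATH:" in
    *":$KAFKA_HOME/bin:"*) ;;
    *) export PATH="$PATH:$KAFKA_HOME/bin" ;;
esac
```

With this in place the scripts can be called without a ./ prefix from any directory, which is how the remaining commands in this post are written.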

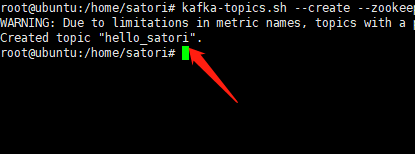

Next, create a topic.

kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic hello_satori

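We specify the ZooKeeper address. The replication factor is 1 because we only have one node, and the number of partitions is also 1.

The topic was created successfully.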

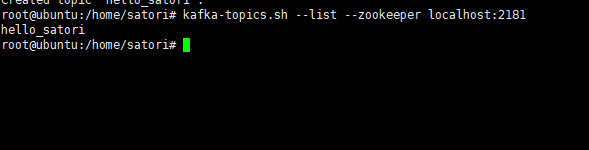

List the topics

kafka-topics.sh --list --zookeeper localhost:2181

Only one topic is shown: our hello_satori.

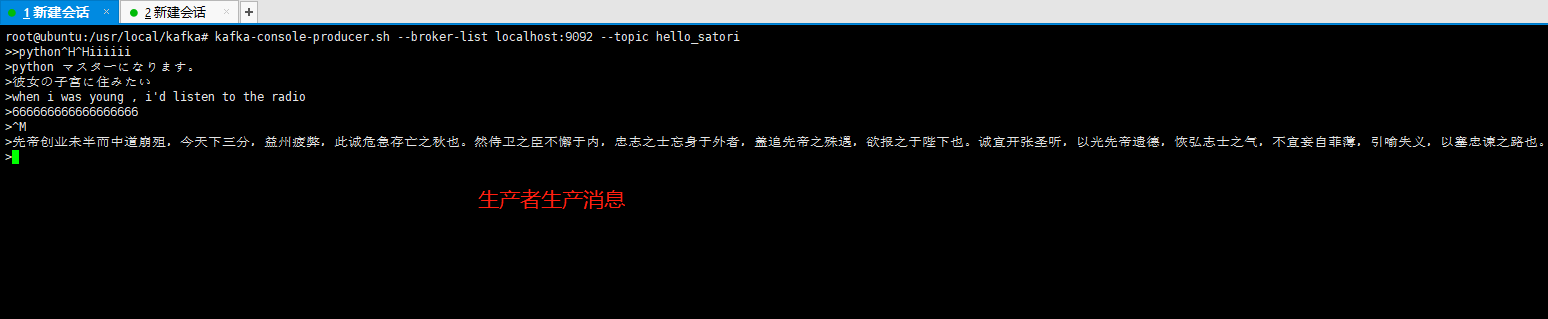

Send messages

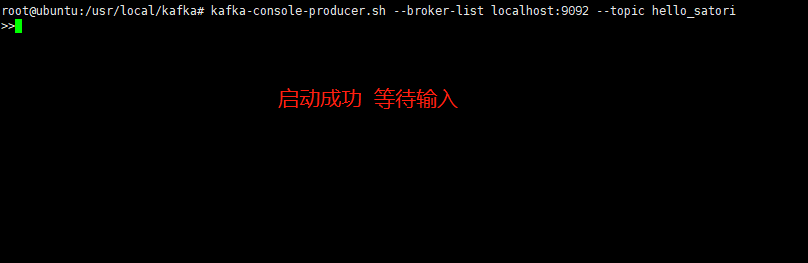

kafka-console-producer.sh --broker-list localhost:9092 --topic hello_satori

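Messages are sent into the "basket", that is, the broker, so --broker-list has to be specified. --topic says which topic the messages go to, and 9092 is the listener port from the configuration file.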

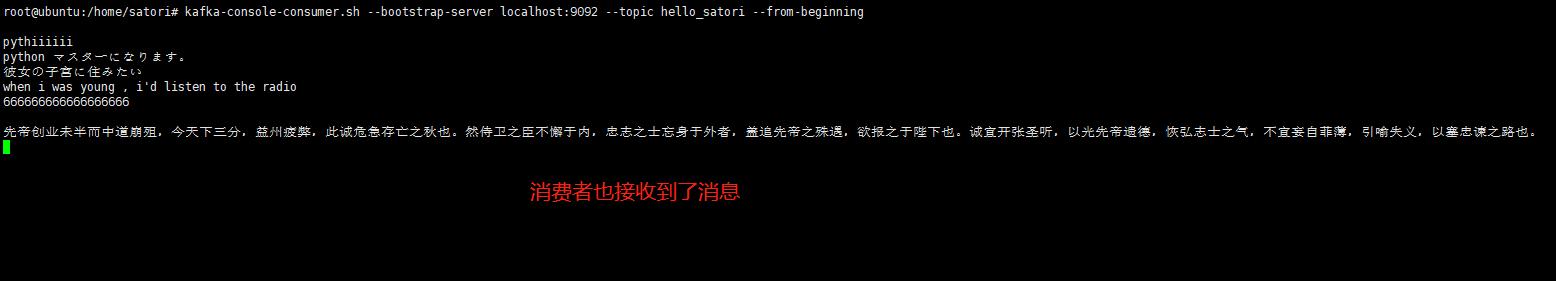

Receive messages

kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic hello_satori --from-beginning

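Note the --from-beginning option here: when it is given, messages that were produced before the consumer started are consumed as well.

The consumer started successfully and is now waiting for the producer to produce data.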
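The producer and consumer steps can also be combined into a quick round-trip check. The sketch below is an assumption-laden illustration rather than part of the original walkthrough: the function name smoke_test is hypothetical, it needs a broker running on localhost:9092, and it expects the Kafka scripts to be on PATH. Newer Kafka releases also accept --bootstrap-server for the console producer.

```shell
# smoke_test TOPIC: send one line through the console producer, then read one
# message back with the console consumer.
smoke_test() {
    topic=$1
    echo "hello from smoke_test" |
        kafka-console-producer.sh --broker-list localhost:9092 --topic "$topic"
    # --max-messages 1 makes the consumer exit after one message, and
    # --timeout-ms makes it give up after 10 s without data. Because of
    # --from-beginning it prints the oldest message in the topic, which is
    # the line just sent only if the topic was empty before.
    kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic "$topic" \
        --from-beginning --max-messages 1 --timeout-ms 10000
}

# Only run when the Kafka scripts are actually available.
if command -v kafka-console-producer.sh >/dev/null 2>&1; then
    smoke_test hello_satori
else
    echo "Kafka scripts not on PATH; skipping"
fi
```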
