6 Easy Steps to Learn Naive Bayes Algorithm (with code in Python)

6 Easy Steps to Learn Naive Bayes Algorithm (with code in Python)

Introduction

Here’s a situation you’ve got into:

You are working on a classification problem and you have generated your set of hypothesis, created features and discussed the importance of variables. Within an hour, stakeholders want to see the first cut of the model.

What will you do? You have hunderds of thousands of data points and quite a few variables in your training data set. In such situation, if I were at your place, I would have used ‘Naive Bayes‘, which can be extremely fast relative to other classification algorithms. It works on Bayes theorem of probability to predict the class of unknown data set.

In this article, I’ll explain the basics of this algorithm, so that next time when you come across large data sets, you can bring this algorithm to action. In addition, if you are a newbie in Python, you should be overwhelmed by the presence of available codes in this article.

Table of Contents

- What is Naive Bayes algorithm?

- How Naive Bayes Algorithms works?

- What are the Pros and Cons of using Naive Bayes?

- 4 Applications of Naive Bayes Algorithm

- Steps to build a basic Naive Bayes Model in Python

- Tips to improve the power of Naive Bayes Model

What is Naive Bayes algorithm?

It is a classification technique based on Bayes’ Theorem with an assumption of independence among predictors. In simple terms, a Naive Bayes classifier assumes that the presence of a particular feature in a class is unrelated to the presence of any other feature. For example, a fruit may be considered to be an apple if it is red, round, and about 3 inches in diameter. Even if these features depend on each other or upon the existence of the other features, all of these properties independently contribute to the probability that this fruit is an apple and that is why it is known as ‘Naive’.

Naive Bayes model is easy to build and particularly useful for very large data sets. Along with simplicity, Naive Bayes is known to outperform even highly sophisticated classification methods.

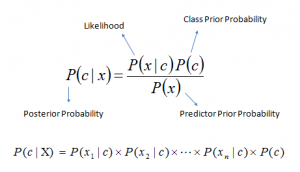

Bayes theorem provides a way of calculating posterior probability P(c|x) from P(c), P(x) and P(x|c). Look at the equation below:

Above,

Above,

- P(c|x) is the posterior probability of class (c, target) given predictor (x, attributes).

- P(c) is the prior probability of class.

- P(x|c) is the likelihood which is the probability of predictor given class.

- P(x) is the prior probability of predictor.

How Naive Bayes algorithm works?

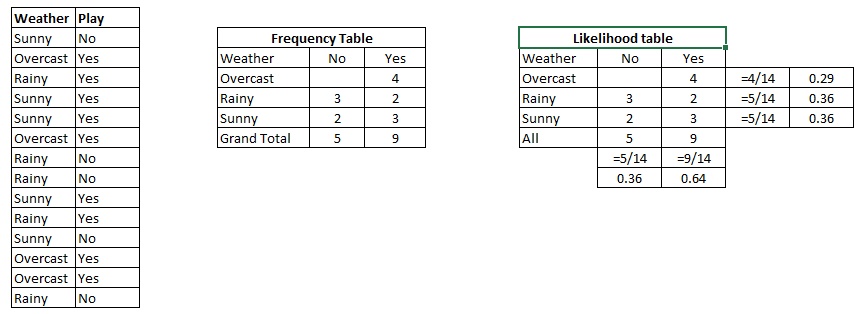

Let’s understand it using an example. Below I have a training data set of weather and corresponding target variable ‘Play’ (suggesting possibilities of playing). Now, we need to classify whether players will play or not based on weather condition. Let’s follow the below steps to perform it.

Step 1: Convert the data set into a frequency table

Step 2: Create Likelihood table by finding the probabilities like Overcast probability = 0.29 and probability of playing is 0.64.

Step 3: Now, use Naive Bayesian equation to calculate the posterior probability for each class. The class with the highest posterior probability is the outcome of prediction.

Problem: Players will play if weather is sunny. Is this statement is correct?

We can solve it using above discussed method of posterior probability.

P(Yes | Sunny) = P( Sunny | Yes) * P(Yes) / P (Sunny)

Here we have P (Sunny |Yes) = 3/9 = 0.33, P(Sunny) = 5/14 = 0.36, P( Yes)= 9/14 = 0.64

Now, P (Yes | Sunny) = 0.33 * 0.64 / 0.36 = 0.60, which has higher probability.

Naive Bayes uses a similar method to predict the probability of different class based on various attributes. This algorithm is mostly used in text classification and with problems having multiple classes.

What are the Pros and Cons of Naive Bayes?

Pros:

- It is easy and fast to predict class of test data set. It also perform well in multi class prediction

- When assumption of independence holds, a Naive Bayes classifier performs better compare to other models like logistic regression and you need less training data.

- It perform well in case of categorical input variables compared to numerical variable(s). For numerical variable, normal distribution is assumed (bell curve, which is a strong assumption).

Cons:

- If categorical variable has a category (in test data set), which was not observed in training data set, then model will assign a 0 (zero) probability and will be unable to make a prediction. This is often known as “Zero Frequency”. To solve this, we can use the smoothing technique. One of the simplest smoothing techniques is called Laplace estimation.

- On the other side naive Bayes is also known as a bad estimator, so the probability outputs frompredict_proba are not to be taken too seriously.

- Another limitation of Naive Bayes is the assumption of independent predictors. In real life, it is almost impossible that we get a set of predictors which are completely independent.

4 Applications of Naive Bayes Algorithms

- Real time Prediction: Naive Bayes is an eager learning classifier and it is sure fast. Thus, it could be used for making predictions in real time.

- Multi class Prediction: This algorithm is also well known for multi class prediction feature. Here we can predict the probability of multiple classes of target variable.

- Text classification/ Spam Filtering/ Sentiment Analysis: Naive Bayes classifiers mostly used in text classification (due to better result in multi class problems and independence rule) have higher success rate as compared to other algorithms. As a result, it is widely used in Spam filtering (identify spam e-mail) and Sentiment Analysis (in social media analysis, to identify positive and negative customer sentiments)

- Recommendation System: Naive Bayes Classifier and Collaborative Filtering together builds a Recommendation System that uses machine learning and data mining techniques to filter unseen information and predict whether a user would like a given resource or not

How to build a basic model using Naive Bayes in Python?

Again, scikit learn (python library) will help here to build a Naive Bayes model in Python. There are three types of Naive Bayes model under scikit learn library:

Gaussian: It is used in classification and it assumes that features follow a normal distribution.

Multinomial: It is used for discrete counts. For example, let’s say, we have a text classification problem. Here we can consider bernoulli trials which is one step further and instead of “word occurring in the document”, we have “count how often word occurs in the document”, you can think of it as “number of times outcome number x_i is observed over the n trials”.

Bernoulli: The binomial model is useful if your feature vectors are binary (i.e. zeros and ones). One application would be text classification with ‘bag of words’ model where the 1s & 0s are “word occurs in the document” and “word does not occur in the document” respectively.

Based on your data set, you can choose any of above discussed model. Below is the example of Gaussian model.

Python Code

#Import Library of Gaussian Naive Bayes model

from sklearn.naive_bayes import GaussianNB

import numpy as np #assigning predictor and target variables

x= np.array([[-3,7],[1,5], [1,2], [-2,0], [2,3], [-4,0], [-1,1], [1,1], [-2,2], [2,7], [-4,1], [-2,7]])

Y = np.array([3, 3, 3, 3, 4, 3, 3, 4, 3, 4, 4, 4])

#Create a Gaussian Classifier

model = GaussianNB() # Train the model using the training sets

model.fit(x, y) #Predict Output

predicted= model.predict([[1,2],[3,4]])

print predicted Output: ([3,4])

Above, we looked at the basic Naive Bayes model, you can improve the power of this basic model by tuning parameters and handle assumption intelligently. Let’s look at the methods to improve the performance of Naive Bayes Model. I’d recommend you to go through this document for more details on Text classification using Naive Bayes.

Tips to improve the power of Naive Bayes Model

Here are some tips for improving power of Naive Bayes Model:

- If continuous features do not have normal distribution, we should use transformation or different methods to convert it in normal distribution.

- If test data set has zero frequency issue, apply smoothing techniques “Laplace Correction” to predict the class of test data set.

- Remove correlated features, as the highly correlated features are voted twice in the model and it can lead to over inflating importance.

- Naive Bayes classifiers has limited options for parameter tuning like alpha=1 for smoothing, fit_prior=[True|False] to learn class prior probabilities or not and some other options (look at detail here). I would recommend to focus on your pre-processing of data and the feature selection.

- You might think to apply some classifier combination technique like ensembling, bagging and boosting but these methods would not help. Actually, “ensembling, boosting, bagging” won’t help since their purpose is to reduce variance. Naive Bayes has no variance to minimize.

End Notes

In this article, we looked at one of the supervised machine learning algorithm “Naive Bayes” mainly used for classification. Congrats, if you’ve thoroughly & understood this article, you’ve already taken you first step to master this algorithm. From here, all you need is practice.

Further, I would suggest you to focus more on data pre-processing and feature selection prior to applying Naive Bayes algorithm.0 In future post, I will discuss about text and document classification using naive bayes in more detail.

Did you find this article helpful? Please share your opinions / thoughts in the comments section below.

6 Easy Steps to Learn Naive Bayes Algorithm (with code in Python)的更多相关文章

- Naive Bayes Algorithm And Laplace Smoothing

朴素贝叶斯算法(Naive Bayes)适用于在Training Set中,输入X和输出Y都是离散型的情况.如果输入X为连续,输出Y为离散,我们考虑使用逻辑回归(Logistic Regression ...

- 机器学习---用python实现朴素贝叶斯算法(Machine Learning Naive Bayes Algorithm Application)

在<机器学习---朴素贝叶斯分类器(Machine Learning Naive Bayes Classifier)>一文中,我们介绍了朴素贝叶斯分类器的原理.现在,让我们来实践一下. 在 ...

- 朴素贝叶斯法(naive Bayes algorithm)

对于给定的训练数据集,朴素贝叶斯法首先基于iid假设学习输入/输出的联合分布:然后基于此模型,对给定的输入x,利用贝叶斯定理求出后验概率最大的输出y. 一.目标 设输入空间是n维向量的集合,输出空间为 ...

- Naive Bayes Algorithm

朴素贝叶斯的核心基础理论就是贝叶斯理论和条件独立性假设,在文本数据分析中应用比较成功.朴素贝叶斯分类器实现起来非常简单,虽然其性能经常会被支持向量机等技术超越,但有时也能发挥出惊人的效果.所以,在将朴 ...

- [ML] Naive Bayes for Text Classification

TF-IDF Algorithm From http://www.ruanyifeng.com/blog/2013/03/tf-idf.html Chapter 1, 知道了"词频" ...

- [Machine Learning & Algorithm] 朴素贝叶斯算法(Naive Bayes)

生活中很多场合需要用到分类,比如新闻分类.病人分类等等. 本文介绍朴素贝叶斯分类器(Naive Bayes classifier),它是一种简单有效的常用分类算法. 一.病人分类的例子 让我从一个例子 ...

- Naive Bayes Theorem and Application - Theorem

Naive Bayes Theorm And Application - Theorem Naive Bayes model: 1. Naive Bayes model 2. model: discr ...

- ML | Naive Bayes

what's xxx In machine learning, naive Bayes classifiers are a family of simple probabilistic classif ...

- 数据挖掘十大经典算法(9) 朴素贝叶斯分类器 Naive Bayes

贝叶斯分类器 贝叶斯分类器的分类原理是通过某对象的先验概率,利用贝叶斯公式计算出其后验概率,即该对象属于某一类的概率,选择具有最大后验概率的类作为该对象所属的类.眼下研究较多的贝叶斯分类器主要有四种, ...

随机推荐

- vue-cli 安装时 npm 报错 errno -4048

如何解决vue-cli 安装时 npm 报错 errno -4048 第一种解决方法:以管理身份运行cmd.exe 第二种解决办法:在dos窗口输入命令 npm cache clean --fo ...

- windows多线程(十) 生产者与消费者问题

一.概述 生产者消费者问题是一个著名的线程同步问题,该问题描述如下:有一个生产者在生产产品,这些产品将提供给若干个消费者去消费,为了使生产者和消费者能并发执行,在两者之间设置一个具有多个缓冲区的缓冲池 ...

- v-html的应用

var app=new Vue({ el: '#app', data:{ link:'<a href="#">这是一个连接</a>' },}) <di ...

- [OS] Linux进程、线程通信方式总结

转自:http://blog.sina.com.cn/s/blog_64b9c6850100ub80.html Linux系统中的进程通信方式主要以下几种: 同一主机上的进程通信方式 * UNIX进程 ...

- 隐藏基于Dialog的MFC的主窗体

最近需要做一个主窗体常态隐藏的程序,类似360卫士那样,只有托盘图标常显示.本以为隐藏主窗体很简单,但遇到了意想不到的情况. 无效的做法 最初的想法是设置主对话框资源的 Visiable 属性为 fa ...

- UVA11248_Frequency Hopping

给一个有向网络,求其1,n两点的最大流量是否不小于C,如果小于,是否可以通过修改一条边的容量使得最大流量不小于C? 首先对于给定的网络,我们可以先跑一遍最大流,然后先看流量是否大于C. 然后保存跑完第 ...

- 栈java实现

这几天,过得挺充实的,每天都在不停的上课,早上很早就起来去跑步,晚上到图书馆看书.一边紧张的学习,一边在默默的备战软考.最近还接手了一个公司官网的建设.这是我在川信最后的一个完整学期了,每件事我都要认 ...

- 1.红黑树和自平衡二叉(查找)树区别 2.红黑树与B树的区别

1.红黑树和自平衡二叉(查找)树区别 1.红黑树放弃了追求完全平衡,追求大致平衡,在与平衡二叉树的时间复杂度相差不大的情况下,保证每次插入最多只需要三次旋转就能达到平衡,实现起来也更为简单. 2.平衡 ...

- ajax发送post请求遇到的坑

前端小白的我. 用django-rest-framework写好了一个接口.如下,就接收两个字符串参数. 前端写了一个简单的提交post请求到这个接口,如下 浏览器提交请求后,一直提示 400 Bad ...

- LINQ 学习之筛选条件

从list 里遍历出 id="DM" 且 code="0000" 的数据 var tes1= from u in list.where u.ID == & ...