Paper Reading - Convolutional Sequence to Sequence Learning ( CoRR 2017 ) ★

Link of the Paper: https://arxiv.org/abs/1705.03122

Motivation:

- Compared to recurrent layers, convolutions create representations for fixed size contexts, however, the effective context size of the network can easily be made larger by stacking several layers on top of each other. This allows to precisely control the maximum length of dependencies to be modeled. Convolutional networks do not depend on the computations of the previous time step and therefore allow parallelization over every element in a sequence. This contrasts with RNNs which maintain a hidden state of the entire past that prevents parallel computation within a sequence.

- Multi-layer convolutional neural networks create hierarchical representations over the input sequence in which nearby input elements interact at lower layers while distant elements interact at higher layers. Hierarchical structure provides a shorter path to capture long-range dependencies compared to the chain structure modeled by recurrent networks. Inputs to a convolutional network are fed through a constant number of kernels and non-linearities, whereas recurrent networks apply up to n operations and non-linearities to the first word and only a single set of operations to the last word. Fixing the number of nonlinearities applied to the inputs also eases learning.

Innotation:

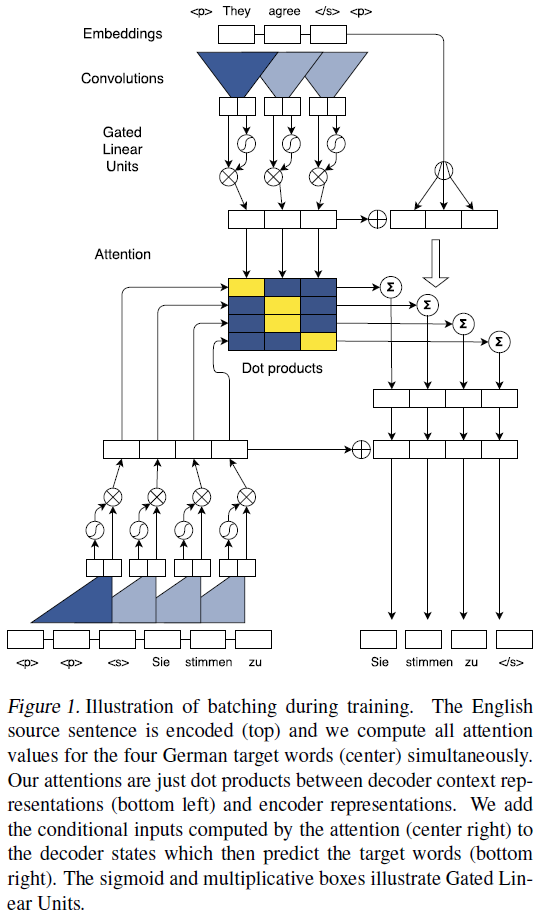

- An architecture for Seq2Seq modeling based entirely on convolutional neural networks. Both encoder and decoder networks share a simple block structure that computes intermediate states based on a fixed number of input elements. Each block contains a one dimensional convolution followed by a non-linearity. For a decoder network with a single block and kernel width k, each resulting state hi1 contains information over k input elements. Stacking several blocks on top of each other increases the number of input elements represented in a state. ( Stacking is similar to the pooling process. )

- Position Embeddings: Input elements x = (x1, . . . , xm) embedded in distributional space as w = (w1, . . . , wm), where wj ∈ Rf is a column in an embedding matrix D ∈ RV×f. The authors also equip the model with a sense of order by embedding the absolute position of input elements p = (p1, . . . , pm) where pj ∈ Rf. Both are combined to obtain input element representations e = (w1+p1, . . . , wm+pm). Position embeddings are useful in the architecture since they give the model a sense of which portion of the sequence in the input or output it is currently dealing with.

- The authors introduce a separate attention mechanism for each decoder layer.

Improvement:

- The model is equipped with gated linear units ( Language modeling with gated linear units - Dauphin et al., arXiv 2016 ) and residual connections ( Deep Residual Learning for Image Recognition - He et al., CVPR 2015a ).

- The authors choose gated linear units as non-linearity which implement a simple gating mechanism over the output of the convolution Y = [A B] ∈ R2d: v([A B]) = A ⓧ σ(B), where A, B ∈ Rd are the inputs to the non-linearity, ⓧ is the point-wise multiplication and the output v([A B]) ∈ Rd is half the size of Y. The gates σ(B) control which inputs A of the current context are relevant. And GLUs perform better than tanh in the context of language modelling.

- To enable deep convolutional networks, authors add residual connections from the input of each convolution to the output of the block. hil = v( Wl [hi−k/2l−1, . . . , hi+k/2l−1] + bwl ) + hil−1

- For encoder networks authors ensure that the output of the convolutional layers matches the input length by padding the input at each layer. However, for decoder networks they have to take care that no future information is available to the decoder. Specifically, we pad the input by k − 1 elements on both the left and right side by zero vectors, and then remove k elements from the end of the convolution output.

General Points:

- Sequence to sequence modeling has been synonymous with recurrent neural network based encoder-decoder architectures. The encoder RNN processes an input sequence x = (x1, . . . , xm) of m elements and returns state representations z = (z1, . . . , zm). The decoder RNN takes z and generates the output sequence y = (y1, . . . , yn) left to right, one element at a time. To generate output yi+1, the decoder computes a new hidden state hi+1 based on the previous state hi, an embedding gi of the previous target language word yi, as well as a conditional input ci derived from the encoder output z. Models without attention consider only the final encoder state zm by setting ci = zm for all i, or simply initialize the first decoder state with zm, in which case ci is not used. Architectures with attention compute ci as a weighted sum of (z1, . . . , zm) at each time step. The weights of the sum are referred to as attention scores and allow the network to focus on different parts of the input sequence as it generates the output sequences. Attention scores are computed by essentially comparing each encoder state zj to a combination of the previous decoder state hi and the last prediction yi; the result is normalized to be a distribution over input elements.

Paper Reading - Convolutional Sequence to Sequence Learning ( CoRR 2017 ) ★的更多相关文章

- Paper Reading - Convolutional Image Captioning ( CVPR 2018 )

Link of the Paper: https://arxiv.org/abs/1711.09151 Motivation: LSTM units are complex and inherentl ...

- [paper reading] C-MIL: Continuation Multiple Instance Learning for Weakly Supervised Object Detection CVPR2019

MIL陷入局部最优,检测到局部,无法完整的检测到物体.将instance划分为空间相关和类别相关的子集.在这些子集中定义一系列平滑的损失近似代替原损失函数,优化这些平滑损失. C-MIL learns ...

- Paper Reading - Attention Is All You Need ( NIPS 2017 ) ★

Link of the Paper: https://arxiv.org/abs/1706.03762 Motivation: The inherently sequential nature of ...

- Convolutional Sequence to Sequence Learning 论文笔记

目录 简介 模型结构 Position Embeddings GLU or GRU Convolutional Block Structure Multi-step Attention Normali ...

- PP: Sequence to sequence learning with neural networks

From google institution; 1. Before this, DNN cannot be used to map sequences to sequences. In this p ...

- 【论文阅读】Sequence to Sequence Learning with Neural Network

Sequence to Sequence Learning with NN <基于神经网络的序列到序列学习>原文google scholar下载. @author: Ilya Sutske ...

- Mol Cell Proteomics. | Prediction of LC-MS/MS properties of peptides from sequence by deep learning (通过深度学习技术根据肽段序列预测其LC-MS/MS谱特征) (解读人:梅占龙)

通过深度学习技术根据肽段序列预测其LC-MS/MS谱特征 解读人:梅占龙 质谱平台 文献名:Prediction of LC-MS/MS properties of peptides from se ...

- Paper Read: Convolutional Image Captioning

Convolutional Image Captioning 2018-11-04 20:42:07 Paper: http://openaccess.thecvf.com/content_cvpr_ ...

- A neural chatbot using sequence to sequence model with attentional decoder. This is a fully functional chatbot.

原项目链接:https://github.com/chiphuyen/stanford-tensorflow-tutorials/tree/master/assignments/chatbot 一个使 ...

随机推荐

- Observer(观察者)模式

1.概述 一些面向对象的编程方式,提供了一种构建对象间复杂网络互连的能力.当对象们连接在一起时,它们就可以相互提供服务和信息. 通常来说,当某个对象的状态发生改变时,你仍然需要对象之间能互相通信.但是 ...

- Backward compatibility

向后兼容

- selenium java maven 自动化测试(二) 页面元素获取与操作

在第一节中,我们已经成功打开了页面,但是自动化测试必然包含了表单的填写与按钮的点击. 所以在第二章中我以博客园为例,完成按钮点击,表单填写 还是以代码为准,先上代码: package com.ryan ...

- 温故vue对vue计算属性computed的分析

vue 复习笔记(1)一段时间没有看过vue的官方文档了,温故而知新,所以我决定将vue的文档在看一遍 1计算属性computed在vue的computed中声明的是计算属性,可以使用箭头函数来进行定 ...

- Linux下onvif客户端获取ipc摄像头 GetProfiles:获取h265媒体信息文件

GetProfiles:获取媒体信息文件 鉴权:但是在使用这个接口之前是需要鉴权的.ONVIF协议规定,部分接口需要鉴权,部分接口不需要鉴权,在调用需要鉴权的接口时不使用鉴权,会导致接口调用失败.实现 ...

- (1)linux和oracle---环境搭建

对linux和oracle一直是敬而远之,稍微有些了解.无奈由于工作需要这次要硬着头皮上了!@#!@@#$%^^ 对于重windows用户的我来说,简直是万种折磨. 算是做个记录吧,一定要坚持下去. ...

- day 32 管道,信号量,进程池,线程的创建

1.管道(了解) Pipe(): 在进程之间建立一条通道,并返回元组(conn1,conn2),其中conn1,conn2表示管道两端的连接对象,强调一点:必须在产生Process对象之前产生管道. ...

- python学习笔记(二)python基础知识(list,tuple,dict,set)

1. list\tuple\dict\set d={} l=[] t=() s=set() print(type(l)) print(type(d)) print(type(t)) print(typ ...

- Flume的介绍和简单操作

Flume是什么 Flume是Cloudera提供的一个高可用的,高可靠的,分布式的海量日志采集.聚合和传输的系统,Flume支持在日志系统中定制各类数据发送方,用于收集数据:同时,Flume提供对数 ...

- Ruby中区分运行来源的方法(转)

Ruby中区分运行来源的方法 这篇文章主要介绍了Ruby中区分运行来源的方法,本文讲解的是类似Python中的if name == 'main':效果,其实Ruby中也有类似语法,需要的朋友可以参考下 ...