Getting Started with the Hadoop MapReduce Programming API (Part 12): Running Multiple Jobs as Iterative MapReduce

推荐

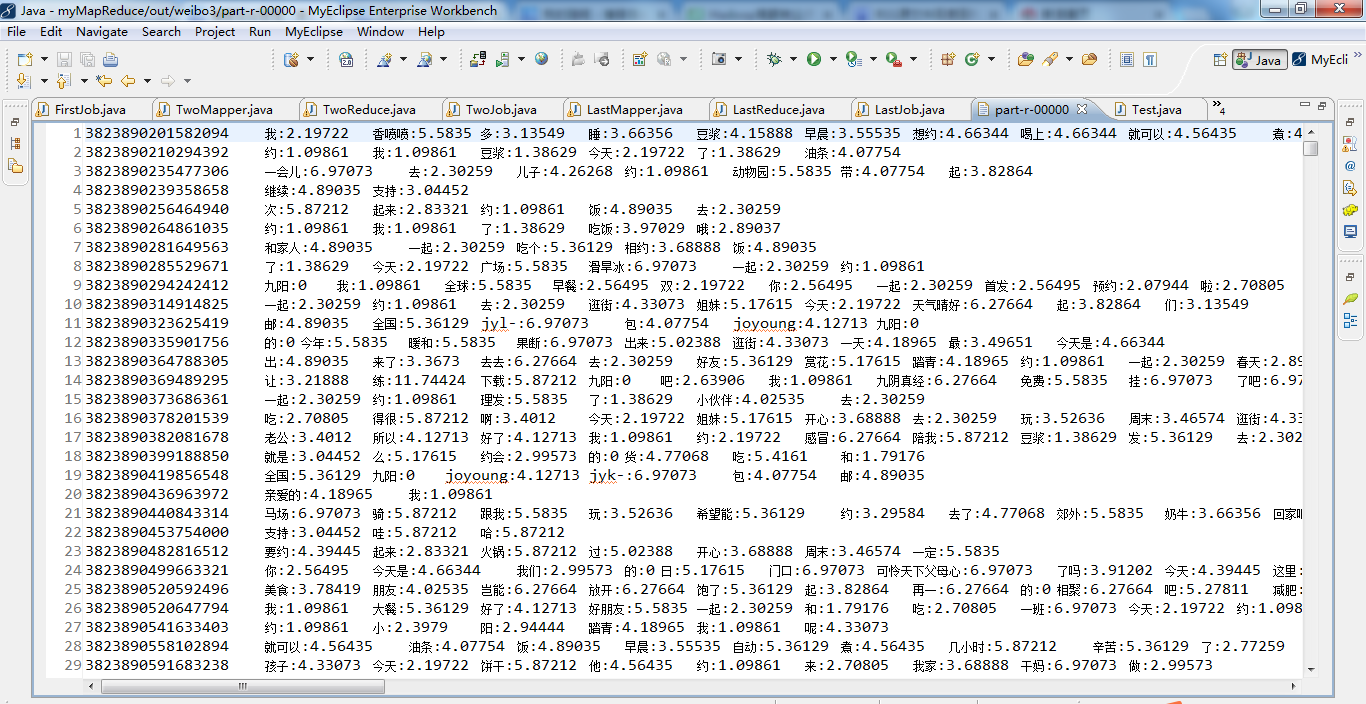

MapReduce分析明星微博数据

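This post gives you a taste of a different style of MapReduce programming: several jobs run one after another, each feeding the next.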
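Running jobs iteratively means the driver submits one job, waits for it to finish, and then submits the next job with the previous job's output directory as its input path. In Hadoop this is usually `job1.waitForCompletion(true)` followed by `FileInputFormat.addInputPath(job2, ...)`, which points job 2 at job 1's output. The sketch below does not use Hadoop. It is a plain-Java, in-memory simulation of that data flow. Both stages are hypothetical stand-ins and are not the jobs used in this post: stage 1 is a word count, and stage 2 is a histogram of how many words share each count.

```java
import java.util.*;

/** In-memory simulation of chaining two MapReduce-style stages (no Hadoop involved). */
class ChainedJobsSketch {

    /** Stage 1 (hypothetical): word count over input lines, like a first job writing its output. */
    static Map<String, Integer> wordCount(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            for (String word : line.trim().split("\\s+")) {
                if (!word.isEmpty()) {
                    counts.merge(word, 1, Integer::sum); // "map" emits (word, 1), "reduce" sums
                }
            }
        }
        return counts;
    }

    /** Stage 2 (hypothetical): reads only stage 1's output and counts words per frequency. */
    static Map<Integer, Integer> frequencyHistogram(Map<String, Integer> stage1Output) {
        Map<Integer, Integer> histogram = new TreeMap<>();
        for (int count : stage1Output.values()) {
            histogram.merge(count, 1, Integer::sum);
        }
        return histogram;
    }

    /** Driver: stage 2 starts only after stage 1 has finished and consumes exactly its output. */
    static Map<Integer, Integer> runPipeline(List<String> input) {
        Map<String, Integer> intermediate = wordCount(input); // like job1.waitForCompletion(true)
        return frequencyHistogram(intermediate);              // like job2 reading job1's output path
    }

    public static void main(String[] args) {
        System.out.println(runPipeline(Arrays.asList("a b a", "b c", "a")));
    }
}
```

Running `main` prints `{1=1, 2=1, 3=1}`: `a` occurs three times, `b` twice and `c` once. Stage 2 never sees the raw input, only stage 1's result. The second run in the logs below works the same way with `out/weibo1`.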

Running the first job, which reads `data/Weibodata.txt` and writes to `out/weibo1`:

2016-12-12 15:07:51,762 INFO [org.apache.hadoop.metrics.jvm.JvmMetrics] - Initializing JVM Metrics with processName=JobTracker, sessionId=

2016-12-12 15:07:52,197 WARN [org.apache.hadoop.mapreduce.JobSubmitter] - Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

2016-12-12 15:07:52,199 WARN [org.apache.hadoop.mapreduce.JobSubmitter] - No job jar file set. User classes may not be found. See Job or Job#setJar(String).

2016-12-12 15:07:52,216 INFO [org.apache.hadoop.mapreduce.lib.input.FileInputFormat] - Total input paths to process : 1

2016-12-12 15:07:52,265 INFO [org.apache.hadoop.mapreduce.JobSubmitter] - number of splits:1

2016-12-12 15:07:52,541 INFO [org.apache.hadoop.mapreduce.JobSubmitter] - Submitting tokens for job: job_local1414008937_0001

2016-12-12 15:07:53,106 INFO [org.apache.hadoop.mapreduce.Job] - The url to track the job: http://localhost:8080/

2016-12-12 15:07:53,107 INFO [org.apache.hadoop.mapreduce.Job] - Running job: job_local1414008937_0001

2016-12-12 15:07:53,114 INFO [org.apache.hadoop.mapred.LocalJobRunner] - OutputCommitter set in config null

2016-12-12 15:07:53,128 INFO [org.apache.hadoop.mapred.LocalJobRunner] - OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2016-12-12 15:07:53,203 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Waiting for map tasks

2016-12-12 15:07:53,216 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local1414008937_0001_m_000000_0

2016-12-12 15:07:53,271 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:07:53,374 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@65f3724c

2016-12-12 15:07:53,382 INFO [org.apache.hadoop.mapred.MapTask] - Processing split: file:/D:/Code/MyEclipseJavaCode/myMapReduce/data/Weibodata.txt:0+174116

2016-12-12 15:07:53,443 INFO [org.apache.hadoop.mapred.MapTask] - (EQUATOR) 0 kvi 26214396(104857584)

2016-12-12 15:07:53,443 INFO [org.apache.hadoop.mapred.MapTask] - mapreduce.task.io.sort.mb: 100

2016-12-12 15:07:53,443 INFO [org.apache.hadoop.mapred.MapTask] - soft limit at 83886080

2016-12-12 15:07:53,444 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufvoid = 104857600

2016-12-12 15:07:53,444 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396; length = 6553600

2016-12-12 15:07:53,450 INFO [org.apache.hadoop.mapred.MapTask] - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2016-12-12 15:07:54,110 INFO [org.apache.hadoop.mapreduce.Job] - Job job_local1414008937_0001 running in uber mode : false

2016-12-12 15:07:54,112 INFO [org.apache.hadoop.mapreduce.Job] - map 0% reduce 0%

2016-12-12 15:07:55,068 INFO [org.apache.hadoop.mapred.LocalJobRunner] -

2016-12-12 15:07:55,068 INFO [org.apache.hadoop.mapred.MapTask] - Starting flush of map output

2016-12-12 15:07:55,068 INFO [org.apache.hadoop.mapred.MapTask] - Spilling map output

2016-12-12 15:07:55,068 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufend = 747379; bufvoid = 104857600

2016-12-12 15:07:55,068 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396(104857584); kvend = 26101152(104404608); length = 113245/6553600
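The figures in these `MapTask` lines all follow from `mapreduce.task.io.sort.mb: 100`. The sketch below recomputes them, assuming my reading of Hadoop 2.x's `MapOutputBuffer` is correct. Under that reading, the buffer is `sort.mb << 20` bytes. The soft limit is that size multiplied by `mapreduce.map.sort.spill.percent` (default 0.80). Every record also costs four 4-byte metadata ints. With those assumptions, the spill's `kvstart`/`kvend` pair gives 28312 records, which matches `Map output records=28312` in the counters further down. Separately, `bufend = 747379` matches `Map output bytes=747379`.

```java
/**
 * Recomputes the MapTask sort-buffer figures from mapreduce.task.io.sort.mb.
 * Assumed layout (Hadoop 2.x MapOutputBuffer): 4 metadata ints (16 bytes) per record,
 * metadata index starting 16 bytes before an equator at byte 0, wrapping to the buffer end.
 */
class SortBufferMath {
    static final int NMETA = 4;            // metadata ints per record (assumption)
    static final int METASIZE = NMETA * 4; // 16 bytes of metadata per record

    /** "bufvoid": total buffer size in bytes. */
    static int bufferBytes(int sortMb) {
        return sortMb << 20;
    }

    /** "soft limit at ...": spill threshold, multiplied in float like the spill percent. */
    static int softLimit(int sortMb, float spillPercent) {
        return (int) (bufferBytes(sortMb) * spillPercent);
    }

    /** Denominator of "length = x/6553600": how many records' metadata fit in the buffer. */
    static int maxRecords(int sortMb) {
        return bufferBytes(sortMb) / METASIZE;
    }

    /** "kvstart": initial metadata index in ints (the log also prints it times 4, in bytes). */
    static int initialKvIndex(int sortMb) {
        return (bufferBytes(sortMb) - METASIZE) / 4;
    }

    /** Records in a spill: kvend indexes the last record's metadata; assumes no wrap-around. */
    static int recordsInSpill(int kvstart, int kvend) {
        return (kvstart - kvend) / NMETA + 1;
    }

    public static void main(String[] args) {
        System.out.println(bufferBytes(100));                   // 104857600
        System.out.println(softLimit(100, 0.80f));              // 83886080
        System.out.println(maxRecords(100));                    // 6553600
        System.out.println(initialKvIndex(100));                // 26214396
        System.out.println(recordsInSpill(26214396, 26101152)); // 28312
    }
}
```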

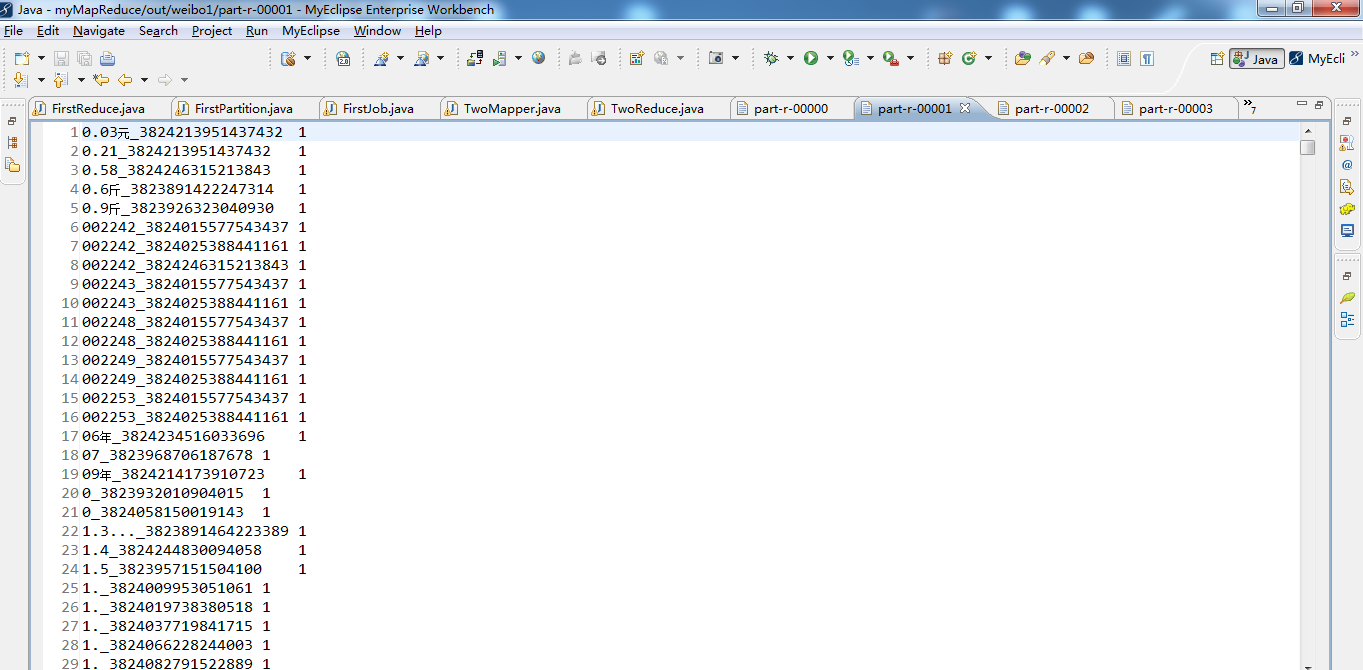

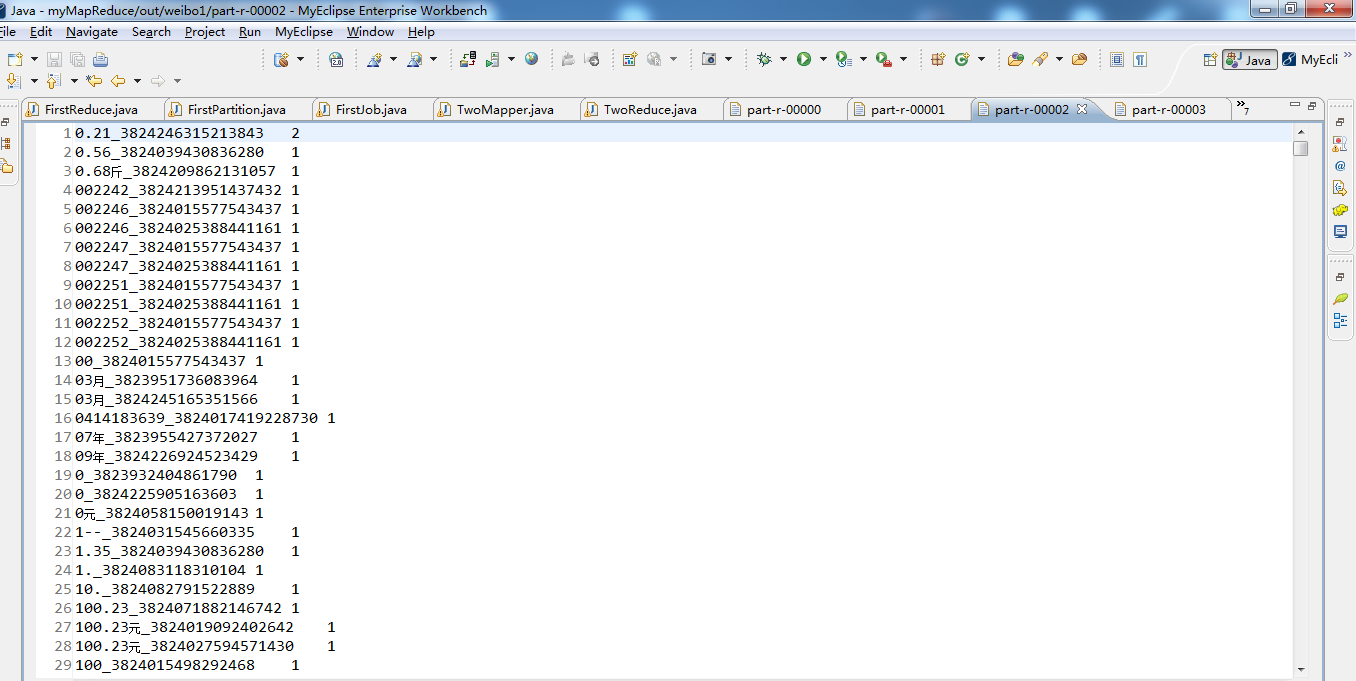

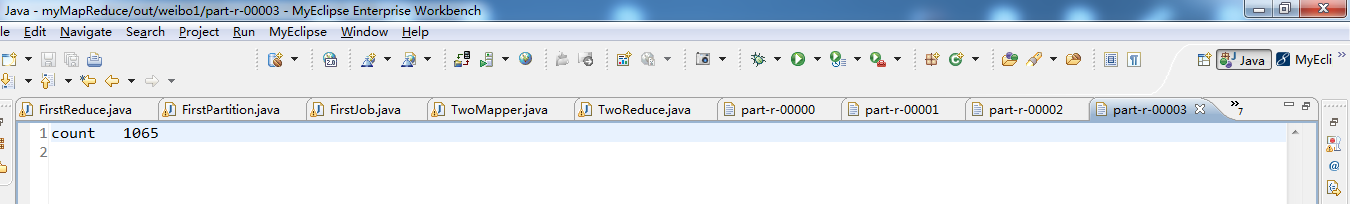

count___________1065
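The `count___________1065` line is not Hadoop output. It appears to be printed by the job's own code, and it shows up twice. The first time is during the map-side spill, when a combiner runs (the counters report `Combine input records=28312`). The second time is in the last reduce task, `r_000003`. The value 1065 equals `Map input records`, that is, one per input line. The last reducer's output file, `part-r-00003`, is only 11 bytes (the second run processes it as split `0+11`), which is exactly the length of `count\t1065\n`. Together these suggest a custom partitioner that sends a special `count` key to the last reducer and hashes every other key over the remaining three. The job code is not shown here, so the sketch below is a hypothetical reconstruction in plain Java. It applies `HashPartitioner`'s formula to `String.hashCode()`. Hadoop's `Text` keys hash differently, so the partition chosen for an ordinary word would differ.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical partitioner: the special "count" key goes to the last reducer, others are hashed. */
class CountKeyPartitioner {
    static final String COUNT_KEY = "count"; // assumed key name, taken from the log's debug line

    /** HashPartitioner's formula, applied here to a String key. */
    static int hashPartition(String key, int numPartitions) {
        return (key.hashCode() & Integer.MAX_VALUE) % numPartitions;
    }

    static int getPartition(String key, int numReduceTasks) {
        if (COUNT_KEY.equals(key)) {
            return numReduceTasks - 1;                 // 4 reducers -> partition 3 -> part-r-00003
        }
        return hashPartition(key, numReduceTasks - 1); // other keys spread over partitions 0..n-2
    }

    /** Bytes of one text output line: key, TAB, value, newline (default text output layout). */
    static int outputLineBytes(String key, long value) {
        return (key + "\t" + value + "\n").getBytes(StandardCharsets.UTF_8).length;
    }

    public static void main(String[] args) {
        System.out.println(getPartition("count", 4));       // 3
        System.out.println(outputLineBytes("count", 1065)); // 11
    }
}
```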

2016-12-12 15:07:55,674 INFO [org.apache.hadoop.mapred.MapTask] - Finished spill 0

2016-12-12 15:07:55,685 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local1414008937_0001_m_000000_0 is done. And is in the process of committing

2016-12-12 15:07:55,706 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map

2016-12-12 15:07:55,706 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local1414008937_0001_m_000000_0' done.

2016-12-12 15:07:55,706 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local1414008937_0001_m_000000_0

2016-12-12 15:07:55,707 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map task executor complete.

2016-12-12 15:07:55,714 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Waiting for reduce tasks

2016-12-12 15:07:55,714 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local1414008937_0001_r_000000_0

2016-12-12 15:07:55,727 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:07:55,754 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@24a11405

2016-12-12 15:07:55,758 INFO [org.apache.hadoop.mapred.ReduceTask] - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@12efdb85

2016-12-12 15:07:55,776 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - MergerManager: memoryLimit=1327077760, maxSingleShuffleLimit=331769440, mergeThreshold=875871360, ioSortFactor=10, memToMemMergeOutputsThreshold=10
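In the `MergerManager` line, `maxSingleShuffleLimit` and `mergeThreshold` are fixed fractions of `memoryLimit`. The sketch below reproduces both numbers. It assumes the defaults of `mapreduce.reduce.shuffle.memory.limit.percent` (0.25) and `mapreduce.reduce.shuffle.merge.percent` (0.66), and it assumes the multiplication is done in `float`, as the logged figures suggest. In exact arithmetic, `1327077760 * 0.66` is 875871321.6. The logged 875871360 only appears once the product is rounded to a `float`. `ioSortFactor=10` is the default of `mapreduce.task.io.sort.factor`.

```java
/** Recomputes the reduce-side shuffle thresholds from the logged memoryLimit (float math assumed). */
class ShuffleThresholds {
    /** Largest single map output kept in memory: memoryLimit * 0.25 by default. */
    static long maxSingleShuffleLimit(long memoryLimit, float singleShufflePercent) {
        return (long) (memoryLimit * singleShufflePercent); // long * float is evaluated in float
    }

    /** In-memory merge trigger: memoryLimit * 0.66 by default. */
    static long mergeThreshold(long memoryLimit, float mergePercent) {
        return (long) (memoryLimit * mergePercent);
    }

    public static void main(String[] args) {
        long memoryLimit = 1327077760L;                                // value printed in the log
        System.out.println(maxSingleShuffleLimit(memoryLimit, 0.25f)); // 331769440
        System.out.println(mergeThreshold(memoryLimit, 0.66f));        // 875871360
        System.out.println((long) (memoryLimit * 0.66));               // 875871321 in double math
    }
}
```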

2016-12-12 15:07:55,778 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - attempt_local1414008937_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

2016-12-12 15:07:55,810 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#1 about to shuffle output of map attempt_local1414008937_0001_m_000000_0 decomp: 222260 len: 222264 to MEMORY

2016-12-12 15:07:55,818 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 222260 bytes from map-output for attempt_local1414008937_0001_m_000000_0

2016-12-12 15:07:55,863 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 222260, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->222260

2016-12-12 15:07:55,865 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - EventFetcher is interrupted.. Returning

2016-12-12 15:07:55,866 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:55,867 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2016-12-12 15:07:55,876 INFO [org.apache.hadoop.mapred.Merger] - Merging 1 sorted segments

2016-12-12 15:07:55,876 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 1 segments left of total size: 222236 bytes

2016-12-12 15:07:55,952 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merged 1 segments, 222260 bytes to disk to satisfy reduce memory limit

2016-12-12 15:07:55,953 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 1 files, 222264 bytes from disk

2016-12-12 15:07:55,954 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 0 segments, 0 bytes from memory into reduce

2016-12-12 15:07:55,955 INFO [org.apache.hadoop.mapred.Merger] - Merging 1 sorted segments

2016-12-12 15:07:55,987 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 1 segments left of total size: 222236 bytes

2016-12-12 15:07:55,989 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:55,994 INFO [org.apache.hadoop.conf.Configuration.deprecation] - mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

2016-12-12 15:07:56,124 INFO [org.apache.hadoop.mapreduce.Job] - map 100% reduce 0%

2016-12-12 15:07:56,347 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local1414008937_0001_r_000000_0 is done. And is in the process of committing

2016-12-12 15:07:56,349 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:56,349 INFO [org.apache.hadoop.mapred.Task] - Task attempt_local1414008937_0001_r_000000_0 is allowed to commit now

2016-12-12 15:07:56,357 INFO [org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter] - Saved output of task 'attempt_local1414008937_0001_r_000000_0' to file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/_temporary/0/task_local1414008937_0001_r_000000

2016-12-12 15:07:56,358 INFO [org.apache.hadoop.mapred.LocalJobRunner] - reduce > reduce

2016-12-12 15:07:56,359 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local1414008937_0001_r_000000_0' done.

2016-12-12 15:07:56,359 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local1414008937_0001_r_000000_0

2016-12-12 15:07:56,359 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local1414008937_0001_r_000001_0

2016-12-12 15:07:56,365 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:07:56,391 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@464d02ee

2016-12-12 15:07:56,392 INFO [org.apache.hadoop.mapred.ReduceTask] - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@69fb7b50

2016-12-12 15:07:56,394 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - MergerManager: memoryLimit=1327077760, maxSingleShuffleLimit=331769440, mergeThreshold=875871360, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2016-12-12 15:07:56,395 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - attempt_local1414008937_0001_r_000001_0 Thread started: EventFetcher for fetching Map Completion Events

2016-12-12 15:07:56,399 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#2 about to shuffle output of map attempt_local1414008937_0001_m_000000_0 decomp: 226847 len: 226851 to MEMORY

2016-12-12 15:07:56,401 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 226847 bytes from map-output for attempt_local1414008937_0001_m_000000_0

2016-12-12 15:07:56,401 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 226847, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->226847

2016-12-12 15:07:56,402 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - EventFetcher is interrupted.. Returning

2016-12-12 15:07:56,402 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:56,402 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2016-12-12 15:07:56,407 INFO [org.apache.hadoop.mapred.Merger] - Merging 1 sorted segments

2016-12-12 15:07:56,407 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 1 segments left of total size: 226820 bytes

2016-12-12 15:07:56,488 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merged 1 segments, 226847 bytes to disk to satisfy reduce memory limit

2016-12-12 15:07:56,488 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 1 files, 226851 bytes from disk

2016-12-12 15:07:56,489 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 0 segments, 0 bytes from memory into reduce

2016-12-12 15:07:56,489 INFO [org.apache.hadoop.mapred.Merger] - Merging 1 sorted segments

2016-12-12 15:07:56,490 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 1 segments left of total size: 226820 bytes

2016-12-12 15:07:56,491 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:56,581 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local1414008937_0001_r_000001_0 is done. And is in the process of committing

2016-12-12 15:07:56,584 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:56,584 INFO [org.apache.hadoop.mapred.Task] - Task attempt_local1414008937_0001_r_000001_0 is allowed to commit now

2016-12-12 15:07:56,591 INFO [org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter] - Saved output of task 'attempt_local1414008937_0001_r_000001_0' to file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/_temporary/0/task_local1414008937_0001_r_000001

2016-12-12 15:07:56,593 INFO [org.apache.hadoop.mapred.LocalJobRunner] - reduce > reduce

2016-12-12 15:07:56,593 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local1414008937_0001_r_000001_0' done.

2016-12-12 15:07:56,593 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local1414008937_0001_r_000001_0

2016-12-12 15:07:56,593 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local1414008937_0001_r_000002_0

2016-12-12 15:07:56,596 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:07:56,640 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@36d0c62b

2016-12-12 15:07:56,640 INFO [org.apache.hadoop.mapred.ReduceTask] - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@44824d2a

2016-12-12 15:07:56,641 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - MergerManager: memoryLimit=1327077760, maxSingleShuffleLimit=331769440, mergeThreshold=875871360, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2016-12-12 15:07:56,643 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - attempt_local1414008937_0001_r_000002_0 Thread started: EventFetcher for fetching Map Completion Events

2016-12-12 15:07:56,648 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#3 about to shuffle output of map attempt_local1414008937_0001_m_000000_0 decomp: 224215 len: 224219 to MEMORY

2016-12-12 15:07:56,650 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 224215 bytes from map-output for attempt_local1414008937_0001_m_000000_0

2016-12-12 15:07:56,650 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 224215, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->224215

2016-12-12 15:07:56,651 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - EventFetcher is interrupted.. Returning

2016-12-12 15:07:56,651 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:56,652 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2016-12-12 15:07:56,658 INFO [org.apache.hadoop.mapred.Merger] - Merging 1 sorted segments

2016-12-12 15:07:56,658 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 1 segments left of total size: 224191 bytes

2016-12-12 15:07:56,675 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merged 1 segments, 224215 bytes to disk to satisfy reduce memory limit

2016-12-12 15:07:56,676 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 1 files, 224219 bytes from disk

2016-12-12 15:07:56,676 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 0 segments, 0 bytes from memory into reduce

2016-12-12 15:07:56,676 INFO [org.apache.hadoop.mapred.Merger] - Merging 1 sorted segments

2016-12-12 15:07:56,677 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 1 segments left of total size: 224191 bytes

2016-12-12 15:07:56,678 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:56,711 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local1414008937_0001_r_000002_0 is done. And is in the process of committing

2016-12-12 15:07:56,714 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:56,714 INFO [org.apache.hadoop.mapred.Task] - Task attempt_local1414008937_0001_r_000002_0 is allowed to commit now

2016-12-12 15:07:56,725 INFO [org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter] - Saved output of task 'attempt_local1414008937_0001_r_000002_0' to file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/_temporary/0/task_local1414008937_0001_r_000002

2016-12-12 15:07:56,726 INFO [org.apache.hadoop.mapred.LocalJobRunner] - reduce > reduce

2016-12-12 15:07:56,727 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local1414008937_0001_r_000002_0' done.

2016-12-12 15:07:56,727 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local1414008937_0001_r_000002_0

2016-12-12 15:07:56,727 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local1414008937_0001_r_000003_0

2016-12-12 15:07:56,729 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:07:56,749 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@42ed705f

2016-12-12 15:07:56,750 INFO [org.apache.hadoop.mapred.ReduceTask] - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@726c8f4c

2016-12-12 15:07:56,751 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - MergerManager: memoryLimit=1327077760, maxSingleShuffleLimit=331769440, mergeThreshold=875871360, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2016-12-12 15:07:56,752 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - attempt_local1414008937_0001_r_000003_0 Thread started: EventFetcher for fetching Map Completion Events

2016-12-12 15:07:56,757 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#4 about to shuffle output of map attempt_local1414008937_0001_m_000000_0 decomp: 14 len: 18 to MEMORY

2016-12-12 15:07:56,758 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 14 bytes from map-output for attempt_local1414008937_0001_m_000000_0

2016-12-12 15:07:56,758 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 14, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->14

2016-12-12 15:07:56,759 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - EventFetcher is interrupted.. Returning

2016-12-12 15:07:56,759 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:56,759 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2016-12-12 15:07:56,764 INFO [org.apache.hadoop.mapred.Merger] - Merging 1 sorted segments

2016-12-12 15:07:56,764 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 1 segments left of total size: 6 bytes

2016-12-12 15:07:56,765 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merged 1 segments, 14 bytes to disk to satisfy reduce memory limit

2016-12-12 15:07:56,765 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 1 files, 18 bytes from disk

2016-12-12 15:07:56,765 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 0 segments, 0 bytes from memory into reduce

2016-12-12 15:07:56,765 INFO [org.apache.hadoop.mapred.Merger] - Merging 1 sorted segments

2016-12-12 15:07:56,766 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 1 segments left of total size: 6 bytes

2016-12-12 15:07:56,766 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

count___________1065

2016-12-12 15:07:56,770 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local1414008937_0001_r_000003_0 is done. And is in the process of committing

2016-12-12 15:07:56,771 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 1 / 1 copied.

2016-12-12 15:07:56,771 INFO [org.apache.hadoop.mapred.Task] - Task attempt_local1414008937_0001_r_000003_0 is allowed to commit now

2016-12-12 15:07:56,777 INFO [org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter] - Saved output of task 'attempt_local1414008937_0001_r_000003_0' to file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/_temporary/0/task_local1414008937_0001_r_000003

2016-12-12 15:07:56,778 INFO [org.apache.hadoop.mapred.LocalJobRunner] - reduce > reduce

2016-12-12 15:07:56,778 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local1414008937_0001_r_000003_0' done.

2016-12-12 15:07:56,778 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local1414008937_0001_r_000003_0

2016-12-12 15:07:56,779 INFO [org.apache.hadoop.mapred.LocalJobRunner] - reduce task executor complete.

2016-12-12 15:07:57,127 INFO [org.apache.hadoop.mapreduce.Job] - map 100% reduce 100%

2016-12-12 15:07:57,137 INFO [org.apache.hadoop.mapreduce.Job] - Job job_local1414008937_0001 completed successfully

```
2016-12-12 15:07:57,186 INFO [org.apache.hadoop.mapreduce.Job] - Counters: 33
    File System Counters
        FILE: Number of bytes read=4937350
        FILE: Number of bytes written=8113860
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
    Map-Reduce Framework
        Map input records=1065
        Map output records=28312
        Map output bytes=747379
        Map output materialized bytes=673352
        Input split bytes=127
        Combine input records=28312
        Combine output records=23098
        Reduce input groups=23098
        Reduce shuffle bytes=673352
        Reduce input records=23098
        Reduce output records=23098
        Spilled Records=46196
        Shuffled Maps =4
        Failed Shuffles=0
        Merged Map outputs=4
        GC time elapsed (ms)=165
        CPU time spent (ms)=0
        Physical memory (bytes) snapshot=0
        Virtual memory (bytes) snapshot=0
        Total committed heap usage (bytes)=1672478720
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=174116
    File Output Format Counters
        Bytes Written=585532
```
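These counters can be cross-checked against the per-reducer log lines. The first run has one map task and four reduce tasks, so each reducer fetches exactly one map output segment, which gives `Shuffled Maps =4` and `Merged Map outputs=4`. The four `LocalFetcher` lines report `len:` values of 222264, 226851, 224219 and 18 bytes. Their sum is exactly `Reduce shuffle bytes=673352`, which also equals `Map output materialized bytes`. Each `decomp:` value is 4 bytes smaller than its `len:` value. That gap is consistent with a small trailing checksum per segment, but this is an interpretation; the sketch only computes the difference.

```java
import java.util.stream.LongStream;

/** Cross-checks the first job's shuffle counters against the LocalFetcher log lines. */
class ShuffleCounterCheck {
    // "len:" and "decomp:" values from the localfetcher lines of reducers r_000000..r_000003
    static final long[] FETCHED_LEN = {222264L, 226851L, 224219L, 18L};
    static final long[] DECOMPRESSED = {222260L, 226847L, 224215L, 14L};

    static long total(long[] values) {
        return LongStream.of(values).sum();
    }

    public static void main(String[] args) {
        System.out.println(total(FETCHED_LEN));                       // 673352 = Reduce shuffle bytes
        System.out.println(total(FETCHED_LEN) - total(DECOMPRESSED)); // 16 = 4 bytes x 4 segments
        System.out.println(FETCHED_LEN.length);                       // 4 = Shuffled Maps (1 map x 4 reducers)
    }
}
```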

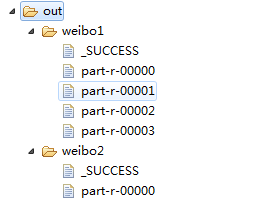

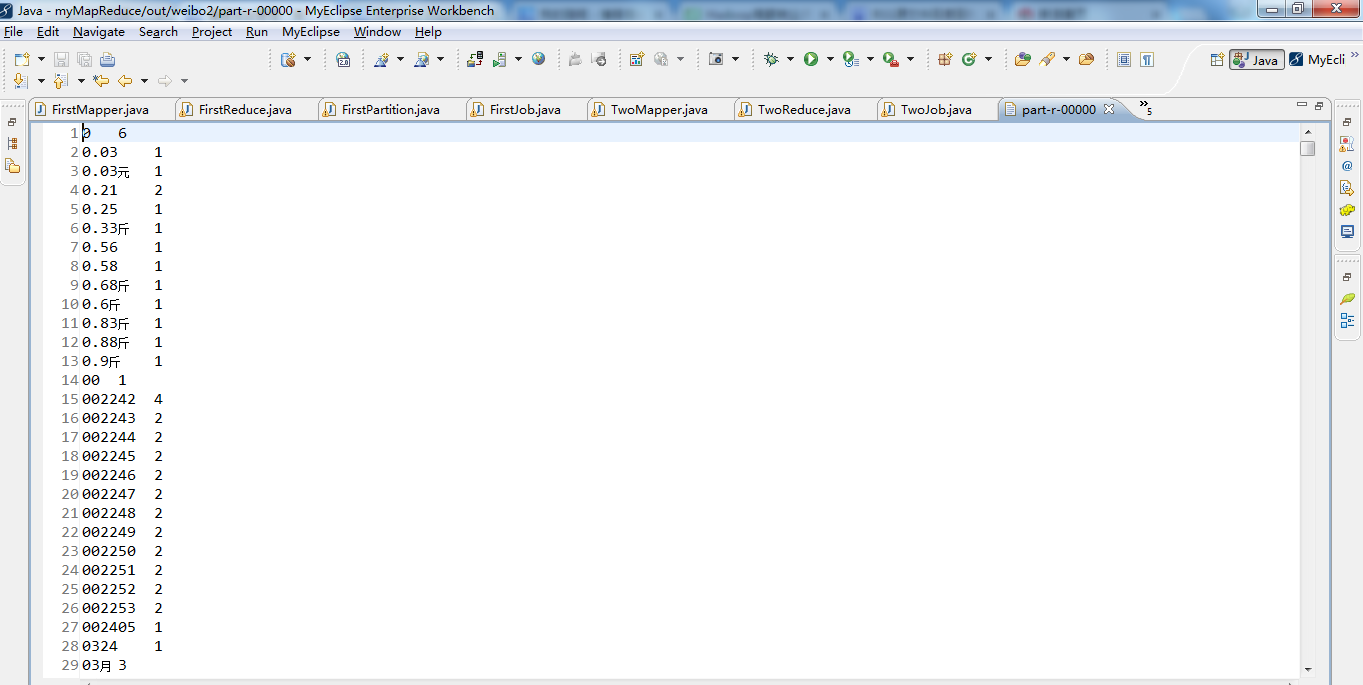

Running the second job, which takes the four `part-r-*` files in `out/weibo1` as its input:

2016-12-12 15:10:36,011 INFO [org.apache.hadoop.metrics.jvm.JvmMetrics] - Initializing JVM Metrics with processName=JobTracker, sessionId=

2016-12-12 15:10:36,436 WARN [org.apache.hadoop.mapreduce.JobSubmitter] - Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

2016-12-12 15:10:36,438 WARN [org.apache.hadoop.mapreduce.JobSubmitter] - No job jar file set. User classes may not be found. See Job or Job#setJar(String).

2016-12-12 15:10:36,892 INFO [org.apache.hadoop.mapreduce.lib.input.FileInputFormat] - Total input paths to process : 4

2016-12-12 15:10:36,959 INFO [org.apache.hadoop.mapreduce.JobSubmitter] - number of splits:4

2016-12-12 15:10:37,215 INFO [org.apache.hadoop.mapreduce.JobSubmitter] - Submitting tokens for job: job_local564512176_0001

2016-12-12 15:10:37,668 INFO [org.apache.hadoop.mapreduce.Job] - The url to track the job: http://localhost:8080/

2016-12-12 15:10:37,670 INFO [org.apache.hadoop.mapreduce.Job] - Running job: job_local564512176_0001

2016-12-12 15:10:37,672 INFO [org.apache.hadoop.mapred.LocalJobRunner] - OutputCommitter set in config null

2016-12-12 15:10:37,685 INFO [org.apache.hadoop.mapred.LocalJobRunner] - OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2016-12-12 15:10:37,757 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Waiting for map tasks

2016-12-12 15:10:37,759 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local564512176_0001_m_000000_0

2016-12-12 15:10:37,822 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:10:37,854 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@12633e10

2016-12-12 15:10:37,861 INFO [org.apache.hadoop.mapred.MapTask] - Processing split: file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/part-r-00001:0+195718

2016-12-12 15:10:37,924 INFO [org.apache.hadoop.mapred.MapTask] - (EQUATOR) 0 kvi 26214396(104857584)

2016-12-12 15:10:37,924 INFO [org.apache.hadoop.mapred.MapTask] - mapreduce.task.io.sort.mb: 100

2016-12-12 15:10:37,925 INFO [org.apache.hadoop.mapred.MapTask] - soft limit at 83886080

2016-12-12 15:10:37,925 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufvoid = 104857600

2016-12-12 15:10:37,925 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396; length = 6553600

2016-12-12 15:10:37,932 INFO [org.apache.hadoop.mapred.MapTask] - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2016-12-12 15:10:38,401 INFO [org.apache.hadoop.mapred.LocalJobRunner] -

2016-12-12 15:10:38,402 INFO [org.apache.hadoop.mapred.MapTask] - Starting flush of map output

2016-12-12 15:10:38,402 INFO [org.apache.hadoop.mapred.MapTask] - Spilling map output

2016-12-12 15:10:38,402 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufend = 78968; bufvoid = 104857600

2016-12-12 15:10:38,402 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396(104857584); kvend = 26183268(104733072); length = 31129/6553600

2016-12-12 15:10:38,673 INFO [org.apache.hadoop.mapreduce.Job] - Job job_local564512176_0001 running in uber mode : false

2016-12-12 15:10:38,676 INFO [org.apache.hadoop.mapreduce.Job] - map 0% reduce 0%

2016-12-12 15:10:38,724 INFO [org.apache.hadoop.mapred.MapTask] - Finished spill 0

2016-12-12 15:10:38,730 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local564512176_0001_m_000000_0 is done. And is in the process of committing

2016-12-12 15:10:38,744 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map

2016-12-12 15:10:38,744 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local564512176_0001_m_000000_0' done.

2016-12-12 15:10:38,745 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local564512176_0001_m_000000_0

2016-12-12 15:10:38,745 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local564512176_0001_m_000001_0

2016-12-12 15:10:38,748 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:10:38,778 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@43aa735f

2016-12-12 15:10:38,784 INFO [org.apache.hadoop.mapred.MapTask] - Processing split: file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/part-r-00002:0+193443

2016-12-12 15:10:38,820 INFO [org.apache.hadoop.mapred.MapTask] - (EQUATOR) 0 kvi 26214396(104857584)

2016-12-12 15:10:38,820 INFO [org.apache.hadoop.mapred.MapTask] - mapreduce.task.io.sort.mb: 100

2016-12-12 15:10:38,820 INFO [org.apache.hadoop.mapred.MapTask] - soft limit at 83886080

2016-12-12 15:10:38,821 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufvoid = 104857600

2016-12-12 15:10:38,821 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396; length = 6553600

2016-12-12 15:10:38,822 INFO [org.apache.hadoop.mapred.MapTask] - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2016-12-12 15:10:39,017 INFO [org.apache.hadoop.mapred.LocalJobRunner] -

2016-12-12 15:10:39,017 INFO [org.apache.hadoop.mapred.MapTask] - Starting flush of map output

2016-12-12 15:10:39,018 INFO [org.apache.hadoop.mapred.MapTask] - Spilling map output

2016-12-12 15:10:39,018 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufend = 78027; bufvoid = 104857600

2016-12-12 15:10:39,018 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396(104857584); kvend = 26183624(104734496); length = 30773/6553600

2016-12-12 15:10:39,157 INFO [org.apache.hadoop.mapred.MapTask] - Finished spill 0

2016-12-12 15:10:39,162 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local564512176_0001_m_000001_0 is done. And is in the process of committing

2016-12-12 15:10:39,166 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map

2016-12-12 15:10:39,166 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local564512176_0001_m_000001_0' done.

2016-12-12 15:10:39,166 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local564512176_0001_m_000001_0

2016-12-12 15:10:39,167 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local564512176_0001_m_000002_0

2016-12-12 15:10:39,171 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:10:39,219 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@405f4f03

2016-12-12 15:10:39,222 INFO [org.apache.hadoop.mapred.MapTask] - Processing split: file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/part-r-00000:0+191780

2016-12-12 15:10:39,265 INFO [org.apache.hadoop.mapred.MapTask] - (EQUATOR) 0 kvi 26214396(104857584)

2016-12-12 15:10:39,265 INFO [org.apache.hadoop.mapred.MapTask] - mapreduce.task.io.sort.mb: 100

2016-12-12 15:10:39,265 INFO [org.apache.hadoop.mapred.MapTask] - soft limit at 83886080

2016-12-12 15:10:39,265 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufvoid = 104857600

2016-12-12 15:10:39,265 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396; length = 6553600

2016-12-12 15:10:39,270 INFO [org.apache.hadoop.mapred.MapTask] - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2016-12-12 15:10:39,311 INFO [org.apache.hadoop.mapred.LocalJobRunner] -

2016-12-12 15:10:39,311 INFO [org.apache.hadoop.mapred.MapTask] - Starting flush of map output

2016-12-12 15:10:39,311 INFO [org.apache.hadoop.mapred.MapTask] - Spilling map output

2016-12-12 15:10:39,311 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufend = 77478; bufvoid = 104857600

2016-12-12 15:10:39,312 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396(104857584); kvend = 26183920(104735680); length = 30477/6553600

2016-12-12 15:10:39,360 INFO [org.apache.hadoop.mapred.MapTask] - Finished spill 0

2016-12-12 15:10:39,365 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local564512176_0001_m_000002_0 is done. And is in the process of committing

2016-12-12 15:10:39,368 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map

2016-12-12 15:10:39,369 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local564512176_0001_m_000002_0' done.

2016-12-12 15:10:39,369 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local564512176_0001_m_000002_0

2016-12-12 15:10:39,369 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local564512176_0001_m_000003_0

2016-12-12 15:10:39,372 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:10:39,416 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@4e5497cb

2016-12-12 15:10:39,419 INFO [org.apache.hadoop.mapred.MapTask] - Processing split: file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/part-r-00003:0+11
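The second run's four map tasks process the part files of `out/weibo1` largest first: `part-r-00001` (195718 bytes), `part-r-00002` (193443), `part-r-00000` (191780) and finally `part-r-00003` (11). This order is consistent with the job client sorting input splits by length in descending order before submission, so that the biggest splits are scheduled first. The following is a minimal plain-Java sketch of that ordering, using the sizes from the log. The `Split` class is a hypothetical stand-in for Hadoop's `InputSplit`.

```java
import java.util.*;

/** Orders input splits largest-first, mirroring the order of the "Processing split" lines. */
class SplitOrder {
    /** Hypothetical stand-in for an input split: a file path plus its length in bytes. */
    static final class Split {
        final String path;
        final long length;

        Split(String path, long length) {
            this.path = path;
            this.length = length;
        }

        @Override
        public String toString() {
            return path + ":0+" + length; // same "path:start+length" shape as the log
        }
    }

    static List<Split> largestFirst(List<Split> splits) {
        List<Split> sorted = new ArrayList<>(splits);
        sorted.sort(Comparator.comparingLong((Split s) -> s.length).reversed());
        return sorted;
    }

    public static void main(String[] args) {
        List<Split> splits = Arrays.asList(
                new Split("part-r-00000", 191780),
                new Split("part-r-00001", 195718),
                new Split("part-r-00002", 193443),
                new Split("part-r-00003", 11));
        System.out.println(largestFirst(splits));
        // [part-r-00001:0+195718, part-r-00002:0+193443, part-r-00000:0+191780, part-r-00003:0+11]
    }
}
```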

2016-12-12 15:10:39,461 INFO [org.apache.hadoop.mapred.MapTask] - (EQUATOR) 0 kvi 26214396(104857584)

2016-12-12 15:10:39,461 INFO [org.apache.hadoop.mapred.MapTask] - mapreduce.task.io.sort.mb: 100

2016-12-12 15:10:39,461 INFO [org.apache.hadoop.mapred.MapTask] - soft limit at 83886080

2016-12-12 15:10:39,461 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufvoid = 104857600

2016-12-12 15:10:39,462 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396; length = 6553600

2016-12-12 15:10:39,463 INFO [org.apache.hadoop.mapred.MapTask] - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2016-12-12 15:10:39,466 INFO [org.apache.hadoop.mapred.LocalJobRunner] -

2016-12-12 15:10:39,466 INFO [org.apache.hadoop.mapred.MapTask] - Starting flush of map output

2016-12-12 15:10:39,479 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local564512176_0001_m_000003_0 is done. And is in the process of committing

2016-12-12 15:10:39,482 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map

2016-12-12 15:10:39,482 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local564512176_0001_m_000003_0' done.

2016-12-12 15:10:39,482 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local564512176_0001_m_000003_0

2016-12-12 15:10:39,482 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map task executor complete.

2016-12-12 15:10:39,487 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Waiting for reduce tasks

2016-12-12 15:10:39,488 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local564512176_0001_r_000000_0

2016-12-12 15:10:39,497 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:10:39,519 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@6d565f45

2016-12-12 15:10:39,523 INFO [org.apache.hadoop.mapred.ReduceTask] - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@1f719a8d

2016-12-12 15:10:39,538 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - MergerManager: memoryLimit=1327077760, maxSingleShuffleLimit=331769440, mergeThreshold=875871360, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2016-12-12 15:10:39,541 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - attempt_local564512176_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

2016-12-12 15:10:39,583 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#1 about to shuffle output of map attempt_local564512176_0001_m_000002_0 decomp: 37768 len: 37772 to MEMORY

2016-12-12 15:10:39,589 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 37768 bytes from map-output for attempt_local564512176_0001_m_000002_0

2016-12-12 15:10:39,638 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 37768, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->37768

2016-12-12 15:10:39,644 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#1 about to shuffle output of map attempt_local564512176_0001_m_000001_0 decomp: 37233 len: 37237 to MEMORY

2016-12-12 15:10:39,646 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 37233 bytes from map-output for attempt_local564512176_0001_m_000001_0

2016-12-12 15:10:39,647 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 37233, inMemoryMapOutputs.size() -> 2, commitMemory -> 37768, usedMemory ->75001

2016-12-12 15:10:39,652 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#1 about to shuffle output of map attempt_local564512176_0001_m_000000_0 decomp: 37343 len: 37347 to MEMORY

2016-12-12 15:10:39,653 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 37343 bytes from map-output for attempt_local564512176_0001_m_000000_0

2016-12-12 15:10:39,654 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 37343, inMemoryMapOutputs.size() -> 3, commitMemory -> 75001, usedMemory ->112344

2016-12-12 15:10:39,658 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#1 about to shuffle output of map attempt_local564512176_0001_m_000003_0 decomp: 2 len: 6 to MEMORY

2016-12-12 15:10:39,659 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 2 bytes from map-output for attempt_local564512176_0001_m_000003_0

2016-12-12 15:10:39,660 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 2, inMemoryMapOutputs.size() -> 4, commitMemory -> 112344, usedMemory ->112346

2016-12-12 15:10:39,660 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - EventFetcher is interrupted.. Returning

2016-12-12 15:10:39,661 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 4 / 4 copied.

2016-12-12 15:10:39,662 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - finalMerge called with 4 in-memory map-outputs and 0 on-disk map-outputs

2016-12-12 15:10:39,673 INFO [org.apache.hadoop.mapred.Merger] - Merging 4 sorted segments

2016-12-12 15:10:39,674 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 3 segments left of total size: 112332 bytes

2016-12-12 15:10:39,678 INFO [org.apache.hadoop.mapreduce.Job] - map 100% reduce 0%

2016-12-12 15:10:39,780 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merged 4 segments, 112346 bytes to disk to satisfy reduce memory limit

2016-12-12 15:10:39,781 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 1 files, 112344 bytes from disk

2016-12-12 15:10:39,783 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 0 segments, 0 bytes from memory into reduce

2016-12-12 15:10:39,784 INFO [org.apache.hadoop.mapred.Merger] - Merging 1 sorted segments

2016-12-12 15:10:39,785 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 1 segments left of total size: 112336 bytes

2016-12-12 15:10:39,785 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 4 / 4 copied.

2016-12-12 15:10:39,792 INFO [org.apache.hadoop.conf.Configuration.deprecation] - mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

2016-12-12 15:10:40,343 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local564512176_0001_r_000000_0 is done. And is in the process of committing

2016-12-12 15:10:40,346 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 4 / 4 copied.

2016-12-12 15:10:40,346 INFO [org.apache.hadoop.mapred.Task] - Task attempt_local564512176_0001_r_000000_0 is allowed to commit now

2016-12-12 15:10:40,353 INFO [org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter] - Saved output of task 'attempt_local564512176_0001_r_000000_0' to file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo2/_temporary/0/task_local564512176_0001_r_000000

2016-12-12 15:10:40,363 INFO [org.apache.hadoop.mapred.LocalJobRunner] - reduce > reduce

2016-12-12 15:10:40,364 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local564512176_0001_r_000000_0' done.

2016-12-12 15:10:40,364 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local564512176_0001_r_000000_0

2016-12-12 15:10:40,364 INFO [org.apache.hadoop.mapred.LocalJobRunner] - reduce task executor complete.

2016-12-12 15:10:40,678 INFO [org.apache.hadoop.mapreduce.Job] - map 100% reduce 100%

2016-12-12 15:10:40,678 INFO [org.apache.hadoop.mapreduce.Job] - Job job_local564512176_0001 completed successfully

2016-12-12 15:10:40,701 INFO [org.apache.hadoop.mapreduce.Job] - Counters: 33

File System Counters

FILE: Number of bytes read=2579152

FILE: Number of bytes written=1581170

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=23098

Map output records=23097

Map output bytes=234473

Map output materialized bytes=112362

Input split bytes=528

Combine input records=23097

Combine output records=8774

Reduce input groups=5567

Reduce shuffle bytes=112362

Reduce input records=8774

Reduce output records=5567

Spilled Records=17548

Shuffled Maps =4

Failed Shuffles=0

Merged Map outputs=4

GC time elapsed (ms)=48

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=2114977792

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=585564

File Output Format Counters

Bytes Written=50762

Job executed successfully

Run

2016-12-12 15:12:33,225 INFO [org.apache.hadoop.metrics.jvm.JvmMetrics] - Initializing JVM Metrics with processName=JobTracker, sessionId=

2016-12-12 15:12:33,823 WARN [org.apache.hadoop.mapreduce.JobSubmitter] - Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

2016-12-12 15:12:33,824 WARN [org.apache.hadoop.mapreduce.JobSubmitter] - No job jar file set. User classes may not be found. See Job or Job#setJar(String).

2016-12-12 15:12:34,364 INFO [org.apache.hadoop.mapreduce.lib.input.FileInputFormat] - Total input paths to process : 4

2016-12-12 15:12:34,410 INFO [org.apache.hadoop.mapreduce.JobSubmitter] - number of splits:4

2016-12-12 15:12:34,729 INFO [org.apache.hadoop.mapreduce.JobSubmitter] - Submitting tokens for job: job_local671371338_0001

2016-12-12 15:12:35,471 INFO [org.apache.hadoop.mapred.LocalDistributedCacheManager] - Creating symlink: \tmp\hadoop-Administrator\mapred\local\1481526755080\part-r-00003 <- D:\Code\MyEclipseJavaCode\myMapReduce/part-r-00003

2016-12-12 15:12:35,516 INFO [org.apache.hadoop.mapred.LocalDistributedCacheManager] - Localized file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/part-r-00003 as file:/tmp/hadoop-Administrator/mapred/local/1481526755080/part-r-00003

2016-12-12 15:12:35,521 INFO [org.apache.hadoop.mapred.LocalDistributedCacheManager] - Creating symlink: \tmp\hadoop-Administrator\mapred\local\1481526755081\part-r-00000 <- D:\Code\MyEclipseJavaCode\myMapReduce/part-r-00000

2016-12-12 15:12:35,544 INFO [org.apache.hadoop.mapred.LocalDistributedCacheManager] - Localized file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo2/part-r-00000 as file:/tmp/hadoop-Administrator/mapred/local/1481526755081/part-r-00000

2016-12-12 15:12:35,696 INFO [org.apache.hadoop.mapreduce.Job] - The url to track the job: http://localhost:8080/

2016-12-12 15:12:35,697 INFO [org.apache.hadoop.mapreduce.Job] - Running job: job_local671371338_0001

2016-12-12 15:12:35,703 INFO [org.apache.hadoop.mapred.LocalJobRunner] - OutputCommitter set in config null

2016-12-12 15:12:35,715 INFO [org.apache.hadoop.mapred.LocalJobRunner] - OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2016-12-12 15:12:35,772 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Waiting for map tasks

2016-12-12 15:12:35,772 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local671371338_0001_m_000000_0

2016-12-12 15:12:35,819 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:12:35,852 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@50b97c8b

2016-12-12 15:12:35,858 INFO [org.apache.hadoop.mapred.MapTask] - Processing split: file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/part-r-00001:0+195718

2016-12-12 15:12:35,926 INFO [org.apache.hadoop.mapred.MapTask] - (EQUATOR) 0 kvi 26214396(104857584)

2016-12-12 15:12:35,926 INFO [org.apache.hadoop.mapred.MapTask] - mapreduce.task.io.sort.mb: 100

2016-12-12 15:12:35,926 INFO [org.apache.hadoop.mapred.MapTask] - soft limit at 83886080

2016-12-12 15:12:35,926 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufvoid = 104857600

2016-12-12 15:12:35,927 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396; length = 6553600

2016-12-12 15:12:35,938 INFO [org.apache.hadoop.mapred.MapTask] - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

******************

2016-12-12 15:12:36,701 INFO [org.apache.hadoop.mapreduce.Job] - Job job_local671371338_0001 running in uber mode : false

2016-12-12 15:12:36,703 INFO [org.apache.hadoop.mapreduce.Job] - map 0% reduce 0%

2016-12-12 15:12:36,965 INFO [org.apache.hadoop.mapred.LocalJobRunner] -

2016-12-12 15:12:36,966 INFO [org.apache.hadoop.mapred.MapTask] - Starting flush of map output

2016-12-12 15:12:36,966 INFO [org.apache.hadoop.mapred.MapTask] - Spilling map output

2016-12-12 15:12:36,966 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufend = 239755; bufvoid = 104857600

2016-12-12 15:12:36,966 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396(104857584); kvend = 26183268(104733072); length = 31129/6553600

2016-12-12 15:12:37,135 INFO [org.apache.hadoop.mapred.MapTask] - Finished spill 0

2016-12-12 15:12:37,141 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local671371338_0001_m_000000_0 is done. And is in the process of committing

2016-12-12 15:12:37,153 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map

2016-12-12 15:12:37,153 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local671371338_0001_m_000000_0' done.

2016-12-12 15:12:37,154 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local671371338_0001_m_000000_0

2016-12-12 15:12:37,154 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local671371338_0001_m_000001_0

2016-12-12 15:12:37,156 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:12:37,191 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@70849e34

2016-12-12 15:12:37,194 INFO [org.apache.hadoop.mapred.MapTask] - Processing split: file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/part-r-00002:0+193443

2016-12-12 15:12:37,229 INFO [org.apache.hadoop.mapred.MapTask] - (EQUATOR) 0 kvi 26214396(104857584)

2016-12-12 15:12:37,229 INFO [org.apache.hadoop.mapred.MapTask] - mapreduce.task.io.sort.mb: 100

2016-12-12 15:12:37,229 INFO [org.apache.hadoop.mapred.MapTask] - soft limit at 83886080

2016-12-12 15:12:37,230 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufvoid = 104857600

2016-12-12 15:12:37,230 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396; length = 6553600

2016-12-12 15:12:37,230 INFO [org.apache.hadoop.mapred.MapTask] - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

******************

2016-12-12 15:12:37,601 INFO [org.apache.hadoop.mapred.LocalJobRunner] -

2016-12-12 15:12:37,602 INFO [org.apache.hadoop.mapred.MapTask] - Starting flush of map output

2016-12-12 15:12:37,602 INFO [org.apache.hadoop.mapred.MapTask] - Spilling map output

2016-12-12 15:12:37,602 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufend = 237126; bufvoid = 104857600

2016-12-12 15:12:37,602 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396(104857584); kvend = 26183624(104734496); length = 30773/6553600

2016-12-12 15:12:37,651 INFO [org.apache.hadoop.mapred.MapTask] - Finished spill 0

2016-12-12 15:12:37,683 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local671371338_0001_m_000001_0 is done. And is in the process of committing

2016-12-12 15:12:37,687 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map

2016-12-12 15:12:37,687 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local671371338_0001_m_000001_0' done.

2016-12-12 15:12:37,687 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local671371338_0001_m_000001_0

2016-12-12 15:12:37,687 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local671371338_0001_m_000002_0

2016-12-12 15:12:37,690 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:12:37,722 INFO [org.apache.hadoop.mapreduce.Job] - map 100% reduce 0%

2016-12-12 15:12:37,810 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@544b0d4c

2016-12-12 15:12:37,813 INFO [org.apache.hadoop.mapred.MapTask] - Processing split: file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/part-r-00000:0+191780

2016-12-12 15:12:37,851 INFO [org.apache.hadoop.mapred.MapTask] - (EQUATOR) 0 kvi 26214396(104857584)

2016-12-12 15:12:37,851 INFO [org.apache.hadoop.mapred.MapTask] - mapreduce.task.io.sort.mb: 100

2016-12-12 15:12:37,851 INFO [org.apache.hadoop.mapred.MapTask] - soft limit at 83886080

2016-12-12 15:12:37,851 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufvoid = 104857600

2016-12-12 15:12:37,852 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396; length = 6553600

2016-12-12 15:12:37,853 INFO [org.apache.hadoop.mapred.MapTask] - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

******************

2016-12-12 15:12:37,915 INFO [org.apache.hadoop.mapred.LocalJobRunner] -

2016-12-12 15:12:37,915 INFO [org.apache.hadoop.mapred.MapTask] - Starting flush of map output

2016-12-12 15:12:37,916 INFO [org.apache.hadoop.mapred.MapTask] - Spilling map output

2016-12-12 15:12:37,916 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufend = 234731; bufvoid = 104857600

2016-12-12 15:12:37,916 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396(104857584); kvend = 26183920(104735680); length = 30477/6553600

2016-12-12 15:12:37,939 INFO [org.apache.hadoop.mapred.MapTask] - Finished spill 0

2016-12-12 15:12:37,943 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local671371338_0001_m_000002_0 is done. And is in the process of committing

2016-12-12 15:12:37,946 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map

2016-12-12 15:12:37,946 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local671371338_0001_m_000002_0' done.

2016-12-12 15:12:37,946 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local671371338_0001_m_000002_0

2016-12-12 15:12:37,947 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local671371338_0001_m_000003_0

2016-12-12 15:12:37,950 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:12:37,999 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@6c241f31

2016-12-12 15:12:38,002 INFO [org.apache.hadoop.mapred.MapTask] - Processing split: file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo1/part-r-00003:0+11

2016-12-12 15:12:38,046 INFO [org.apache.hadoop.mapred.MapTask] - (EQUATOR) 0 kvi 26214396(104857584)

2016-12-12 15:12:38,046 INFO [org.apache.hadoop.mapred.MapTask] - mapreduce.task.io.sort.mb: 100

2016-12-12 15:12:38,046 INFO [org.apache.hadoop.mapred.MapTask] - soft limit at 83886080

2016-12-12 15:12:38,046 INFO [org.apache.hadoop.mapred.MapTask] - bufstart = 0; bufvoid = 104857600

2016-12-12 15:12:38,046 INFO [org.apache.hadoop.mapred.MapTask] - kvstart = 26214396; length = 6553600

2016-12-12 15:12:38,047 INFO [org.apache.hadoop.mapred.MapTask] - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

******************

2016-12-12 15:12:38,050 INFO [org.apache.hadoop.mapred.LocalJobRunner] -

2016-12-12 15:12:38,050 INFO [org.apache.hadoop.mapred.MapTask] - Starting flush of map output

2016-12-12 15:12:38,060 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local671371338_0001_m_000003_0 is done. And is in the process of committing

2016-12-12 15:12:38,063 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map

2016-12-12 15:12:38,063 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local671371338_0001_m_000003_0' done.

2016-12-12 15:12:38,064 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local671371338_0001_m_000003_0

2016-12-12 15:12:38,064 INFO [org.apache.hadoop.mapred.LocalJobRunner] - map task executor complete.

2016-12-12 15:12:38,067 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Waiting for reduce tasks

2016-12-12 15:12:38,067 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Starting task: attempt_local671371338_0001_r_000000_0

2016-12-12 15:12:38,079 INFO [org.apache.hadoop.yarn.util.ProcfsBasedProcessTree] - ProcfsBasedProcessTree currently is supported only on Linux.

2016-12-12 15:12:38,104 INFO [org.apache.hadoop.mapred.Task] - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@777da320

2016-12-12 15:12:38,116 INFO [org.apache.hadoop.mapred.ReduceTask] - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@76a01b4b

2016-12-12 15:12:38,133 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - MergerManager: memoryLimit=1327077760, maxSingleShuffleLimit=331769440, mergeThreshold=875871360, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2016-12-12 15:12:38,135 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - attempt_local671371338_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

2016-12-12 15:12:38,165 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#1 about to shuffle output of map attempt_local671371338_0001_m_000001_0 decomp: 252516 len: 252520 to MEMORY

2016-12-12 15:12:38,169 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 252516 bytes from map-output for attempt_local671371338_0001_m_000001_0

2016-12-12 15:12:38,216 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 252516, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->252516

2016-12-12 15:12:38,221 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#1 about to shuffle output of map attempt_local671371338_0001_m_000002_0 decomp: 249973 len: 249977 to MEMORY

2016-12-12 15:12:38,223 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 249973 bytes from map-output for attempt_local671371338_0001_m_000002_0

2016-12-12 15:12:38,224 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 249973, inMemoryMapOutputs.size() -> 2, commitMemory -> 252516, usedMemory ->502489

2016-12-12 15:12:38,230 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#1 about to shuffle output of map attempt_local671371338_0001_m_000000_0 decomp: 255323 len: 255327 to MEMORY

2016-12-12 15:12:38,233 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 255323 bytes from map-output for attempt_local671371338_0001_m_000000_0

2016-12-12 15:12:38,233 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 255323, inMemoryMapOutputs.size() -> 3, commitMemory -> 502489, usedMemory ->757812

2016-12-12 15:12:38,235 INFO [org.apache.hadoop.mapreduce.task.reduce.LocalFetcher] - localfetcher#1 about to shuffle output of map attempt_local671371338_0001_m_000003_0 decomp: 2 len: 6 to MEMORY

2016-12-12 15:12:38,236 INFO [org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput] - Read 2 bytes from map-output for attempt_local671371338_0001_m_000003_0

2016-12-12 15:12:38,236 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - closeInMemoryFile -> map-output of size: 2, inMemoryMapOutputs.size() -> 4, commitMemory -> 757812, usedMemory ->757814

2016-12-12 15:12:38,237 INFO [org.apache.hadoop.mapreduce.task.reduce.EventFetcher] - EventFetcher is interrupted.. Returning

2016-12-12 15:12:38,238 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 4 / 4 copied.

2016-12-12 15:12:38,238 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - finalMerge called with 4 in-memory map-outputs and 0 on-disk map-outputs

2016-12-12 15:12:38,252 INFO [org.apache.hadoop.mapred.Merger] - Merging 4 sorted segments

2016-12-12 15:12:38,253 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 3 segments left of total size: 757755 bytes

2016-12-12 15:12:38,413 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merged 4 segments, 757814 bytes to disk to satisfy reduce memory limit

2016-12-12 15:12:38,414 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 1 files, 757812 bytes from disk

2016-12-12 15:12:38,415 INFO [org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl] - Merging 0 segments, 0 bytes from memory into reduce

2016-12-12 15:12:38,415 INFO [org.apache.hadoop.mapred.Merger] - Merging 1 sorted segments

2016-12-12 15:12:38,416 INFO [org.apache.hadoop.mapred.Merger] - Down to the last merge-pass, with 1 segments left of total size: 757789 bytes

2016-12-12 15:12:38,433 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 4 / 4 copied.

2016-12-12 15:12:38,439 INFO [org.apache.hadoop.conf.Configuration.deprecation] - mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

2016-12-12 15:12:38,844 INFO [org.apache.hadoop.mapred.Task] - Task:attempt_local671371338_0001_r_000000_0 is done. And is in the process of committing

2016-12-12 15:12:38,846 INFO [org.apache.hadoop.mapred.LocalJobRunner] - 4 / 4 copied.

2016-12-12 15:12:38,846 INFO [org.apache.hadoop.mapred.Task] - Task attempt_local671371338_0001_r_000000_0 is allowed to commit now

2016-12-12 15:12:38,857 INFO [org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter] - Saved output of task 'attempt_local671371338_0001_r_000000_0' to file:/D:/Code/MyEclipseJavaCode/myMapReduce/out/weibo3/_temporary/0/task_local671371338_0001_r_000000

2016-12-12 15:12:38,861 INFO [org.apache.hadoop.mapred.LocalJobRunner] - reduce > reduce

2016-12-12 15:12:38,861 INFO [org.apache.hadoop.mapred.Task] - Task 'attempt_local671371338_0001_r_000000_0' done.

2016-12-12 15:12:38,861 INFO [org.apache.hadoop.mapred.LocalJobRunner] - Finishing task: attempt_local671371338_0001_r_000000_0

2016-12-12 15:12:38,862 INFO [org.apache.hadoop.mapred.LocalJobRunner] - reduce task executor complete.

2016-12-12 15:12:39,724 INFO [org.apache.hadoop.mapreduce.Job] - map 100% reduce 100%

2016-12-12 15:12:39,726 INFO [org.apache.hadoop.mapreduce.Job] - Job job_local671371338_0001 completed successfully

2016-12-12 15:12:39,841 INFO [org.apache.hadoop.mapreduce.Job] - Counters: 33

File System Counters

FILE: Number of bytes read=4124093

FILE: Number of bytes written=5365498

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=23098

Map output records=23097

Map output bytes=711612

Map output materialized bytes=757830

Input split bytes=528

Combine input records=0

Combine output records=0

Reduce input groups=1065

Reduce shuffle bytes=757830

Reduce input records=23097

Reduce output records=1065

Spilled Records=46194

Shuffled Maps =4

Failed Shuffles=0

Merged Map outputs=4

GC time elapsed (ms)=30

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=2353528832

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=585564

File Output Format Counters

Bytes Written=340785

Job executed successfully

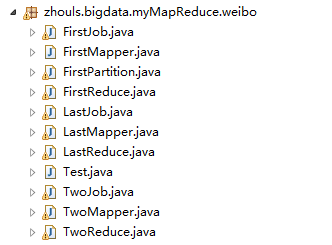

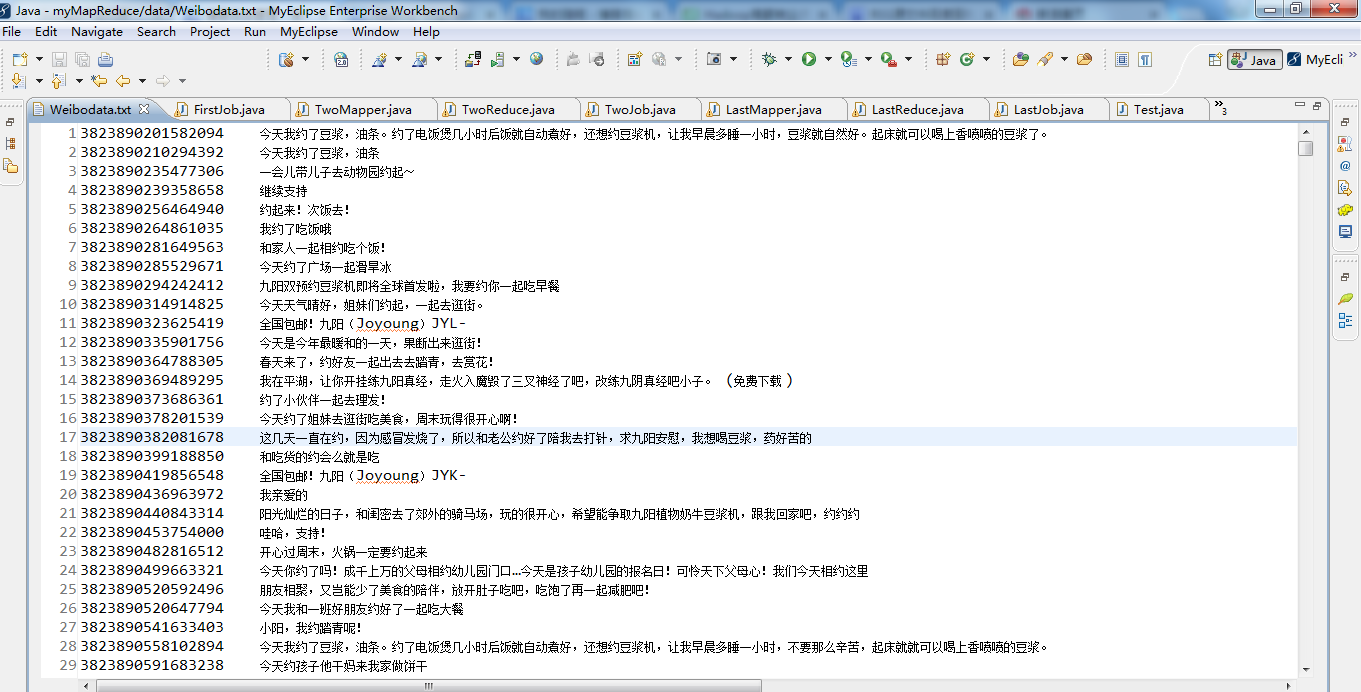

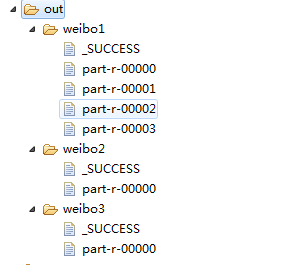

Code

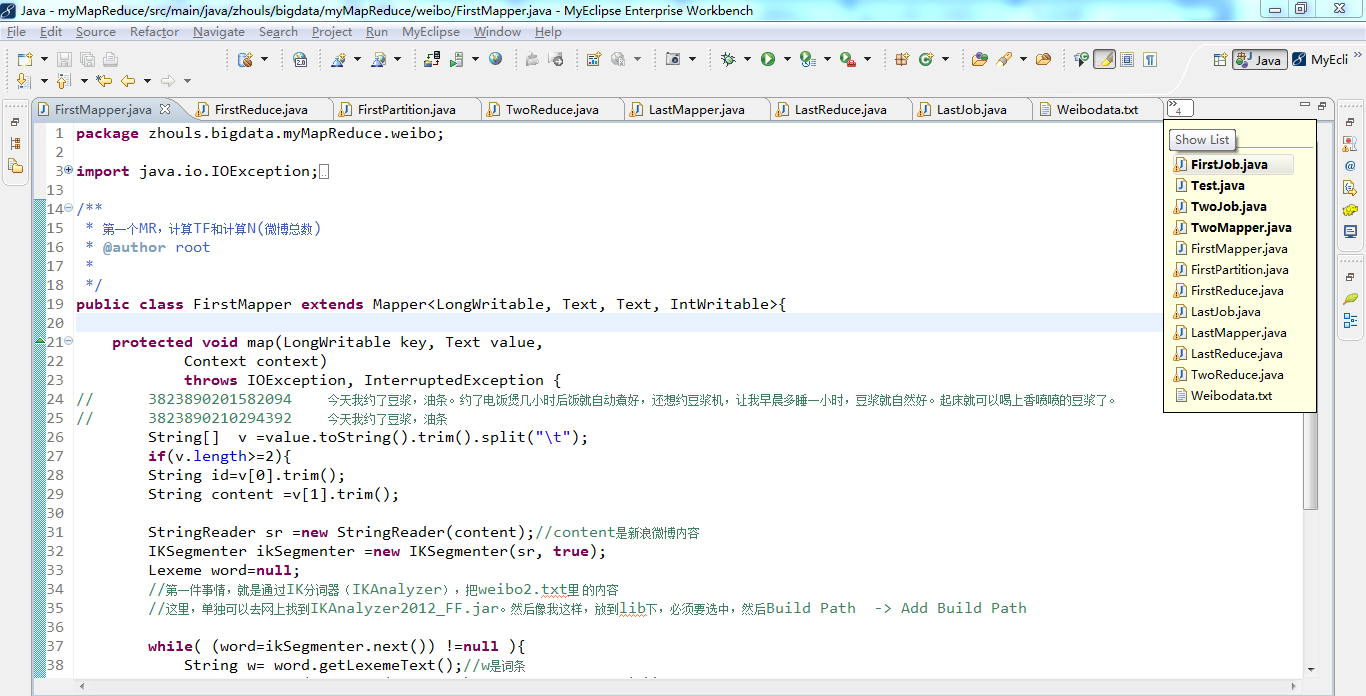

package zhouls.bigdata.myMapReduce.weibo;

import java.io.IOException;
import java.io.StringReader;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.wltea.analyzer.core.IKSegmenter;
import org.wltea.analyzer.core.Lexeme;

/**
 * First MR job: computes TF and N (the total number of Weibo posts).
 * @author root
 */
public class FirstMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        // Sample input lines (post id <TAB> post text):
        // 3823890201582094 今天我约了豆浆,油条。约了电饭煲几小时后饭就自动煮好,还想约豆浆机,让我早晨多睡一小时,豆浆就自然好。起床就可以喝上香喷喷的豆浆了。
        // 3823890210294392 今天我约了豆浆,油条
        String[] v = value.toString().trim().split("\t");
        if (v.length >= 2) {
            String id = v[0].trim();
            String content = v[1].trim();
            StringReader sr = new StringReader(content); // content is the Sina Weibo post text
            IKSegmenter ikSegmenter = new IKSegmenter(sr, true); // true = IK smart segmentation mode
            Lexeme word = null;
            // Step one: segment the content of weibo2.txt with the IK segmenter (IKAnalyzer).
            // IKAnalyzer2012_FF.jar can be downloaded separately; as I did, put it under lib,
            // select it, then choose Build Path -> Add to Build Path.
            while ((word = ikSegmenter.next()) != null) {
                String w = word.getLexemeText(); // w is the term
                context.write(new Text(w + "_" + id), new IntWritable(1));
            }
            // One "count" per post; summed, this gives N
            context.write(new Text("count"), new IntWritable(1));
        } else {
            // Malformed line: print it with a "-------------" marker so it is easy to spot.
            // TF is the frequency of a term within a single post, so a line without a post id cannot contribute.
            System.out.println(value.toString() + "-------------");
        }
    }
}
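To see what FirstMapper and FirstReduce produce together, the sketch below replays the same logic in plain Java, with no Hadoop or IK dependency. It is an illustration rather than the author's code: the class `TfSketch` is hypothetical, and whitespace splitting stands in for `IKSegmenter`, which real Chinese posts need. Each `term_postId` key ends up holding that term's frequency within one post (TF), and the `count` key ends up holding the number of well-formed posts (N).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for FirstMapper + FirstReduce, runnable without Hadoop.
// ASSUMPTION: whitespace splitting replaces IKSegmenter, which real Chinese text needs.
class TfSketch {

    // Emits the keys FirstMapper would write: "term_postId" once per occurrence,
    // plus one "count" per well-formed line. Malformed lines emit nothing.
    static List<String> mapLine(String line) {
        List<String> keys = new ArrayList<>();
        String[] v = line.trim().split("\t");
        if (v.length >= 2) {
            String id = v[0].trim();
            for (String w : v[1].trim().split("\\s+")) {
                if (!w.isEmpty()) {
                    keys.add(w + "_" + id);
                }
            }
            keys.add("count");
        }
        return keys;
    }

    // Adds 1 per emitted key, as FirstReduce does; insertion order is kept for readable output.
    static Map<String, Integer> run(List<String> lines) {
        Map<String, Integer> sums = new LinkedHashMap<>();
        for (String line : lines) {
            for (String key : mapLine(line)) {
                sums.merge(key, 1, Integer::sum);
            }
        }
        return sums;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of("1\ta b a", "2\tb c", "broken line")));
    }
}
```

Running `main` prints `{a_1=2, b_1=1, count=2, b_2=1, c_2=1}`; the third line has no tab, so it contributes nothing, just as FirstMapper only prints such lines.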

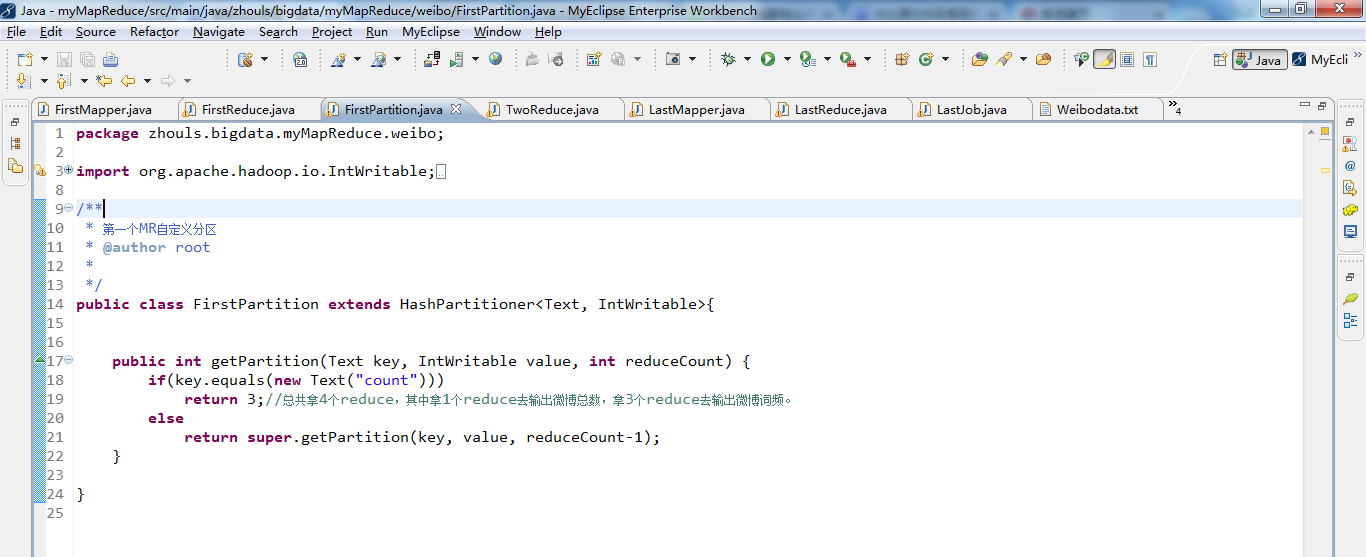

package zhouls.bigdata.myMapReduce.weibo;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.lib.partition.HashPartitioner;

/**
 * Custom partitioner for the first MR job.
 * @author root
 */
public class FirstPartition extends HashPartitioner<Text, IntWritable> {

    @Override
    public int getPartition(Text key, IntWritable value, int reduceCount) {
        if (key.equals(new Text("count"))) {
            // 4 reducers in total: 1 outputs the total number of posts, 3 output term frequencies.
            // "count" goes to the last reducer (index 3).
            return 3;
        } else {
            // Hash all term keys over the remaining reduceCount - 1 reducers
            return super.getPartition(key, value, reduceCount - 1);
        }
    }
}
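The routing rule in FirstPartition can be checked on its own. This plain-Java sketch (class name hypothetical) applies HashPartitioner's formula, `(hashCode & Integer.MAX_VALUE) % numPartitions`, to every key except `count` over the first three reducers, and sends `count` to the last one, which is why the total post count lands alone in part-r-00003. It assumes `String.hashCode()` as a stand-in for `Text.hashCode()`, so the bucket a particular term gets here will not match Hadoop's.

```java
// Hypothetical mirror of FirstPartition's routing rule in plain Java.
// ASSUMPTION: String.hashCode() stands in for Text.hashCode(), so concrete
// bucket numbers differ from Hadoop's; the rule itself is the same.
class PartitionSketch {

    static int partition(String key, int reduceCount) {
        if (key.equals("count")) {
            // FirstPartition hard-codes 3, which is reduceCount - 1 for 4 reducers
            return reduceCount - 1;
        }
        // HashPartitioner's formula, applied over the first reduceCount - 1 reducers
        return (key.hashCode() & Integer.MAX_VALUE) % (reduceCount - 1);
    }

    public static void main(String[] args) {
        String[] keys = {"count", "soymilk_3823890210294392", "youtiao_3823890210294392"};
        for (String k : keys) {
            System.out.println(k + " -> part-r-0000" + partition(k, 4));
        }
    }
}
```

Because `super.getPartition` is called with `reduceCount - 1`, term keys can never hash into the reserved last partition.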

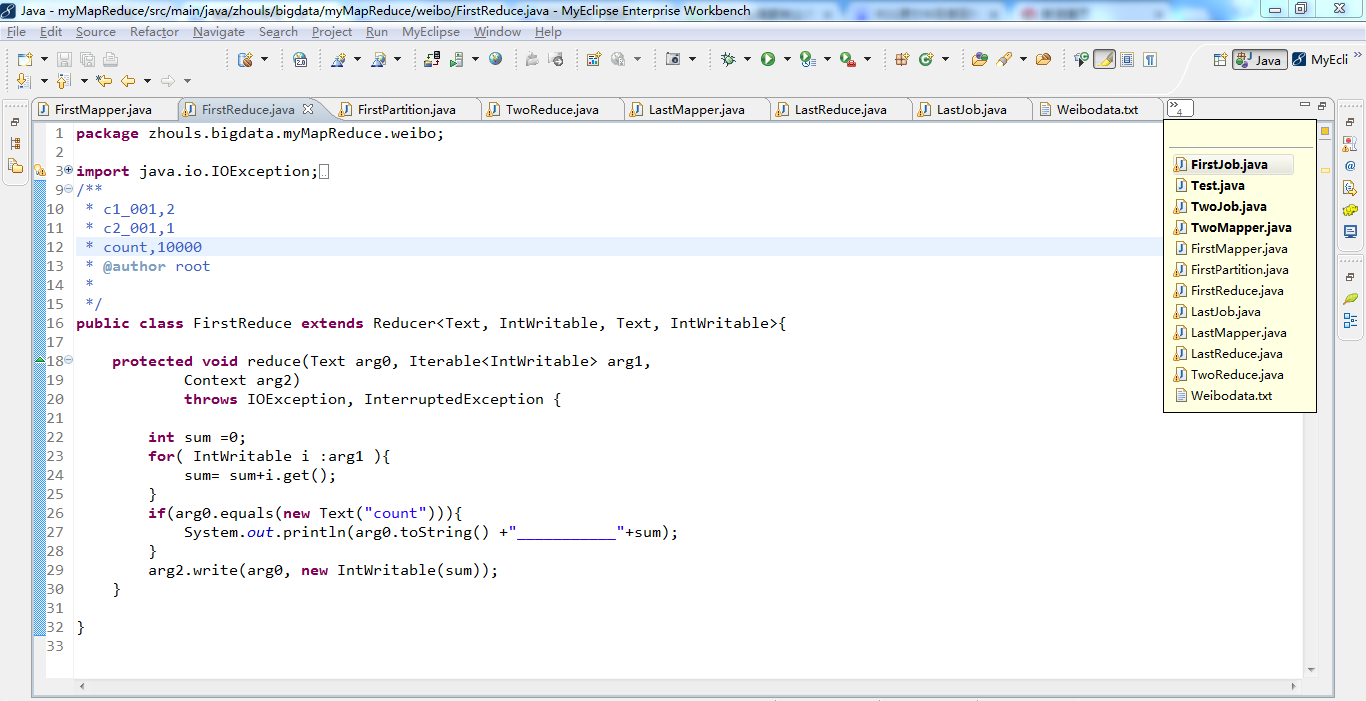

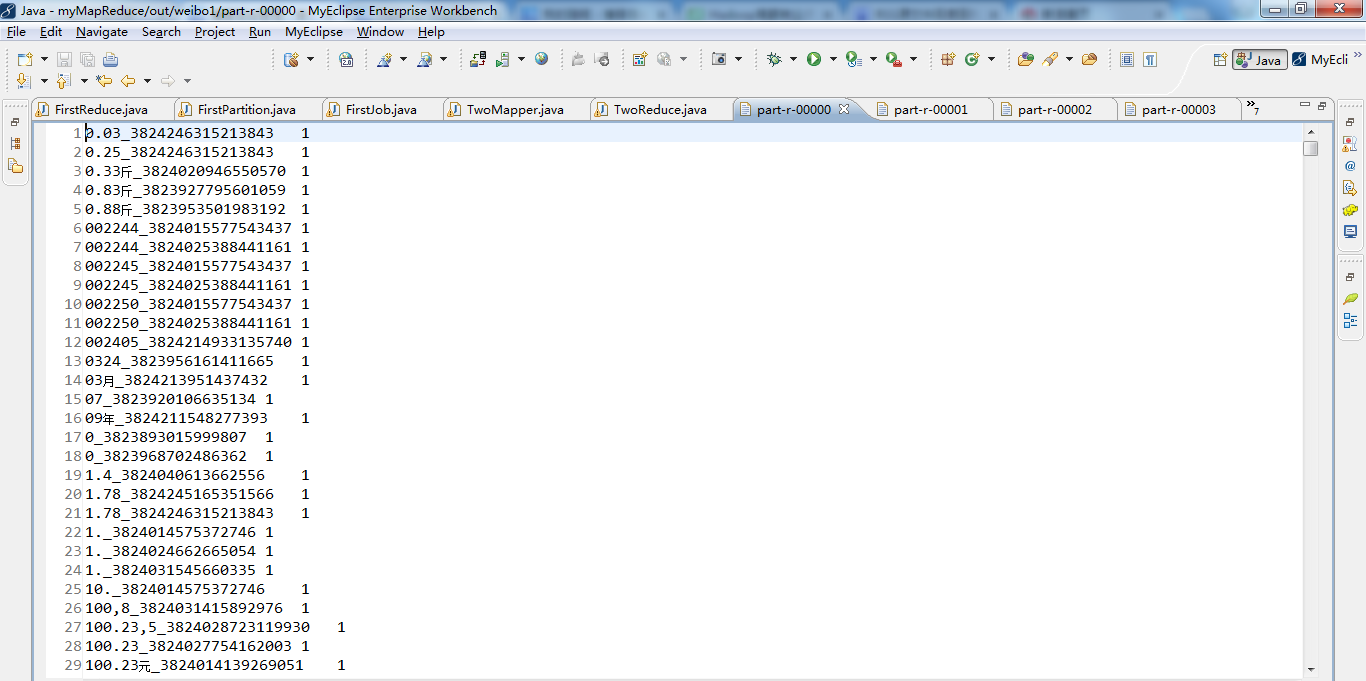

package zhouls.bigdata.myMapReduce.weibo;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

/**
 * Sums the counts per key. Sample output:
 * c1_001,2
 * c2_001,1
 * count,10000
 * @author root
 */
public class FirstReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable i : values) {
            sum = sum + i.get();
        }
        if (key.equals(new Text("count"))) {
            System.out.println(key.toString() + "___________" + sum);
        }
        context.write(key, new IntWritable(sum));
    }
}
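FirstJob registers FirstReduce both as the reducer and as the combiner. That is safe because addition is associative and commutative: summing each map task's output locally and then summing those partial sums gives the same totals as one global sum. A minimal plain-Java sketch of that argument (names hypothetical):

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: why a summing reducer can double as the combiner.
class CombinerSketch {

    // Combiner step: sum the 1s emitted by a single map task.
    static Map<String, Integer> combine(List<String> mapOutputKeys) {
        Map<String, Integer> partial = new HashMap<>();
        for (String key : mapOutputKeys) {
            partial.merge(key, 1, Integer::sum);
        }
        return partial;
    }

    // Reduce step: add up the partial sums coming from all map tasks.
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> total = new HashMap<>();
        for (Map<String, Integer> partial : partials) {
            partial.forEach((key, value) -> total.merge(key, value, Integer::sum));
        }
        return total;
    }

    public static void main(String[] args) {
        List<String> task1 = List.of("a_1", "a_1", "count");
        List<String> task2 = List.of("b_2", "count");

        Map<String, Integer> withCombiner = reduce(List.of(combine(task1), combine(task2)));

        List<String> all = new ArrayList<>(task1);
        all.addAll(task2);
        Map<String, Integer> withoutCombiner = combine(all); // one global sum, no map-side step

        System.out.println(withCombiner.equals(withoutCombiner)); // prints true
    }
}
```

The same shortcut does not work for every reducer; one that computed an average, for example, could not be reused unchanged as its own combiner.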

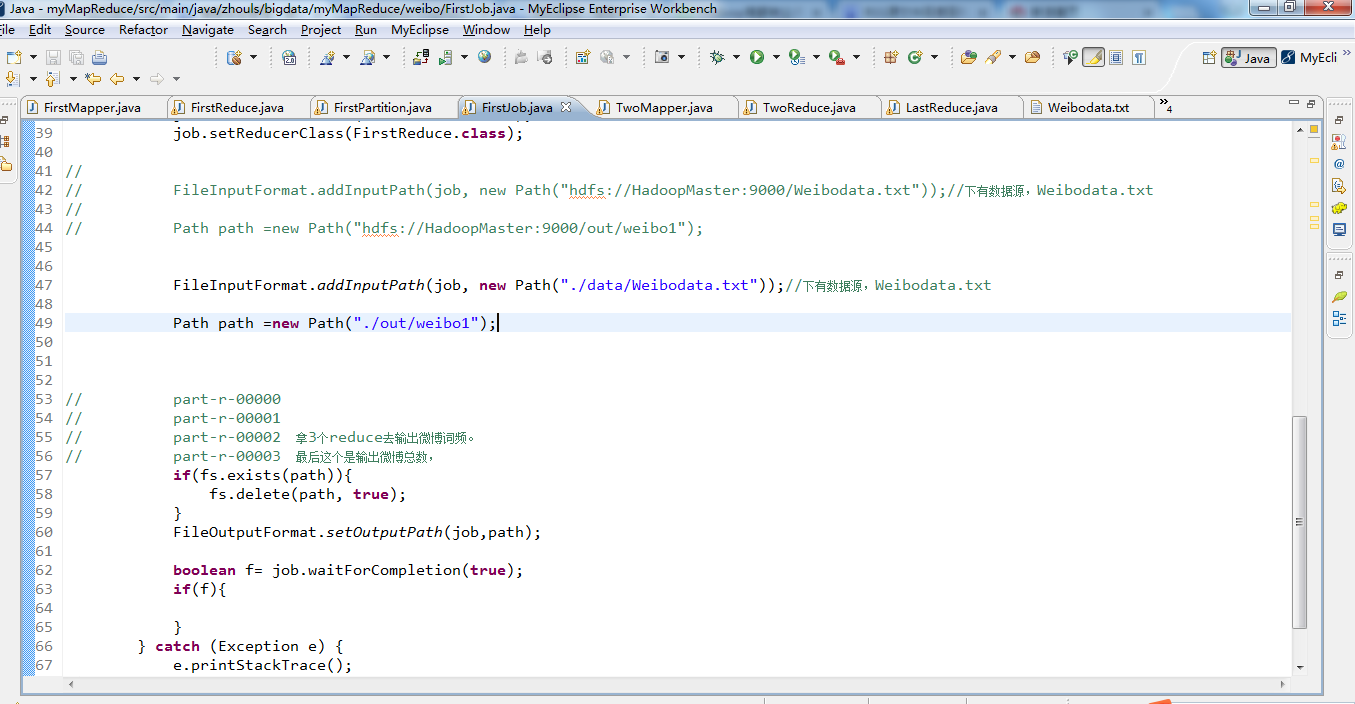

package zhouls.bigdata.myMapReduce.weibo; import java.io.IOException; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapred.JobConf;

import org.apache.hadoop.mapred.TextInputFormat;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; public class FirstJob { public static void main(String[] args) {

Configuration config =new Configuration();

// config.set("fs.defaultFS", "hdfs://HadoopMaster:9000");

// config.set("yarn.resourcemanager.hostname", "HadoopMaster");

try {

FileSystem fs =FileSystem.get(config);

// JobConf job =new JobConf(config);

Job job =Job.getInstance(config);

job.setJarByClass(FirstJob.class);

job.setJobName("weibo1"); job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

// job.setMapperClass();

job.setNumReduceTasks(4);

job.setPartitionerClass(FirstPartition.class);

job.setMapperClass(FirstMapper.class);

job.setCombinerClass(FirstReduce.class);

job.setReducerClass(FirstReduce.class); //

// FileInputFormat.addInputPath(job, new Path("hdfs://HadoopMaster:9000/Weibodata.txt"));//下有数据源,Weibodata.txt

//

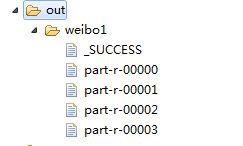

// Path path =new Path("hdfs://HadoopMaster:9000/out/weibo1"); FileInputFormat.addInputPath(job, new Path("./data/weibo/Weibodata.txt"));//下有数据源,Weibodata.txt Path path =new Path("./out/weibo1"); // part-r-00000

// part-r-00001

// part-r-00002 拿3个reduce去输出微博词频。

// part-r-00003 最后这个是输出微博总数,

if(fs.exists(path)){

fs.delete(path, true);

}

FileOutputFormat.setOutputPath(job,path); boolean f= job.waitForCompletion(true);

if(f){ }

} catch (Exception e) {

e.printStackTrace();

}

}

}
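In the runs logged above, each job was launched separately (each run gets a fresh `job_local..._0001` ID), and the empty `if (f) { }` in FirstJob is where the next job could be started instead. The plain-Java sketch below shows that chaining pattern under the assumption that each stage stands in for one `job.waitForCompletion(true)` call; `ChainSketch` and `runChain` are hypothetical names, not Hadoop APIs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;

// Hypothetical driver pattern: each stage stands in for one job.waitForCompletion(true)
// call, and a stage only runs if every earlier stage succeeded.
class ChainSketch {

    // Returns the index of the first failing stage, or -1 if all stages succeed.
    static int runChain(List<BooleanSupplier> stages) {
        for (int i = 0; i < stages.size(); i++) {
            if (!stages.get(i).getAsBoolean()) {
                return i; // stop: later jobs would read missing or partial output
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<String> ran = new ArrayList<>();
        List<BooleanSupplier> stages = new ArrayList<>();
        stages.add(() -> { ran.add("weibo1"); return true; });
        stages.add(() -> { ran.add("weibo2"); return false; }); // simulated failure
        stages.add(() -> { ran.add("weibo3"); return true; });
        System.out.println(runChain(stages) + " " + ran); // prints 1 [weibo1, weibo2]
    }
}
```

For larger pipelines, Hadoop's `org.apache.hadoop.mapreduce.lib.jobcontrol` package (`JobControl`, `ControlledJob`) can track dependencies between jobs instead of hand-written `if` chains.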

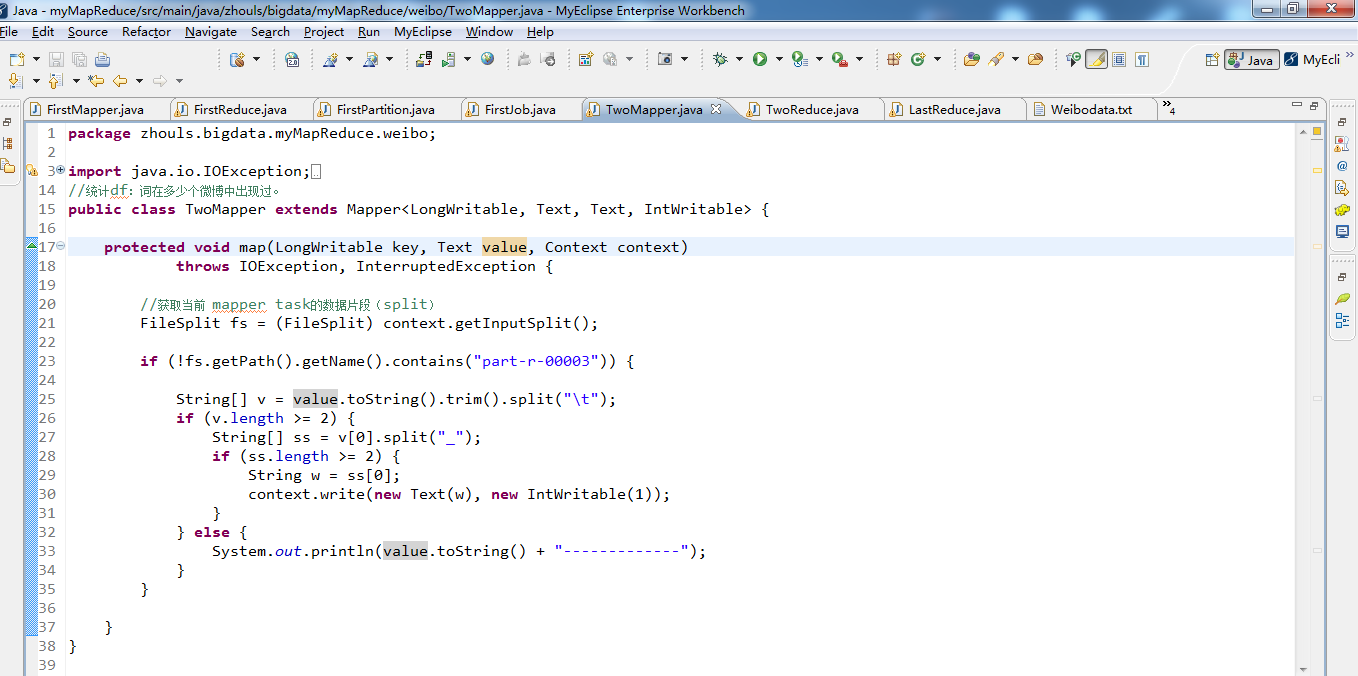

package zhouls.bigdata.myMapReduce.weibo;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;

// Computes DF: the number of Weibo posts each term appears in.
public class TwoMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        // Get the input split (data fragment) this map task is processing
        FileSplit fs = (FileSplit) context.getInputSplit();
        // Skip part-r-00003: it only holds the total post count written by the first job
        if (!fs.getPath().getName().contains("part-r-00003")) {
            // Input line from the first job: term_postId <TAB> tf
            String[] v = value.toString().trim().split("\t");
            if (v.length >= 2) {
                // Split on the last '_' so a term that itself contains '_' stays intact
                int sep = v[0].lastIndexOf('_');
                if (sep > 0) {
                    String w = v[0].substring(0, sep);
                    context.write(new Text(w), new IntWritable(1));
                }
            } else {
                System.out.println(value.toString() + "-------------");
            }
        }
    }
}

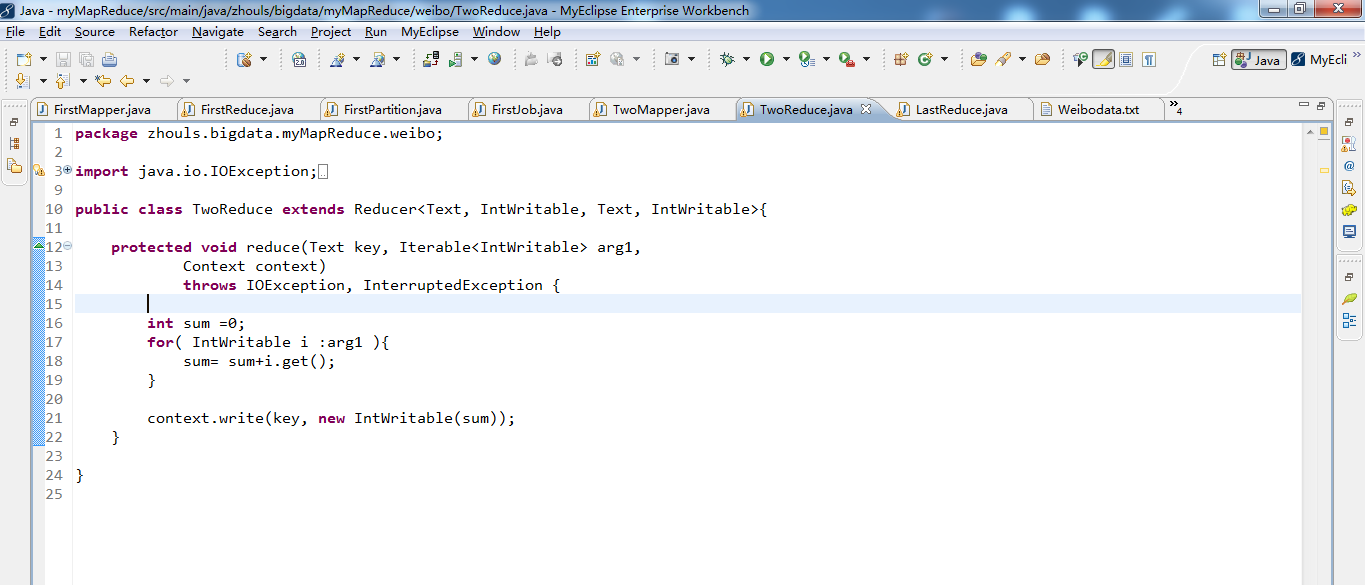

```java
package zhouls.bigdata.myMapReduce.weibo;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

// Sums the 1s emitted by TwoMapper to get each word's DF; also used as the combiner.
public class TwoReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable i : values) {
            sum += i.get();
        }
        context.write(key, new IntWritable(sum));
    }
}
```
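TwoMapper and TwoReduce together count, for each word, how many distinct weibos contain it. Because job 1 writes exactly one `word_id<TAB>tf` line per (word, weibo) pair, counting lines per word gives the DF. A plain-Java, in-memory sketch of the same computation (no Hadoop), assuming input lines in the format TwoMapper parses; the class name and sample data are hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory sketch of job 2 (TwoMapper + TwoReduce): document frequency per word.
final class DfSketch {

    static Map<String, Integer> documentFrequency(List<String> tfLines) {
        Map<String, Integer> df = new TreeMap<>();
        for (String line : tfLines) {
            String[] v = line.trim().split("\t");
            if (v.length < 2) {
                continue; // TwoMapper prints and skips such lines
            }
            String[] ss = v[0].split("_");
            if (ss.length < 2) {
                continue;
            }
            // "map" emits (word, 1); "reduce" sums the ones for each word
            df.merge(ss[0], 1, Integer::sum);
        }
        return df;
    }

    public static void main(String[] args) {
        List<String> tf = List.of(
                "hadoop_1001\t2",
                "hadoop_1002\t1",
                "spark_1002\t3");
        System.out.println(documentFrequency(tf)); // {hadoop=2, spark=1}
    }
}
```

The TF value itself is ignored at this stage: a word that appears 2 times in one weibo still contributes only 1 to its DF.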

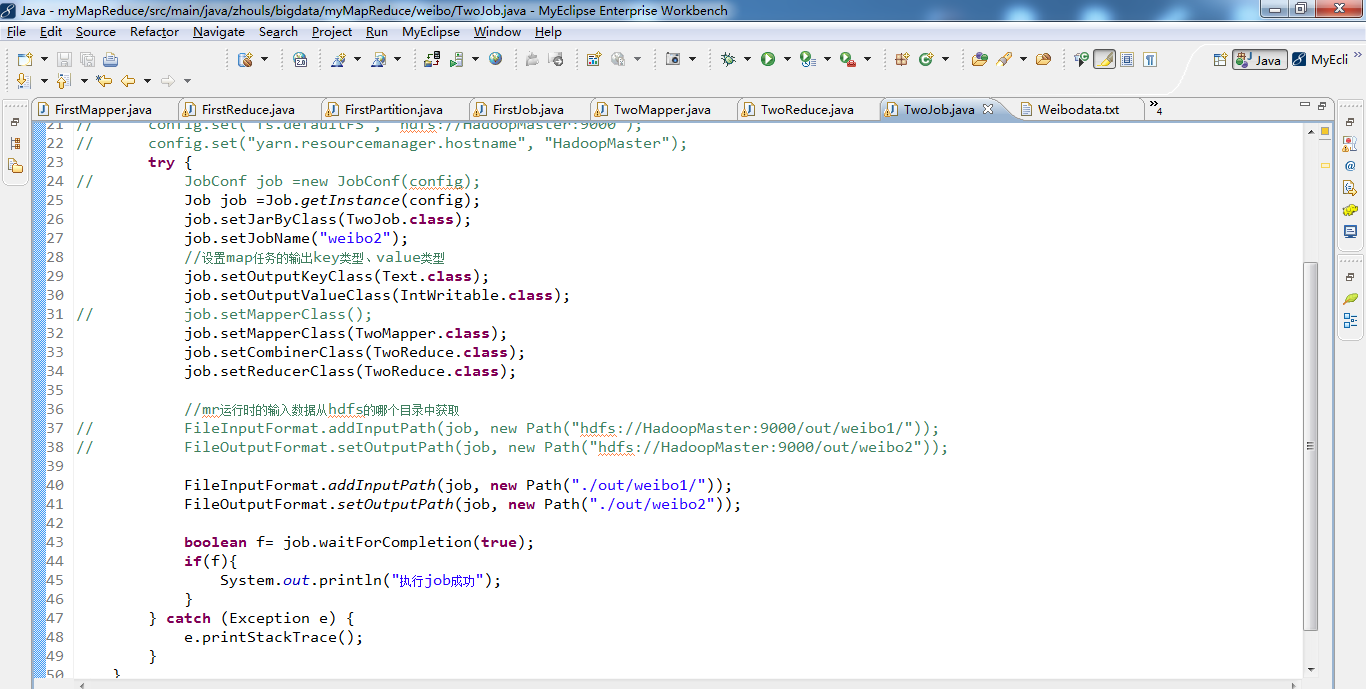

```java
package zhouls.bigdata.myMapReduce.weibo;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class TwoJob {

    public static void main(String[] args) {
        Configuration config = new Configuration();
        // config.set("fs.defaultFS", "hdfs://HadoopMaster:9000");
        // config.set("yarn.resourcemanager.hostname", "HadoopMaster");
        try {
            FileSystem fs = FileSystem.get(config);
            // JobConf job = new JobConf(config);
            Job job = Job.getInstance(config);
            job.setJarByClass(TwoJob.class);
            job.setJobName("weibo2");

            // Output key/value types; they also apply to the map output,
            // since no separate map output types are set
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            job.setMapperClass(TwoMapper.class);
            job.setCombinerClass(TwoReduce.class);
            job.setReducerClass(TwoReduce.class);

            // Input: the output directory of job 1 (on the cluster, the HDFS paths below)
            // FileInputFormat.addInputPath(job, new Path("hdfs://HadoopMaster:9000/out/weibo1/"));
            // FileOutputFormat.setOutputPath(job, new Path("hdfs://HadoopMaster:9000/out/weibo2"));
            FileInputFormat.addInputPath(job, new Path("./out/weibo1/"));

            // Delete a previous output directory; the job fails if it already exists
            Path outpath = new Path("./out/weibo2");
            if (fs.exists(outpath)) {
                fs.delete(outpath, true);
            }
            FileOutputFormat.setOutputPath(job, outpath);

            boolean f = job.waitForCompletion(true);
            if (f) {
                System.out.println("Job completed successfully");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

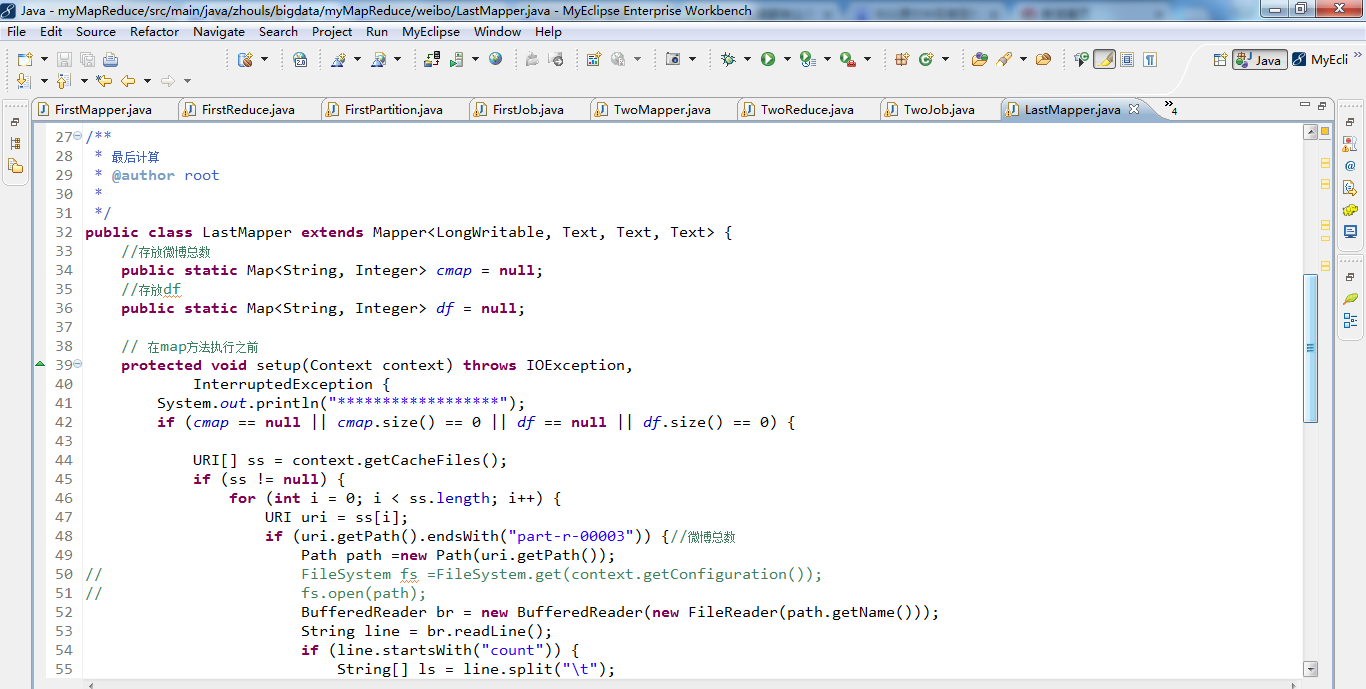

```java
package zhouls.bigdata.myMapReduce.weibo;

import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.net.URI;
import java.text.NumberFormat;
import java.util.HashMap;
import java.util.Map;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;

/**
 * Final step: computes the TF-IDF weight of each word in each weibo.
 *
 * @author root
 */
public class LastMapper extends Mapper<LongWritable, Text, Text, Text> {

    // Total number of weibos
    public static Map<String, Integer> cmap = null;

    // DF of each word
    public static Map<String, Integer> df = null;

    // Runs once before map() is called: loads the cached files into memory
    @Override
    protected void setup(Context context) throws IOException, InterruptedException {
        System.out.println("******************");
        if (cmap == null || cmap.size() == 0 || df == null || df.size() == 0) {
            URI[] ss = context.getCacheFiles();
            if (ss != null) {
                for (URI uri : ss) {
                    if (uri.getPath().endsWith("part-r-00003")) { // total weibo count
                        Path path = new Path(uri.getPath());
                        // FileSystem fs = FileSystem.get(context.getConfiguration());
                        // fs.open(path);
                        // Relies on the cached file being available in the task's
                        // working directory under its file name
                        try (BufferedReader br = new BufferedReader(new FileReader(path.getName()))) {
                            String line = br.readLine();
                            if (line != null && line.startsWith("count")) {
                                String[] ls = line.split("\t");
                                cmap = new HashMap<String, Integer>();
                                cmap.put(ls[0], Integer.parseInt(ls[1].trim()));
                            }
                        }
                    } else if (uri.getPath().endsWith("part-r-00000")) { // DF of each word
                        df = new HashMap<String, Integer>();
                        Path path = new Path(uri.getPath());
                        try (BufferedReader br = new BufferedReader(new FileReader(path.getName()))) {
                            String line;
                            while ((line = br.readLine()) != null) {
                                String[] ls = line.split("\t");
                                df.put(ls[0], Integer.parseInt(ls[1].trim()));
                            }
                        }
                    }
                }
            }
        }
    }

    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        FileSplit fs = (FileSplit) context.getInputSplit();
        // Skip the total-count file; every other line is "word_id<TAB>tf"
        if (!fs.getPath().getName().contains("part-r-00003")) {
            String[] v = value.toString().trim().split("\t");
            if (v.length >= 2) {
                int tf = Integer.parseInt(v[1].trim()); // TF value
                String[] ss = v[0].split("_");
                if (ss.length >= 2) {
                    String w = ss[0];
                    String id = ss[1];
                    // Cast to double: dividing two Integers would truncate N / df before the log
                    double s = tf * Math.log((double) cmap.get("count") / df.get(w));
                    NumberFormat nf = NumberFormat.getInstance();
                    nf.setMaximumFractionDigits(5);
                    context.write(new Text(id), new Text(w + ":" + nf.format(s)));
                }
            } else {
                System.out.println(value.toString() + "-------------");
            }
        }
    }
}
```
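The core of LastMapper is one line of arithmetic per record: weight = tf × ln(N / df). N / df must be computed in floating point; with two `Integer` operands Java performs integer division and truncates the ratio before the logarithm. A plain-Java sketch of the per-line step with no Hadoop types; the class and method names are hypothetical, and the `Map` argument stands in for the DF table that `setup()` loads from part-r-00000.

```java
import java.text.NumberFormat;
import java.util.Map;

// Plain-Java sketch of LastMapper's per-record step (hypothetical names, no Hadoop types).
// Input line: "word_id<TAB>tf"; output: {id, "word:weight"} with weight = tf * ln(N / df).
final class TfIdfSketch {

    /** TF-IDF weight; the cast keeps N / df from being truncated by integer division. */
    static double weight(int tf, int totalWeibos, int df) {
        return tf * Math.log((double) totalWeibos / df);
    }

    /** Returns {id, "word:weight"}, or null for a line LastMapper would skip. */
    static String[] transform(String line, int totalWeibos, Map<String, Integer> df) {
        String[] v = line.trim().split("\t");
        if (v.length < 2) {
            return null;
        }
        String[] ss = v[0].split("_");
        if (ss.length < 2) {
            return null;
        }
        int tf = Integer.parseInt(v[1].trim());
        double w = weight(tf, totalWeibos, df.get(ss[0]));
        NumberFormat nf = NumberFormat.getInstance(); // locale-dependent formatting
        nf.setMaximumFractionDigits(5);
        return new String[] {ss[1], ss[0] + ":" + nf.format(w)};
    }

    public static void main(String[] args) {
        // Hypothetical values: N = 1056 weibos, "hadoop" occurs in 5 of them
        String[] out = transform("hadoop_1001\t34", 1056, Map.of("hadoop", 5));
        System.out.println(out[0] + "\t" + out[1]);
    }
}
```

Note that a word whose DF equals N gets weight 0, since ln(1) = 0: a word present in every weibo carries no distinguishing information.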

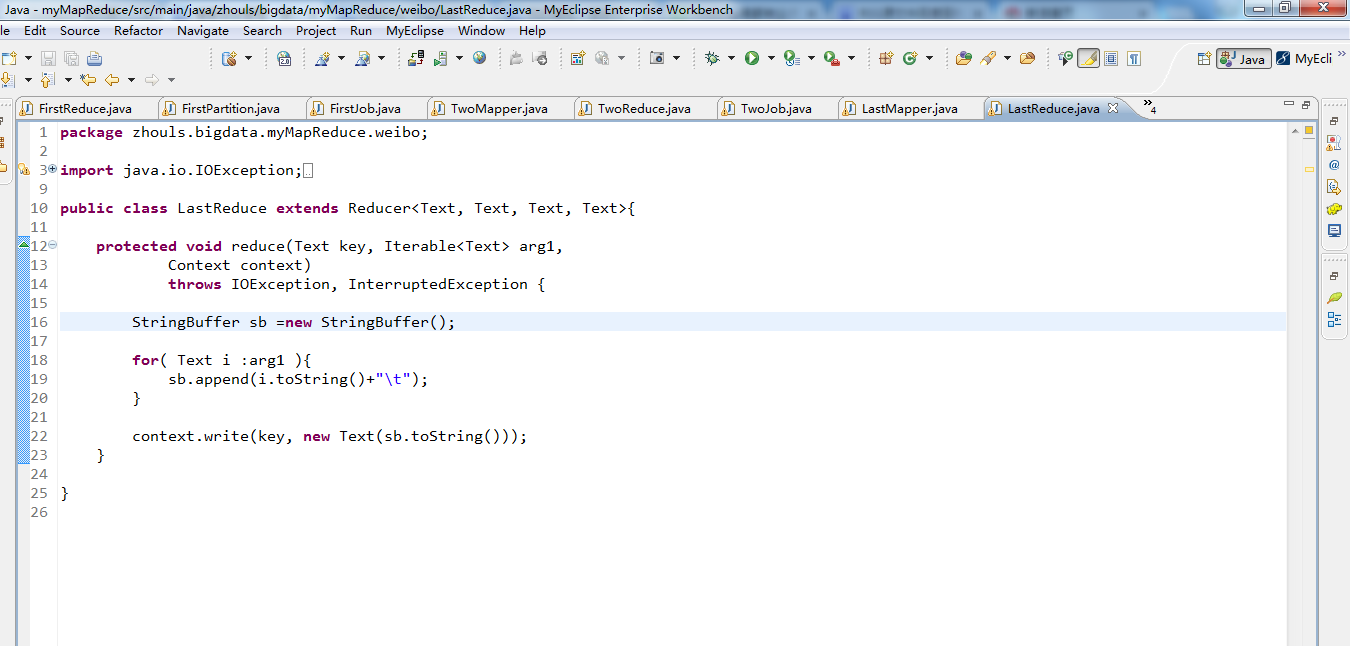

```java
package zhouls.bigdata.myMapReduce.weibo;

import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

// Concatenates all "word:weight" entries of one weibo id into a single tab-separated line
public class LastReduce extends Reducer<Text, Text, Text, Text> {

    @Override
    protected void reduce(Text key, Iterable<Text> values, Context context)
            throws IOException, InterruptedException {
        StringBuilder sb = new StringBuilder();
        for (Text i : values) {
            sb.append(i.toString()).append("\t");
        }
        context.write(key, new Text(sb.toString()));
    }
}
```

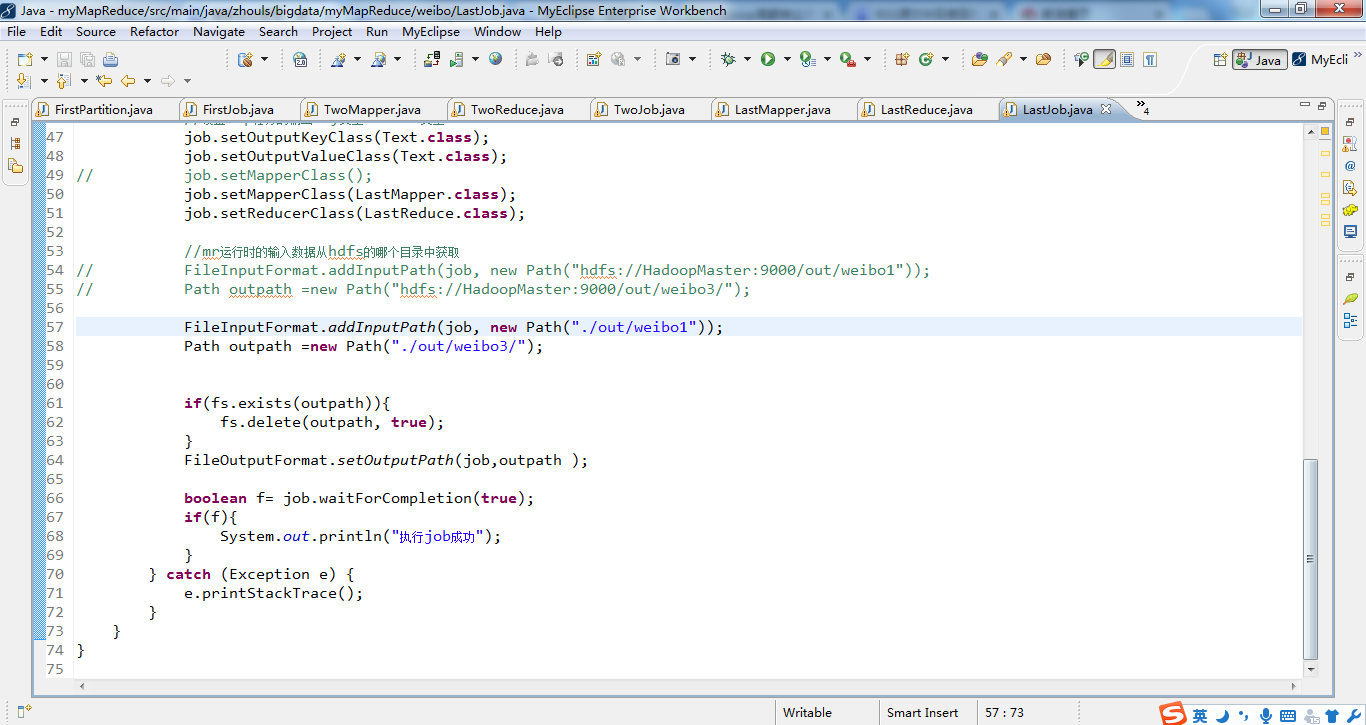

```java
package zhouls.bigdata.myMapReduce.weibo;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class LastJob {

    public static void main(String[] args) {
        Configuration config = new Configuration();
        // config.set("fs.defaultFS", "hdfs://HadoopMaster:9000");
        // config.set("yarn.resourcemanager.hostname", "HadoopMaster");
        // config.set("mapred.jar", "C:\\Users\\Administrator\\Desktop\\weibo3.jar");
        try {
            FileSystem fs = FileSystem.get(config);
            // JobConf job = new JobConf(config);
            Job job = Job.getInstance(config);
            job.setJarByClass(LastJob.class);
            job.setJobName("weibo3");

            // Old API: DistributedCache.addCacheFile(uri, conf);
            // 2.5 (Hadoop 2.x API): use Job.addCacheFile instead
            // Load the total weibo count into memory
            // job.addCacheFile(new Path("hdfs://HadoopMaster:9000/out/weibo1/part-r-00003").toUri());
            // Load the DF values into memory
            // job.addCacheFile(new Path("hdfs://HadoopMaster:9000/out/weibo2/part-r-00000").toUri());
            job.addCacheFile(new Path("./out/weibo1/part-r-00003").toUri());
            job.addCacheFile(new Path("./out/weibo2/part-r-00000").toUri());

            // Output key/value types (also used for the map output)
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            job.setMapperClass(LastMapper.class);
            job.setReducerClass(LastReduce.class);

            // Input: job 1's output directory (LastMapper skips part-r-00003)
            // FileInputFormat.addInputPath(job, new Path("hdfs://HadoopMaster:9000/out/weibo1"));
            // Path outpath = new Path("hdfs://HadoopMaster:9000/out/weibo3/");
            FileInputFormat.addInputPath(job, new Path("./out/weibo1"));
            Path outpath = new Path("./out/weibo3/");
            if (fs.exists(outpath)) {
                fs.delete(outpath, true);
            }
            FileOutputFormat.setOutputPath(job, outpath);

            boolean f = job.waitForCompletion(true);
            if (f) {
                System.out.println("Job completed successfully");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```
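Each of the three drivers has its own `main`, so the jobs are run one after another by hand: TwoJob reads `./out/weibo1`, and LastJob reads `./out/weibo1` plus cached files from both earlier outputs. A single combined driver would need the control flow sketched below: run the stages in order and stop at the first failure, since later stages depend on earlier output directories. The stages are hypothetical `BooleanSupplier` stand-ins; in a real driver each would wrap `job.waitForCompletion(true)` and handle its checked exceptions. Hadoop also ships `JobControl` and `ControlledJob` (package `org.apache.hadoop.mapreduce.lib.jobcontrol`) for declaring such dependencies; they are not used here.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.BooleanSupplier;

// Sequential job chain: stages run in insertion order and the chain stops at the first
// failure, because later stages read directories written by earlier ones.
final class JobChainSketch {

    /** Runs stages in order; returns the name of the first failed stage, or null if all succeeded. */
    static String runInOrder(Map<String, BooleanSupplier> stages) {
        for (Map.Entry<String, BooleanSupplier> stage : stages.entrySet()) {
            System.out.println("Running " + stage.getKey());
            if (!stage.getValue().getAsBoolean()) {
                return stage.getKey();
            }
        }
        return null;
    }

    public static void main(String[] args) {
        Map<String, BooleanSupplier> stages = new LinkedHashMap<>();
        // Hypothetical stand-ins; a real driver would call e.g. weibo1Job.waitForCompletion(true)
        stages.put("weibo1 (TF + total count)", () -> true);
        stages.put("weibo2 (DF)", () -> true);
        stages.put("weibo3 (TF-IDF)", () -> true);
        String failed = runInOrder(stages);
        System.out.println(failed == null ? "All jobs succeeded" : "Stopped at " + failed);
    }
}
```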

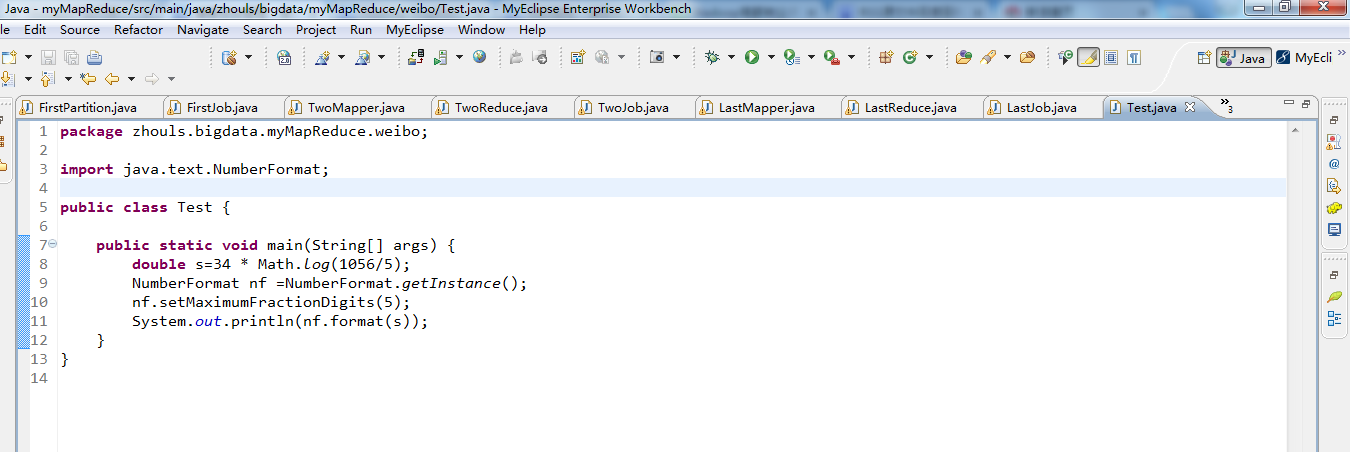

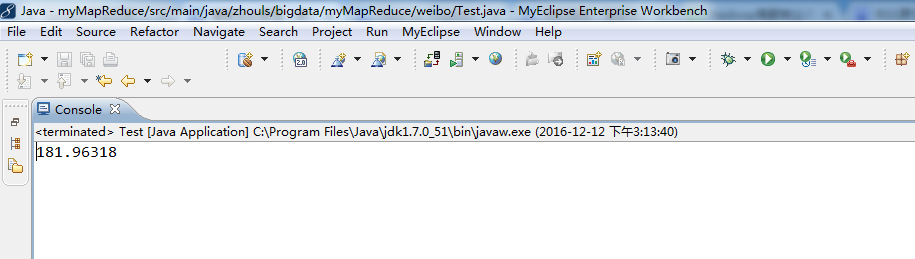

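```java
package zhouls.bigdata.myMapReduce.weibo;

import java.text.NumberFormat;

// Quick check of the TF-IDF formula used in LastMapper: tf = 34, N = 1056, df = 5
public class Test {

    public static void main(String[] args) {
        // 1056.0 forces floating-point division; 1056 / 5 would truncate to 211
        double s = 34 * Math.log(1056.0 / 5);
        NumberFormat nf = NumberFormat.getInstance();
        nf.setMaximumFractionDigits(5);
        System.out.println(nf.format(s));
    }
}
```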
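LastMapper weighs each word as tf times the natural logarithm of N / df, where N is the total weibo count (the `count` line in part-r-00003) and df comes from job 2. Test plugs in tf = 34, N = 1056, df = 5. Worked through, together with what truncating 1056 / 5 to an integer would give:

$$
w = tf \cdot \ln\frac{N}{df} = 34 \cdot \ln\frac{1056}{5} = 34 \cdot \ln 211.2 \approx 34 \times 5.35281 \approx 182.00
$$

$$
34 \cdot \ln 211 \approx 34 \times 5.35186 \approx 181.96 \qquad (1056/5 \text{ truncated to } 211)
$$

The difference is small here because 1056 / 5 loses only 0.2, but for a word with a large df the truncated ratio can be off by almost 1, which matters when N / df is close to 1.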
