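Spark Standalone Mode: Starting Spark on a Single Machine -- Learning the Distributed Computing System Spark (Part 1)

What is Spark?

Spark is a general-purpose parallel computing framework developed by the AMP Lab at UC Berkeley. It can run in several modes:

- Local mode

- Standalone mode

- Mesos mode

- YARN mode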

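1. Download and install

http://spark.apache.org/downloads.html

You can either download the source and compile it yourself, or download a pre-built package (Spark runs on the JVM, so it is effectively cross-platform). Here we download the pre-built binaries directly.

Choose a package type: Pre-built for Hadoop 2.4 and later.

Simply extract spark-1.3.0-bin-hadoop2.4.tgz.

PS: You need a Java runtime environment installed.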

~/project/spark-1.3.0-bin-hadoop2.4 $java -version

java version "1.8.0_25"

Java(TM) SE Runtime Environment (build 1.8.0_25-b17)

Java HotSpot(TM) 64-Bit Server VM (build 25.25-b02, mixed mode)

2. Directory layout

The sbin directory contains the various start and stop scripts:

~/project/spark-1.3.0-bin-hadoop2.4 $tree sbin/

sbin/

├── slaves.sh

├── spark-config.sh

├── spark-daemon.sh

├── spark-daemons.sh

├── start-all.sh

├── start-history-server.sh

├── start-master.sh

├── start-slave.sh

├── start-slaves.sh

├── start-thriftserver.sh

├── stop-all.sh

├── stop-history-server.sh

├── stop-master.sh

├── stop-slaves.sh

└── stop-thriftserver.sh

The conf directory contains configuration templates:

~/project/spark-1.3.0-bin-hadoop2.4 $tree conf/

conf/

├── fairscheduler.xml.template

├── log4j.properties.template

├── metrics.properties.template

├── slaves.template

├── spark-defaults.conf.template

└── spark-env.sh.template

3. Start the master

~/project/spark-1.3.0-bin-hadoop2.4 $./sbin/start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /Users/qpzhang/project/spark-1.3.0-bin-hadoop2.4/sbin/../logs/spark-qpzhang-org.apache.spark.deploy.master.Master-1-qpzhangdeMac-mini.local.out

With no configuration at all, the defaults are used. The log file shows the output:

~/project/spark-1.3.0-bin-hadoop2.4 $cat logs/spark-qpzhang-org.apache.spark.deploy.master.Master-1-qpzhangdeMac-mini.local.out
Spark assembly has been built with Hive, including Datanucleus jars on classpath
Spark Command: /Library/Java/JavaVirtualMachines/jdk1..0_25.jdk/Contents/Home/bin/java -cp :/Users/qpzhang/project/spark-1.3.-bin-hadoop2./sbin/../conf:/Users/qpzhang/project/spark-1.3.-bin-hadoop2./lib/spark-assembly-1.3.-hadoop2.4.0.jar:/Users/qpzhang/project/spark-1.3.-bin-hadoop2./lib/datanucleus-api-jdo-3.2..jar:/Users/qpzhang/project/spark-1.3.-bin-hadoop2./lib/datanucleus-core-3.2..jar:/Users/qpzhang/project/spark-1.3.-bin-hadoop2./lib/datanucleus-rdbms-3.2..jar -Dspark.akka.logLifecycleEvents=true -Xms512m -Xmx512m org.apache.spark.deploy.master.Master --ip qpzhangdeMac-mini.local --port --webui-port
========================================
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
// :: INFO Master: Registered signal handlers for [TERM, HUP, INT]
// :: WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
// :: INFO SecurityManager: Changing view acls to: qpzhang
// :: INFO SecurityManager: Changing modify acls to: qpzhang
// :: INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(qpzhang); users with modify permissions: Set(qpzhang)
// :: INFO Slf4jLogger: Slf4jLogger started
// :: INFO Remoting: Starting remoting
// :: INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkMaster@qpzhangdeMac-mini.local:7077]
// :: INFO Remoting: Remoting now listens on addresses: [akka.tcp://sparkMaster@qpzhangdeMac-mini.local:7077]
// :: INFO Utils: Successfully started service 'sparkMaster' on port .
// :: INFO Server: jetty-.y.z-SNAPSHOT
// :: INFO AbstractConnector: Started SelectChannelConnector@qpzhangdeMac-mini.local:
15/03/20 10:08:11 INFO Utils: Successfully started service on port 6066.
// :: INFO StandaloneRestServer: Started REST server for submitting applications on port
15/03/20 10:08:11 INFO Master: Starting Spark master at spark://qpzhangdeMac-mini.local:7077
// :: INFO Master: Running Spark version 1.3.
// :: INFO Server: jetty-.y.z-SNAPSHOT
// :: INFO AbstractConnector: Started SelectChannelConnector@0.0.0.0:
15/03/20 10:08:11 INFO Utils: Successfully started service 'MasterUI' on port 8080.
// :: INFO MasterWebUI: Started MasterWebUI at http://10.60.215.41:8080
15/03/20 10:08:11 INFO Master: I have been elected leader! New state: ALIVE

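The output carries several important pieces of information: the REST submission service on port 6066, the Spark master on port 7077, the web UI on port 8080, and so on. It also shows that the current node went through leader election and confirmed itself as leader.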
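If you would rather not read the ports off the log by eye, a few lines of script can pull them out. The following is only a minimal sketch in plain Python: the sample lines are copied from the master log above, while the regular expression and the helper name `find_service_ports` are my own assumptions for illustration and are not part of Spark.

```python
import re

# Sample lines copied from the master log above (only entries whose
# timestamp and port number are intact).
LOG_LINES = [
    "15/03/20 10:08:11 INFO Utils: Successfully started service on port 6066.",
    "15/03/20 10:08:11 INFO Master: Starting Spark master at spark://qpzhangdeMac-mini.local:7077",
    "15/03/20 10:08:11 INFO Utils: Successfully started service 'MasterUI' on port 8080.",
]

# Assumed pattern: "Successfully started service ['<name>'] on port <N>."
SERVICE_RE = re.compile(r"Successfully started service(?: '([^']+)')? on port (\d+)\.")


def find_service_ports(lines):
    """Return (service_name, port) pairs; the name is None when the log line has none."""
    found = []
    for line in lines:
        match = SERVICE_RE.search(line)
        if match:
            found.append((match.group(1), int(match.group(2))))
    return found


if __name__ == "__main__":
    for name, port in find_service_ports(LOG_LINES):
        print(name or "(unnamed service)", port)
```

The same function could be fed the lines of the real log file under logs/; lines that do not announce a service are simply skipped.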

At this point, the overall state of the master can be viewed at http://localhost:8080/.

4. Attach a worker to the master

~/project/spark-1.3.0-bin-hadoop2.4 $./bin/spark-class org.apache.spark.deploy.worker.Worker spark://qpzhangdeMac-mini.local:7077

Spark assembly has been built with Hive, including Datanucleus jars on classpath

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

// :: INFO Worker: Registered signal handlers for [TERM, HUP, INT]

// :: WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

// :: INFO SecurityManager: Changing view acls to: qpzhang

// :: INFO SecurityManager: Changing modify acls to: qpzhang

// :: INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(qpzhang); users with modify permissions: Set(qpzhang)

// :: INFO Slf4jLogger: Slf4jLogger started

// :: INFO Remoting: Starting remoting

// :: INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkWorker@10.60.215.41:60994]

// :: INFO Remoting: Remoting now listens on addresses: [akka.tcp://sparkWorker@10.60.215.41:60994]

15/03/20 10:33:50 INFO Utils: Successfully started service 'sparkWorker' on port 60994.

15/03/20 10:33:50 INFO Worker: Starting Spark worker 10.60.215.41:60994 with 8 cores, 7.0 GB RAM

// :: INFO Worker: Running Spark version 1.3.

// :: INFO Worker: Spark home: /Users/qpzhang/project/spark-1.3.-bin-hadoop2.

// :: INFO Server: jetty-.y.z-SNAPSHOT

// :: INFO AbstractConnector: Started SelectChannelConnector@0.0.0.0:

// :: INFO Utils: Successfully started service 'WorkerUI' on port .

15/03/20 10:33:50 INFO WorkerWebUI: Started WorkerWebUI at http://10.60.215.41:8081

// :: INFO Worker: Connecting to master akka.tcp://sparkMaster@qpzhangdeMac-mini.local:7077/user/Master...

// :: INFO Worker: Successfully registered with master spark://qpzhangdeMac-mini.local:7077

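The log output shows that the worker itself runs on port 60994 and also starts its own UI on port 8081; the worker's status can be viewed at http://10.60.215.41:8081. (It has to be said that any distributed system worth choosing needs a UI for monitoring status.)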
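The worker finds the master through the spark://host:port address passed on the command line. As a small sanity check before starting a worker, the address can be split into its parts. This is a sketch in plain Python using the standard library's `urllib.parse`; the helper name `split_master_url` is hypothetical and not something Spark provides.

```python
from urllib.parse import urlparse


def split_master_url(url):
    """Split a spark://host:port master URL into (host, port).

    Hypothetical helper for illustration only; it is not part of Spark.
    Note that urlparse lower-cases the host name.
    """
    parsed = urlparse(url)
    if parsed.scheme != "spark" or parsed.hostname is None or parsed.port is None:
        raise ValueError("expected spark://host:port, got %r" % url)
    return parsed.hostname, parsed.port


if __name__ == "__main__":
    # The master address printed in the master log above.
    print(split_master_url("spark://qpzhangdeMac-mini.local:7077"))
```

A URL without a port, or with a different scheme such as http://, is rejected with a ValueError, which catches the most common copy-and-paste mistakes.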

5. Start the Spark shell:

~/project/spark-1.3.0-bin-hadoop2.4 $./bin/spark-shell

Spark assembly has been built with Hive, including Datanucleus jars on classpath

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

// :: INFO SecurityManager: Changing view acls to: qpzhang

// :: INFO SecurityManager: Changing modify acls to: qpzhang

// :: INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(qpzhang); users with modify permissions: Set(qpzhang)

// :: INFO HttpServer: Starting HTTP Server

// :: INFO Server: jetty-.y.z-SNAPSHOT

// :: INFO AbstractConnector: Started SocketConnector@0.0.0.0:

// :: INFO Utils: Successfully started service 'HTTP class server' on port .

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 1.3.

/_/ Using Scala version 2.10. (Java HotSpot(TM) -Bit Server VM, Java 1.8.0_25)

Type in expressions to have them evaluated.

Type :help for more information.

// :: INFO SparkContext: Running Spark version 1.3.

// :: INFO SecurityManager: Changing view acls to: qpzhang

// :: INFO SecurityManager: Changing modify acls to: qpzhang

// :: INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(qpzhang); users with modify permissions: Set(qpzhang)

// :: INFO Slf4jLogger: Slf4jLogger started

// :: INFO Remoting: Starting remoting

// :: INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@10.60.215.41:61645]

15/03/20 10:43:43 INFO Utils: Successfully started service 'sparkDriver' on port 61645.

// :: INFO SparkEnv: Registering MapOutputTracker

// :: INFO SparkEnv: Registering BlockManagerMaster

// :: INFO DiskBlockManager: Created local directory at /var/folders/2l/195zcc1n0sn2wjfjwf9hl9d80000gn/T/spark-5349b1ce-bd10-4f44--da660c1a02a3/blockmgr-a519687e-0cc3-45e4-839a-f93ac8f1397b

// :: INFO MemoryStore: MemoryStore started with capacity 265.1 MB

// :: INFO HttpFileServer: HTTP File server directory is /var/folders/2l/195zcc1n0sn2wjfjwf9hl9d80000gn/T/spark-29d81b59-ec6a--b2fb-81bf6b1d3b10/httpd-c572e4a5-ff85-44c9-a21f-71fb34b831e1

// :: INFO HttpServer: Starting HTTP Server

// :: INFO Server: jetty-.y.z-SNAPSHOT

15/03/20 10:43:44 INFO AbstractConnector: Started SocketConnector@0.0.0.0:61646

// :: INFO Utils: Successfully started service 'HTTP file server' on port .

// :: INFO SparkEnv: Registering OutputCommitCoordinator

// :: INFO Server: jetty-.y.z-SNAPSHOT

// :: INFO AbstractConnector: Started SelectChannelConnector@0.0.0.0:

// :: INFO Utils: Successfully started service 'SparkUI' on port .

15/03/20 10:43:44 INFO SparkUI: Started SparkUI at http://10.60.215.41:4040

// :: INFO Executor: Starting executor ID <driver> on host localhost

15/03/20 10:43:44 INFO Executor: Using REPL class URI: http://10.60.215.41:61644

15/03/20 10:43:44 INFO AkkaUtils: Connecting to HeartbeatReceiver: akka.tcp://sparkDriver@10.60.215.41:61645/user/HeartbeatReceiver

// :: INFO NettyBlockTransferService: Server created on

// :: INFO BlockManagerMaster: Trying to register BlockManager

15/03/20 10:43:44 INFO BlockManagerMasterActor: Registering block manager localhost:61651 with 265.1 MB RAM, BlockManagerId(<driver>, localhost, 61651)

// :: INFO BlockManagerMaster: Registered BlockManager

// :: INFO SparkILoop: Created spark context..

Spark context available as sc.

// :: INFO SparkILoop: Created sql context (with Hive support)..

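SQL context available as sqlContext.

scala>

The output again shows a pile of ports (the various services have to talk to each other, so it cannot be helped), including those for the UI, the driver, and so on. The log4j warnings say that no logging configuration was found, so the defaults are used. Note also the line "Starting executor ID <driver> on host localhost": started without a --master option, this shell runs locally; pass --master spark://qpzhangdeMac-mini.local:7077 to attach it to the master started above. How to configure things properly comes later; for now, let's get it running with the defaults and play around.

6. Issue commands from the shell

Let's run a few of the commands from the overview on the official site.

scala> val textFile = sc.textFile("README.md") // Load a data file; the path can be local, on HDFS, or elsewhere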

// :: INFO MemoryStore: ensureFreeSpace() called with curMem=, maxMem=

// :: INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 155.4 KB, free 265.0 MB)

// :: INFO MemoryStore: ensureFreeSpace() called with curMem=, maxMem=

// :: INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 22.2 KB, free 265.0 MB)

// :: INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on localhost: (size: 22.2 KB, free: 265.1 MB)

// :: INFO BlockManagerMaster: Updated info of block broadcast_0_piece0

// :: INFO SparkContext: Created broadcast from textFile at <console>:

textFile: org.apache.spark.rdd.RDD[String] = README.md MapPartitionsRDD[1] at textFile at <console>:21

scala> textFile.count() // Count the lines in the file

// :: INFO FileInputFormat: Total input paths to process :

// :: INFO SparkContext: Starting job: count at <console>:

// :: INFO DAGScheduler: Got job (count at <console>:) with output partitions (allowLocal=false)

// :: INFO DAGScheduler: Final stage: Stage (count at <console>:)

// :: INFO DAGScheduler: Parents of final stage: List()

// :: INFO DAGScheduler: Missing parents: List()

// :: INFO DAGScheduler: Submitting Stage (README.md MapPartitionsRDD[] at textFile at <console>:), which has no missing parents

// :: INFO MemoryStore: ensureFreeSpace() called with curMem=, maxMem=

// :: INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 2.6 KB, free 265.0 MB)

// :: INFO MemoryStore: ensureFreeSpace() called with curMem=, maxMem=

// :: INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 1923.0 B, free 265.0 MB)

// :: INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on localhost: (size: 1923.0 B, free: 265.1 MB)

// :: INFO BlockManagerMaster: Updated info of block broadcast_1_piece0

// :: INFO SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO DAGScheduler: Submitting missing tasks from Stage (README.md MapPartitionsRDD[] at textFile at <console>:)

// :: INFO TaskSchedulerImpl: Adding task set 0.0 with tasks

// :: INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID , localhost, PROCESS_LOCAL, bytes)

// :: INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID , localhost, PROCESS_LOCAL, bytes)

// :: INFO Executor: Running task 1.0 in stage 0.0 (TID )

// :: INFO Executor: Running task 0.0 in stage 0.0 (TID )

// :: INFO HadoopRDD: Input split: file:/Users/qpzhang/project/spark-1.3.-bin-hadoop2./README.md:+

// :: INFO HadoopRDD: Input split: file:/Users/qpzhang/project/spark-1.3.-bin-hadoop2./README.md:+

// :: INFO deprecation: mapred.tip.id is deprecated. Instead, use mapreduce.task.id

// :: INFO deprecation: mapred.task.id is deprecated. Instead, use mapreduce.task.attempt.id

// :: INFO deprecation: mapred.task.is.map is deprecated. Instead, use mapreduce.task.ismap

// :: INFO deprecation: mapred.task.partition is deprecated. Instead, use mapreduce.task.partition

// :: INFO deprecation: mapred.job.id is deprecated. Instead, use mapreduce.job.id

// :: INFO Executor: Finished task 1.0 in stage 0.0 (TID ). bytes result sent to driver

// :: INFO Executor: Finished task 0.0 in stage 0.0 (TID ). bytes result sent to driver

// :: INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID ) in ms on localhost (/)

// :: INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID ) in ms on localhost (/)

// :: INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

// :: INFO DAGScheduler: Stage (count at <console>:) finished in 0.134 s

// :: INFO DAGScheduler: Job finished: count at <console>:, took 0.254626 s

res0: Long = 98

scala> textFile.first() // Return the first item, i.e. the first line

// :: INFO SparkContext: Starting job: first at <console>:

// :: INFO DAGScheduler: Got job (first at <console>:) with output partitions (allowLocal=true)

// :: INFO DAGScheduler: Final stage: Stage (first at <console>:)

// :: INFO DAGScheduler: Parents of final stage: List()

// :: INFO DAGScheduler: Missing parents: List()

// :: INFO DAGScheduler: Submitting Stage (README.md MapPartitionsRDD[] at textFile at <console>:), which has no missing parents

// :: INFO MemoryStore: ensureFreeSpace() called with curMem=, maxMem=

// :: INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 2.6 KB, free 265.0 MB)

// :: INFO MemoryStore: ensureFreeSpace() called with curMem=, maxMem=

// :: INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 1945.0 B, free 265.0 MB)

// :: INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on localhost: (size: 1945.0 B, free: 265.1 MB)

// :: INFO BlockManagerMaster: Updated info of block broadcast_2_piece0

// :: INFO SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO DAGScheduler: Submitting missing tasks from Stage (README.md MapPartitionsRDD[] at textFile at <console>:)

// :: INFO TaskSchedulerImpl: Adding task set 1.0 with tasks

// :: INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID , localhost, PROCESS_LOCAL, bytes)

// :: INFO Executor: Running task 0.0 in stage 1.0 (TID )

// :: INFO HadoopRDD: Input split: file:/Users/qpzhang/project/spark-1.3.-bin-hadoop2./README.md:+

// :: INFO Executor: Finished task 0.0 in stage 1.0 (TID ). bytes result sent to driver

// :: INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID ) in ms on localhost (/)

// :: INFO DAGScheduler: Stage (first at <console>:) finished in 0.009 s

// :: INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

// :: INFO DAGScheduler: Job finished: first at <console>:, took 0.016292 s

res1: String = # Apache Spark

scala> val linesWithSpark = textFile.filter(line => line.contains("Spark")) // Define a filter that keeps only lines containing the keyword "Spark"

linesWithSpark: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[] at filter at <console>:

scala> linesWithSpark.count() // Count the lines that pass the filter

// :: INFO SparkContext: Starting job: count at <console>:

// :: INFO DAGScheduler: Got job (count at <console>:) with output partitions (allowLocal=false)

// :: INFO DAGScheduler: Final stage: Stage (count at <console>:)

// :: INFO DAGScheduler: Parents of final stage: List()

// :: INFO DAGScheduler: Missing parents: List()

// :: INFO DAGScheduler: Submitting Stage (MapPartitionsRDD[] at filter at <console>:), which has no missing parents

// :: INFO MemoryStore: ensureFreeSpace() called with curMem=, maxMem=

// :: INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 2.8 KB, free 265.0 MB)

// :: INFO MemoryStore: ensureFreeSpace() called with curMem=, maxMem=

// :: INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 2029.0 B, free 265.0 MB)

// :: INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on localhost: (size: 2029.0 B, free: 265.1 MB)

// :: INFO BlockManagerMaster: Updated info of block broadcast_3_piece0

// :: INFO SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO DAGScheduler: Submitting missing tasks from Stage (MapPartitionsRDD[] at filter at <console>:)

// :: INFO TaskSchedulerImpl: Adding task set 2.0 with tasks

// :: INFO TaskSetManager: Starting task 0.0 in stage 2.0 (TID , localhost, PROCESS_LOCAL, bytes)

// :: INFO TaskSetManager: Starting task 1.0 in stage 2.0 (TID , localhost, PROCESS_LOCAL, bytes)

// :: INFO Executor: Running task 0.0 in stage 2.0 (TID )

// :: INFO Executor: Running task 1.0 in stage 2.0 (TID )

// :: INFO HadoopRDD: Input split: file:/Users/qpzhang/project/spark-1.3.-bin-hadoop2./README.md:+

// :: INFO HadoopRDD: Input split: file:/Users/qpzhang/project/spark-1.3.-bin-hadoop2./README.md:+

// :: INFO Executor: Finished task 1.0 in stage 2.0 (TID ). bytes result sent to driver

// :: INFO Executor: Finished task 0.0 in stage 2.0 (TID ). bytes result sent to driver

// :: INFO TaskSetManager: Finished task 1.0 in stage 2.0 (TID ) in ms on localhost (/)

// :: INFO TaskSetManager: Finished task 0.0 in stage 2.0 (TID ) in ms on localhost (/)

// :: INFO DAGScheduler: Stage (count at <console>:) finished in 0.011 s

// :: INFO TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool

// :: INFO DAGScheduler: Job finished: count at <console>:, took 0.019407 s

res2: Long = 19 // 19 lines contain the keyword "Spark"

scala> linesWithSpark.first() // Print the first matching line

// :: INFO SparkContext: Starting job: first at <console>:

// :: INFO DAGScheduler: Got job (first at <console>:) with output partitions (allowLocal=true)

// :: INFO DAGScheduler: Final stage: Stage (first at <console>:)

// :: INFO DAGScheduler: Parents of final stage: List()

// :: INFO DAGScheduler: Missing parents: List()

// :: INFO DAGScheduler: Submitting Stage (MapPartitionsRDD[] at filter at <console>:), which has no missing parents

// :: INFO MemoryStore: ensureFreeSpace() called with curMem=, maxMem=

// :: INFO MemoryStore: Block broadcast_4 stored as values in memory (estimated size 2.8 KB, free 265.0 MB)

// :: INFO MemoryStore: ensureFreeSpace() called with curMem=, maxMem=

// :: INFO MemoryStore: Block broadcast_4_piece0 stored as bytes in memory (estimated size 2.0 KB, free 264.9 MB)

// :: INFO BlockManagerInfo: Added broadcast_4_piece0 in memory on localhost: (size: 2.0 KB, free: 265.1 MB)

// :: INFO BlockManagerMaster: Updated info of block broadcast_4_piece0

// :: INFO SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO DAGScheduler: Submitting missing tasks from Stage (MapPartitionsRDD[] at filter at <console>:)

// :: INFO TaskSchedulerImpl: Adding task set 3.0 with tasks

// :: INFO TaskSetManager: Starting task 0.0 in stage 3.0 (TID , localhost, PROCESS_LOCAL, bytes)

// :: INFO Executor: Running task 0.0 in stage 3.0 (TID )

// :: INFO HadoopRDD: Input split: file:/Users/qpzhang/project/spark-1.3.-bin-hadoop2./README.md:+

// :: INFO Executor: Finished task 0.0 in stage 3.0 (TID ). bytes result sent to driver

// :: INFO TaskSetManager: Finished task 0.0 in stage 3.0 (TID ) in ms on localhost (/)

// :: INFO DAGScheduler: Stage (first at <console>:) finished in 0.010 s

// :: INFO TaskSchedulerImpl: Removed TaskSet 3.0, whose tasks have all completed, from pool

// :: INFO DAGScheduler: Job finished: first at <console>:, took 0.016494 s

res3: String = # Apache Spark

For more commands, see: https://spark.apache.org/docs/latest/quick-start.html
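One detail in the session above is easy to miss: the filter line printed no job log at all, and only count() and first() actually started jobs. Defining the filter just describes the work; asking for a result triggers it. The sketch below mimics that behaviour with an ordinary Python generator. It is an analogy, not Spark code, and the sample lines (other than "# Apache Spark", which is the first line returned in the session) and the helper `lines_with` are made up for illustration.

```python
def lines_with(keyword, source):
    """Return a lazy generator over the lines of `source` that contain `keyword`."""
    return (line for line in source if keyword in line)


# Stand-in for README.md; only the first line is taken from the session above.
sample = [
    "# Apache Spark",
    "This line mentions Spark.",
    "This line does not.",
    "Another Spark line.",
]

total = len(sample)                               # like textFile.count()
matches = lines_with("Spark", sample)             # like filter(...): nothing runs yet
first_match = next(lines_with("Spark", sample))   # like linesWithSpark.first()
match_count = sum(1 for _ in matches)             # like linesWithSpark.count(): work happens here

print(total, match_count, first_match)
```

A generator can only be consumed once, which is why the sketch builds a fresh one for `first_match`; a Spark RDD has no such restriction and can be reused across several actions, as the session shows.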

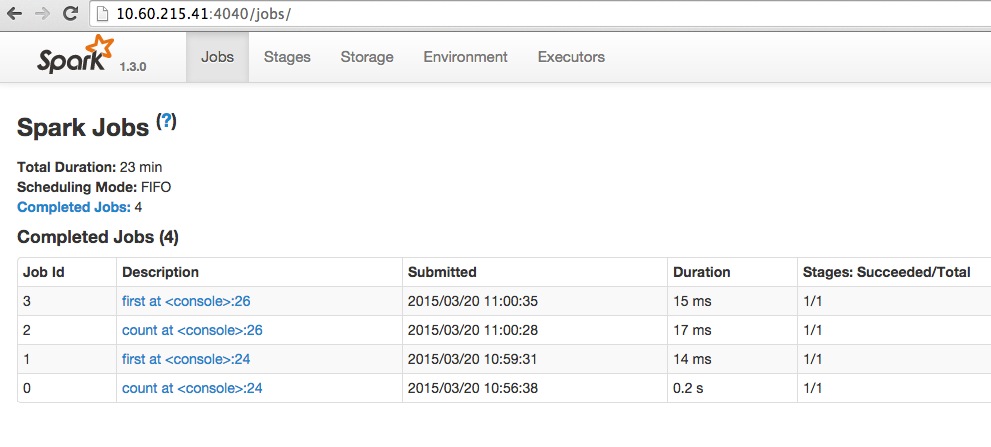

期间,我们可以通过UI看到job列表和状态:

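Getting it running was the first step, and that is now done. So what does the Spark architecture look like? How does it work under the hood? How do you customize the configuration? How do you scale out to a distributed setup? How do you program against it? We will work through these one at a time later.

References:

http://dataunion.org/bbs/forum.php?mod=viewthread&tid=890

===================================

Please credit the source when reposting: http://www.cnblogs.com/zhangqingping/p/4352977.html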
