Intro to Machine Learning

本节主要用于机器学习入门,介绍两个简单的分类模型:

决策树和随机森林

不涉及内部原理,仅仅介绍基础的调用方法

1. How Models Work

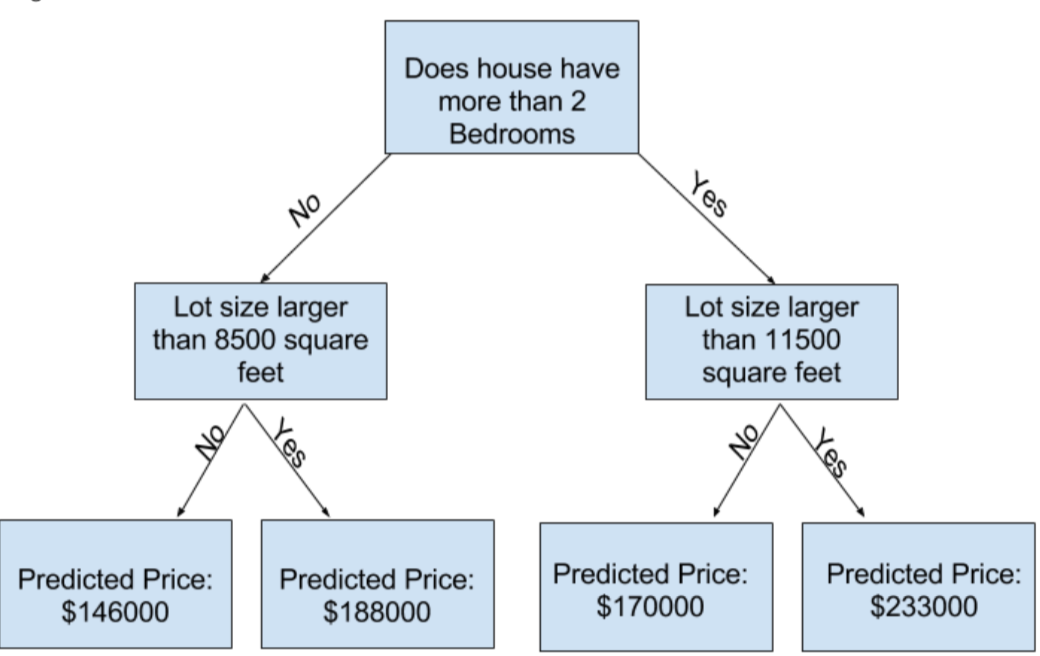

以简单的决策树为例

This step of capturing patterns from data is called fitting or training the model

The data used to train the data is called the trainning data

After the model has been fit, you can apply it to new data to predict prices of additional homes

2.Basic Data Exploration

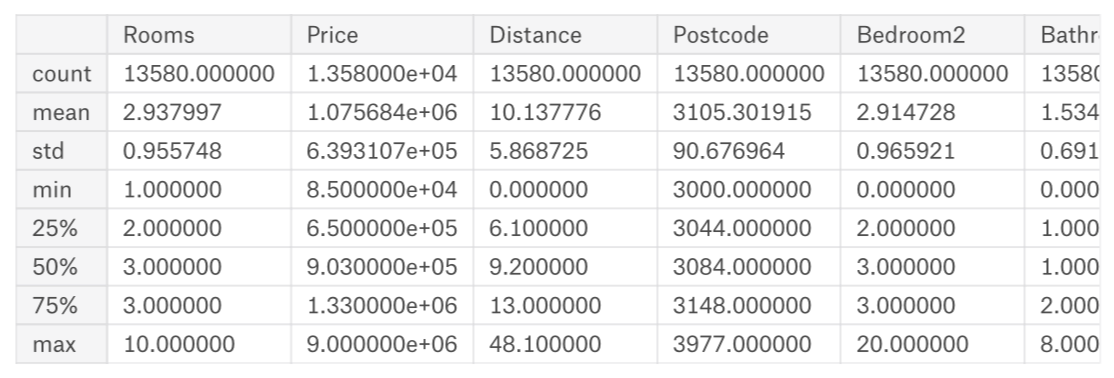

使用pandas中的describle()来探究数据:

melbourne_file_path = '../input/melbourne-housing-snapshot/melb_data.csv'

melbourne_data = pd.read_csv(melbourne_file_path)

melbourne.describe()

output:

注:数值含义

count: 非缺失值的数量

mean: 平均值

std: 标准偏差,它度量值在数值上的分布情况

min、25%、50%、75%、max: 将每一列按照从lowest到highest排序,最小值是min, 1/4位置上,大于25%而小于50%是25%

3.Your First Machine Learning Model

- Selecting Data for Modeling

import pandas as pd

melbourne_file_path = ' ../input/melbourne-housing-snapshot/melb_data.csv'

melbourne_data = pd.read_csv(melbourne_file_path)

- Selecting The Prediction Target

方法:使用dot-notation来挑选prediction target

- Choosing "Features"

melbourne_features = ['Rooms', 'Bathroom', 'Landsize', 'Lattitude', 'Longtitude']

X = melbourne_data[melbourne_features]

查看数据是否加载正确:

X.head()

探究数据基本特性:

- Building Your Model

我们使用scikit-learn来创造模型,scikit-learn教程如下:

具体的原理可以根据需要自己探究

https://scikit-learn.org/stable/supervised_learning.html#supervised-learning

构建模型步骤:

- Define:

What type of model will it be? A decision tree? Some other type of model? Some other parameters of the model type are specified too.

- Fit:

Capture patterns from provided data. This is the heart of modeling

- Predict:

Just what it sounds like

- Evaluate:

Determine how accurate the model's predictions are

实现:

from sklearn.tree import DecisionTreeRegressor

melbourne_mode = DecisionTreeRegressor(random_state=1)

melbourne_mode.fit(X , y)

打印出开始几行:

print (X.head())

预测后的价格如下:

print (melbourne_mode.predict(X.head())

4.Model Validation

由于预测的价格和真实的价格会有差距,而差距多少,我们需要衡量

使用Mean Absolute Error

error= actual-predicted

在实际过程中,我们要将数据分成两份,一份用于训练,叫做training data, 一份用于验证叫validataion data

from sklearn.model_selection import train_test_split

train_X, val_X, train_y, val_y = train_test_split(X, y, random_state=0)

melbourne_model = DecisionTreeRegressor()

melbourne_model.fit(train_X, train_y)

val_predictions = melbourne_model.predict(val_X)

print(mean_absolute_error(val_y, val_predictions))

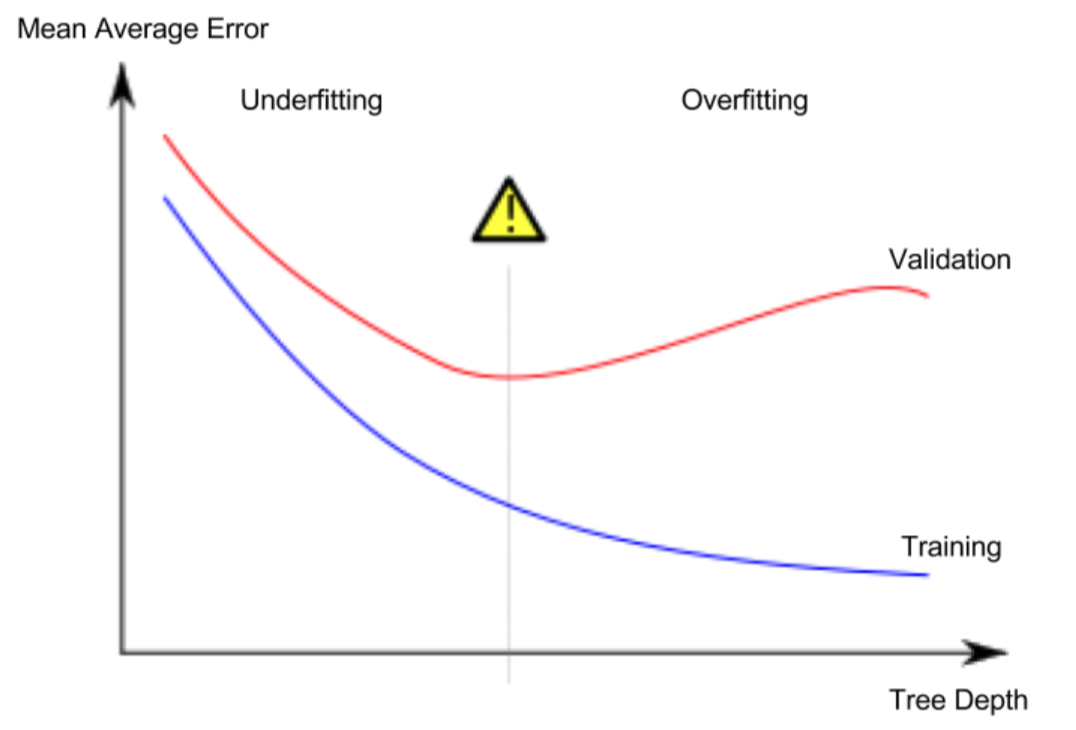

5.Underfitting and Overfitting

- overfitting: A model matches the data almost perfectly, but does poorly in validation and other new data.

- underfitting: When a model fails to capture important distinctions and patterns in the data, so it performs poorly even in training data.

The more leaves we allow the model to make, the more we move from the underfitting area in the above graph to overfitting area.

from sklearn.metrics import mean_absolute_error

from sklearn.tree import DecsionTreeRegressor

def get_ame(max_leaf_nodes, train_X, val_X, train_y, val_y):

model = DecisionTreeRegressor(max_leaf_nodes = max_leaf_nodes, random_state = 0)

model.fit(train_X, train_y)

preds_val = model.predict(val_X)

mae = mean_absolute_error(val_y, preds_val)

return(mae)

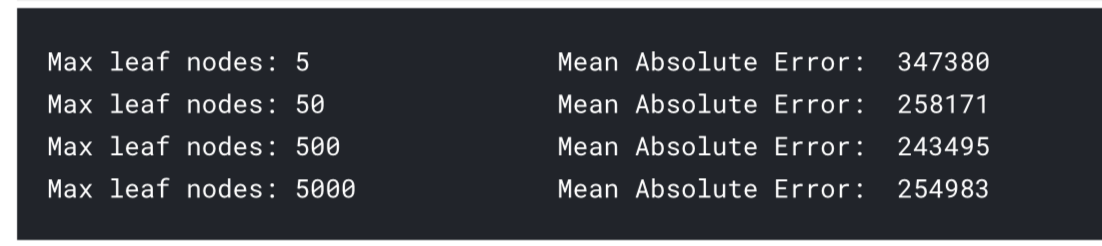

我可以使用循环比较选择最合适的max_leaf_nodes

for max_leaf_nodes in [5,50,500,5000]:

my_ame = get_ame(max_leaf_nodes, train_X, val_X, train_y, val_y)

print(max_leaf_nodes, my_ame)

最后可以发现,当max leaf nodes 为 500时,MAE最小, 接下来我们换另外一种模型

6.Random Forests

The random forest uses many trees, and it makes a prediction by averaging the predictions of each component tree. It generally has much better predictive accuracy than a single decision tree and it works well with default parameters.

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_absolute_error

forest_model = RandomForestRegressor(random_state=1)

forest_model.fit(train_X,train_y)

melb_preds = forest_model.predict(val_X)

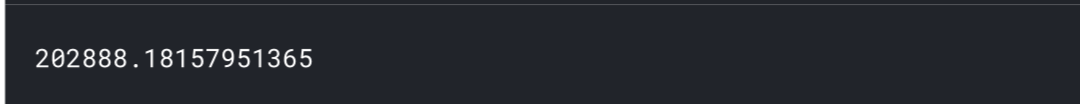

print(mean_absolute_error(val_y, melb_preds))

可以发现最后的误差,相对于决策树小。

one of the best features of Random Forest models is that they generally work reasonably even without this tuning.

7.Machine Learning Competitions

- Build a Random Forest model with all of your data

- Read in the "test" data, which doesn't include values for the target. Predict home values in the test data with your Random Forest model.

- Submit those predictions to the competition and see your score.

- Optionally, come back to see if you can improve your model by adding features or changing your model. Then you can resubmit to see how that stacks up on the competition leaderboard.

Intro to Machine Learning的更多相关文章

- 【机器学习Machine Learning】资料大全

昨天总结了深度学习的资料,今天把机器学习的资料也总结一下(友情提示:有些网站需要"科学上网"^_^) 推荐几本好书: 1.Pattern Recognition and Machi ...

- 机器学习(Machine Learning)&深度学习(Deep Learning)资料【转】

转自:机器学习(Machine Learning)&深度学习(Deep Learning)资料 <Brief History of Machine Learning> 介绍:这是一 ...

- How do I learn machine learning?

https://www.quora.com/How-do-I-learn-machine-learning-1?redirected_qid=6578644 How Can I Learn X? ...

- 机器学习(Machine Learning)与深度学习(Deep Learning)资料汇总

<Brief History of Machine Learning> 介绍:这是一篇介绍机器学习历史的文章,介绍很全面,从感知机.神经网络.决策树.SVM.Adaboost到随机森林.D ...

- Easy machine learning pipelines with pipelearner: intro and call for contributors

@drsimonj here to introduce pipelearner – a package I'm developing to make it easy to create machine ...

- How do I learn mathematics for machine learning?

https://www.quora.com/How-do-I-learn-mathematics-for-machine-learning How do I learn mathematics f ...

- 机器学习案例学习【每周一例】之 Titanic: Machine Learning from Disaster

下面一文章就总结几点关键: 1.要学会观察,尤其是输入数据的特征提取时,看各输入数据和输出的关系,用绘图看! 2.训练后,看测试数据和训练数据误差,确定是否过拟合还是欠拟合: 3.欠拟合的话,说明模 ...

- 【Machine Learning】KNN算法虹膜图片识别

K-近邻算法虹膜图片识别实战 作者:白宁超 2017年1月3日18:26:33 摘要:随着机器学习和深度学习的热潮,各种图书层出不穷.然而多数是基础理论知识介绍,缺乏实现的深入理解.本系列文章是作者结 ...

- 【Machine Learning】Python开发工具:Anaconda+Sublime

Python开发工具:Anaconda+Sublime 作者:白宁超 2016年12月23日21:24:51 摘要:随着机器学习和深度学习的热潮,各种图书层出不穷.然而多数是基础理论知识介绍,缺乏实现 ...

随机推荐

- C#连接SQL Anywhere 12 数据库

using System;using System.Data.Common; namespace ConsoleApplication27{ class Program { ...

- QT状态机

首先吐槽下网上各种博主不清不楚的讲解 特别容易让新手迷惑 总体思想是这样的:首先要有一个状态机对象, 顾名思义,这玩意就是用来容纳状态的.然后调用状态机的start()函数它就会更具你的逻辑去执行相关 ...

- NS3中一些难以理解的常数

摘要:在NS3的学习中,PHY MAC中总有一些常数,需要理解才能修改.如帧间间隔等.那么,本文做个简单分析,帮助大家理解.针对802.11标准中MAC协议. void WifiMac::Conf ...

- 免费IP代理池定时维护,封装通用爬虫工具类每次随机更新IP代理池跟UserAgent池,并制作简易流量爬虫

前言 我们之前的爬虫都是模拟成浏览器后直接爬取,并没有动态设置IP代理以及UserAgent标识,本文记录免费IP代理池定时维护,封装通用爬虫工具类每次随机更新IP代理池跟UserAgent池,并制作 ...

- git submodule 子模块

### 背景:为什么要用子模块? 在开发项目中可能会遇到这种问题:在你的项目中使用另一个项目,也许这是一个第三方开发的库,或者是你独立开发的并在多个父项目中使用的.简单来说就是A同学开发了一个模块,被 ...

- ThreadLocalSingleton.h——base

#ifndef MUDUO_BASE_THREADLOCALSINGLETON_H #define MUDUO_BASE_THREADLOCALSINGLETON_H #include <boo ...

- 由group by引发的sql_mode的学习

前言 在一次使用group by查询数据库时,遇到了问题.下面先搭建环境,然后让问题复现,最后分析问题. 一 问题复现 mysql版本 建表插入数据 表的结构 现在问题来了:我想查询上面表中每个部门年 ...

- 《白帽子讲web安全》——吴瀚清 阅读笔记

浏览器安全 同源策略:浏览器的同源策略限制了不同来源的“document”或脚本,对当前的“document”读取或设置某些属性.是浏览器安全的基础,即限制不同域的网址脚本交互 <scr ...

- Python 列表深浅复制详解

在文章<Python 数据类型>里边介绍了列表的用法,其中列表有个 copy() 方法,意思是复制一个相同的列表.例如 names = ["小明", "小红& ...

- 图表控件业界革命 -Arction新产品LightningChart JS 上市

芬兰高科技企业Arction Ltd 在今年8月份推出了用于网页的数据可视化控件新解决方案—— LightningChart JS. 初次的基准测试表明,该产品为网页应用程序的数据可视化刷新了新的纪录 ...