Paper Reading - Learning to Evaluate Image Captioning ( CVPR 2018 ) ★

Link of the Paper: https://arxiv.org/abs/1806.06422

Innovations:

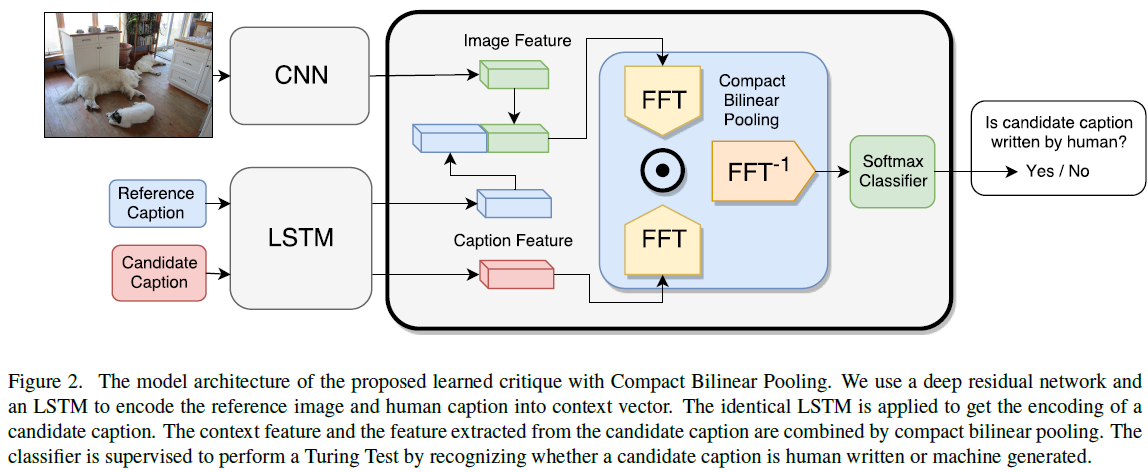

- The authors propose a novel learning based discriminative evaluation metric that is directly trained to distinguish between human and machine-generated captions. They train an automatic critique to distinguish generated captions from human-written ones, and then score candidate captions by how successful they are in fooling the critique. Formally, given a critique parametrized by Θ, a reference image i, and a generated caption c, the score is defined as the probability for the caption of being human-written, as assigned by the critique: scoreΘ(c, i) = P(c is human written | i, Θ). More generally, the reference image represents the context in which the generated caption is evaluated. To provide further information about the relevance and salience of the image content, a reference caption can additionally be supplied to the context. Let C(i) denotes the context of image i, then reference caption c could be included as part of context, i.e. c∈C(i). The score with context becomes scoreΘ(c, i) = P(c is human written | C(i), Θ).

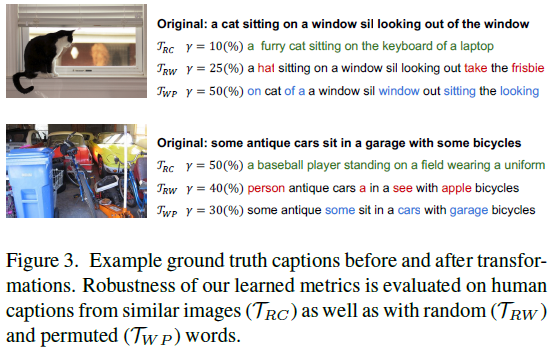

- To systematically create pathological sentences, the authors define several transformations to generate unnatural sentences that might get high scores in an evaluation metric. Their proposed data augmentation scheme uses these transformations to generate large number of negative examples. Formally, a transformation Τ takes an image-caption dataset and generates a new one: Τ({(c, i) ∈ D}; γ) = {(c1', i1'), ..., (cn', in')}, where i, ii' are images, c, ci' are captions, D is a list of caption-image tuples representing the original dataset, and γ is a hyper-parameter that controls the strength of the transformation. Specifically, authors define following three transformations to generate pathological image-captions pairs:

- Random Captions ( RC ): To ensure the metric pays attention to the image content, they randomly sample human written captions from other images in the training set: TRC(D; γ) = {(c', i) | (c, i), (c', i') ∈ D, i'∈Nγ(i)}, where Nγ(i) represents the set of images that are top γ percent nearest neighbors to image i.

- Word Permutation ( WP ): To make sure that their metric pays attention to sentence structure, authors randomly permute at least 2 words in the reference caption: TWP(D; γ) = {(c', i) | (c, i) ∈ D, c' ∈ Pγ(c) \ {c}}, where Pγ(c) represents all sentences generated by permuting γ percent of words in caption c.

- Random Word ( RW ): To explore rare words authors replace from 2 to all words of the reference caption with random words from the vocabulary: TRW(D; γ) = {(c', i) | (c, i) ∈ D, c' ∈ Wγ(c) \ {c}}, where Wγ(c) represents all sentences generated by randomly replacing γ percent words from caption c.

- The authors propose a systematic approach to measure the robustness of an evaluation metric to a given pathological transformation.

General Points:

- Commonly used evaluation metrics for Image Captioning: BLEU, METEOR, ROUGE, CIDEr, SPICE. These metrics face two challenges. Firstly, many metrics fail to correlate well with human judgments. Metrics based on measuring word overlap between candidate and reference captions find it difficult to capture semantic meaning of a sentence, therefore often lead to bad correlation with human judgments. Secondly, each evaluation metric has its well-known blind spot, and rule-based metrics are often inflexible to be responsive to new pathological cases.

- Compact Bilinear Pooling ( CBP ) has been demonstrated in Multimodal compact bilinear pooling for visual question answering and visual grounding to be very effective in combining heterogeneous information of image and text.

Paper Reading - Learning to Evaluate Image Captioning ( CVPR 2018 ) ★的更多相关文章

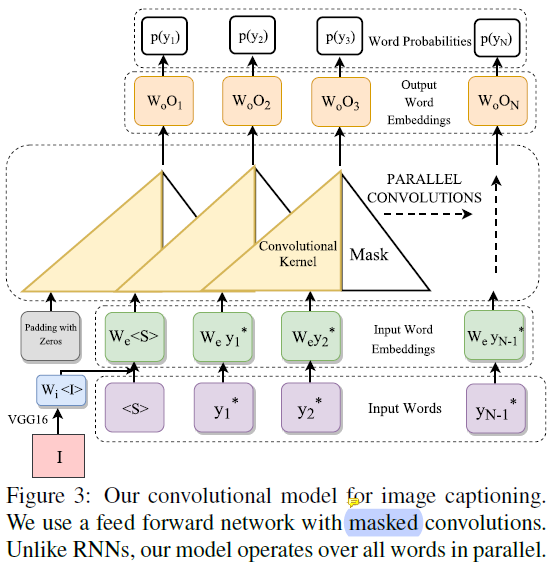

- Paper Reading - Convolutional Image Captioning ( CVPR 2018 )

Link of the Paper: https://arxiv.org/abs/1711.09151 Motivation: LSTM units are complex and inherentl ...

- Paper Reading - Learning like a Child: Fast Novel Visual Concept Learning from Sentence Descriptions of Images ( ICCV 2015 )

Link of the Paper: https://arxiv.org/pdf/1504.06692.pdf Innovations: The authors propose the Novel V ...

- Paper Reading: Stereo DSO

开篇第一篇就写一个paper reading吧,用markdown+vim写东西切换中英文挺麻烦的,有些就偷懒都用英文写了. Stereo DSO: Large-Scale Direct Sparse ...

- 读paper笔记[Learning to rank]

读paper笔记[Learning to rank] by Jiawang 选读paper: [1] Ranking by calibrated AdaBoost, R. Busa-Fekete, B ...

- 在矩池云上复现 CVPR 2018 LearningToCompare_FSL 环境

这是 CVPR 2018 的一篇少样本学习论文:Learning to Compare: Relation Network for Few-Shot Learning 源码地址:https://git ...

- 爬取CVPR 2018过程中遇到的坑

爬取 CVPR 2018 过程中遇到的坑 使用语言及模块 语言: Python 3.6.6 模块: re requests lxml bs4 过程 一开始都挺顺利的,先获取到所有文章的链接再逐个爬取获 ...

- Paper Reading - Convolutional Sequence to Sequence Learning ( CoRR 2017 ) ★

Link of the Paper: https://arxiv.org/abs/1705.03122 Motivation: Compared to recurrent layers, convol ...

- Paper Reading - Deep Captioning with Multimodal Recurrent Neural Networks ( m-RNN ) ( ICLR 2015 ) ★

Link of the Paper: https://arxiv.org/pdf/1412.6632.pdf Main Points: The authors propose a multimodal ...

- Paper Reading - Deep Visual-Semantic Alignments for Generating Image Descriptions ( CVPR 2015 )

Link of the Paper: https://arxiv.org/abs/1412.2306 Main Points: An Alignment Model: Convolutional Ne ...

随机推荐

- 选择排序_c++

选择排序_c++ GitHub 文解 选择排序的核心思想是对于 N 个元素进行排序时,对其进行 K = (N - 1) 次排序,每次排序从后(N + 1 - K)个数值中选择最小的元素与以 (K - ...

- android软件开发之获取本地音乐属性

歌曲的名称 :MediaStore.Audio.Media.TITLString tilte = cursor.getString(cursor.getColumnIndexOrThrow(Media ...

- wps for linux 安装后系统缺失字体安装配置

错误提示: 解决方法: 从http://bbs.wps.cn/thread-22355435-1-1.html下载字体库,离线版本:(链接: https://pan.baidu.com/s/1i5dz ...

- Spring的入门学习笔记 (AOP概念及操作+AspectJ)

AOP概念 1.aop:面向切面(方面)编程,扩展功能不通过源代码实现 2.采用横向抽取机制,取代了传统的纵向继承重复代码 AOP原理 假设现有 public class User{ //添加用户方法 ...

- ComboBox可搜索下拉框的使用注意事项,简单记录以及我遇到的一些奇怪的bug

前几天做一个react的项目的时候需要用一个可搜索的下拉框ComboBox,上代码: <ComboBox // className={comboxClassName} items={storeA ...

- u-boot.2012.10makefile分析,良心博友汇总

声明:以下内容大部分来自网站博客文章,仅作学习之用1.uboot系列之-----顶层Makefile分析(一)1.u-boot.bin生成过程分析 2.make/makefile中的加号+,减号-和a ...

- RESTful Demo

Demo 功能 两个模块, App 与 Admin, App 模块提供增加用户(/add?name=${name})与查询用户(/query/${id}), Admin 模块提供列出所有用户(/lis ...

- Tomcat7 调优及 JVM 参数优化

Tomcat 的缺省配置是不能稳定长期运行的,也就是不适合生产环境,它会死机,让你不断重新启动,甚至在午夜时分唤醒你.对于操作系统优化来说,是尽可能的增大可使用的内存容量.提高CPU 的频率,保证 ...

- 在线接口文档工具——ShowDoc

ShowDoc:https://www.showdoc.cc/ --待更.

- c#调用c++库函数

如果是非托管的,就用DllImport,举例 using System; using System.Runtime.InteropServices; class MainApp ...