TensorFlow Linear Regression

Contents

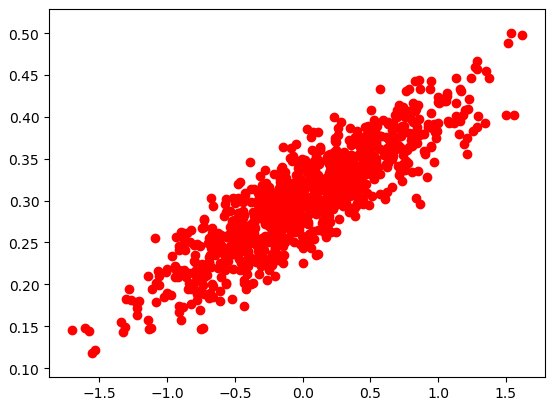

Data visualization

Gradient descent

Result visualization

Data visualization

```python
import numpy as np
import matplotlib.pyplot as plt

# Randomly generate 1000 points scattered around the line y = 0.1x + 0.3
num_points = 1000
vectors_set = []
for i in range(num_points):
    x1 = np.random.normal(0.0, 0.55)
    # True line plus Gaussian noise with standard deviation 0.03
    y1 = x1 * 0.1 + 0.3 + np.random.normal(0.0, 0.03)
    vectors_set.append([x1, y1])

# Split the samples into x and y coordinates
x_data = [v[0] for v in vectors_set]
y_data = [v[1] for v in vectors_set]

# Plot the samples as red dots
plt.scatter(x_data, y_data, c='r')
plt.show()
```
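The sampling loop can also be written with vectorized NumPy calls, and the best line through the noisy samples has a closed form that gradient descent should approach. This is a minimal sketch, assuming NumPy 1.17+ for `np.random.default_rng`; `make_samples` and `least_squares_line` are hypothetical helper names, and the fixed seed is added only for reproducibility.

```python
import numpy as np


def make_samples(num_points=1000, seed=42):
    """Vectorized sampling: x ~ N(0, 0.55), y = 0.1x + 0.3 + N(0, 0.03) noise."""
    rng = np.random.default_rng(seed)  # hypothetical fixed seed for reproducibility
    x_data = rng.normal(0.0, 0.55, size=num_points)
    noise = rng.normal(0.0, 0.03, size=num_points)
    y_data = 0.1 * x_data + 0.3 + noise
    return x_data, y_data


def least_squares_line(x, y):
    """Closed-form simple linear regression.

    W = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x)^2),
    b = mean_y - W * mean_x.
    """
    x_mean, y_mean = x.mean(), y.mean()
    w = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    b = y_mean - w * x_mean
    return w, b


x_data, y_data = make_samples()
w_star, b_star = least_squares_line(x_data, y_data)
print(f"least-squares fit: W = {w_star:.4f}, b = {b_star:.4f}")
```

The resulting arrays can be passed directly to `plt.scatter(x_data, y_data, c='r')`. Because of the noise, the fitted W and b land near, but not exactly on, 0.1 and 0.3.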

Gradient descent

```python
# -*- coding: utf-8 -*-
import numpy as np
# The original targets the TensorFlow 1.x graph API (tf.Session, tf.train...).
# Importing tf.compat.v1 and disabling v2 behavior lets the same code run on
# TensorFlow 2.x as well (and on 1.14+).
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

# Randomly generate 1000 points scattered around the line y = 0.1x + 0.3
num_points = 1000
vectors_set = []
for i in range(num_points):
    x1 = np.random.normal(0.0, 0.55)
    y1 = x1 * 0.1 + 0.3 + np.random.normal(0.0, 0.03)
    vectors_set.append([x1, y1])

# Split the samples into x and y coordinates
x_data = [v[0] for v in vectors_set]
y_data = [v[1] for v in vectors_set]

# 1-D weight W, initialized to a random value in [-1, 1]
W = tf.Variable(tf.random_uniform([1], -1.0, 1.0), name='W')
# 1-D bias b, initialized to 0
b = tf.Variable(tf.zeros([1]), name='b')
# Predicted values y
y = W * x_data + b
# Loss: mean squared error between the prediction y and the actual y_data
loss = tf.reduce_mean(tf.square(y - y_data), name='loss')
# Optimize the parameters with gradient descent; the argument is the learning rate
optimizer = tf.train.GradientDescentOptimizer(0.5)
# Training means minimizing this loss
train = optimizer.minimize(loss, name='train')

sess = tf.Session()
init = tf.global_variables_initializer()
sess.run(init)

# Print the initial W, b and loss
print("W =", sess.run(W), "b =", sess.run(b), "loss =", sess.run(loss))

# Run 20 training steps
for step in range(20):
    sess.run(train)
    # Print W, b and loss after each step
    print("W =", sess.run(W), "b =", sess.run(b), "loss =", sess.run(loss))

'''
Sample output (values differ from run to run, since no random seed is set):
W = [ 0.72134733] b = [ 0.] loss = 0.204532
W = [ 0.54246926] b = [ 0.31014919] loss = 0.0552976
W = [ 0.41924465] b = [ 0.30693138] loss = 0.029155
W = [ 0.33045709] b = [ 0.30471471] loss = 0.0155833
W = [ 0.26648441] b = [ 0.30311754] loss = 0.00853772
W = [ 0.22039121] b = [ 0.30196676] loss = 0.00488007
W = [ 0.18718043] b = [ 0.3011376] loss = 0.00298124
W = [ 0.16325161] b = [ 0.30054021] loss = 0.00199547
W = [ 0.14601055] b = [ 0.30010974] loss = 0.00148373
W = [ 0.13358814] b = [ 0.29979959] loss = 0.00121806
W = [ 0.12463761] b = [ 0.29957613] loss = 0.00108014
W = [ 0.11818863] b = [ 0.29941514] loss = 0.00100854
W = [ 0.11354206] b = [ 0.29929912] loss = 0.000971367
W = [ 0.11019413] b = [ 0.29921553] loss = 0.00095207
W = [ 0.10778191] b = [ 0.29915532] loss = 0.000942053
W = [ 0.10604387] b = [ 0.29911193] loss = 0.000936852
W = [ 0.10479159] b = [ 0.29908064] loss = 0.000934153
W = [ 0.1038893] b = [ 0.29905814] loss = 0.000932751
W = [ 0.10323919] b = [ 0.2990419] loss = 0.000932023
W = [ 0.10277078] b = [ 0.29903021] loss = 0.000931646
W = [ 0.10243329] b = [ 0.29902178] loss = 0.00093145
'''
```
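The `optimizer.minimize(loss)` call hides the actual update rule. For the mean-squared-error loss used here, the gradients and the gradient-descent step with learning rate η = 0.5 can be worked out by hand as below (N = 1000 samples; a bar denotes a sample mean). This is a hand derivation, not taken from the TensorFlow documentation, and the approximations assume the sample mean of x is close to 0, which is expected because x is drawn from N(0, 0.55).

$$
L(W,b) = \frac{1}{N}\sum_{i=1}^{N}\left(W x_i + b - y_i\right)^2
$$

$$
\frac{\partial L}{\partial W} = \frac{2}{N}\sum_{i=1}^{N}\left(W x_i + b - y_i\right)x_i,
\qquad
\frac{\partial L}{\partial b} = \frac{2}{N}\sum_{i=1}^{N}\left(W x_i + b - y_i\right)
$$

$$
W \leftarrow W - \eta\,\frac{\partial L}{\partial W},
\qquad
b \leftarrow b - \eta\,\frac{\partial L}{\partial b},
\qquad
\eta = 0.5
$$

$$
\frac{\partial L}{\partial b} = 2\left(W\bar{x} + b - \bar{y}\right) \approx 2\left(b - \bar{y}\right)
\;\Rightarrow\;
b_{t+1} \approx (1 - 2\eta)\,b_t + 2\eta\,\bar{y} = \bar{y} \quad (\eta = 0.5)
$$

$$
\frac{\partial L}{\partial W} = 2\left(W\,\overline{x^2} + b\,\bar{x} - \overline{xy}\right) \approx 2\,\overline{x^2}\left(W - W^*\right),
\quad
W^* = \frac{\overline{xy}}{\overline{x^2}}
\;\Rightarrow\;
W_{t+1} - W^* \approx \left(1 - 2\eta\,\overline{x^2}\right)\left(W_t - W^*\right)
$$

With $\overline{x^2} \approx 0.55^2 \approx 0.30$, the factor for W is about 0.70. This is consistent with the sample output: b jumps from 0 to about 0.31 after the very first step, while the gap between W and 0.1 shrinks by roughly 0.72 per step (0.442, 0.319, 0.230, ...).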


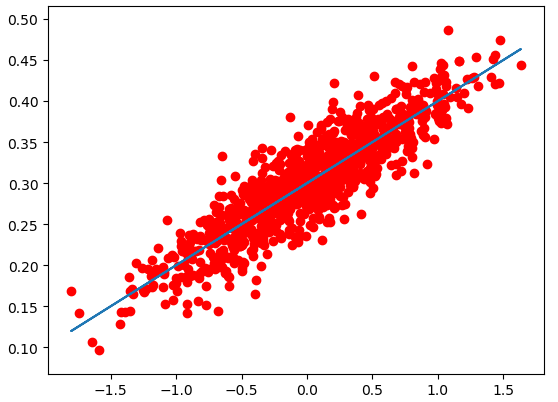

Result visualization

```python
# -*- coding: utf-8 -*-
import numpy as np
import matplotlib.pyplot as plt
# Run the TensorFlow 1.x graph-mode code on TensorFlow 2.x as well (and 1.14+)
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

# Randomly generate 1000 points scattered around the line y = 0.1x + 0.3
num_points = 1000
vectors_set = []
for i in range(num_points):
    x1 = np.random.normal(0.0, 0.55)
    y1 = x1 * 0.1 + 0.3 + np.random.normal(0.0, 0.03)
    vectors_set.append([x1, y1])

# Split the samples into x and y coordinates
x_data = [v[0] for v in vectors_set]
y_data = [v[1] for v in vectors_set]

# 1-D weight W, initialized to a random value in [-1, 1]
W = tf.Variable(tf.random_uniform([1], -1.0, 1.0), name='W')
# 1-D bias b, initialized to 0
b = tf.Variable(tf.zeros([1]), name='b')
# Predicted values y
y = W * x_data + b
# Loss: mean squared error between the prediction y and the actual y_data
loss = tf.reduce_mean(tf.square(y - y_data), name='loss')
# Optimize the parameters with gradient descent; the argument is the learning rate
optimizer = tf.train.GradientDescentOptimizer(0.5)
# Training means minimizing this loss
train = optimizer.minimize(loss, name='train')

sess = tf.Session()
init = tf.global_variables_initializer()
sess.run(init)

# Print the initial W, b and loss
print("W =", sess.run(W), "b =", sess.run(b), "loss =", sess.run(loss))

# Run 20 training steps
for step in range(20):
    sess.run(train)
    # Print W, b and loss after each step
    print("W =", sess.run(W), "b =", sess.run(b), "loss =", sess.run(loss))

# Plot the samples (red) and the fitted line y = W*x + b
plt.scatter(x_data, y_data, c='r')
plt.plot(x_data, sess.run(W) * x_data + sess.run(b))
plt.show()
```
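To see what each `sess.run(train)` step does with `GradientDescentOptimizer(0.5)`, the same training loop can be written in plain NumPy and its result compared with the closed-form least-squares line. This is a sketch, not TensorFlow's implementation: `generate_data`, `mse` and `gradient_descent` are hypothetical helper names, and the fixed seed and the starting value W = 0.5 (instead of a random value in [-1, 1]) are assumptions made so the run is reproducible. `np.polyfit(x, y, 1)` returns the least-squares slope and intercept.

```python
import numpy as np


def generate_data(num_points=1000, seed=0):
    """Samples around y = 0.1x + 0.3, drawn like the original script (vectorized)."""
    rng = np.random.default_rng(seed)  # hypothetical fixed seed
    x = rng.normal(0.0, 0.55, num_points)
    y = 0.1 * x + 0.3 + rng.normal(0.0, 0.03, num_points)
    return x, y


def mse(w, b, x, y):
    """Mean squared error of the line y = w*x + b."""
    return float(np.mean((w * x + b - y) ** 2))


def gradient_descent(x, y, learning_rate=0.5, steps=20, w0=0.5, b0=0.0):
    """Full-batch gradient descent on the MSE loss.

    w0 = 0.5 is an assumed deterministic start (the original draws W from [-1, 1]).
    Returns the final (w, b) and the loss before training and after each step.
    """
    w, b = w0, b0
    losses = [mse(w, b, x, y)]
    for _ in range(steps):
        err = w * x + b - y              # residuals of the current line
        grad_w = 2.0 * np.mean(err * x)  # dL/dW
        grad_b = 2.0 * np.mean(err)      # dL/db
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        losses.append(mse(w, b, x, y))
    return w, b, losses


x, y = generate_data()
w, b, losses = gradient_descent(x, y)
w_ref, b_ref = np.polyfit(x, y, 1)  # closed-form least squares: [slope, intercept]

print(f"gradient descent: W = {w:.5f}, b = {b:.5f}, loss = {losses[-1]:.6f}")
print(f"closed form:      W = {w_ref:.5f}, b = {b_ref:.5f}")
```

Each step shrinks the distance between W and the least-squares slope by a factor of roughly 1 - 2 · 0.5 · 0.55² ≈ 0.7, so twenty steps bring W to within about 0.001 of it. That is why the TensorFlow run also settles near W ≈ 0.10 and b ≈ 0.30.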
