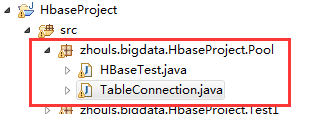

HBase Programming API Primer Series: HTable pool (6)

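HTable is a fairly heavyweight object: creating one means loading the configuration files, connecting to ZooKeeper, querying the meta table, and so on. Under high concurrency this hurts system performance, which is why the concept of a "pool" was introduced.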

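The purpose of introducing a connection pool in HBase is:

To improve the program's concurrency and access speed.

Take one from the pool, use it, and put it back into the pool when you are done.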

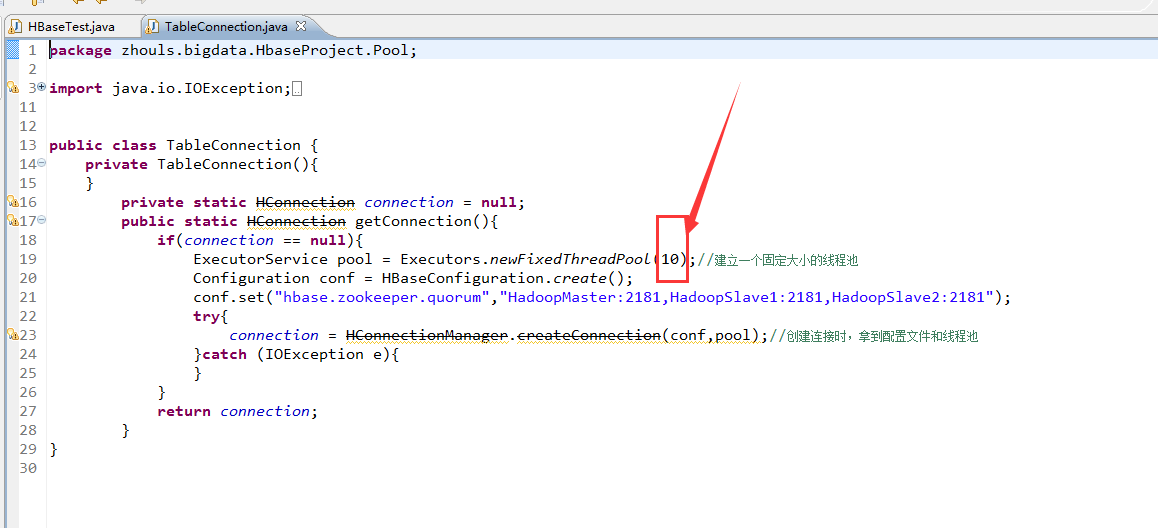

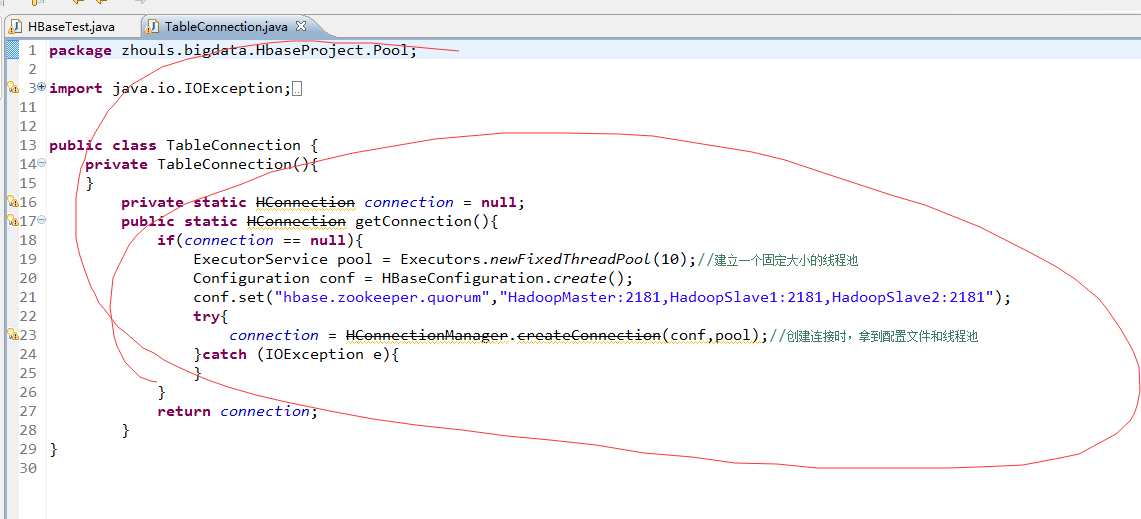

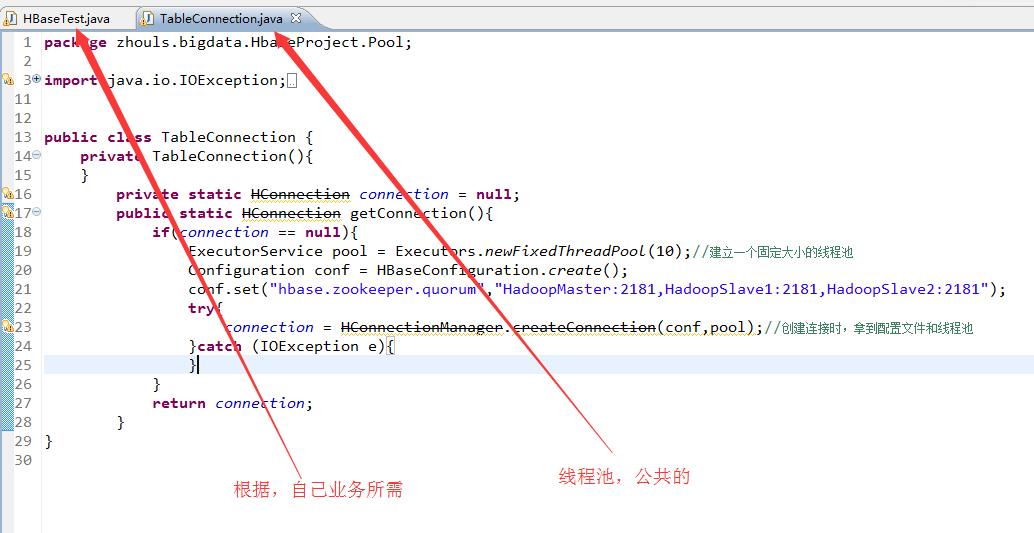

    package zhouls.bigdata.HbaseProject.Pool;

    import java.io.IOException;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.HConnection;
    import org.apache.hadoop.hbase.client.HConnectionManager;

    public class TableConnection {

        private TableConnection() {
        }

        private static HConnection connection = null;

        // synchronized so that concurrent first calls create only one connection
        public static synchronized HConnection getConnection() {
            if (connection == null) {
                ExecutorService pool = Executors.newFixedThreadPool(10); // create a fixed-size thread pool

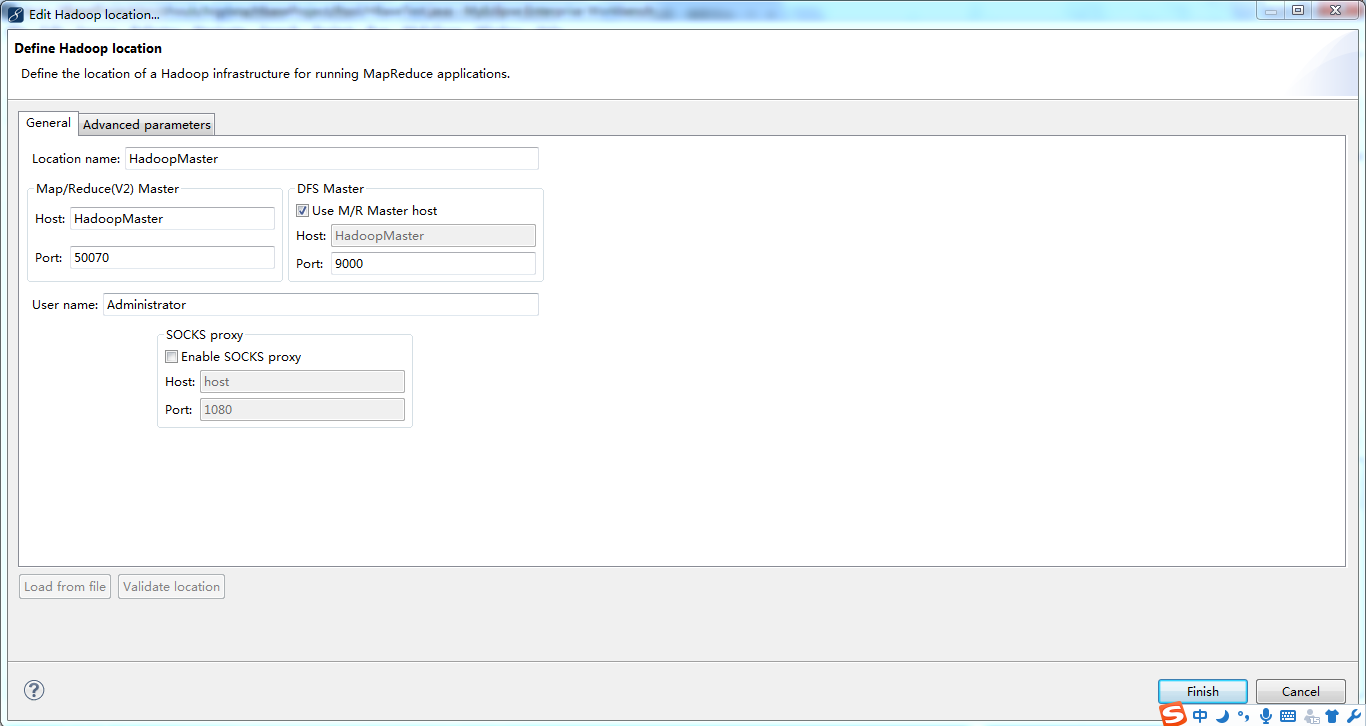

                Configuration conf = HBaseConfiguration.create();

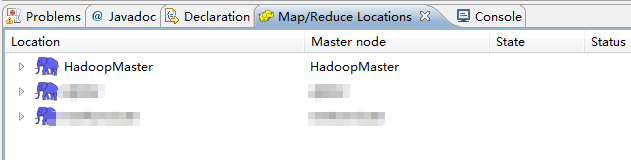

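                conf.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
                try {
                    // pass both the configuration and the thread pool when creating the connection
                    connection = HConnectionManager.createConnection(conf, pool);
                } catch (IOException e) {
                    // do not swallow the failure silently; the caller would otherwise just receive null
                    e.printStackTrace();
                }
            }
            return connection;
        }
    }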

Back in the program, how do we use this pool?

TableConnection is the shared, ready-made pool, so it can be reused as a template from now on.
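A lazily created shared instance such as the one in TableConnection has to be created exactly once, even when several threads ask for it at the same moment. One common way to get that guarantee in plain Java is the initialization-on-demand holder idiom. The sketch below is only an illustration: SharedResource, Holder and createdCount are made-up names, and the class stands in for any expensive object rather than a real HBase connection.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class SharedResource {
    // Counts how many times the constructor ran (for demonstration only).
    private static final AtomicInteger CREATED = new AtomicInteger();

    private SharedResource() {
        // Imagine expensive setup here (loading configuration, opening sockets, ...).
        CREATED.incrementAndGet();
    }

    // The JVM initializes Holder lazily and at most once, on first access to INSTANCE.
    private static class Holder {
        static final SharedResource INSTANCE = new SharedResource();
    }

    public static SharedResource getInstance() {
        return Holder.INSTANCE;
    }

    public static int createdCount() {
        return CREATED.get();
    }

    public static void main(String[] args) {
        SharedResource a = SharedResource.getInstance();
        SharedResource b = SharedResource.getInstance();
        System.out.println("same instance: " + (a == b));
        System.out.println("constructor calls: " + createdCount());
    }
}
```

The idiom relies on class initialization being thread-safe in the JVM, so no explicit locking is needed in getInstance().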

1. Using the pool instead of the approach from the earlier post "HBase Programming API Primer Series: put (client side) (1)"

    package zhouls.bigdata.HbaseProject.Pool;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Delete;
    import org.apache.hadoop.hbase.client.Get;
    import org.apache.hadoop.hbase.client.HTable;
    import org.apache.hadoop.hbase.client.HTableInterface;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.util.Bytes;

    public class HBaseTest {

        public static void main(String[] args) throws Exception {
            // HTable table = new HTable(getConfig(), TableName.valueOf("test_table")); // table name is test_table
            // Put put = new Put(Bytes.toBytes("row_04")); // row key is row_04
            // put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy1")); // column family f, qualifier name, value Andy1
            // put.add(Bytes.toBytes("f2"), Bytes.toBytes("name"), Bytes.toBytes("Andy3")); // column family f2, qualifier name, value Andy3
            // table.put(put);
            // table.close();

            // Get get = new Get(Bytes.toBytes("row_04"));
            // get.addColumn(Bytes.toBytes("f1"), Bytes.toBytes("age")); // when no column is specified, as now, everything is returned
            // org.apache.hadoop.hbase.client.Result rest = table.get(get);
            // System.out.println(rest.toString());
            // table.close();

            // Delete delete = new Delete(Bytes.toBytes("row_2"));
            // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("email"));
            // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("name"));
            // table.delete(delete);
            // table.close();

            // Delete delete = new Delete(Bytes.toBytes("row_04"));
            // // delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // deleteColumn removes only the latest timestamp version of the column
            // delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // deleteColumns removes all timestamp versions of the column
            // table.delete(delete);
            // table.close();

            // Scan scan = new Scan();
            // scan.setStartRow(Bytes.toBytes("row_01")); // start row key is inclusive
            // scan.setStopRow(Bytes.toBytes("row_03")); // stop row key is exclusive
            // scan.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
            // ResultScanner rst = table.getScanner(scan); // iterate over the whole result set
            // System.out.println(rst.toString());
            // for (org.apache.hadoop.hbase.client.Result next = rst.next(); next != null; next = rst.next()) {
            //     for (Cell cell : next.rawCells()) { // loop over the cells of one row key
            //         System.out.println(next.toString());
            //         System.out.println("family:" + Bytes.toString(CellUtil.cloneFamily(cell)));
            //         System.out.println("col:" + Bytes.toString(CellUtil.cloneQualifier(cell)));
            //         System.out.println("value" + Bytes.toString(CellUtil.cloneValue(cell)));
            //     }
            // }

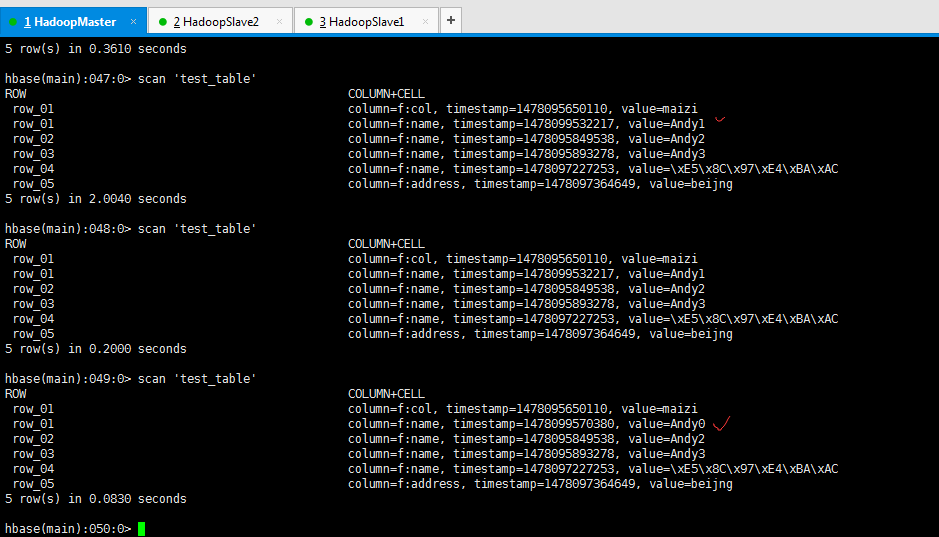

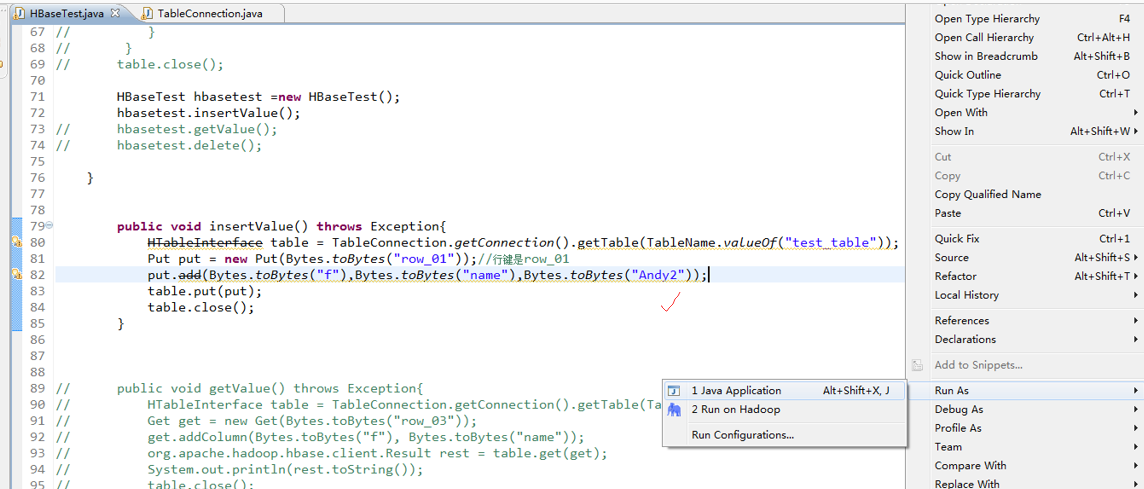

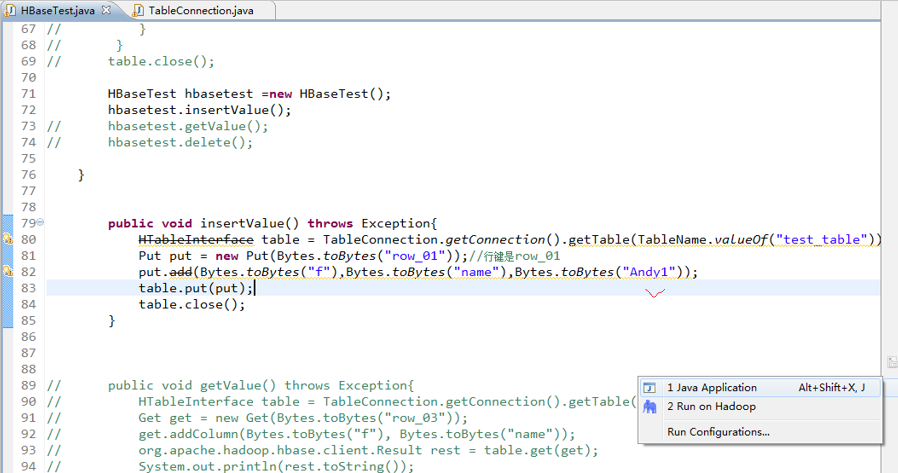

            // table.close();

            HBaseTest hbasetest = new HBaseTest();
            hbasetest.insertValue();

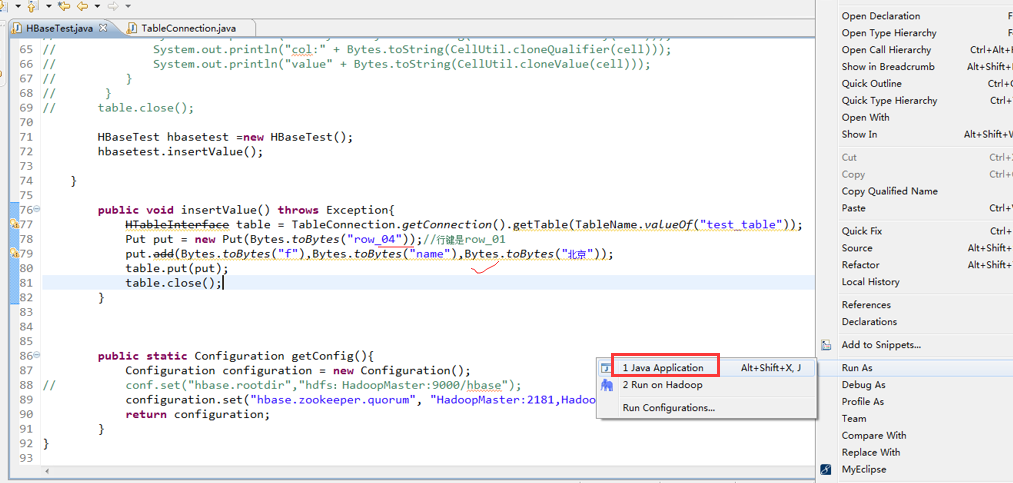

        }

        public void insertValue() throws Exception {
            HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
            Put put = new Put(Bytes.toBytes("row_04")); // row key is row_04
            put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("北京"));
            table.put(put);
            table.close();
        }

        public static Configuration getConfig() {
            Configuration configuration = new Configuration();
            // conf.set("hbase.rootdir", "hdfs:HadoopMaster:9000/hbase");
            configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
            return configuration;
        }
    }

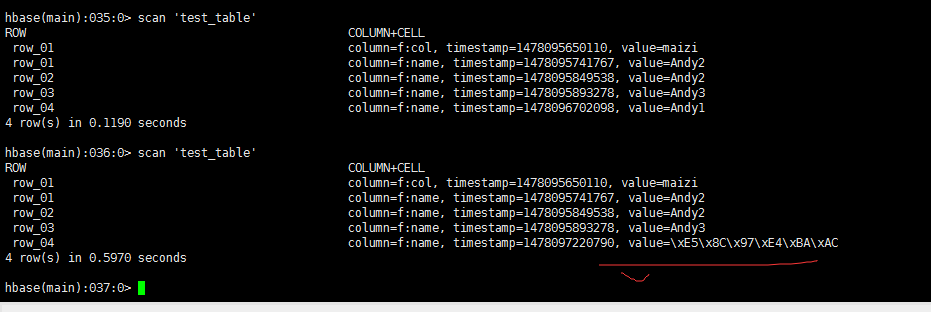

    hbase(main):035:0> scan 'test_table'
    ROW        COLUMN+CELL
     row_01    column=f:col, timestamp=1478095650110, value=maizi
     row_01    column=f:name, timestamp=1478095741767, value=Andy2
     row_02    column=f:name, timestamp=1478095849538, value=Andy2
     row_03    column=f:name, timestamp=1478095893278, value=Andy3
     row_04    column=f:name, timestamp=1478096702098, value=Andy1
    4 row(s) in 0.1190 seconds

    hbase(main):036:0> scan 'test_table'
    ROW        COLUMN+CELL
     row_01    column=f:col, timestamp=1478095650110, value=maizi
     row_01    column=f:name, timestamp=1478095741767, value=Andy2
     row_02    column=f:name, timestamp=1478095849538, value=Andy2
     row_03    column=f:name, timestamp=1478095893278, value=Andy3
     row_04    column=f:name, timestamp=1478097220790, value=\xE5\x8C\x97\xE4\xBA\xAC
    4 row(s) in 0.5970 seconds

    hbase(main):037:0>
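The new value of row_04 appears as \xE5\x8C\x97\xE4\xBA\xAC because the HBase shell prints non-ASCII bytes as \xNN escapes; these six bytes are the UTF-8 encoding of the string 北京 that insertValue() wrote. The following stand-alone sketch decodes such a string back to text. HexEscapeDemo and decode are names invented for this illustration, and the method assumes the input consists only of \xNN escapes (no plain ASCII characters mixed in).

```java
import java.nio.charset.StandardCharsets;

public class HexEscapeDemo {

    /** Decodes a string made up solely of \xNN escapes into UTF-8 text. */
    public static String decode(String escaped) {
        // Splitting on the literal "\x" yields an empty first element followed by the hex pairs.
        String[] parts = escaped.split("\\\\x");
        byte[] bytes = new byte[parts.length - 1];
        for (int i = 1; i < parts.length; i++) {
            bytes[i - 1] = (byte) Integer.parseInt(parts[i], 16);
        }
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // The value shown by the shell for row_04, column f:name.
        System.out.println(decode("\\xE5\\x8C\\x97\\xE4\\xBA\\xAC"));
    }
}
```

Running main prints the original string 北京, which confirms that the put went through unchanged and only the shell's display differs.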

    package zhouls.bigdata.HbaseProject.Pool;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.HTableInterface;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.util.Bytes;

    public class HBaseTest {

        public static void main(String[] args) throws Exception {
            HBaseTest hbasetest = new HBaseTest();
            hbasetest.insertValue();
        }

        public void insertValue() throws Exception {
            HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
            Put put = new Put(Bytes.toBytes("row_05")); // row key is row_05
            put.add(Bytes.toBytes("f"), Bytes.toBytes("address"), Bytes.toBytes("beijng"));
            table.put(put);
            table.close();
        }

        public static Configuration getConfig() {
            Configuration configuration = new Configuration();
            // conf.set("hbase.rootdir", "hdfs:HadoopMaster:9000/hbase");
            configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
            return configuration;
        }
    }

2016-12-11 14:22:14,784 INFO [org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper] - Process identifier=hconnection-0x19d12e87 connecting to ZooKeeper ensemble=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181

2016-12-11 14:22:14,796 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2016-12-11 14:22:14,796 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:host.name=WIN-BQOBV63OBNM

2016-12-11 14:22:14,796 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.version=1.7.0_51

2016-12-11 14:22:14,796 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.vendor=Oracle Corporation

2016-12-11 14:22:14,796 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.home=C:\Program Files\Java\jdk1.7.0_51\jre

2016-12-11 14:22:14,797 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.class.path=D:\Code\MyEclipseJavaCode\HbaseProject\bin;D:\SoftWare\hbase-1.2.3\lib\activation-1.1.jar;D:\SoftWare\hbase-1.2.3\lib\aopalliance-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-i18n-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-kerberos-codec-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\api-asn1-api-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\api-util-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\asm-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\avro-1.7.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-1.7.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-core-1.8.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-cli-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-codec-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\commons-collections-3.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-compress-1.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-configuration-1.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-daemon-1.0.13.jar;D:\SoftWare\hbase-1.2.3\lib\commons-digester-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\commons-el-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-httpclient-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-io-2.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-lang-2.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-logging-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math-2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math3-3.1.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-net-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\disruptor-3.3.0.jar;D:\SoftWare\hbase-1.2.3\lib\findbugs-annotations-1.3.9-1.jar;D:\SoftWare\hbase-1.2.3\lib\guava-12.0.1.jar;D:\SoftWare\hbase-1.2.3\lib\guice-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\guice-servlet-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-annotations-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-auth-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-hdfs-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-a
pp-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-core-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-jobclient-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-shuffle-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-api-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-server-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-client-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-examples-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-external-blockcache-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop2-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-prefix-tree-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-procedure-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-protocol-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-resource-bundle-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-rest-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-shell-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-thrift-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\htrace-core-3.1.0-incubating.jar;D:\SoftWare\hbase-1.2.3\lib\httpclient-4.2.5.jar;D:\SoftWare\hbase-1.2.3\lib\httpcore-4.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-core-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-jaxrs-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-mapper-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-xc-1.9.13.jar;D:\SoftWare
\hbase-1.2.3\lib\jamon-runtime-2.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-compiler-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-runtime-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\javax.inject-1.jar;D:\SoftWare\hbase-1.2.3\lib\java-xmlbuilder-0.4.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-api-2.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-impl-2.2.3-1.jar;D:\SoftWare\hbase-1.2.3\lib\jcodings-1.0.8.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-client-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-core-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-guice-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-json-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-server-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jets3t-0.9.0.jar;D:\SoftWare\hbase-1.2.3\lib\jettison-1.3.3.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-sslengine-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-util-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\joni-2.1.2.jar;D:\SoftWare\hbase-1.2.3\lib\jruby-complete-1.6.8.jar;D:\SoftWare\hbase-1.2.3\lib\jsch-0.1.42.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-api-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\junit-4.12.jar;D:\SoftWare\hbase-1.2.3\lib\leveldbjni-all-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\libthrift-0.9.3.jar;D:\SoftWare\hbase-1.2.3\lib\log4j-1.2.17.jar;D:\SoftWare\hbase-1.2.3\lib\metrics-core-2.2.0.jar;D:\SoftWare\hbase-1.2.3\lib\netty-all-4.0.23.Final.jar;D:\SoftWare\hbase-1.2.3\lib\paranamer-2.3.jar;D:\SoftWare\hbase-1.2.3\lib\protobuf-java-2.5.0.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-api-1.7.7.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-log4j12-1.7.5.jar;D:\SoftWare\hbase-1.2.3\lib\snappy-java-1.0.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\spymemcached-2.11.6.jar;D:\SoftWare\hbase-1.2.3\lib\xmlenc-0.52.jar;D:\SoftWare\hbase-1.2.3\lib\xz-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\zookeeper-3.4.6.jar

2016-12-11 14:22:14,797 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.library.path=C:\Program Files\Java\jdk1.7.0_51\bin;C:\Windows\Sun\Java\bin;C:\Windows\system32;C:\Windows;C:\ProgramData\Oracle\Java\javapath;C:\Python27\;C:\Python27\Scripts;C:\Windows\system32;C:\Windows;C:\Windows\System32\Wbem;C:\Windows\System32\WindowsPowerShell\v1.0\;D:\SoftWare\MATLAB R2013a\runtime\win64;D:\SoftWare\MATLAB R2013a\bin;C:\Program Files (x86)\IDM Computer Solutions\UltraCompare;C:\Program Files\Java\jdk1.7.0_51\bin;C:\Program Files\Java\jdk1.7.0_51\jre\bin;D:\SoftWare\apache-ant-1.9.0\bin;HADOOP_HOME\bin;D:\SoftWare\apache-maven-3.3.9\bin;D:\SoftWare\Scala\bin;D:\SoftWare\Scala\jre\bin;%MYSQL_HOME\bin;D:\SoftWare\MySQL Server\MySQL Server 5.0\bin;D:\SoftWare\apache-tomcat-7.0.69\bin;%C:\Windows\System32;%C:\Windows\SysWOW64;D:\SoftWare\SSH Secure Shell;.

2016-12-11 14:22:14,798 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.io.tmpdir=C:\Users\ADMINI~1\AppData\Local\Temp\

2016-12-11 14:22:14,798 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.compiler=<NA>

2016-12-11 14:22:14,798 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.name=Windows 7

2016-12-11 14:22:14,798 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.arch=amd64

2016-12-11 14:22:14,798 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.version=6.1

2016-12-11 14:22:14,799 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.name=Administrator

2016-12-11 14:22:14,799 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.home=C:\Users\Administrator

2016-12-11 14:22:14,799 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.dir=D:\Code\MyEclipseJavaCode\HbaseProject

2016-12-11 14:22:14,801 INFO [org.apache.zookeeper.ZooKeeper] - Initiating client connection, connectString=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181 sessionTimeout=90000 watcher=hconnection-0x19d12e870x0, quorum=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181, baseZNode=/hbase

2016-12-11 14:22:14,853 INFO [org.apache.zookeeper.ClientCnxn] - Opening socket connection to server HadoopMaster/192.168.80.10:2181. Will not attempt to authenticate using SASL (unknown error)

2016-12-11 14:22:14,855 INFO [org.apache.zookeeper.ClientCnxn] - Socket connection established to HadoopMaster/192.168.80.10:2181, initiating session

2016-12-11 14:22:14,960 INFO [org.apache.zookeeper.ClientCnxn] - Session establishment complete on server HadoopMaster/192.168.80.10:2181, sessionid = 0x1582556e7c5001c, negotiated timeout = 40000

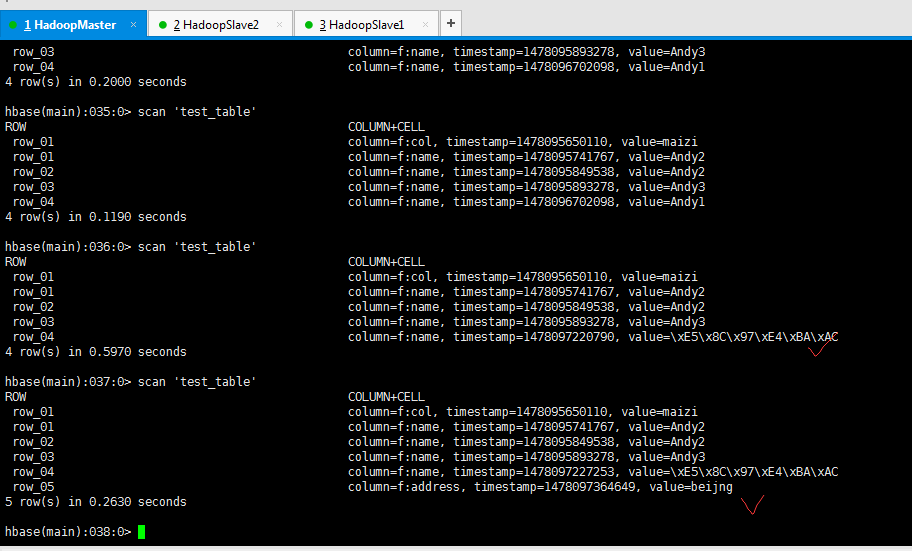

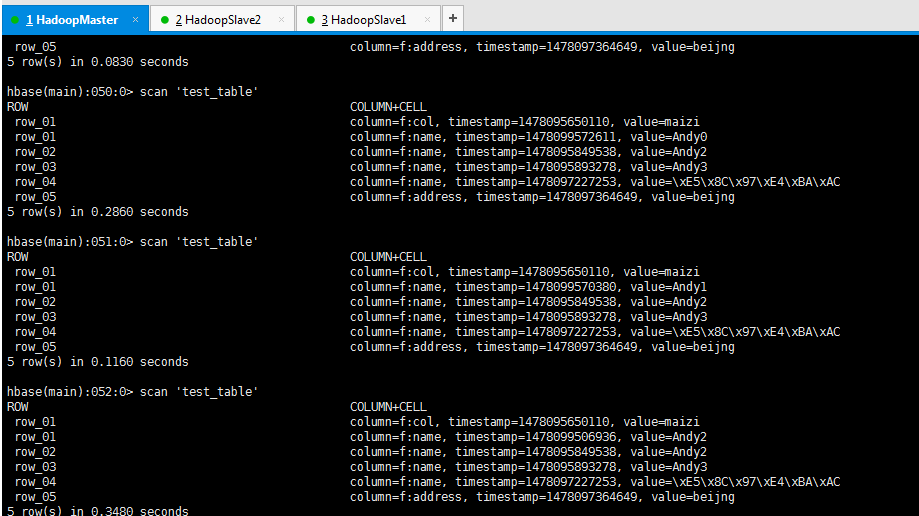

    hbase(main):037:0> scan 'test_table'
    ROW        COLUMN+CELL
     row_01    column=f:col, timestamp=1478095650110, value=maizi
     row_01    column=f:name, timestamp=1478095741767, value=Andy2
     row_02    column=f:name, timestamp=1478095849538, value=Andy2
     row_03    column=f:name, timestamp=1478095893278, value=Andy3
     row_04    column=f:name, timestamp=1478097227253, value=\xE5\x8C\x97\xE4\xBA\xAC
     row_05    column=f:address, timestamp=1478097364649, value=beijng
    5 row(s) in 0.2630 seconds

    hbase(main):038:0>

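That is the idea of the pool: once created, it stays alive and is reused.

Detailed analysis

Here I configured a fixed thread pool with 10 threads.

The idea is simple: you take one and use it, someone else takes one and uses it, and when you are both done you give them back (just like borrowing books from a library).

Someone may ask: if all 10 threads of the fixed pool are already taken and an 11th request arrives, what happens? Is there nothing left to take?

The answer: it simply waits until one is given back. This works on the same principle as a queue.

The reason for doing it this way is simple: with the pool in place, we no longer have to load the configuration and connect to ZooKeeper by hand every time, because that is already written once in TableConnection.java.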
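The queueing behaviour described above can be observed with the JDK alone, without an HBase cluster. The sketch below submits 11 short tasks to a fixed pool of 10 threads and records how many of them were running at the same time. FixedPoolDemo and peakConcurrency are illustrative names that are not part of this project, the 50 ms sleep merely simulates work, and the code assumes Java 8 or later because it uses lambdas.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class FixedPoolDemo {

    /** Runs the given number of short tasks on a fixed pool and returns the highest number that ran at once. */
    public static int peakConcurrency(int poolSize, int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        AtomicInteger running = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();

        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                int now = running.incrementAndGet();
                peak.accumulateAndGet(now, Math::max); // remember the highest value seen
                try {
                    Thread.sleep(50); // simulate some work
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    running.decrementAndGet();
                }
            });
        }

        pool.shutdown(); // accept no new tasks; tasks already queued still run
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return peak.get();
    }

    public static void main(String[] args) {
        System.out.println("peak concurrency: " + peakConcurrency(10, 11));
    }
}
```

The reported peak never exceeds the pool size: the 11th task sits in the executor's internal queue until one of the 10 threads becomes free, which is the "wait until one is given back" behaviour from the analogy.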

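2. Using the pool instead of the approach from the earlier post "HBase Programming API Primer Series: get (client side) (2)"

To help readers appreciate the benefits of the pool in more depth, here is a second example. This is also the approach that is most strongly recommended in real-world development at companies.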

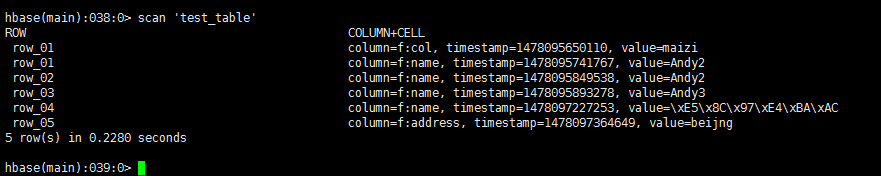

    hbase(main):038:0> scan 'test_table'
    ROW        COLUMN+CELL
     row_01    column=f:col, timestamp=1478095650110, value=maizi
     row_01    column=f:name, timestamp=1478095741767, value=Andy2
     row_02    column=f:name, timestamp=1478095849538, value=Andy2
     row_03    column=f:name, timestamp=1478095893278, value=Andy3
     row_04    column=f:name, timestamp=1478097227253, value=\xE5\x8C\x97\xE4\xBA\xAC
     row_05    column=f:address, timestamp=1478097364649, value=beijng
    5 row(s) in 0.2280 seconds

    hbase(main):039:0>

    package zhouls.bigdata.HbaseProject.Pool;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Get;
    import org.apache.hadoop.hbase.client.HTableInterface;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.util.Bytes;

    public class HBaseTest {

        public static void main(String[] args) throws Exception {
            HBaseTest hbasetest = new HBaseTest();
            // hbasetest.insertValue();
            hbasetest.getValue();
        }

        // public void insertValue() throws Exception {
        //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
        //     Put put = new Put(Bytes.toBytes("row_05")); // row key is row_05
        //     put.add(Bytes.toBytes("f"), Bytes.toBytes("address"), Bytes.toBytes("beijng"));
        //     table.put(put);
        //     table.close();
        // }

        public void getValue() throws Exception {
            HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
            Get get = new Get(Bytes.toBytes("row_03"));
            get.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
            Result rest = table.get(get);
            System.out.println(rest.toString());
            table.close();
        }

        public static Configuration getConfig() {
            Configuration configuration = new Configuration();
            // conf.set("hbase.rootdir", "hdfs:HadoopMaster:9000/hbase");
            configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
            return configuration;
        }
    }

2016-12-11 14:37:12,030 INFO [org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper] - Process identifier=hconnection-0x7660aac9 connecting to ZooKeeper ensemble=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181

2016-12-11 14:37:12,040 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2016-12-11 14:37:12,041 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:host.name=WIN-BQOBV63OBNM

2016-12-11 14:37:12,041 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.version=1.7.0_51

2016-12-11 14:37:12,041 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.vendor=Oracle Corporation

2016-12-11 14:37:12,041 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.home=C:\Program Files\Java\jdk1.7.0_51\jre

2016-12-11 14:37:12,041 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.class.path=D:\Code\MyEclipseJavaCode\HbaseProject\bin;D:\SoftWare\hbase-1.2.3\lib\activation-1.1.jar;D:\SoftWare\hbase-1.2.3\lib\aopalliance-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-i18n-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-kerberos-codec-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\api-asn1-api-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\api-util-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\asm-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\avro-1.7.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-1.7.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-core-1.8.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-cli-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-codec-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\commons-collections-3.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-compress-1.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-configuration-1.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-daemon-1.0.13.jar;D:\SoftWare\hbase-1.2.3\lib\commons-digester-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\commons-el-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-httpclient-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-io-2.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-lang-2.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-logging-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math-2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math3-3.1.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-net-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\disruptor-3.3.0.jar;D:\SoftWare\hbase-1.2.3\lib\findbugs-annotations-1.3.9-1.jar;D:\SoftWare\hbase-1.2.3\lib\guava-12.0.1.jar;D:\SoftWare\hbase-1.2.3\lib\guice-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\guice-servlet-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-annotations-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-auth-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-hdfs-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-a
pp-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-core-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-jobclient-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-shuffle-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-api-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-server-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-client-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-examples-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-external-blockcache-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop2-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-prefix-tree-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-procedure-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-protocol-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-resource-bundle-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-rest-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-shell-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-thrift-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\htrace-core-3.1.0-incubating.jar;D:\SoftWare\hbase-1.2.3\lib\httpclient-4.2.5.jar;D:\SoftWare\hbase-1.2.3\lib\httpcore-4.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-core-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-jaxrs-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-mapper-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-xc-1.9.13.jar;D:\SoftWare
\hbase-1.2.3\lib\jamon-runtime-2.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-compiler-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-runtime-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\javax.inject-1.jar;D:\SoftWare\hbase-1.2.3\lib\java-xmlbuilder-0.4.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-api-2.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-impl-2.2.3-1.jar;D:\SoftWare\hbase-1.2.3\lib\jcodings-1.0.8.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-client-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-core-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-guice-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-json-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-server-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jets3t-0.9.0.jar;D:\SoftWare\hbase-1.2.3\lib\jettison-1.3.3.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-sslengine-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-util-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\joni-2.1.2.jar;D:\SoftWare\hbase-1.2.3\lib\jruby-complete-1.6.8.jar;D:\SoftWare\hbase-1.2.3\lib\jsch-0.1.42.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-api-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\junit-4.12.jar;D:\SoftWare\hbase-1.2.3\lib\leveldbjni-all-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\libthrift-0.9.3.jar;D:\SoftWare\hbase-1.2.3\lib\log4j-1.2.17.jar;D:\SoftWare\hbase-1.2.3\lib\metrics-core-2.2.0.jar;D:\SoftWare\hbase-1.2.3\lib\netty-all-4.0.23.Final.jar;D:\SoftWare\hbase-1.2.3\lib\paranamer-2.3.jar;D:\SoftWare\hbase-1.2.3\lib\protobuf-java-2.5.0.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-api-1.7.7.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-log4j12-1.7.5.jar;D:\SoftWare\hbase-1.2.3\lib\snappy-java-1.0.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\spymemcached-2.11.6.jar;D:\SoftWare\hbase-1.2.3\lib\xmlenc-0.52.jar;D:\SoftWare\hbase-1.2.3\lib\xz-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\zookeeper-3.4.6.jar

2016-12-11 14:37:12,042 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.library.path=C:\Program Files\Java\jdk1.7.0_51\bin;C:\Windows\Sun\Java\bin;C:\Windows\system32;C:\Windows;C:\ProgramData\Oracle\Java\javapath;C:\Python27\;C:\Python27\Scripts;C:\Windows\system32;C:\Windows;C:\Windows\System32\Wbem;C:\Windows\System32\WindowsPowerShell\v1.0\;D:\SoftWare\MATLAB R2013a\runtime\win64;D:\SoftWare\MATLAB R2013a\bin;C:\Program Files (x86)\IDM Computer Solutions\UltraCompare;C:\Program Files\Java\jdk1.7.0_51\bin;C:\Program Files\Java\jdk1.7.0_51\jre\bin;D:\SoftWare\apache-ant-1.9.0\bin;HADOOP_HOME\bin;D:\SoftWare\apache-maven-3.3.9\bin;D:\SoftWare\Scala\bin;D:\SoftWare\Scala\jre\bin;%MYSQL_HOME\bin;D:\SoftWare\MySQL Server\MySQL Server 5.0\bin;D:\SoftWare\apache-tomcat-7.0.69\bin;%C:\Windows\System32;%C:\Windows\SysWOW64;D:\SoftWare\SSH Secure Shell;.

2016-12-11 14:37:12,042 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.io.tmpdir=C:\Users\ADMINI~1\AppData\Local\Temp\

2016-12-11 14:37:12,042 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.compiler=<NA>

2016-12-11 14:37:12,042 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.name=Windows 7

2016-12-11 14:37:12,042 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.arch=amd64

2016-12-11 14:37:12,042 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.version=6.1

2016-12-11 14:37:12,042 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.name=Administrator

2016-12-11 14:37:12,042 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.home=C:\Users\Administrator

2016-12-11 14:37:12,042 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.dir=D:\Code\MyEclipseJavaCode\HbaseProject

2016-12-11 14:37:12,044 INFO [org.apache.zookeeper.ZooKeeper] - Initiating client connection, connectString=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181 sessionTimeout=90000 watcher=hconnection-0x7660aac90x0, quorum=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181, baseZNode=/hbase

2016-12-11 14:37:12,091 INFO [org.apache.zookeeper.ClientCnxn] - Opening socket connection to server HadoopMaster/192.168.80.10:2181. Will not attempt to authenticate using SASL (unknown error)

2016-12-11 14:37:12,094 INFO [org.apache.zookeeper.ClientCnxn] - Socket connection established to HadoopMaster/192.168.80.10:2181, initiating session

2016-12-11 14:37:12,162 INFO [org.apache.zookeeper.ClientCnxn] - Session establishment complete on server HadoopMaster/192.168.80.10:2181, sessionid = 0x1582556e7c5001d, negotiated timeout = 40000

keyvalues={row_03/f:name/1478095893278/Put/vlen=5/seqid=0}
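The line above is the text form of the single cell returned by the Get. It can be read as row key, then family:qualifier, then timestamp, then cell type, then value length and sequence id. As a rough illustration, the sketch below splits such a string into its parts. `CellTextDemo` is a hypothetical helper, not an HBase class, and it assumes the row key contains no `/` and the family contains no `:`.

```java
/** Hypothetical helper that splits the printed text form of one cell into fields. */
final class CellTextDemo {
    final String row;
    final String family;
    final String qualifier;
    final long timestamp;
    final String type;
    final int valueLength;

    private CellTextDemo(String row, String family, String qualifier,
                         long timestamp, String type, int valueLength) {
        this.row = row;
        this.family = family;
        this.qualifier = qualifier;
        this.timestamp = timestamp;
        this.type = type;
        this.valueLength = valueLength;
    }

    /** Expects row/family:qualifier/timestamp/type/vlen=N/seqid=N. */
    static CellTextDemo parse(String text) {
        String[] parts = text.split("/");
        if (parts.length != 6 || !parts[4].startsWith("vlen=")) {
            throw new IllegalArgumentException("unexpected cell text: " + text);
        }
        String[] column = parts[1].split(":", 2);
        if (column.length != 2) {
            throw new IllegalArgumentException("missing family:qualifier in: " + text);
        }
        long timestamp = Long.parseLong(parts[2]);
        int valueLength = Integer.parseInt(parts[4].substring("vlen=".length()));
        return new CellTextDemo(parts[0], column[0], column[1], timestamp, parts[3], valueLength);
    }

    public static void main(String[] args) {
        CellTextDemo cell = parse("row_03/f:name/1478095893278/Put/vlen=5/seqid=0");
        System.out.println(cell.row + " " + cell.family + ":" + cell.qualifier
                + " ts=" + cell.timestamp + " " + cell.type + " vlen=" + cell.valueLength);
    }
}
```

In real client code the same fields are available directly from the `Cell` object through `CellUtil`, so string parsing like this is only useful for reading logs.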

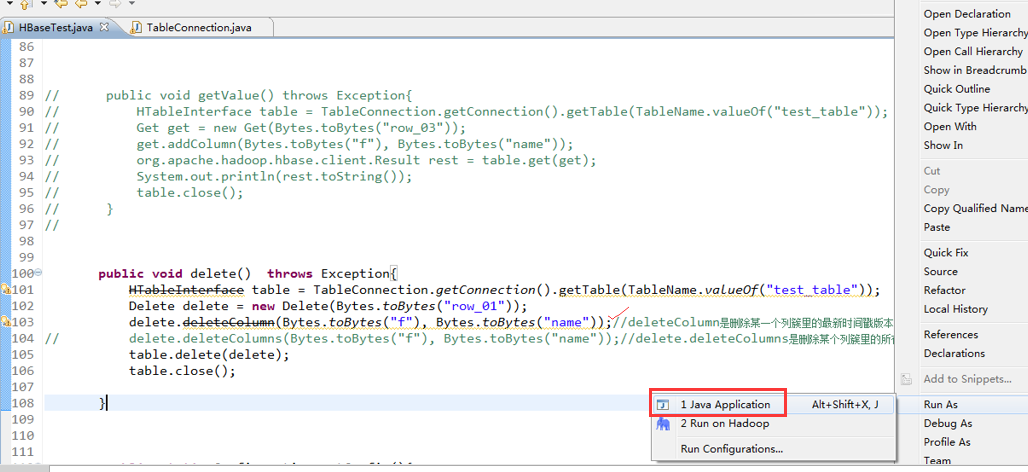

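3.1. Using the "pool" is better than the approach shown in these earlier posts:

HBase Programming API Primer Series: delete (client side) (3)

HBase Programming API Primer: the difference between delete.deleteColumn and delete.deleteColumns (client side) (4)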

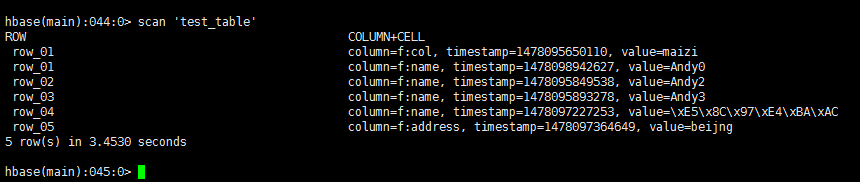

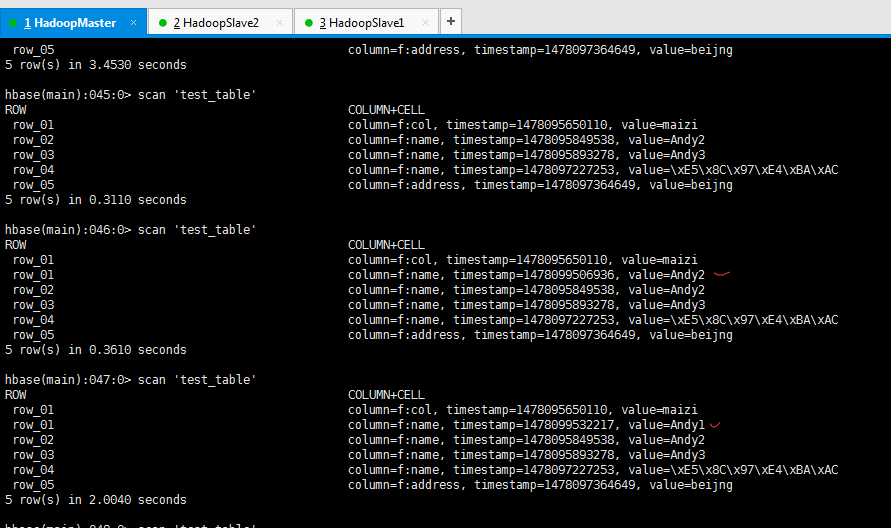

Timestamp versions from oldest to newest: Andy2 -> Andy1 -> Andy0 (Andy2 was written first, Andy0 last).

package zhouls.bigdata.HbaseProject.Pool;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.HTableInterface;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;

public class HBaseTest {

    public static void main(String[] args) throws Exception {
        // Non-pooled versions from the earlier posts:
        // HTable table = new HTable(getConfig(), TableName.valueOf("test_table")); // table name: test_table
        // Put put = new Put(Bytes.toBytes("row_04")); // row key: row_04
        // put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy1")); // family f, qualifier name, value Andy1
        // put.add(Bytes.toBytes("f2"), Bytes.toBytes("name"), Bytes.toBytes("Andy3")); // family f2, qualifier name, value Andy3
        // table.put(put);
        // table.close();

        // Get get = new Get(Bytes.toBytes("row_04"));
        // // get.addColumn(Bytes.toBytes("f1"), Bytes.toBytes("age")); // no column specified, so every column is returned
        // Result rest = table.get(get);
        // System.out.println(rest.toString());
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_2"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("email"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("name"));
        // table.delete(delete);
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_04"));
        // // delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes only the latest timestamp version of the column
        // delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes all timestamp versions of the column
        // table.delete(delete);
        // table.close();

        // Scan scan = new Scan();
        // scan.setStartRow(Bytes.toBytes("row_01")); // start row key is inclusive
        // scan.setStopRow(Bytes.toBytes("row_03")); // stop row key is exclusive
        // scan.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
        // ResultScanner rst = table.getScanner(scan); // scanner over the whole range
        // System.out.println(rst.toString());
        // for (Result next = rst.next(); next != null; next = rst.next()) {
        //     for (Cell cell : next.rawCells()) { // loop over the cells of one row key
        //         System.out.println(next.toString());
        //         System.out.println("family:" + Bytes.toString(CellUtil.cloneFamily(cell)));
        //         System.out.println("col:" + Bytes.toString(CellUtil.cloneQualifier(cell)));
        //         System.out.println("value" + Bytes.toString(CellUtil.cloneValue(cell)));
        //     }
        // }
        // table.close();

        HBaseTest hbasetest = new HBaseTest();
        // hbasetest.insertValue();
        // hbasetest.getValue();
        hbasetest.delete();
    }

    // public void insertValue() throws Exception {
    //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //     Put put = new Put(Bytes.toBytes("row_01")); // row key: row_01
    //     put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy0"));
    //     table.put(put);
    //     table.close();
    // }

    // public void getValue() throws Exception {
    //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //     Get get = new Get(Bytes.toBytes("row_03"));
    //     get.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
    //     Result rest = table.get(get);
    //     System.out.println(rest.toString());
    //     table.close();
    // }

    public void delete() throws Exception {
        // The table handle comes from the shared connection and is closed even if the delete fails.
        try (HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"))) {
            Delete delete = new Delete(Bytes.toBytes("row_01"));
            // delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes only the latest timestamp version of the column
            delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes all timestamp versions of the column
            table.delete(delete);
        }
    }

    public static Configuration getConfig() {
        // HBaseConfiguration.create() also loads the HBase defaults and hbase-site.xml from the classpath.
        Configuration configuration = HBaseConfiguration.create();
        // configuration.set("hbase.rootdir", "hdfs://HadoopMaster:9000/hbase");
        configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
        return configuration;
    }
}

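The difference between delete.deleteColumn and delete.deleteColumns:

deleteColumn deletes only the latest timestamp version of the specified column (family plus qualifier).

deleteColumns deletes all timestamp versions of the specified column.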
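To make the difference concrete, here is a small in-memory model of one column that holds the three versions used in this post. It is only a sketch: `VersionedColumnDemo` and its methods are made-up names, the timestamps 1 to 3 are placeholders, and real HBase records delete markers rather than removing map entries. It also assumes the column family is configured to keep more than one version.

```java
import java.util.Map;
import java.util.TreeMap;

/** Toy model of one HBase column that keeps several timestamped versions. */
final class VersionedColumnDemo {
    // timestamp -> value; TreeMap keeps versions sorted from oldest to newest
    private final TreeMap<Long, String> versions = new TreeMap<>();

    void put(long timestamp, String value) {
        versions.put(timestamp, value);
    }

    /** Models the effect of deleteColumn: only the newest version goes away. */
    void deleteLatestVersion() {
        if (!versions.isEmpty()) {
            versions.remove(versions.lastKey());
        }
    }

    /** Models the effect of deleteColumns: every version goes away. */
    void deleteAllVersions() {
        versions.clear();
    }

    /** The value a plain read would see: the newest remaining version, or null. */
    String latest() {
        Map.Entry<Long, String> newest = versions.lastEntry();
        return newest == null ? null : newest.getValue();
    }

    int versionCount() {
        return versions.size();
    }

    static VersionedColumnDemo sample() {
        VersionedColumnDemo column = new VersionedColumnDemo();
        column.put(1L, "Andy2"); // written first (oldest)
        column.put(2L, "Andy1");
        column.put(3L, "Andy0"); // written last (newest)
        return column;
    }

    public static void main(String[] args) {
        VersionedColumnDemo a = sample();
        a.deleteLatestVersion();
        System.out.println(a.latest()); // Andy1

        VersionedColumnDemo b = sample();
        b.deleteAllVersions();
        System.out.println(b.latest()); // null
    }
}
```

After the single-version delete, a read falls back to the next older value (Andy1); after the all-versions delete, nothing is left for that column.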

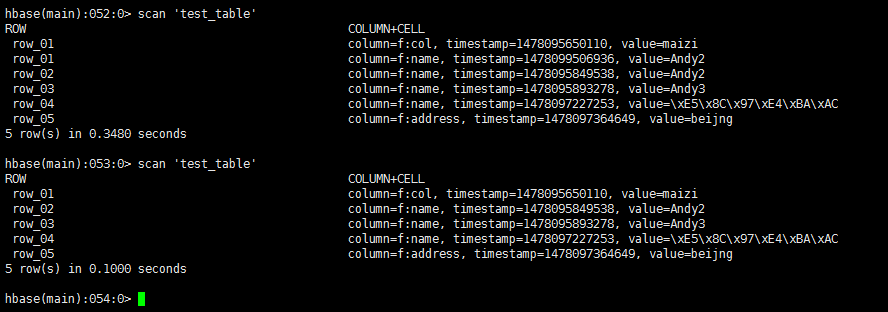

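3.2. Using the "pool" is better than the approach shown in these earlier posts:

HBase Programming API Primer: delete (client side)

HBase Programming API Primer: the difference between delete.deleteColumn and delete.deleteColumns (client side)

Timestamp versions from oldest to newest: Andy2 -> Andy1 -> Andy0 (Andy2 was written first, Andy0 last).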

package zhouls.bigdata.HbaseProject.Pool;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.HTableInterface;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;

public class HBaseTest {

    public static void main(String[] args) throws Exception {
        // Non-pooled versions from the earlier posts:
        // HTable table = new HTable(getConfig(), TableName.valueOf("test_table")); // table name: test_table
        // Put put = new Put(Bytes.toBytes("row_04")); // row key: row_04
        // put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy1")); // family f, qualifier name, value Andy1
        // put.add(Bytes.toBytes("f2"), Bytes.toBytes("name"), Bytes.toBytes("Andy3")); // family f2, qualifier name, value Andy3
        // table.put(put);
        // table.close();

        // Get get = new Get(Bytes.toBytes("row_04"));
        // // get.addColumn(Bytes.toBytes("f1"), Bytes.toBytes("age")); // no column specified, so every column is returned
        // Result rest = table.get(get);
        // System.out.println(rest.toString());
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_2"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("email"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("name"));
        // table.delete(delete);
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_04"));
        // // delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes only the latest timestamp version of the column
        // delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes all timestamp versions of the column
        // table.delete(delete);
        // table.close();

        // Scan scan = new Scan();
        // scan.setStartRow(Bytes.toBytes("row_01")); // start row key is inclusive
        // scan.setStopRow(Bytes.toBytes("row_03")); // stop row key is exclusive
        // scan.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
        // ResultScanner rst = table.getScanner(scan); // scanner over the whole range
        // System.out.println(rst.toString());
        // for (Result next = rst.next(); next != null; next = rst.next()) {
        //     for (Cell cell : next.rawCells()) { // loop over the cells of one row key
        //         System.out.println(next.toString());
        //         System.out.println("family:" + Bytes.toString(CellUtil.cloneFamily(cell)));
        //         System.out.println("col:" + Bytes.toString(CellUtil.cloneQualifier(cell)));
        //         System.out.println("value" + Bytes.toString(CellUtil.cloneValue(cell)));
        //     }
        // }
        // table.close();

        HBaseTest hbasetest = new HBaseTest();
        // hbasetest.insertValue();
        // hbasetest.getValue();
        hbasetest.delete();
    }

    // public void insertValue() throws Exception {
    //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //     Put put = new Put(Bytes.toBytes("row_01")); // row key: row_01
    //     put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy0"));
    //     table.put(put);
    //     table.close();
    // }

    // public void getValue() throws Exception {
    //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //     Get get = new Get(Bytes.toBytes("row_03"));
    //     get.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
    //     Result rest = table.get(get);
    //     System.out.println(rest.toString());
    //     table.close();
    // }

    public void delete() throws Exception {
        // The table handle comes from the shared connection and is closed even if the delete fails.
        try (HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"))) {
            Delete delete = new Delete(Bytes.toBytes("row_01"));
            delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes only the latest timestamp version of the column
            // delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes all timestamp versions of the column
            table.delete(delete);
        }
    }

    public static Configuration getConfig() {
        // HBaseConfiguration.create() also loads the HBase defaults and hbase-site.xml from the classpath.
        Configuration configuration = HBaseConfiguration.create();
        // configuration.set("hbase.rootdir", "hdfs://HadoopMaster:9000/hbase");
        configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
        return configuration;
    }
}

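Timestamp versions from oldest to newest: Andy2 -> Andy1 -> Andy0 (Andy2 was written first, Andy0 last).

The difference between delete.deleteColumn and delete.deleteColumns:

deleteColumn deletes only the latest timestamp version of the specified column (family plus qualifier).

deleteColumns deletes all timestamp versions of the specified column.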

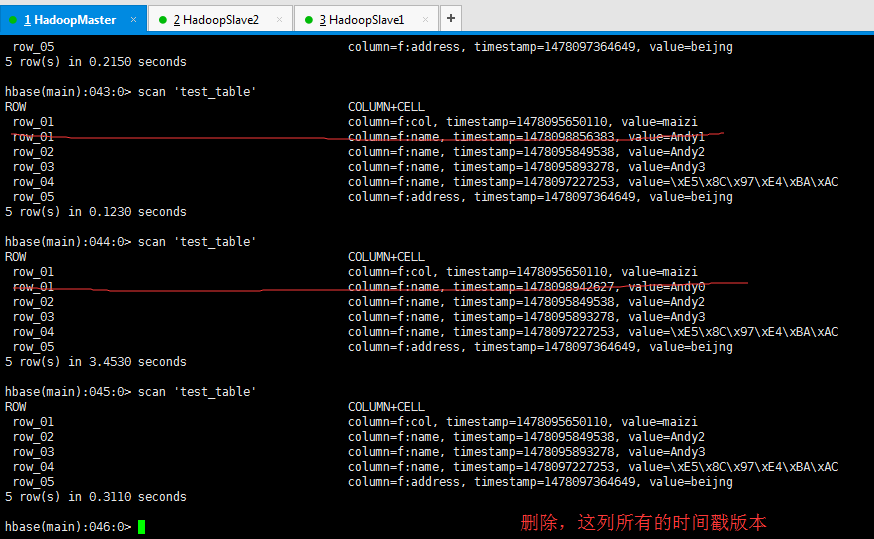

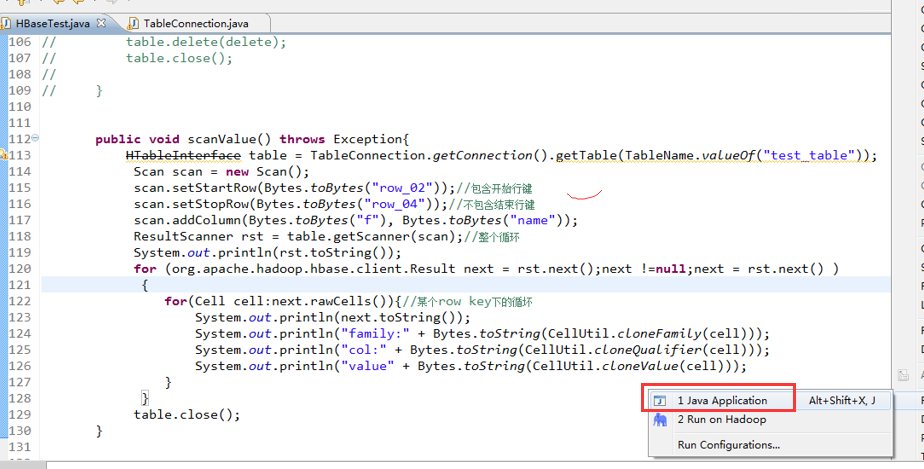

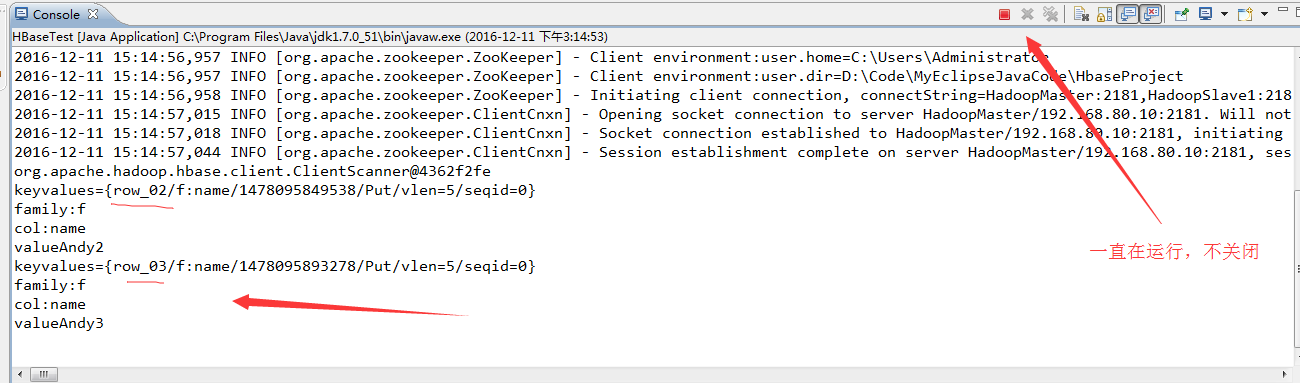

4. Using the "pool" is better than the approach shown in this earlier post:

HBase Programming API Primer: scan (client side)

package zhouls.bigdata.HbaseProject.Pool;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.HTableInterface;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;

public class HBaseTest {

    public static void main(String[] args) throws Exception {
        // Non-pooled versions from the earlier posts:
        // HTable table = new HTable(getConfig(), TableName.valueOf("test_table")); // table name: test_table
        // Put put = new Put(Bytes.toBytes("row_04")); // row key: row_04
        // put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy1")); // family f, qualifier name, value Andy1
        // put.add(Bytes.toBytes("f2"), Bytes.toBytes("name"), Bytes.toBytes("Andy3")); // family f2, qualifier name, value Andy3
        // table.put(put);
        // table.close();

        // Get get = new Get(Bytes.toBytes("row_04"));
        // // get.addColumn(Bytes.toBytes("f1"), Bytes.toBytes("age")); // no column specified, so every column is returned
        // Result rest = table.get(get);
        // System.out.println(rest.toString());
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_2"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("email"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("name"));
        // table.delete(delete);
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_04"));
        // // delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes only the latest timestamp version of the column
        // delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes all timestamp versions of the column
        // table.delete(delete);
        // table.close();

        // Scan scan = new Scan();
        // scan.setStartRow(Bytes.toBytes("row_01")); // start row key is inclusive
        // scan.setStopRow(Bytes.toBytes("row_03")); // stop row key is exclusive
        // scan.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
        // ResultScanner rst = table.getScanner(scan); // scanner over the whole range
        // System.out.println(rst.toString());
        // for (Result next = rst.next(); next != null; next = rst.next()) {
        //     for (Cell cell : next.rawCells()) { // loop over the cells of one row key
        //         System.out.println(next.toString());
        //         System.out.println("family:" + Bytes.toString(CellUtil.cloneFamily(cell)));
        //         System.out.println("col:" + Bytes.toString(CellUtil.cloneQualifier(cell)));
        //         System.out.println("value" + Bytes.toString(CellUtil.cloneValue(cell)));
        //     }
        // }
        // table.close();

        HBaseTest hbasetest = new HBaseTest();
        // hbasetest.insertValue();
        // hbasetest.getValue();
        // hbasetest.delete();
        hbasetest.scanValue();
    }

    // public void insertValue() throws Exception {
    //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //     Put put = new Put(Bytes.toBytes("row_01")); // row key: row_01
    //     put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy0"));
    //     table.put(put);
    //     table.close();
    // }

    // public void getValue() throws Exception {
    //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //     Get get = new Get(Bytes.toBytes("row_03"));
    //     get.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
    //     Result rest = table.get(get);
    //     System.out.println(rest.toString());
    //     table.close();
    // }

    // public void delete() throws Exception {
    //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //     Delete delete = new Delete(Bytes.toBytes("row_01"));
    //     delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes only the latest timestamp version of the column
    //     // delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes all timestamp versions of the column
    //     table.delete(delete);
    //     table.close();
    // }

    public void scanValue() throws Exception {
        Scan scan = new Scan();
        scan.setStartRow(Bytes.toBytes("row_02")); // start row key is inclusive
        scan.setStopRow(Bytes.toBytes("row_04")); // stop row key is exclusive
        scan.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));

        // Both the table handle and the scanner are closed when the block exits.
        try (HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
             ResultScanner rst = table.getScanner(scan)) { // scanner over the whole range
            System.out.println(rst.toString()); // prints the scanner object itself, not its rows
            for (Result next = rst.next(); next != null; next = rst.next()) {
                for (Cell cell : next.rawCells()) { // loop over the cells of one row key
                    System.out.println(next.toString());
                    System.out.println("family:" + Bytes.toString(CellUtil.cloneFamily(cell)));
                    System.out.println("col:" + Bytes.toString(CellUtil.cloneQualifier(cell)));
                    System.out.println("value" + Bytes.toString(CellUtil.cloneValue(cell)));
                }
            }
        }
    }

    public static Configuration getConfig() {
        // HBaseConfiguration.create() also loads the HBase defaults and hbase-site.xml from the classpath.
        Configuration configuration = HBaseConfiguration.create();
        // configuration.set("hbase.rootdir", "hdfs://HadoopMaster:9000/hbase");
        configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
        return configuration;
    }
}

2016-12-11 15:14:56,940 INFO [org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper] - Process identifier=hconnection-0x278a676 connecting to ZooKeeper ensemble=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181

2016-12-11 15:14:56,954 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2016-12-11 15:14:56,954 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:host.name=WIN-BQOBV63OBNM

2016-12-11 15:14:56,954 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.version=1.7.0_51

2016-12-11 15:14:56,954 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.vendor=Oracle Corporation

2016-12-11 15:14:56,954 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.home=C:\Program Files\Java\jdk1.7.0_51\jre

2016-12-11 15:14:56,954 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.class.path=D:\Code\MyEclipseJavaCode\HbaseProject\bin;D:\SoftWare\hbase-1.2.3\lib\activation-1.1.jar;D:\SoftWare\hbase-1.2.3\lib\aopalliance-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-i18n-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-kerberos-codec-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\api-asn1-api-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\api-util-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\asm-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\avro-1.7.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-1.7.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-core-1.8.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-cli-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-codec-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\commons-collections-3.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-compress-1.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-configuration-1.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-daemon-1.0.13.jar;D:\SoftWare\hbase-1.2.3\lib\commons-digester-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\commons-el-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-httpclient-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-io-2.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-lang-2.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-logging-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math-2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math3-3.1.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-net-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\disruptor-3.3.0.jar;D:\SoftWare\hbase-1.2.3\lib\findbugs-annotations-1.3.9-1.jar;D:\SoftWare\hbase-1.2.3\lib\guava-12.0.1.jar;D:\SoftWare\hbase-1.2.3\lib\guice-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\guice-servlet-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-annotations-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-auth-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-hdfs-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-a
pp-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-core-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-jobclient-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-shuffle-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-api-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-server-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-client-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-examples-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-external-blockcache-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop2-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-prefix-tree-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-procedure-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-protocol-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-resource-bundle-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-rest-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-shell-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-thrift-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\htrace-core-3.1.0-incubating.jar;D:\SoftWare\hbase-1.2.3\lib\httpclient-4.2.5.jar;D:\SoftWare\hbase-1.2.3\lib\httpcore-4.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-core-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-jaxrs-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-mapper-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-xc-1.9.13.jar;D:\SoftWare
\hbase-1.2.3\lib\jamon-runtime-2.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-compiler-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-runtime-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\javax.inject-1.jar;D:\SoftWare\hbase-1.2.3\lib\java-xmlbuilder-0.4.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-api-2.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-impl-2.2.3-1.jar;D:\SoftWare\hbase-1.2.3\lib\jcodings-1.0.8.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-client-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-core-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-guice-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-json-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-server-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jets3t-0.9.0.jar;D:\SoftWare\hbase-1.2.3\lib\jettison-1.3.3.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-sslengine-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-util-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\joni-2.1.2.jar;D:\SoftWare\hbase-1.2.3\lib\jruby-complete-1.6.8.jar;D:\SoftWare\hbase-1.2.3\lib\jsch-0.1.42.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-api-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\junit-4.12.jar;D:\SoftWare\hbase-1.2.3\lib\leveldbjni-all-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\libthrift-0.9.3.jar;D:\SoftWare\hbase-1.2.3\lib\log4j-1.2.17.jar;D:\SoftWare\hbase-1.2.3\lib\metrics-core-2.2.0.jar;D:\SoftWare\hbase-1.2.3\lib\netty-all-4.0.23.Final.jar;D:\SoftWare\hbase-1.2.3\lib\paranamer-2.3.jar;D:\SoftWare\hbase-1.2.3\lib\protobuf-java-2.5.0.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-api-1.7.7.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-log4j12-1.7.5.jar;D:\SoftWare\hbase-1.2.3\lib\snappy-java-1.0.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\spymemcached-2.11.6.jar;D:\SoftWare\hbase-1.2.3\lib\xmlenc-0.52.jar;D:\SoftWare\hbase-1.2.3\lib\xz-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\zookeeper-3.4.6.jar

2016-12-11 15:14:56,955 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.library.path=C:\Program Files\Java\jdk1.7.0_51\bin;C:\Windows\Sun\Java\bin;C:\Windows\system32;C:\Windows;C:\ProgramData\Oracle\Java\javapath;C:\Python27\;C:\Python27\Scripts;C:\Windows\system32;C:\Windows;C:\Windows\System32\Wbem;C:\Windows\System32\WindowsPowerShell\v1.0\;D:\SoftWare\MATLAB R2013a\runtime\win64;D:\SoftWare\MATLAB R2013a\bin;C:\Program Files (x86)\IDM Computer Solutions\UltraCompare;C:\Program Files\Java\jdk1.7.0_51\bin;C:\Program Files\Java\jdk1.7.0_51\jre\bin;D:\SoftWare\apache-ant-1.9.0\bin;HADOOP_HOME\bin;D:\SoftWare\apache-maven-3.3.9\bin;D:\SoftWare\Scala\bin;D:\SoftWare\Scala\jre\bin;%MYSQL_HOME\bin;D:\SoftWare\MySQL Server\MySQL Server 5.0\bin;D:\SoftWare\apache-tomcat-7.0.69\bin;%C:\Windows\System32;%C:\Windows\SysWOW64;D:\SoftWare\SSH Secure Shell;.

2016-12-11 15:14:56,956 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.io.tmpdir=C:\Users\ADMINI~1\AppData\Local\Temp\

2016-12-11 15:14:56,956 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.compiler=<NA>

2016-12-11 15:14:56,956 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.name=Windows 7

2016-12-11 15:14:56,956 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.arch=amd64

2016-12-11 15:14:56,956 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.version=6.1

2016-12-11 15:14:56,957 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.name=Administrator

2016-12-11 15:14:56,957 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.home=C:\Users\Administrator

2016-12-11 15:14:56,957 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.dir=D:\Code\MyEclipseJavaCode\HbaseProject

2016-12-11 15:14:56,958 INFO [org.apache.zookeeper.ZooKeeper] - Initiating client connection, connectString=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181 sessionTimeout=90000 watcher=hconnection-0x278a6760x0, quorum=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181, baseZNode=/hbase

2016-12-11 15:14:57,015 INFO [org.apache.zookeeper.ClientCnxn] - Opening socket connection to server HadoopMaster/192.168.80.10:2181. Will not attempt to authenticate using SASL (unknown error)

2016-12-11 15:14:57,018 INFO [org.apache.zookeeper.ClientCnxn] - Socket connection established to HadoopMaster/192.168.80.10:2181, initiating session

2016-12-11 15:14:57,044 INFO [org.apache.zookeeper.ClientCnxn] - Session establishment complete on server HadoopMaster/192.168.80.10:2181, sessionid = 0x1582556e7c50024, negotiated timeout = 40000

org.apache.hadoop.hbase.client.ClientScanner@4362f2fe

keyvalues={row_02/f:name/1478095849538/Put/vlen=5/seqid=0}

family:f

col:name

valueAndy2

keyvalues={row_03/f:name/1478095893278/Put/vlen=5/seqid=0}

family:f

col:name

valueAndy3
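The scan output contains row_02 and row_03 but not row_04, because the start row is inclusive and the stop row is exclusive. The same half-open range can be sketched with a sorted map from the Java standard library. `ScanRangeDemo` is a hypothetical name, and the values for row_01 and row_04 are placeholder sample data; only the row_02 and row_03 values come from the output above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.SortedMap;
import java.util.TreeMap;

/** Models a scan over sorted row keys with an inclusive start and an exclusive stop. */
final class ScanRangeDemo {

    /** Returns the row keys in [startRow, stopRow), in sorted order. */
    static List<String> scan(SortedMap<String, String> table, String startRow, String stopRow) {
        // SortedMap.subMap includes fromKey and excludes toKey, like setStartRow/setStopRow
        return new ArrayList<String>(table.subMap(startRow, stopRow).keySet());
    }

    static SortedMap<String, String> sampleTable() {
        SortedMap<String, String> table = new TreeMap<String, String>();
        table.put("row_01", "sample-1"); // placeholder value
        table.put("row_02", "Andy2");
        table.put("row_03", "Andy3");
        table.put("row_04", "sample-4"); // placeholder value
        return table;
    }

    public static void main(String[] args) {
        System.out.println(scan(sampleTable(), "row_02", "row_04")); // [row_02, row_03]
    }
}
```

HBase orders row keys as raw bytes; for plain ASCII keys such as these, that matches the natural `String` ordering used by `TreeMap`.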

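That covers the walkthrough. The remaining operations are left for you to explore on your own.

Finally, a summary:

In real-world development, make sure you master the thread-pool-backed shared connection.

The complete code follows.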

package zhouls.bigdata.HbaseProject.Pool;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.HTableInterface;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;

public class HBaseTest {

    public static void main(String[] args) throws Exception {
        // Non-pooled versions from the earlier posts:
        // HTable table = new HTable(getConfig(), TableName.valueOf("test_table")); // table name: test_table
        // Put put = new Put(Bytes.toBytes("row_04")); // row key: row_04
        // put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy1")); // family f, qualifier name, value Andy1
        // put.add(Bytes.toBytes("f2"), Bytes.toBytes("name"), Bytes.toBytes("Andy3")); // family f2, qualifier name, value Andy3
        // table.put(put);
        // table.close();

        // Get get = new Get(Bytes.toBytes("row_04"));
        // // get.addColumn(Bytes.toBytes("f1"), Bytes.toBytes("age")); // no column specified, so every column is returned
        // Result rest = table.get(get);
        // System.out.println(rest.toString());
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_2"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("email"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("name"));
        // table.delete(delete);
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_04"));
        // // delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes only the latest timestamp version of the column
        // delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes all timestamp versions of the column
        // table.delete(delete);
        // table.close();

        // Scan scan = new Scan();
        // scan.setStartRow(Bytes.toBytes("row_01")); // start row key is inclusive
        // scan.setStopRow(Bytes.toBytes("row_03")); // stop row key is exclusive
        // scan.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
        // ResultScanner rst = table.getScanner(scan); // scanner over the whole range
        // System.out.println(rst.toString());
        // for (Result next = rst.next(); next != null; next = rst.next()) {
        //     for (Cell cell : next.rawCells()) { // loop over the cells of one row key
        //         System.out.println(next.toString());
        //         System.out.println("family:" + Bytes.toString(CellUtil.cloneFamily(cell)));
        //         System.out.println("col:" + Bytes.toString(CellUtil.cloneQualifier(cell)));
        //         System.out.println("value" + Bytes.toString(CellUtil.cloneValue(cell)));
        //     }
        // }
        // table.close();

        HBaseTest hbasetest = new HBaseTest();
        // hbasetest.insertValue();
        // hbasetest.getValue();
        // hbasetest.delete();
        hbasetest.scanValue();
    }

    // Recommended for production: go through the thread-pool-backed shared connection
    // public void insertValue() throws Exception {
    //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //     Put put = new Put(Bytes.toBytes("row_01")); // row key: row_01
    //     put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy0"));
    //     table.put(put);
    //     table.close();
    // }

    // Recommended for production: go through the thread-pool-backed shared connection
    // public void getValue() throws Exception {
    //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //     Get get = new Get(Bytes.toBytes("row_03"));
    //     get.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));
    //     Result rest = table.get(get);
    //     System.out.println(rest.toString());
    //     table.close();
    // }

    // Recommended for production: go through the thread-pool-backed shared connection
    // public void delete() throws Exception {
    //     HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
    //     Delete delete = new Delete(Bytes.toBytes("row_01"));
    //     delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes only the latest timestamp version of the column
    //     // delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // removes all timestamp versions of the column
    //     table.delete(delete);
    //     table.close();
    // }

    // Recommended for production: go through the thread-pool-backed shared connection
    public void scanValue() throws Exception {
        Scan scan = new Scan();
        scan.setStartRow(Bytes.toBytes("row_02")); // start row key is inclusive
        scan.setStopRow(Bytes.toBytes("row_04")); // stop row key is exclusive
        scan.addColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));

        // Both the table handle and the scanner are closed when the block exits.
        try (HTableInterface table = TableConnection.getConnection().getTable(TableName.valueOf("test_table"));
             ResultScanner rst = table.getScanner(scan)) { // scanner over the whole range
            System.out.println(rst.toString()); // prints the scanner object itself, not its rows
            for (Result next = rst.next(); next != null; next = rst.next()) {
                for (Cell cell : next.rawCells()) { // loop over the cells of one row key
                    System.out.println(next.toString());
                    System.out.println("family:" + Bytes.toString(CellUtil.cloneFamily(cell)));
                    System.out.println("col:" + Bytes.toString(CellUtil.cloneQualifier(cell)));
                    System.out.println("value" + Bytes.toString(CellUtil.cloneValue(cell)));
                }
            }
        }
    }

    public static Configuration getConfig() {
        // HBaseConfiguration.create() also loads the HBase defaults and hbase-site.xml from the classpath.
        Configuration configuration = HBaseConfiguration.create();
        // configuration.set("hbase.rootdir", "hdfs://HadoopMaster:9000/hbase");
        configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
        return configuration;
    }
}

package zhouls.bigdata.HbaseProject.Pool;

import java.io.IOException;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.client.HConnection;

import org.apache.hadoop.hbase.client.HConnectionManager;

public class TableConnection {

private TableConnection(){

}

private static HConnection connection = null;

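public static synchronized HConnection getConnection(){// synchronized so that concurrent callers create only one connection

if(connection == null){

ExecutorService pool = Executors.newFixedThreadPool(10);// fixed-size thread pool used by the connection

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum","HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");

try{

connection = HConnectionManager.createConnection(conf,pool);// create the shared connection from the configuration and the thread pool

}catch (IOException e){

pool.shutdown();// do not leak the pool threads if the connection could not be created

throw new IllegalStateException("Failed to create the HBase connection", e);// fail loudly instead of silently returning null

}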

}

return connection;

}

}
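TableConnection creates its connection lazily, on the first call, and then hands the same object to every caller. One way to keep that kind of lazy creation safe when several threads ask at the same moment is a synchronized accessor. The sketch below shows the idea with a stand-in object instead of a real connection. SingletonSketch, SharedResource and SharedResourceHolder are hypothetical names, not HBase classes.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Demonstrates a lazily created shared object that is built only once under concurrent access.
final class SingletonSketch {

    // Starts the given number of threads, lets each one fetch the shared object,
    // and returns how many times the object was actually constructed.
    static int createdAfterConcurrentAccess(int threads) {
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> SharedResourceHolder.get());
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for workers", e);
            }
        }
        return SharedResource.CREATED.get();
    }

    public static void main(String[] args) {
        System.out.println("constructed " + createdAfterConcurrentAccess(8) + " time(s)");
    }
}

// Stand-in for an expensive object such as a connection (hypothetical).
final class SharedResource {
    static final AtomicInteger CREATED = new AtomicInteger();

    SharedResource() {
        CREATED.incrementAndGet();
    }
}

final class SharedResourceHolder {
    private static SharedResource instance;

    private SharedResourceHolder() {
    }

    // synchronized: only one thread at a time can run the null check and the construction.
    static synchronized SharedResource get() {
        if (instance == null) {
            instance = new SharedResource();
        }
        return instance;
    }
}
```

The lock is taken on every call, which is simple and usually cheap enough for an accessor that is called once per table lookup. A static holder class or an enum singleton would be alternatives if that lock ever showed up in profiling.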
