[CVPR2015] Is object localization for free? – Weakly-supervised learning with convolutional neural networks论文笔记

p.p1 { margin: 0.0px 0.0px 0.0px 0.0px; font: 13.0px "Helvetica Neue"; color: #323333 }

p.p2 { margin: 0.0px 0.0px 0.0px 0.0px; font: 13.0px "Helvetica Neue"; color: #042eee }

span.s1 { }

span.s2 { text-decoration: underline }

Is object localization for free? –Weakly-supervised learning with convolutional neural networks. Maxime Oquab, Leon Bottou, Ivan Laptev, Josef Sivic

http://www.di.ens.fr/~josef/publications/Oquab15.pdf

p.p1 { margin: 0.0px 0.0px 0.0px 0.0px; font: 15.0px "Helvetica Neue"; color: #323333 }

p.p2 { margin: 0.0px 0.0px 0.0px 0.0px; font: 13.0px "Helvetica Neue"; color: #323333 }

li.li2 { margin: 0.0px 0.0px 0.0px 0.0px; font: 13.0px "Helvetica Neue"; color: #323333 }

span.s1 { }

span.s2 { background-color: #fefa00 }

ul.ul1 { list-style-type: disc }

ul.ul2 { list-style-type: circle }

亮点

- 一个好名字给了让读者开始阅读的理由

- global max pooling over sliding window的定位方法值得借鉴

方法

本文的目标是:设计一个弱监督分类网络,注意本文的目标主要是提升分类。因为是2015年的文章,方法比较简单原始。

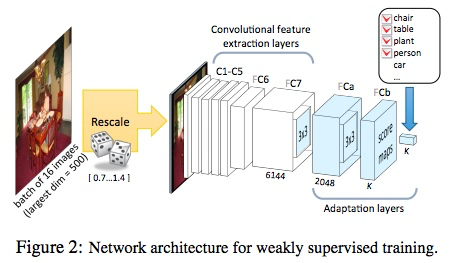

Following three modifications to a classification network.

- Treat the fully connected layers as convolutions, which allows us to deal with nearly arbitrary-sized images as input.

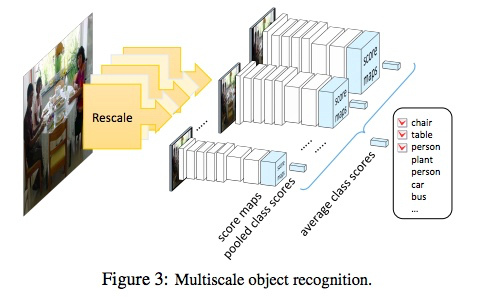

- The aim is to apply the network to bigger images in a sliding window manner thus extending its output to n×m× K, where n and m denote the number of sliding window positions in the x- and y- direction in the image, respectively.

- 3xhxw —> convs —> kxmxn (k: number of classes)

- Explicitly search for the highest scoring object position in the image by adding a single global max-pooling layer at the output.

- kxmxn —> kx1x1

- The max-pooling operation hypothesizes the location of the object in the image at the position with the maximum score

- Use a cost function that can explicitly model multiple objects present in the image.

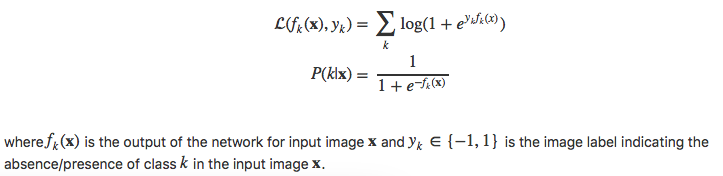

因为图中可能有很多物体,所以多类的分类loss不适用。作者把这个任务视为多个二分类问题,loss function和分类的分数如下

p.p1 { margin: 0.0px 0.0px 0.0px 0.0px; font: 13.0px "Helvetica Neue"; color: #323333 }

p.p2 { margin: 0.0px 0.0px 0.0px 0.0px; font: 13.0px "Helvetica Neue"; color: #323333; min-height: 15.0px }

p.p3 { margin: 0.0px 0.0px 0.0px 0.0px; font: 15.0px "Helvetica Neue"; color: #323333 }

li.li1 { margin: 0.0px 0.0px 0.0px 0.0px; font: 13.0px "Helvetica Neue"; color: #323333 }

span.s1 { }

ul.ul1 { list-style-type: disc }

training

muti-scale test

实验

classification

- mAP on VOC 2012 test: +3.1% compared with [56]

- mAP on VOC 2012 test: +7.6% compared with kx1x1 output and single scale training

- mAP on VOC: +2.6% compared with RCNN

- mAP on COCO 62.8%

Localisation

- Metric: if the maximal response across scales falls within the ground truth bounding box of an object of the same class within 18 pixels tolerance, we label the predicted location as correct. If not, then we count the response as a false positive (it hit the background), and we also increment the false negative count (no object was found).

- metric on VOC 2012 val: -0.3% compared with RCNN

- mAP on COCO 41.2%

缺点

- 定位评测的metric不具有权威性

- max pooling改为average pooling会不会对于多个instance的情况更好一些

[CVPR2015] Is object localization for free? – Weakly-supervised learning with convolutional neural networks论文笔记的更多相关文章

- Coursera, Deep Learning 4, Convolutional Neural Networks, week3, Object detection

学习目标 Understand the challenges of Object Localization, Object Detection and Landmark Finding Underst ...

- 论文笔记之:Spatially Supervised Recurrent Convolutional Neural Networks for Visual Object Tracking

Spatially Supervised Recurrent Convolutional Neural Networks for Visual Object Tracking arXiv Paper ...

- tensorfolw配置过程中遇到的一些问题及其解决过程的记录(配置SqueezeDet: Unified, Small, Low Power Fully Convolutional Neural Networks for Real-Time Object Detection for Autonomous Driving)

今天看到一篇关于检测的论文<SqueezeDet: Unified, Small, Low Power Fully Convolutional Neural Networks for Real- ...

- [CVPR2017] Weakly Supervised Cascaded Convolutional Networks论文笔记

p.p1 { margin: 0.0px 0.0px 0.0px 0.0px; font: 14.0px "Helvetica Neue"; color: #042eee } p. ...

- A brief introduction to weakly supervised learning(简要介绍弱监督学习)

by 南大周志华 摘要 监督学习技术通过学习大量训练数据来构建预测模型,其中每个训练样本都有其对应的真值输出.尽管现有的技术已经取得了巨大的成功,但值得注意的是,由于数据标注过程的高成本,很多任务很难 ...

- [CVPR 2016] Weakly Supervised Deep Detection Networks论文笔记

p.p1 { margin: 0.0px 0.0px 0.0px 0.0px; font: 13.0px "Helvetica Neue"; color: #323333 } p. ...

- 课程四(Convolutional Neural Networks),第三 周(Object detection) —— 0.Learning Goals

Learning Goals: Understand the challenges of Object Localization, Object Detection and Landmark Find ...

- [C4W3] Convolutional Neural Networks - Object detection

第三周 目标检测(Object detection) 目标定位(Object localization) 大家好,欢迎回来,这一周我们学习的主要内容是对象检测,它是计算机视觉领域中一个新兴的应用方向, ...

- 论文笔记(7):Constrained Convolutional Neural Networks for Weakly Supervised Segmentation

UC Berkeley的Deepak Pathak 使用了一个具有图像级别标记的训练数据来做弱监督学习.训练数据中只给出图像中包含某种物体,但是没有其位置信息和所包含的像素信息.该文章的方法将imag ...

随机推荐

- Android源码浅析(一)——VMware Workstation Pro和Ubuntu Kylin 16.04 LTS安装配置

Android源码浅析(一)--VMware Workstation Pro和Ubuntu Kylin 16.04 LTS安装配置 最近地方工作,就是接触源码的东西了,所以好东西还是要分享,系列开了这 ...

- Android 添加library的时候出错添加不上

在向android工程中导入library的时候,会和出现导入不成功,打开查看添加library界面,会发现你添加的library的路径出现D:/work/...?类似的情况,但是别的工程使用的时候又 ...

- LeetCode之“链表”:Reorder List

题目链接 题目要求: Given a singly linked list L: L0→L1→…→Ln-1→Ln, reorder it to: L0→Ln→L1→Ln-1→L2→Ln-2→… You ...

- PostgreSQL两种分页方法查询时间比较

数据库中存了3000W条数据,两种分页查询测试时间 第一种 SELECT * FROM test_table WHERE i_id> limit 100; Time: 0.016s 第二种 SE ...

- Android Preference详解

转载请标明出处:ttp://blog.csdn.net/sk719887916/article/details/42437253 Preference 用来管理应用程序的偏好设置和保证使用这些的每个应 ...

- Bookmarkable Pages

Build a Bookmarkable Edit Page with JDeveloper 11g Purpose In this tutorial, you use Oracle JDevel ...

- 机房收费系统之导出Excel

刚开始接触机房收费的时候,连上数据库,配置ODBC,登陆进去,那窗体叫一个多,不由地有种害怕的感觉,但是有人说,每天努力一点点,就会进步一点点,不会的就会少一点点,会的就会多一点点.. ...

- MQ队列管理器搭建(一)

多应用单MQ使用场景 如上图所示,MQ独立安装,或者与其中一个应用同处一机.Application1与Application2要进行通信,但因为跨系统,所以引入中间件来实现需求. Applicat ...

- eclipse工程当中的.classpath 和.project文件什么作用?

.project是项目文件,项目的结构都在其中定义,比如lib的位置,src的位置,classes的位置.classpath的位置定义了你这个项目在编译时所使用的$CLASSPATH .classpa ...

- selenium获取百度账户cookies

[效果图] 效果图最后即为获取到的cookies,百度账户的cookies首次获取,需要手动登录,之后就可以注入cookies,实现免密登录. [代码] public class baiduCooki ...