Keras vs. PyTorch

We strongly recommend that you pick either Keras or PyTorch. These are powerful tools that are enjoyable to learn and experiment with. We know them both from the teacher’s and the student’s perspective. Piotr has delivered corporate workshops on both, while Rafał is currently learning them. (See the discussion on Hacker News and Reddit.)

Introduction

Keras and PyTorch are open-source frameworks for deep learning gaining much popularity among data scientists.

- Keras is a high-level API capable of running on top of TensorFlow, CNTK, Theano, or MXNet (or as tf.contrib within TensorFlow). Since its initial release in March 2015, it has gained favor for its ease of use and syntactic simplicity, facilitating fast development. It’s supported by Google.

- PyTorch, released in October 2016, is a lower-level API focused on direct work with array expressions. It has gained immense interest in the last year, becoming a preferred solution for academic research, and applications of deep learning requiring optimizing custom expressions. It’s supported by Facebook.

Before we discuss the nitty-gritty details of both frameworks (well described in this Reddit thread), we want to preemptively disappoint you – there’s no straight answer to the question ‘which one is better?’. The choice ultimately comes down to your technical background, needs, and expectations. This article aims to give you a better idea of which of the two frameworks you should pick first.

TL;DR:

Keras may be easier to get into and experiment with standard layers, in a plug & play spirit.

PyTorch offers a lower-level approach and more flexibility for the more mathematically-inclined users.

Ok, but why not any other framework?

TensorFlow is a popular deep learning framework. Raw TensorFlow, however, abstracts computational graph-building in a way that may seem both verbose and not-explicit. Once you know the basics of deep learning, that is not a problem. But for anyone new to it, sticking with Keras as its officially-supported interface should be easier and more productive.

[Edit: Recently, TensorFlow introduced Eager Execution, enabling the execution of any Python code and making the model training more intuitive for beginners (especially when used with tf.keras API).]

While you may find some Theano tutorials, it is no longer in active development. Caffe lacks flexibility, while Torch uses Lua (though its rewrite is awesome :)). MXNet, Chainer, and CNTK are currently not widely popular.

Keras vs. PyTorch: Ease of use and flexibility

Keras and PyTorch differ in terms of the level of abstraction they operate on.

Keras is a higher-level framework wrapping commonly used deep learning layers and operations into neat, lego-sized building blocks, abstracting the deep learning complexities away from the precious eyes of a data scientist.

PyTorch offers a comparatively lower-level environment for experimentation, giving the user more freedom to write custom layers and look under the hood of numerical optimization tasks. Development of more complex architectures is more straightforward when you can use the full power of Python and access the guts of all functions used. This, naturally, comes at the price of verbosity.

Consider this head-to-head comparison of how a simple convolutional network is defined in Keras and PyTorch:

Keras

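    from keras.models import Sequential
    from keras.layers import Conv2D, MaxPool2D, Flatten, Dense

    # Two conv/pool stages followed by a 10-way softmax classifier.
    model = Sequential()
    model.add(Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)))
    model.add(MaxPool2D())
    model.add(Conv2D(16, (3, 3), activation='relu'))
    model.add(MaxPool2D())
    model.add(Flatten())
    model.add(Dense(10, activation='softmax'))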

PyTorch

    import torch.nn as nn
    import torch.nn.functional as F

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = nn.Conv2d(3, 32, 3)
            self.conv2 = nn.Conv2d(32, 16, 3)
            self.fc1 = nn.Linear(16 * 6 * 6, 10)
            self.pool = nn.MaxPool2d(2, 2)

        def forward(self, x):
            x = self.pool(F.relu(self.conv1(x)))
            x = self.pool(F.relu(self.conv2(x)))
            x = x.view(-1, 16 * 6 * 6)  # flatten to (batch, features)
            x = F.log_softmax(self.fc1(x), dim=-1)
            return x

    model = Net()
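The `16*6*6` passed to `nn.Linear` in the PyTorch version is the flattened size of the feature map after two convolution and pooling stages on a 32×32 input. As a rough, framework-free sketch of that arithmetic (the helper names `conv_out` and `pool_out` are made up for illustration, and we assume unpadded stride-1 convolutions and 2×2 pooling, as in the network above):

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution (hypothetical helper, floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Spatial size after unpadded max pooling (hypothetical helper)."""
    return (size - kernel) // stride + 1


size = 32
size = pool_out(conv_out(size, 3))  # 32 -> 30 -> 15
size = pool_out(conv_out(size, 3))  # 15 -> 13 -> 6
flat_features = 16 * size * size    # 16 channels from the second convolution

print(size, flat_features)
```

Keras infers this number on its own when it builds `Flatten()` and `Dense`, which is one reason the Keras version is shorter.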

The code snippets above give a little taste of the differences between the two frameworks. As for the model training itself – it requires around 20 lines of code in PyTorch, compared to a single line in Keras. Enabling GPU acceleration is handled implicitly in Keras, while PyTorch requires us to specify when to transfer data between the CPU and GPU.
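To give a feel for the "20 lines versus one line" contrast, here is a minimal sketch of a PyTorch training loop. The tiny linear model, the random tensors, and the hyperparameters are assumptions chosen only to keep the example self-contained; they are not taken from any benchmark. Note the explicit `.to(device)` calls that move the model and each batch to the GPU when one is available.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Assumption: a stand-in model and random data, just to make the loop runnable.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10), nn.LogSoftmax(dim=-1))
inputs = torch.randn(64, 3, 32, 32)
targets = torch.randint(0, 10, (64,))

# Device placement is explicit in PyTorch.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = model.to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.001)

batch_size = 16
losses = []
for epoch in range(3):
    model.train()
    for start in range(0, len(inputs), batch_size):
        x = inputs[start:start + batch_size].to(device)
        y = targets[start:start + batch_size].to(device)

        optimizer.zero_grad()            # clear gradients from the previous step
        loss = F.nll_loss(model(x), y)   # forward pass and loss
        loss.backward()                  # backpropagation
        optimizer.step()                 # parameter update
    losses.append(loss.item())           # loss of the last batch in this epoch

print(losses)
```

In Keras, the roughly equivalent step after `model.compile(...)` is a single call such as `model.fit(x_train, y_train, epochs=3, batch_size=16)`, where `x_train` and `y_train` are placeholder names.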

If you’re a beginner, the high-levelness of Keras may seem like a clear advantage. Keras is indeed more readable and concise, allowing you to build your first end-to-end deep learning models faster, while skipping the implementational details. Glossing over these details, however, limits the opportunities for exploration of the inner workings of each computational block in your deep learning pipeline. Working with PyTorch may offer you more food for thought regarding the core deep learning concepts, like backpropagation, and the rest of the training process.

That said, Keras, being much simpler than PyTorch, is by no means a toy – it’s a serious deep learning tool used by beginners and seasoned data scientists alike.

For instance, in the Dstl Satellite Imagery Feature Detection Kaggle competition, the 3 best teams used Keras in their solutions, while our deepsense.ai team (4th place) used a combination of PyTorch and (to a lesser extent) Keras.

Whether your applications of deep learning will require flexibility beyond what pure Keras has to offer is worth considering. Depending on your needs, Keras might just be that sweet spot following the rule of least power.

SUMMARY

- Keras – more concise, simpler API

- PyTorch – more flexible, encouraging deeper understanding of deep learning concepts

Keras vs. PyTorch: Popularity and access to learning resources

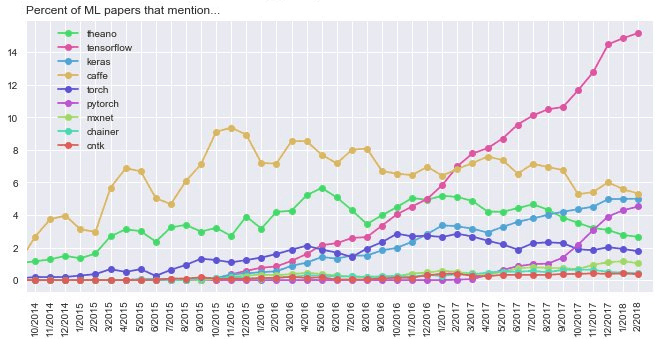

A framework’s popularity is not only a proxy of its usability. It is also important for community support – tutorials, repositories with working code, and discussion groups. As of June 2018, Keras and PyTorch are both enjoying growing popularity, both on GitHub and in arXiv papers (note that most papers mentioning Keras also mention its TensorFlow backend). According to a KDnuggets survey, Keras and PyTorch are the fastest growing data science tools.

Unique mentions of deep learning frameworks in arxiv papers (full text) over time, based on 43K ML papers over last 6 years. So far TF mentioned in 14.3% of all papers, PyTorch 4.7%, Keras 4.0%, Caffe 3.8%, Theano 2.3%, Torch 1.5%, mxnet/chainer/cntk <1%. (cc @fchollet) pic.twitter.com/YOYAvc33iN

– Andrej Karpathy (@karpathy), March 10, 2018

While both frameworks have satisfactory documentation, PyTorch enjoys stronger community support – its discussion board is a great place to visit if you get stuck (you will get stuck) and the documentation or StackOverflow don’t provide you with the answers you need.

Anecdotally, we found well-annotated beginner-level deep learning courses on a given network architecture easier to come across for Keras than for PyTorch, making the former somewhat more accessible for beginners. The readability of code and the unparalleled ease of experimentation Keras offers may make it the more widely covered framework among deep learning enthusiasts, tutors and hardcore Kaggle winners.

For examples of great Keras resources and deep learning courses, see “Starting deep learning hands-on: image classification on CIFAR-10” by Piotr Migdał and “Deep Learning with Python” – a book written by François Chollet, the creator of Keras himself. For PyTorch resources, we recommend the official tutorials, which offer a slightly more challenging, comprehensive approach to learning the inner workings of neural networks. For a concise overview of the PyTorch API, see this article.

SUMMARY

- Keras – Great access to tutorials and reusable code

- PyTorch – Excellent community support and active development

Keras vs. PyTorch: Debugging and introspection

Keras, which wraps a lot of computational chunks in abstractions, makes it harder to pin down the exact line that causes you trouble.

PyTorch, being the more verbose framework, allows us to follow the execution of our script, line by line. It’s like debugging NumPy – we have easy access to all objects in our code and are able to use print statements (or any standard Pythonic debugging) to see where our recipe failed.

A Keras user creating a standard network has an order of magnitude fewer opportunities to go wrong than does a PyTorch user. But once something goes wrong, it hurts a lot and often it’s difficult to locate the actual line of code that breaks. PyTorch offers a more direct, unconvoluted debugging experience regardless of model complexity. Moreover, when in doubt, you can readily look up the PyTorch repo to see its readable code.
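As a small illustration of the "print statement" style of debugging, the sketch below re-creates the convolutional network from the earlier comparison and records the tensor shape after every stage of `forward`. The class name `DebugNet`, the `_log` helper, and the random input batch are our own additions for this example.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class DebugNet(nn.Module):
    """The small conv net from the comparison, with shape logging added."""

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 32, 3)
        self.conv2 = nn.Conv2d(32, 16, 3)
        self.fc1 = nn.Linear(16 * 6 * 6, 10)
        self.pool = nn.MaxPool2d(2, 2)
        self.seen_shapes = []  # hypothetical attribute used only for inspection

    def _log(self, label, x):
        # Ordinary Python: print and remember the shape at this point.
        self.seen_shapes.append((label, tuple(x.shape)))
        print(label, tuple(x.shape))

    def forward(self, x):
        self._log("input", x)
        x = self.pool(F.relu(self.conv1(x)))
        self._log("conv1+pool", x)
        x = self.pool(F.relu(self.conv2(x)))
        self._log("conv2+pool", x)
        x = x.view(-1, 16 * 6 * 6)
        self._log("flattened", x)
        return F.log_softmax(self.fc1(x), dim=-1)


net = DebugNet()
out = net(torch.randn(2, 3, 32, 32))  # assumption: a random batch of two images
```

Because `forward` is plain Python, the same spot could just as well hold a breakpoint (for example `import pdb; pdb.set_trace()`) instead of a print.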

SUMMARY

- PyTorch – way better debugging capabilities

- Keras – (potentially) less frequent need to debug simple networks

Keras vs. PyTorch: Exporting models and cross-platform portability

What are the options for exporting and deploying your trained models in production?

PyTorch saves models in Pickles, which are Python-based and not portable, whereas Keras takes advantage of a safer approach with JSON + H5 files (though saving models with custom layers in Keras is generally more difficult). There is also Keras in R, in case you need to collaborate with a data analyst team using R.

Running on TensorFlow, Keras enjoys a wider selection of solid options for deployment to mobile platforms through TensorFlow for Mobile and TensorFlow Lite. Your cool web apps can be deployed with TensorFlow.js or keras.js. As an example, see this deep learning-powered browser plugin detecting trypophobia triggers, developed by Piotr and his students.
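To illustrate why pickle-based files are tied to Python while JSON is not, here is a small standard-library sketch. It does not call PyTorch or Keras at all: the `OrderedDict` of lists merely stands in for a model's weights, and the layer names are invented for the example.

```python
import json
import pickle
from collections import OrderedDict

# Assumption: a toy "state dict" with made-up layer names and plain-list weights.
state = OrderedDict([
    ("fc1.weight", [[0.1, -0.2], [0.3, 0.4]]),
    ("fc1.bias", [0.0, 0.1]),
])

# Pickle stores instructions for rebuilding Python objects, including the
# import path of each class, so only a Python process can load the result.
pickled = pickle.dumps(state)
names_python_class = b"OrderedDict" in pickled

# JSON stores plain data, readable from any language with a JSON parser.
as_json = json.dumps(state)
restored = json.loads(as_json)

print(names_python_class)
print(as_json)
```

Loading a pickle also executes whatever reconstruction code the file names, which is why pickles from untrusted sources are considered unsafe.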

Exporting PyTorch models is more taxing due to its Python code, and currently the widely recommended approach is to start by translating your PyTorch model to Caffe2 using ONNX.

SUMMARY

- Keras – more deployment options (directly and through the TensorFlow backend), easier model export.

Keras vs. PyTorch: Performance

Donald Knuth famously said:

Premature optimization is the root of all evil (or at least most of it) in programming.

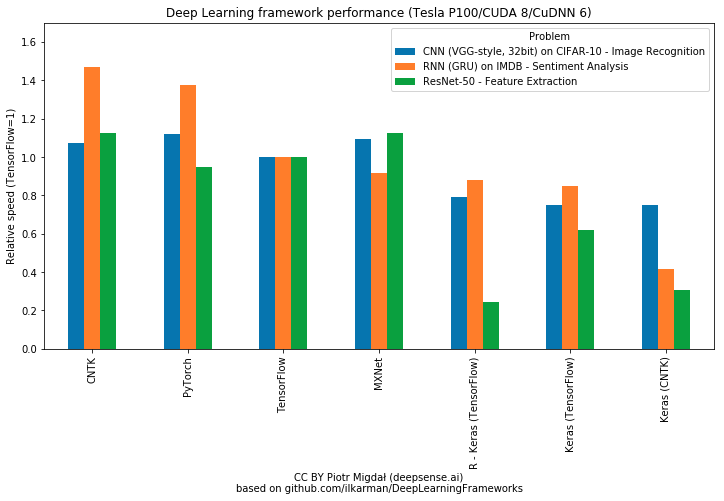

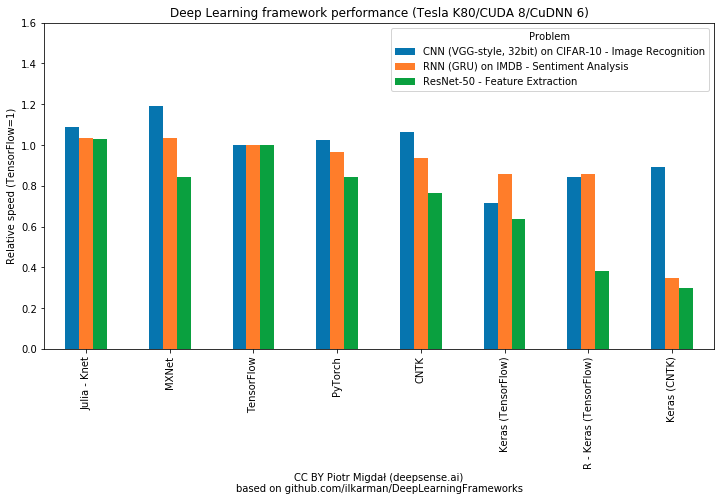

In most instances, differences in speed benchmarks should not be the main criterion for choosing a framework, especially when it is being learned. GPU time is much cheaper than a data scientist’s time. Moreover, while learning, performance bottlenecks will be caused by failed experiments, unoptimized networks, and data loading; not by the raw framework speed. Yet, for completeness, we feel compelled to touch on this subject. We recommend these two comparisons:

- TensorFlow, Keras and PyTorch comparison by Wojtek Rosiński

- Comparing Deep Learning Frameworks: A Rosetta Stone Approach by Microsoft (make sure to check notebooks to get the taste of different frameworks). For a detailed explanation of the multi-GPU framework comparisons, see this article.

PyTorch is as fast as TensorFlow, and potentially faster for Recurrent Neural Networks. Keras is consistently slower. As the author of the first comparison points out, gains in computational efficiency of higher-performing frameworks (i.e. PyTorch & TensorFlow) will in most cases be outweighed by the fast development environment, and the ease of experimentation Keras offers.

SUMMARY

- As far as training speed is concerned, PyTorch outperforms Keras

Keras vs. PyTorch: Conclusion

Keras and PyTorch are both excellent choices for your first deep learning framework to learn.

If you’re a mathematician, researcher, or otherwise inclined to understand what your model is really doing, consider choosing PyTorch. It really shines where more advanced customization (and debugging thereof) is required (e.g. object detection with YOLOv3 or LSTMs with attention) or when we need to optimize array expressions other than neural networks (e.g. matrix decompositions or word2vec algorithms).
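As a sketch of what "optimizing array expressions other than neural networks" can look like, the example below fits a low-rank matrix factorization by gradient descent using PyTorch autograd. The synthetic rank-2 target, the matrix sizes, and the optimizer settings are all assumptions made for illustration.

```python
import torch

torch.manual_seed(0)

# Assumption: a synthetic 20x15 matrix that is exactly rank 2.
target = torch.randn(20, 2) @ torch.randn(2, 15)

# Factors to learn; no nn.Module is involved, only tensors that track gradients.
u = torch.randn(20, 2, requires_grad=True)
v = torch.randn(2, 15, requires_grad=True)
optimizer = torch.optim.Adam([u, v], lr=0.05)

initial_loss = None
for step in range(500):
    optimizer.zero_grad()
    loss = ((u @ v - target) ** 2).mean()  # mean squared reconstruction error
    loss.backward()
    optimizer.step()
    if initial_loss is None:
        initial_loss = loss.item()

final_loss = loss.item()
print(initial_loss, final_loss)
```

The same pattern (write the expression, call `backward()`, step an optimizer) carries over to other differentiable objectives.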

Keras is without a doubt the easier option if you want a plug & play framework: to quickly build, train, and evaluate a model, without spending much time on mathematical implementation details.

EDIT: For side-by-side code comparison on a real-life example, see our new article: Keras vs. PyTorch: Alien vs. Predator recognition with transfer learning.

Knowledge of the core concepts of deep learning is transferable. Once you master the basics in one environment, you can apply them elsewhere and hit the ground running as you transition to new deep learning libraries.

We encourage you to try out simple deep learning recipes in both Keras and PyTorch. What are your favourite and least favourite aspects of each? Which framework experience appeals to you more? Let us know in the comment section below!

Would you and your team like to learn more about deep learning in Keras, TensorFlow and PyTorch? See our tailored training offers.
