Reinforcement Learning (6): n-step Bootstrapping

n-step Bootstrapping

n-step methods unify Monte Carlo (MC) methods and one-step TD methods. They also serve as an introduction to eligibility traces, which make it possible to bootstrap over many time intervals simultaneously.

n-step TD Prediction

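One-step TD methods update from the next reward only and bootstrap from the value of the next state, whereas MC methods update from the entire reward sequence of an episode. n-step methods lie in between: they use the next n rewards and then bootstrap from the value of the state reached n steps later. Methods that update over n steps are called n-step TD methods.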

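For MC methods, the estimate of \(v_{\pi}(S_t)\) is updated toward the complete return:

\[
G_t \doteq R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \cdots + \gamma^{T-t-1} R_T
\]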

In one-step TD methods, the target is the one-step return:

\[
G_{t:t+1} \doteq R_{t+1} + \gamma V_t(S_{t+1})
\]

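The n-step return generalizes both:

\[
G_{t:t+n} \doteq R_{t+1} + \gamma R_{t+2} + \cdots + \gamma^{n-1} R_{t+n} + \gamma^n V_{t+n-1}(S_{t+n})
\]

where \(n\ge 1\) and \(0\le t< T-n\). If \(t+n \ge T\), the terms beyond termination are taken to be zero, so the n-step return equals the complete return \(G_t\).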

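Because \(R_{t+n}\) and \(V_{t+n-1}\) are not available until time \(t+n\), the update for \(S_t\) can only be made at that point:

\[
V_{t+n}(S_t) \doteq V_{t+n-1}(S_t) + \alpha\left[G_{t:t+n} - V_{t+n-1}(S_t)\right], \qquad 0 \le t < T
\]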
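To make the n-step return concrete, here is a small Python sketch. The helper `n_step_return` and the toy numbers are illustrative assumptions, not part of the original notes; the lists are indexed so that `rewards[k]` is \(R_k\) and `values[k]` is the current estimate of \(V(S_k)\).

```python
def n_step_return(rewards, values, t, n, gamma):
    """Compute G_{t:t+n} for one stored episode (hypothetical helper).

    rewards[k] holds R_k (rewards[0] is an unused placeholder),
    values[k] holds the current estimate V(S_k), and the episode
    terminates at time T = len(rewards) - 1.
    """
    T = len(rewards) - 1
    end = min(t + n, T)
    # Discounted sum of the rewards R_{t+1}, ..., R_{min(t+n, T)}
    G = sum(gamma ** (k - t - 1) * rewards[k] for k in range(t + 1, end + 1))
    # Bootstrap from V(S_{t+n}) only if the episode has not ended by then
    if t + n < T:
        G += gamma ** n * values[t + n]
    return G


rewards = [None, 1.0, 0.0, 2.0, 5.0]   # R_1..R_4, so T = 4
values = [0.5, 1.0, 3.0, 4.0, 0.0]     # V(S_0)..V(S_4); S_4 is terminal
gamma = 0.5

one_step = n_step_return(rewards, values, t=0, n=1, gamma=gamma)   # 1.5
two_step = n_step_return(rewards, values, t=0, n=2, gamma=gamma)   # 1.75
mc_return = n_step_return(rewards, values, t=0, n=10, gamma=gamma) # 2.125
```

With `n=1` the result is the one-step TD target, and once `n` reaches past the end of the episode the result is the complete (MC) return.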

    # n-step TD for estimating V = v_pi
    Input: a policy pi
    Algorithm parameters: step size alpha in (0,1], a positive integer n
    Initialize V(s) arbitrarily, for all s in S
    All store and access operations (for S_t and R_t) can take their index mod n+1
    Loop for each episode:
        Initialize and store S_0 != terminal
        T = infinity
        Loop for t = 0, 1, 2, ...:
            If t < T, then:
                Take an action according to pi(.|S_t)
                Observe and store the next reward as R_{t+1} and the next state as S_{t+1}
                If S_{t+1} is terminal, then T = t + 1
            tau = t - n + 1    (tau is the time whose state's estimate is being updated)
            If tau >= 0:
                G = sum_{i = tau+1}^{min(tau+n, T)} gamma^{i-tau-1} R_i
                If tau + n < T, then G = G + gamma^n V(S_{tau+n})
                V(S_tau) = V(S_tau) + alpha [G - V(S_tau)]
        Until tau = T - 1
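The pseudocode above can be sketched in Python roughly as follows. This is a minimal illustration under stated assumptions, not a reference implementation: the environment is a hypothetical 5-state random walk (the episode ends at either end, with reward 1 only for leaving on the right), the policy is uniformly random, and the whole trajectory is kept in lists instead of buffers indexed mod n+1. The name `n_step_td` and its parameters are made up for this example.

```python
import random


def n_step_td(n, alpha, gamma=1.0, episodes=200, n_states=5, seed=0):
    """Tabular n-step TD prediction on a random walk (illustrative sketch)."""
    rng = random.Random(seed)
    # States 1..n_states are non-terminal; 0 and n_states + 1 are terminal.
    V = [0.0] * (n_states + 2)
    for _ in range(episodes):
        states = [(n_states + 1) // 2]   # S_0 is the middle state
        rewards = [0.0]                  # placeholder so rewards[t] == R_t
        T = float("inf")
        t = 0
        while True:
            if t < T:
                # Uniform random policy: step left or right
                s_next = states[t] + rng.choice((-1, 1))
                rewards.append(1.0 if s_next == n_states + 1 else 0.0)
                states.append(s_next)
                if s_next in (0, n_states + 1):
                    T = t + 1
            tau = t - n + 1              # time whose estimate is updated
            if tau >= 0:
                G = sum(gamma ** (i - tau - 1) * rewards[i]
                        for i in range(tau + 1, min(tau + n, T) + 1))
                if tau + n < T:
                    G += gamma ** n * V[states[tau + n]]
                V[states[tau]] += alpha * (G - V[states[tau]])
            if tau == T - 1:
                break
            t += 1
    return V[1:-1]                       # estimates for the non-terminal states


estimates = n_step_td(n=2, alpha=0.02, episodes=3000)
print([round(v, 2) for v in estimates])
```

For this particular walk the true values under the random policy are 1/6, 2/6, ..., 5/6, so the printed estimates should end up close to those numbers, with some residual noise from the constant step size.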

n-step Sarsa

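n-step Sarsa is analogous to n-step TD, except that it works with state-action pairs rather than states:

\[
G_{t:t+n} \doteq R_{t+1} + \gamma R_{t+2} + \cdots + \gamma^{n-1} R_{t+n} + \gamma^n Q_{t+n-1}(S_{t+n}, A_{t+n}), \qquad n\ge 1,\ 0\le t< T-n
\]

The update follows naturally:

\[
Q_{t+n}(S_t, A_t) \doteq Q_{t+n-1}(S_t, A_t) + \alpha\left[G_{t:t+n} - Q_{t+n-1}(S_t, A_t)\right], \qquad 0 \le t < T
\]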

    # n-step Sarsa for estimating Q = q* or q_pi
    Initialize Q(s,a) arbitrarily, for all s in S, a in A
    Initialize pi to be e-greedy with respect to Q, or to a fixed given policy
    Algorithm parameters: step size alpha in (0,1], small e > 0, a positive integer n
    All store and access operations (for S_t, A_t and R_t) can take their index mod n+1
    Loop for each episode:
        Initialize and store S_0 != terminal
        Select and store an action A_0 from pi(.|S_0)
        T = infinity
        Loop for t = 0, 1, 2, ...:
            If t < T, then:
                Take action A_t
                Observe and store the next reward as R_{t+1} and the next state as S_{t+1}
                If S_{t+1} is terminal, then:
                    T = t + 1
                else:
                    Select and store an action A_{t+1} from pi(.|S_{t+1})
            tau = t - n + 1    (tau is the time whose estimate is being updated)
            If tau >= 0:
                G = sum_{i = tau+1}^{min(tau+n, T)} gamma^{i-tau-1} R_i
                If tau + n < T, then G = G + gamma^n Q(S_{tau+n}, A_{tau+n})
                Q(S_tau, A_tau) = Q(S_tau, A_tau) + alpha [G - Q(S_tau, A_tau)]
                If pi is being learned, then ensure that pi(.|S_tau) is e-greedy wrt Q
        Until tau = T - 1
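As a rough Python sketch of n-step Sarsa used for control, consider the toy problem below. Everything about the environment is an assumption made for illustration: a corridor with states 0..4 and a terminal goal state 5, two actions (0 = left, 1 = right), a reward of -1 per step, and moving left in state 0 leaving the agent in place. The function name and defaults are hypothetical, and full lists replace the mod n+1 buffers.

```python
import random


def n_step_sarsa(n=3, alpha=0.05, gamma=1.0, epsilon=0.1, episodes=3000,
                 n_states=5, seed=0):
    """Tabular n-step Sarsa on a small corridor (illustrative sketch)."""
    rng = random.Random(seed)
    goal = n_states
    Q = [[0.0, 0.0] for _ in range(n_states + 1)]   # Q[s][a]; Q[goal] stays 0

    def policy(s):
        # epsilon-greedy with respect to the current Q; ties are broken randomly
        if rng.random() < epsilon or Q[s][0] == Q[s][1]:
            return rng.randrange(2)
        return 0 if Q[s][0] > Q[s][1] else 1

    def step(s, a):
        s_next = max(0, s - 1) if a == 0 else s + 1
        return -1.0, s_next

    for _ in range(episodes):
        S, R = [0], [0.0]            # R[0] is a placeholder so R[t] == R_t
        A = [policy(S[0])]
        T = float("inf")
        t = 0
        while True:
            if t < T:
                r, s_next = step(S[t], A[t])
                R.append(r)
                S.append(s_next)
                if s_next == goal:
                    T = t + 1
                else:
                    A.append(policy(s_next))
            tau = t - n + 1          # time whose estimate is updated
            if tau >= 0:
                G = sum(gamma ** (i - tau - 1) * R[i]
                        for i in range(tau + 1, min(tau + n, T) + 1))
                if tau + n < T:
                    G += gamma ** n * Q[S[tau + n]][A[tau + n]]
                Q[S[tau]][A[tau]] += alpha * (G - Q[S[tau]][A[tau]])
            if tau == T - 1:
                break
            t += 1
    return Q


Q_learned = n_step_sarsa()
greedy_actions = [0 if q[0] > q[1] else 1 for q in Q_learned[:-1]]
print(greedy_actions)
```

Because the policy is always computed from the current `Q`, no separate policy-improvement step is needed in this sketch. After training, the greedy action in every corridor state should be "right".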

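As for n-step Expected Sarsa, the return bootstraps from an expectation over actions in the last state:

\[
G_{t:t+n} \doteq R_{t+1} + \gamma R_{t+2} + \cdots + \gamma^{n-1} R_{t+n} + \gamma^n \bar V_{t+n-1}(S_{t+n}), \qquad t+n < T
\]

where \(\bar V_t(s)\) is the expected approximate value of state \(s\) under the target policy:

\[
\bar V_t(s) \doteq \sum_a \pi(a|s)\, Q_t(s,a), \qquad \text{for all } s \in \mathcal{S}
\]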
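A small numerical sketch may help here. The two helpers below are hypothetical names introduced for illustration; `q_last` and `pi_last` stand for the action values and the target-policy probabilities in the last state \(S_{t+n}\).

```python
def expected_value(q_row, pi_row):
    """V_bar(s) = sum_a pi(a|s) * Q(s, a) for a single state."""
    return sum(p * q for p, q in zip(pi_row, q_row))


def n_step_expected_sarsa_return(rewards, q_last, pi_last, gamma):
    """G_{t:t+n} with rewards = [R_{t+1}, ..., R_{t+n}] (non-terminal case)."""
    n = len(rewards)
    G = sum(gamma ** k * r for k, r in enumerate(rewards))
    # Bootstrap from the expectation over actions instead of a sampled action
    return G + gamma ** n * expected_value(q_last, pi_last)


v_bar = expected_value([4.0, 8.0], [0.25, 0.75])                  # 7.0
G = n_step_expected_sarsa_return([1.0, 2.0], [4.0, 8.0],
                                 [0.25, 0.75], gamma=0.5)          # 3.75
```

The only difference from the n-step Sarsa return is the last term: the sampled \(Q(S_{t+n}, A_{t+n})\) is replaced by the policy-weighted average over all actions.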

n-step Off-policy Learning by Importance Sampling

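A simple off-policy version of n-step TD is:

\[
V_{t+n}(S_t) \doteq V_{t+n-1}(S_t) + \alpha\,\rho_{t:t+n-1}\left[G_{t:t+n} - V_{t+n-1}(S_t)\right], \qquad 0 \le t < T
\]

where \(\rho_{t:t+n-1}\) is the importance sampling ratio, defined in general as:

\[
\rho_{t:h} \doteq \prod_{k=t}^{\min(h,\,T-1)} \frac{\pi(A_k|S_k)}{b(A_k|S_k)}
\]

The off-policy n-step Sarsa update has the form below. Its ratio starts and ends one step later than in n-step TD, because \(A_t\) is already given and only the subsequent actions need to be reweighted:

\[
Q_{t+n}(S_t, A_t) \doteq Q_{t+n-1}(S_t, A_t) + \alpha\,\rho_{t+1:t+n}\left[G_{t:t+n} - Q_{t+n-1}(S_t, A_t)\right], \qquad 0 \le t < T
\]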
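The importance sampling ratio is just a product of per-step probability ratios, truncated at the end of the episode. A minimal sketch, where `importance_ratio` is a hypothetical helper and `pi_probs[k]`, `b_probs[k]` are assumed to hold \(\pi(A_k|S_k)\) and \(b(A_k|S_k)\) for the actions actually taken:

```python
def importance_ratio(pi_probs, b_probs, t, h, T):
    """rho_{t:h}: product of pi(A_k|S_k) / b(A_k|S_k) for k = t..min(h, T-1)."""
    rho = 1.0
    for k in range(t, min(h, T - 1) + 1):
        rho *= pi_probs[k] / b_probs[k]
    return rho


pi_probs = [1.0, 0.5, 1.0, 0.0]    # target-policy probabilities of A_0..A_3
b_probs = [0.5, 0.5, 0.25, 0.5]    # behavior-policy probabilities of A_0..A_3
T = 4

rho_01 = importance_ratio(pi_probs, b_probs, t=0, h=1, T=T)   # 2.0
rho_12 = importance_ratio(pi_probs, b_probs, t=1, h=2, T=T)   # 4.0
rho_29 = importance_ratio(pi_probs, b_probs, t=2, h=9, T=T)   # 0.0
```

A ratio of zero, as in the last call, means the target policy would never have taken one of the actions in the window, so the corresponding update is skipped entirely. Large ratios give the update more weight, which is one source of the high variance of these methods.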

    # Off-policy n-step Sarsa for estimating Q = q* or q_pi
    Input: an arbitrary behavior policy b such that b(a|s) > 0, for all s in S, a in A
    Initialize Q(s,a) arbitrarily, for all s in S, a in A
    Initialize pi to be greedy with respect to Q, or as a fixed given policy
    Algorithm parameters: step size alpha in (0,1], a positive integer n
    All store and access operations (for S_t, A_t, and R_t) can take their index mod n+1
    Loop for each episode:
        Initialize and store S_0 != terminal
        Select and store an action A_0 from b(.|S_0)
        T = infinity
        Loop for t = 0, 1, 2, ...:
            If t < T, then:
                Take action A_t
                Observe and store the next reward as R_{t+1} and the next state as S_{t+1}
                If S_{t+1} is terminal, then:
                    T = t + 1
                else:
                    Select and store an action A_{t+1} from b(.|S_{t+1})
            tau = t - n + 1    (tau is the time whose estimate is being updated)
            If tau >= 0:
                rho = prod_{i = tau+1}^{min(tau+n, T-1)} pi(A_i|S_i) / b(A_i|S_i)    (rho_{tau+1:tau+n})
                G = sum_{i = tau+1}^{min(tau+n, T)} gamma^{i-tau-1} R_i
                If tau + n < T, then G = G + gamma^n Q(S_{tau+n}, A_{tau+n})
                Q(S_tau, A_tau) = Q(S_tau, A_tau) + alpha rho [G - Q(S_tau, A_tau)]
                If pi is being learned, then ensure that pi(.|S_tau) is greedy wrt Q
        Until tau = T - 1

Per-decision Off-policy Methods with Control Variates

(Not covered in these notes.)

Off-policy Learning without Importance Sampling: The n-step Tree Backup Algorithm

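The tree-backup algorithm is an off-policy n-step method that needs no importance sampling. Its update is based on the whole tree of estimated action values; more precisely, the target is built from the estimated action values of the leaf nodes, i.e. the actions that were not selected. The interior action nodes (the actions actually taken) do not contribute their own estimated values to the target.

The one-step return is the same as in Expected Sarsa:

\[
G_{t:t+1} \doteq R_{t+1} + \gamma\sum_{a}\pi(a|S_{t+1})Q_{t}(S_{t+1},a), \qquad t < T-1
\]

The two-step return is:

\[
\begin{array}{lcl}
G_{t:t+2} &\doteq& R_{t+1} + \gamma\sum_{a \ne A_{t+1}} \pi(a|S_{t+1})Q_{t+1}(S_{t+1},a) + \gamma \pi(A_{t+1}|S_{t+1})\left(R_{t+2}+\gamma \sum_{a}\pi(a|S_{t+2})Q_{t+1}(S_{t+2},a)\right) \\
& = & R_{t+1} + \gamma\sum_{a\ne A_{t+1}}\pi(a|S_{t+1})Q_{t+1}(S_{t+1},a) + \gamma\pi(A_{t+1}|S_{t+1})G_{t+1:t+2}
\end{array}
\]

Hence, in general,

\[
G_{t:t+n} \doteq R_{t+1} + \gamma\sum_{a\ne A_{t+1}}\pi(a|S_{t+1})Q_{t+n-1}(S_{t+1},a) + \gamma\pi(A_{t+1}|S_{t+1})G_{t+1:t+n}, \qquad t < T-1,\ n \ge 2
\]

with \(G_{T-1:t+n} \doteq R_T\). The update rule of the algorithm is:

\[
Q_{t+n}(S_t, A_t) \doteq Q_{t+n-1}(S_t, A_t) + \alpha\left[G_{t:t+n} - Q_{t+n-1}(S_t, A_t)\right], \qquad 0 \le t < T
\]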

    # n-step Tree Backup for estimating Q = q* or q_pi
    Initialize Q(s,a) arbitrarily, for all s in S, a in A
    Initialize pi to be greedy with respect to Q, or as a fixed given policy
    Algorithm parameters: step size alpha in (0,1], a positive integer n
    All store and access operations can take their index mod n+1
    Loop for each episode:
        Initialize and store S_0 != terminal
        Choose an action A_0 arbitrarily as a function of S_0; store A_0
        T = infinity
        Loop for t = 0, 1, 2, ...:
            If t < T:
                Take action A_t; observe and store the next reward and state as R_{t+1}, S_{t+1}
                If S_{t+1} is terminal:
                    T = t + 1
                else:
                    Choose an action A_{t+1} arbitrarily as a function of S_{t+1}; store A_{t+1}
            tau = t + 1 - n    (tau is the time whose estimate is being updated)
            If tau >= 0:
                If t + 1 >= T:
                    G = R_T
                else:
                    G = R_{t+1} + gamma sum_a pi(a|S_{t+1}) Q(S_{t+1}, a)
                Loop for k = min(t, T - 1) down through tau + 1:
                    G = R_k + gamma sum_{a != A_k} pi(a|S_k) Q(S_k, a) + gamma pi(A_k|S_k) G
                Q(S_tau, A_tau) = Q(S_tau, A_tau) + alpha [G - Q(S_tau, A_tau)]
                If pi is being learned, then ensure that pi(.|S_tau) is greedy wrt Q
        Until tau = T - 1
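The backward loop that builds the tree-backup return can be sketched in isolation. The helper below is a hypothetical illustration with made-up numbers; index `j` in each list refers to time \(k = t+1+j\), so `rewards[j]` is \(R_k\), `q_rows[j]` is \(Q(S_k,\cdot)\), `pi_rows[j]` is \(\pi(\cdot|S_k)\) and `actions[j]` is \(A_k\).

```python
def tree_backup_return(rewards, q_rows, pi_rows, actions, gamma,
                       ends_in_terminal=False):
    """G_{t:t+n} computed by the tree-backup recursion (illustrative sketch)."""
    last = len(rewards) - 1
    if ends_in_terminal:
        G = rewards[last]                        # G_{T-1:t+n} = R_T
    else:
        # Last step: full expectation over all actions in S_{t+n}
        G = rewards[last] + gamma * sum(
            p * q for p, q in zip(pi_rows[last], q_rows[last]))
    for j in range(last - 1, -1, -1):
        a_taken = actions[j]
        # Leaves: actions that were NOT taken contribute their estimates
        leaves = sum(p * q
                     for a, (p, q) in enumerate(zip(pi_rows[j], q_rows[j]))
                     if a != a_taken)
        # The taken action contributes the return from the deeper level
        G = rewards[j] + gamma * leaves + gamma * pi_rows[j][a_taken] * G
    return G


rewards = [1.0, 2.0]                     # R_{t+1}, R_{t+2}
q_rows = [[2.0, 4.0], [8.0, 0.0]]        # Q(S_{t+1}, .), Q(S_{t+2}, .)
pi_rows = [[0.5, 0.5], [0.25, 0.75]]     # pi(.|S_{t+1}), pi(.|S_{t+2})
actions = [1, 0]                         # A_{t+1}, A_{t+2}

G_two_step = tree_backup_return(rewards, q_rows, pi_rows, actions, gamma=0.5)
G_one_step = tree_backup_return(rewards[:1], q_rows[:1], pi_rows[:1],
                                actions[:1], gamma=0.5)
G_terminal = tree_backup_return(rewards, q_rows, pi_rows, actions, gamma=0.5,
                                ends_in_terminal=True)
```

Here `G_two_step` is 2.25, `G_one_step` is 2.5 and `G_terminal` is 2.0. Note that the behavior policy never appears: the actions taken only select which branch is followed, and each branch is weighted by the target policy's probability.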

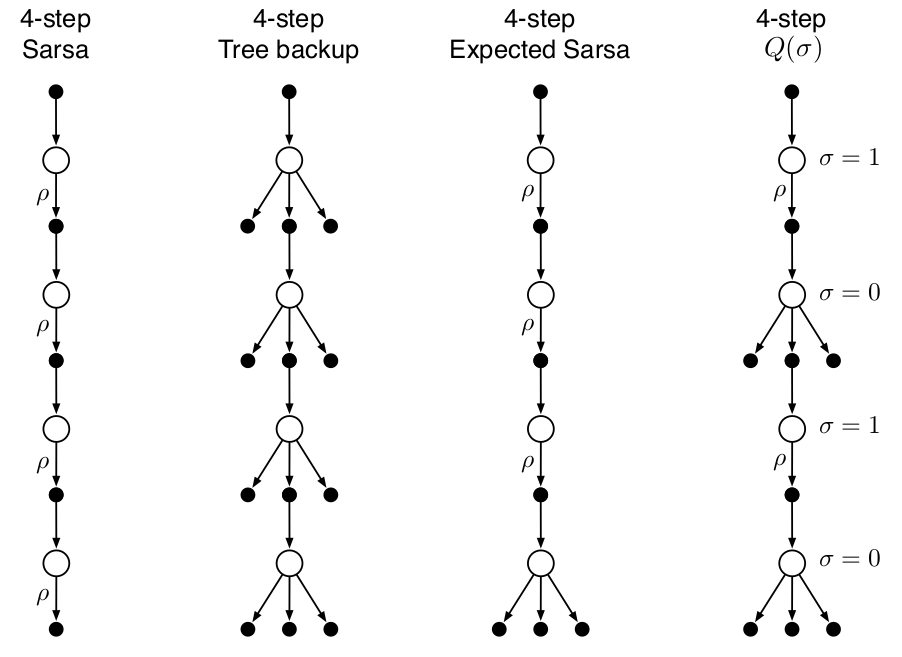

*A Unifying Algorithm: n-step Q(\(\sigma\))

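n-step Sarsa uses sampled transitions throughout. Tree backup uses all state-to-action branches at every step, with no sampling. n-step Expected Sarsa samples every transition except the last state-to-action step, where it takes the expectation over all branches.

One way to unify these three algorithms is to introduce a random variable \(\sigma_t\in [0,1]\), the degree of sampling on step \(t\): \(\sigma_t = 1\) means full sampling, and \(\sigma_t = 0\) means taking the expectation without sampling.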

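Starting from the tree-backup n-step return with horizon \(h = t + n\) and using \(\bar V\), we can write:

\[
\begin{array}{lcl}
G_{t:h} &\doteq& R_{t+1} + \gamma\sum_{a\ne A_{t+1}}\pi(a|S_{t+1})Q_{h-1}(S_{t+1},a) + \gamma\pi(A_{t+1}|S_{t+1})G_{t+1:h}\\
& = & R_{t+1} +\gamma \bar V_{h-1} (S_{t+1}) - \gamma\pi(A_{t+1}|S_{t+1})Q_{h-1}(S_{t+1},A_{t+1}) + \gamma\pi(A_{t+1}| S_{t+1})G_{t+1:h}\\
& = & R_{t+1} +\gamma\pi(A_{t+1}|S_{t+1})\left(G_{t+1:h} - Q_{h-1}(S_{t+1},A_{t+1})\right) + \gamma \bar V_{h-1}(S_{t+1})
\end{array}
\]

Introducing \(\sigma\) means replacing the factor \(\pi(A_{t+1}|S_{t+1})\) with a linear interpolation between the single-step importance sampling ratio \(\rho_{t+1} = \pi(A_{t+1}|S_{t+1})/b(A_{t+1}|S_{t+1})\) and that policy probability. This is a new definition rather than an identity, and it gives the n-step Q(\(\sigma\)) return:

\[
G_{t:h} \doteq R_{t+1} + \gamma\left(\sigma_{t+1}\rho_{t+1} + (1 - \sigma_{t+1})\pi(A_{t+1}|S_{t+1})\right)\left(G_{t+1:h} - Q_{h-1}(S_{t+1}, A_{t+1})\right) + \gamma \bar V_{h-1}(S_{t+1})
\]

The recursion ends with \(G_{h:h} \doteq Q_{h-1}(S_h, A_h)\) if \(h < T\), or with \(G_{T-1:T} \doteq R_T\) if \(h = T\).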

    # Off-policy n-step Q(sigma) for estimating Q = q* or q_pi
    Input: an arbitrary behavior policy b such that b(a|s) > 0, for all s in S, a in A
    Initialize Q(s,a) arbitrarily, for all s in S, a in A
    Initialize pi to be greedy with respect to Q, or as a fixed given policy
    Algorithm parameters: step size alpha in (0,1], a positive integer n
    All store and access operations can take their index mod n+1
    Loop for each episode:
        Initialize and store S_0 != terminal
        Choose and store an action A_0 from b(.|S_0)
        T = infinity
        Loop for t = 0, 1, 2, ...:
            If t < T:
                Take action A_t; observe and store the next reward and state as R_{t+1}, S_{t+1}
                If S_{t+1} is terminal:
                    T = t + 1
                else:
                    Choose and store an action A_{t+1} from b(.|S_{t+1})
                    Select and store sigma_{t+1}
                    Store rho_{t+1} = pi(A_{t+1}|S_{t+1}) / b(A_{t+1}|S_{t+1})
            tau = t + 1 - n    (tau is the time whose estimate is being updated)
            If tau >= 0:
                If t + 1 < T:
                    G = Q(S_{t+1}, A_{t+1})
                Loop for k = min(t + 1, T) down through tau + 1:
                    If k = T:
                        G = R_T
                    else:
                        V_bar = sum_a pi(a|S_k) Q(S_k, a)
                        G = R_k + gamma (sigma_k rho_k + (1 - sigma_k) pi(A_k|S_k)) (G - Q(S_k, A_k)) + gamma V_bar
                Q(S_tau, A_tau) = Q(S_tau, A_tau) + alpha [G - Q(S_tau, A_tau)]
                If pi is being learned, then ensure that pi(.|S_tau) is greedy wrt Q
        Until tau = T - 1
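The Q(\(\sigma\)) recursion can be checked numerically on a tiny example. The sketch below is an illustration under stated assumptions: `q_sigma_return` is a hypothetical helper, the horizon is non-terminal, and index `j` in each list refers to time \(k = t+1+j\). With every \(\sigma_k = 0\) the result should coincide with the tree-backup return, which is computed by hand in `tree_backup_by_hand` for comparison.

```python
def q_sigma_return(rewards, q_rows, pi_rows, b_rows, actions, sigmas, gamma):
    """G_{t:h} for n-step Q(sigma), assuming S_h is not terminal."""
    last = len(rewards) - 1
    G = q_rows[last][actions[last]]              # G_{h:h} = Q(S_h, A_h)
    for j in range(last, -1, -1):
        a = actions[j]
        rho = pi_rows[j][a] / b_rows[j][a]       # single-step importance ratio
        v_bar = sum(p * q for p, q in zip(pi_rows[j], q_rows[j]))
        # Interpolate between sampling (rho) and expectation (pi(A_k|S_k))
        weight = sigmas[j] * rho + (1.0 - sigmas[j]) * pi_rows[j][a]
        G = rewards[j] + gamma * weight * (G - q_rows[j][a]) + gamma * v_bar
    return G


gamma = 0.5
rewards = [1.0, 2.0]                     # R_{t+1}, R_{t+2}
q_rows = [[2.0, 4.0], [8.0, 0.0]]        # Q(S_{t+1}, .), Q(S_{t+2}, .)
pi_rows = [[0.5, 0.5], [0.25, 0.75]]     # target policy
b_rows = [[0.5, 0.5], [0.5, 0.5]]        # behavior policy
actions = [1, 0]                         # A_{t+1}, A_{t+2}

G_expectation = q_sigma_return(rewards, q_rows, pi_rows, b_rows, actions,
                               sigmas=[0.0, 0.0], gamma=gamma)
G_sampling = q_sigma_return(rewards, q_rows, pi_rows, b_rows, actions,
                            sigmas=[1.0, 1.0], gamma=gamma)

# Two-step tree-backup return written out directly from its definition
tree_backup_by_hand = (1.0 + gamma * (0.5 * 2.0)
                       + gamma * 0.5 * (2.0 + gamma * (0.25 * 8.0 + 0.75 * 0.0)))
```

In this example `G_expectation` equals `tree_backup_by_hand` (both 2.25), while full sampling gives 2.0. Intermediate values of \(\sigma_k\) move the weight on the sampled branch between \(\pi(A_k|S_k)\) and \(\rho_k\).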
