Setting up a k8s cluster

1. Environment

| | master | node1 | node2 |
| --- | --- | --- | --- |
| IP | 192.168.0.164 | 192.168.0.165 | 192.168.0.167 |
| OS | CentOS 7 | CentOS 7 | CentOS 7 |

2. Configuration and installation

Run the following steps on all three nodes:

01. Configure the yum repository

```bash
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

02. Install etcd

```bash
yum install -y etcd
```

Join each node to the etcd cluster:

On master (IP 192.168.0.164):

```bash
etcd -name infra1 \
  -initial-advertise-peer-urls http://192.168.0.164:2380 \
  -listen-peer-urls http://192.168.0.164:2380 \
  -listen-client-urls http://192.168.0.164:2379,http://127.0.0.1:2379 \
  -advertise-client-urls http://192.168.0.164:2379 \
  -initial-cluster-token etcd-cluster-1 \
  -initial-cluster infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380 \
  -initial-cluster-state new
```

On node1 (IP 192.168.0.165):

```bash
etcd -name infra2 \
  -initial-advertise-peer-urls http://192.168.0.165:2380 \
  -listen-peer-urls http://192.168.0.165:2380 \
  -listen-client-urls http://192.168.0.165:2379,http://127.0.0.1:2379 \
  -advertise-client-urls http://192.168.0.165:2379 \
  -initial-cluster-token etcd-cluster-1 \
  -initial-cluster infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380 \
  -initial-cluster-state new
```

On node2 (IP 192.168.0.167):

```bash
etcd -name infra3 \
  -initial-advertise-peer-urls http://192.168.0.167:2380 \
  -listen-peer-urls http://192.168.0.167:2380 \
  -listen-client-urls http://192.168.0.167:2379,http://127.0.0.1:2379 \
  -advertise-client-urls http://192.168.0.167:2379 \
  -initial-cluster-token etcd-cluster-1 \
  -initial-cluster infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380 \
  -initial-cluster-state new
```
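The three commands differ only in the member name and the node IP. A minimal sketch, assuming you keep the same `-initial-cluster` string on every node, that builds the flags from those two values and refuses a name/IP pair that is not listed in the cluster string (a common copy-paste mistake). `etcd_args` and `CLUSTER` are hypothetical helper names, not part of etcd.

```bash
#!/bin/bash
# Shared member list, identical to the -initial-cluster value used above.
CLUSTER="infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380"

# etcd_args NAME IP: print the etcd flags for member NAME listening on IP.
# Hypothetical helper; fails if NAME=http://IP:2380 is not part of CLUSTER.
etcd_args() {
  local name=$1 ip=$2
  case ",$CLUSTER," in
    *",$name=http://$ip:2380,"*) ;;
    *) echo "etcd_args: $name=http://$ip:2380 is not in CLUSTER" >&2; return 1 ;;
  esac
  # printf repeats its format for every argument, so each flag ends with a space.
  printf '%s ' \
    -name "$name" \
    -initial-advertise-peer-urls "http://$ip:2380" \
    -listen-peer-urls "http://$ip:2380" \
    -listen-client-urls "http://$ip:2379,http://127.0.0.1:2379" \
    -advertise-client-urls "http://$ip:2379" \
    -initial-cluster-token etcd-cluster-1 \
    -initial-cluster "$CLUSTER" \
    -initial-cluster-state new
  echo
}

# Print the command for node1 instead of editing it by hand.
echo "etcd $(etcd_args infra2 192.168.0.165)"
```

On node1 you could then start the member with `etcd $(etcd_args infra2 192.168.0.165)`; none of the values contain spaces, so plain word splitting is safe here.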

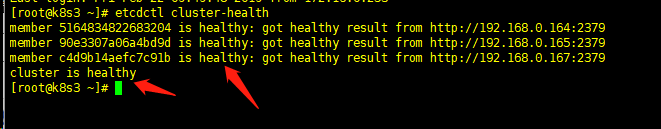

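Checking the etcd cluster:

After etcd has started on all three nodes, open another terminal window and run:

```bash
etcdctl cluster-health
```

If every member is reported healthy and the output ends with `cluster is healthy`, the cluster is up.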
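Instead of re-running the health check by hand, you can retry it in a loop. A minimal sketch, assuming a fixed number of attempts and a fixed delay are good enough; `wait_healthy` is a hypothetical helper name.

```bash
#!/bin/bash
# wait_healthy TRIES DELAY CMD...: run CMD up to TRIES times, DELAY seconds apart.
# Succeeds as soon as CMD exits 0; fails once all attempts are used up.
wait_healthy() {
  local tries=$1 delay=$2 i
  shift 2
  for ((i = 1; i <= tries; i++)); do
    if "$@" >/dev/null 2>&1; then
      echo "healthy after $i attempt(s)"
      return 0
    fi
    # Do not sleep after the final failed attempt.
    (( i < tries )) && sleep "$delay"
  done
  echo "still unhealthy after $tries attempts" >&2
  return 1
}
```

For example, `wait_healthy 30 2 etcdctl cluster-health` waits up to about a minute for the cluster to become healthy, relying only on the command's exit status.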

Managing etcd as a systemd service (change the member name and the IPs to repeat this on the other nodes):

```bash
vim /usr/lib/systemd/system/etcd.service
```

```ini
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/root
ExecStart=/usr/bin/etcd -name infra1 -initial-advertise-peer-urls http://192.168.0.164:2380 -listen-peer-urls http://192.168.0.164:2380 -listen-client-urls http://192.168.0.164:2379,http://127.0.0.1:2379 -advertise-client-urls http://192.168.0.164:2379 -initial-cluster-token etcd-cluster-1 -initial-cluster infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380 -initial-cluster-state new
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

`ExecStart` uses the absolute path `/usr/bin/etcd` because the systemd version shipped with CentOS 7 does not look up executables in `PATH`.
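Since only the member name and IP change between nodes, the unit file can be generated rather than edited by hand. A minimal sketch, assuming the etcd binary is at `/usr/bin/etcd` (where the CentOS 7 `etcd` package installs it); `etcd_unit` and `CLUSTER` are hypothetical names.

```bash
#!/bin/bash
# Shared member list, identical on every node.
CLUSTER="infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380"

# etcd_unit NAME IP: print the etcd.service unit for one member to stdout.
etcd_unit() {
  local name=$1 ip=$2
  cat <<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/root
ExecStart=/usr/bin/etcd -name $name -initial-advertise-peer-urls http://$ip:2380 -listen-peer-urls http://$ip:2380 -listen-client-urls http://$ip:2379,http://127.0.0.1:2379 -advertise-client-urls http://$ip:2379 -initial-cluster-token etcd-cluster-1 -initial-cluster $CLUSTER -initial-cluster-state new
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}

# Preview the unit for node1.
etcd_unit infra2 192.168.0.165
```

On node1 you would redirect the output into `/usr/lib/systemd/system/etcd.service`, then run `systemctl daemon-reload`, `systemctl enable etcd` and `systemctl start etcd`.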

Master setup script:

```bash
#!/bin/bash

echo '################ Prerequisites...'
systemctl stop firewalld
systemctl disable firewalld
yum -y install ntp
systemctl start ntpd
systemctl enable ntpd

echo '################ Installing flannel...'
# Install flannel
yum install flannel -y

echo '################ Add subnets for flannel...'
A_SUBNET=172.17.0.0/16
B_SUBNET=192.168.0.0/16
C_SUBNET=10.254.0.0/16
FLANNEL_SUBNET=$A_SUBNET
SERVICE_SUBNET=$B_SUBNET

# Collect this host's non-loopback IPv4 addresses.
# ifconfig on CentOS 7 prints "inet 1.2.3.4" (no "addr:"), so parse `ip` output instead.
OCCUPIED_IPs=($(ip -o -4 addr show | awk '{split($4, a, "/"); print a[1]}' | grep -v '^127'))

# Choose flannel and service ranges that do not overlap a local address
for ip in "${OCCUPIED_IPs[@]}"; do
    if [ "$(ipcalc -n $ip/${A_SUBNET#*/})" == "$(ipcalc -n ${A_SUBNET})" ]; then
        FLANNEL_SUBNET=$C_SUBNET
        SERVICE_SUBNET=$B_SUBNET
        break
    fi
    if [ "$(ipcalc -n $ip/${B_SUBNET#*/})" == "$(ipcalc -n ${B_SUBNET})" ]; then
        FLANNEL_SUBNET=$A_SUBNET
        SERVICE_SUBNET=$C_SUBNET
        break
    fi
    if [ "$(ipcalc -n $ip/${C_SUBNET#*/})" == "$(ipcalc -n ${C_SUBNET})" ]; then
        FLANNEL_SUBNET=$A_SUBNET
        SERVICE_SUBNET=$B_SUBNET
        break
    fi
done

# Wait until etcd is healthy, then store the flannel network config
while ((1)); do
    sleep 2
    etcdctl cluster-health
    flag=$?
    if [ $flag == 0 ]; then
        etcdctl mk /coreos.com/network/config '{"Network":"'${FLANNEL_SUBNET}'"}'
        break
    fi
done

echo '################ Starting flannel...'
echo -e "FLANNEL_ETCD=\"http://192.168.0.164:2379,http://192.168.0.165:2379,http://192.168.0.167:2379\"
FLANNEL_ETCD_KEY=\"/coreos.com/network\"" > /etc/sysconfig/flanneld
systemctl enable flanneld
systemctl start flanneld

echo '################ Installing K8S...'
yum -y install kubernetes
echo 'KUBE_API_ADDRESS="--address=0.0.0.0"
KUBE_API_PORT="--port=8080"
KUBELET_PORT="--kubelet_port=10250"
KUBE_ETCD_SERVERS="--etcd_servers=http://192.168.0.164:2379,http://192.168.0.165:2379,http://192.168.0.167:2379"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range='${SERVICE_SUBNET}'"
KUBE_ADMISSION_CONTROL="--admission_control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
KUBE_API_ARGS=""' > /etc/kubernetes/apiserver

echo '################ Start K8S components...'
for SERVICES in kube-apiserver kube-controller-manager kube-scheduler; do
    systemctl restart $SERVICES
    systemctl enable $SERVICES
    systemctl status $SERVICES
done
```

Node setup script:

```bash
#!/bin/bash

echo '################ Prerequisites...'
# Disable firewalld and enable NTP time synchronization
systemctl stop firewalld
systemctl disable firewalld
yum -y install ntp
systemctl start ntpd
systemctl enable ntpd

# Install the packages Kubernetes needs
yum -y install kubernetes docker flannel bridge-utils

# This script uses a virtual IP, written as "vip" below; replace it with your cluster's VIP.
# Services reached through the VIP: kube-master (8080), registry (5000), skydns (53)
echo '################ Configuring nodes...'
echo '################ Configuring nodes > Find Kube master...'
KUBE_REGISTRY_IP="192.168.0.169"
KUBE_MASTER_IP="192.168.0.170"

echo '################ Configuring nodes > Configuring Minion...'
echo -e "KUBE_LOGTOSTDERR=\"--logtostderr=true\"
KUBE_LOG_LEVEL=\"--v=0\"
KUBE_ALLOW_PRIV=\"--allow_privileged=false\"
KUBE_MASTER=\"--master=http://${KUBE_MASTER_IP}:8080\"" > /etc/kubernetes/config

echo '################ Configuring nodes > Configuring kubelet...'
# Use the IP of each node's eth0 as its identifier (change the interface name if your NIC is not eth0)
KUBE_NODE_IP=`ifconfig eth0 | grep "inet " | awk '{print $2}'`
# api_servers lists the IPs of master1, master2 and master3
echo -e "KUBELET_ADDRESS=\"--address=0.0.0.0\"
KUBELET_PORT=\"--port=10250\"
KUBELET_HOSTNAME=\"--hostname_override=${KUBE_NODE_IP}\"
KUBELET_API_SERVER=\"--api_servers=http://192.168.0.164:8080,http://192.168.0.165:8080,http://192.168.0.167:8080\"
KUBELET_ARGS=\"--cluster-dns=vip --cluster-domain=k8s --pod-infra-container-image=${KUBE_REGISTRY_IP}:5000/pause:latest\"" > /etc/kubernetes/kubelet

# flannel reads its config from etcd and assigns this host's docker0 a subnet,
# so that container subnets on all nodes can reach each other
echo '################ Configuring flannel...'
echo -e "FLANNEL_ETCD=\"http://192.168.0.164:2379,http://192.168.0.165:2379,http://192.168.0.167:2379\"
FLANNEL_ETCD_KEY=\"/coreos.com/network\"" > /etc/sysconfig/flanneld

echo '################ Start K8S Components...'
systemctl daemon-reload
for SERVICES in kube-proxy flanneld; do
    systemctl restart $SERVICES
    systemctl enable $SERVICES
    systemctl status $SERVICES
done

echo '################ Resolve interface conflicts...'
systemctl stop docker
ifconfig docker0 down
brctl delbr docker0

echo '################ Accept private registry...'
echo -e "OPTIONS='--selinux-enabled --insecure-registry ${KUBE_REGISTRY_IP}:5000'
DOCKER_CERT_PATH=/etc/docker" > /etc/sysconfig/docker

for SERVICES in docker kubelet; do
    systemctl restart $SERVICES
    systemctl enable $SERVICES
    systemctl status $SERVICES
done
```
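The subnet-selection step in the master script picks a flannel range and a service range that do not overlap any address already on the host. A minimal pure-bash sketch of the same rules, assuming IPv4 only, so it needs neither `ipcalc` nor a particular `ifconfig` output format. All function names are hypothetical.

```bash
#!/bin/bash
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP NET/PREFIX: succeed if IP lies inside the CIDR block.
in_cidr() {
  local net=${2%/*} prefix=${2#*/}
  # Bash arithmetic is 64-bit, so mask the shifted value back down to 32 bits.
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# occupied_ipv4: read `ip -o -4 addr show` output on stdin, print non-loopback IPs.
occupied_ipv4() {
  awk '{ split($4, a, "/"); if (a[1] !~ /^127\./) print a[1] }'
}

# pick_subnets IP...: same rules as the master script; prints "FLANNEL SERVICE".
pick_subnets() {
  local A=172.17.0.0/16 B=192.168.0.0/16 C=10.254.0.0/16
  local flannel=$A service=$B ip
  for ip in "$@"; do
    if in_cidr "$ip" "$A"; then flannel=$C; service=$B; break; fi
    if in_cidr "$ip" "$B"; then flannel=$A; service=$C; break; fi
    if in_cidr "$ip" "$C"; then flannel=$A; service=$B; break; fi
  done
  echo "$flannel $service"
}

# flannel_config NET: the JSON value the master script stores with `etcdctl mk`.
flannel_config() {
  printf '{"Network":"%s"}\n' "$1"
}
```

On a host you would call `pick_subnets $(ip -o -4 addr show | occupied_ipv4)`. With the nodes in this article on 192.168.0.0/16, the rules choose 172.17.0.0/16 for flannel and 10.254.0.0/16 for services.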
