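Alibaba Cloud Malware Detection Competition - Week 3 - TextCNN

My first attempt with an LSTM ran into obstacles, so this week I switched to TextCNN, which I am more familiar with.

1. Background: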

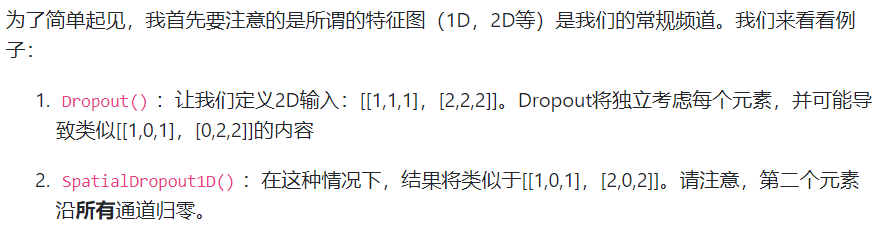

SpatialDropout1D
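SpatialDropout1D differs from ordinary Dropout in that it drops whole feature channels instead of single activations: for an input of shape (batch, timesteps, channels), one keep/drop decision is drawn per channel and shared by every timestep. The NumPy sketch below illustrates the idea; the function name `spatial_dropout_1d` and the toy shapes are illustrative assumptions, not Keras internals.

```python
import numpy as np


def spatial_dropout_1d(x, rate, rng):
    """Training-time sketch of channel-wise dropout for x of shape (batch, timesteps, channels)."""
    batch, _, channels = x.shape
    # One keep/drop decision per (sample, channel), broadcast over all timesteps
    keep = rng.random((batch, 1, channels)) >= rate
    # Inverted dropout: rescale the kept channels so the expected activation is unchanged
    return x * keep / (1.0 - rate)


rng = np.random.default_rng(0)
x = np.ones((2, 6, 5))          # 2 samples, 6 timesteps, embedding size 5
y = spatial_dropout_1d(x, rate=0.2, rng=rng)
# Each channel is either zero at every timestep or kept at every timestep
print(y[0])
```

Applied right after the embedding layer, this removes entire embedding dimensions for a sample rather than isolated values.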

    import pickle
    import time
    import csv

    import numpy as np
    import xgboost as xgb
    from keras.preprocessing.sequence import pad_sequences
    from keras_preprocessing.text import Tokenizer
    from keras.models import Sequential, Model
    from keras.layers import (Dense, Embedding, Activation, merge, Input, Lambda, Reshape, LSTM, RNN,
                              CuDNNLSTM, SimpleRNNCell, SpatialDropout1D, Add, Maximum)
    from keras.layers import Conv1D, Flatten, Dropout, MaxPool1D, GlobalAveragePooling1D, concatenate, AveragePooling1D
    from keras import optimizers
    from keras import regularizers
    from keras.layers import BatchNormalization
    from keras.callbacks import TensorBoard, EarlyStopping, ModelCheckpoint
    from keras.utils import to_categorical
    from keras import backend as K
    from sklearn.model_selection import StratifiedKFold
    from sklearn.model_selection import train_test_split
    from sklearn.feature_extraction.text import TfidfVectorizer

    # Input pickles and output files
    my_security_train = './my_security_train.pkl'
    my_security_test = './my_security_test.pkl'
    my_result = './my_result.pkl'
    my_result_csv = './my_result.csv'
    # Every API sequence is padded or truncated to this many tokens
    inputLen = 100

    # config = K.tf.ConfigProto()
    # # Allocate GPU memory on demand
    # config.gpu_options.allow_growth = True
    # session = K.tf.Session(config=config)

    # Load the pickled data into variables
    with open(my_security_train, 'rb') as f:
        train_labels = pickle.load(f)
        train_apis = pickle.load(f)

    with open(my_security_test, 'rb') as f:
        test_files = pickle.load(f)
        test_apis = pickle.load(f)

    # print(time.strftime("%Y-%m-%d-%H-%M-%S", time.localtime()))
    # tensorboard = TensorBoard('./Logs/', write_images=1, histogram_freq=1)
    # print(train_labels)

    # Convert the labels to a 1-D NumPy array (printed with spaces instead of commas)
    train_labels = np.asarray(train_labels)
    # print(train_labels)

    tokenizer = Tokenizer(num_words=None,
                          filters='!"#$%&()*+,-./:;<=>?@[\\]^_`{|}~\t\n',
                          lower=True,
                          split=" ",
                          char_level=False)
    # print(train_apis)

    # Fit the tokenizer vocabulary on both the training and the test set,
    # so that every API name that occurs later has an index
    tokenizer.fit_on_texts(train_apis)
    tokenizer.fit_on_texts(test_apis)
    # print(tokenizer.word_index)
    # Inspect the vocabulary built so far:
    # vocal = tokenizer.word_index

    # Map each API name to its integer index using the vocabulary
    train_apis = tokenizer.texts_to_sequences(train_apis)
    test_apis = tokenizer.texts_to_sequences(test_apis)
    # print(test_apis)

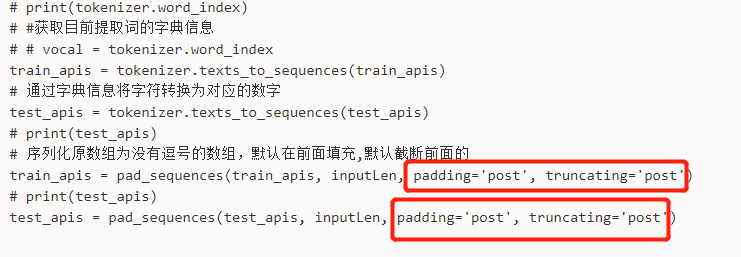
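What the tokenizer does with the API strings can be sketched in plain Python: count the words, give the most frequent word index 1, then replace each word by its index (index 0 stays free for padding). The helper names and the toy API sequences below are made up for illustration; the sketch ignores the `filters` argument and is not the Keras implementation.

```python
from collections import Counter


def fit_word_index(texts):
    """Build a word -> index map, most frequent word first, starting at 1."""
    counts = Counter(word for text in texts for word in text.lower().split())
    # Sort by descending frequency; ties keep first-seen order because sorted() is stable
    ordered = sorted(counts, key=lambda w: -counts[w])
    return {word: i + 1 for i, word in enumerate(ordered)}


def texts_to_sequences(texts, word_index):
    """Replace every known word by its integer index."""
    return [[word_index[w] for w in text.lower().split() if w in word_index] for text in texts]


toy_apis = ["LdrLoadDll NtOpenFile NtClose", "NtOpenFile NtClose NtClose"]
word_index = fit_word_index(toy_apis)
print(word_index)
print(texts_to_sequences(toy_apis, word_index))
```

The vocabulary size plus one (for the padding index 0) is what the `Embedding` layer receives as its first argument.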

# 序列化原数组为没有逗号的数组,默认在前面填充,默认截断前面的

train_apis = pad_sequences(train_apis, inputLen, padding='post', truncating='post')

# print(test_apis)

test_apis = pad_sequences(test_apis, inputLen, padding='post', truncating='post') # print(test_apis) def SequenceModel():

# Sequential()是序列模型,其实是堆叠模型,可以在它上面堆砌网络形成一个复杂的网络结构

model = Sequential()

model.add(Dense(32, activation='relu', input_dim=6000))

model.add(Dense(8, activation='softmax'))

return model def lstm():

my_inpuy = Input(shape = (6000,), dtype = 'float64')

#在网络第一层,起降维的作用

emb = Embedding(len(tokenizer.word_index)+1, 256, input_length=6000)

emb = emb(my_inpuy)

net = Conv1D(16, 3, padding='same', kernel_initializer='glorot_uniform')(emb)

net = BatchNormalization()(net)

net = Activation('relu')(net)

net = Conv1D(32, 3, padding='same', kernel_initializer='glorot_uniform')(net)

net = BatchNormalization()(net)

net = Activation('relu')(net)

net = MaxPool1D(pool_size=4)(net) net1 = Conv1D(16, 4, padding='same', kernel_initializer='glorot_uniform')(emb)

net1 = BatchNormalization()(net1)

net1 = Activation('relu')(net1)

net1 = Conv1D(32, 4, padding='same', kernel_initializer='glorot_uniform')(net1)

net1 = BatchNormalization()(net1)

net1 = Activation('relu')(net1)

net1 = MaxPool1D(pool_size=4)(net1) net2 = Conv1D(16, 5, padding='same', kernel_initializer='glorot_uniform')(emb)

net2 = BatchNormalization()(net2)

net2 = Activation('relu')(net2)

net2 = Conv1D(32, 5, padding='same', kernel_initializer='glorot_uniform')(net2)

net2 = BatchNormalization()(net2)

net2 = Activation('relu')(net2)

net2 = MaxPool1D(pool_size=4)(net2) net = concatenate([net, net1, net2], axis=-1)

net = CuDNNLSTM(256)(net)

net = Dense(8, activation = 'softmax')(net)

model = Model(inputs=my_inpuy, outputs=net)

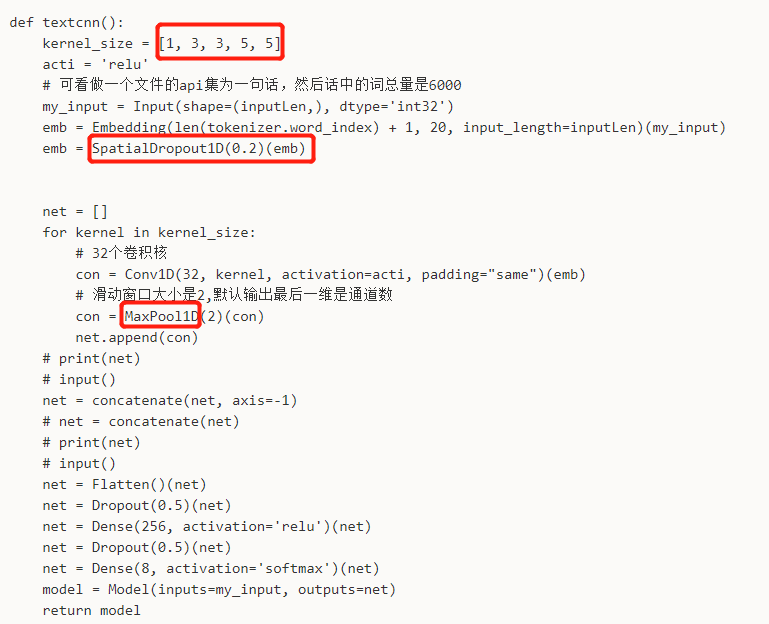

return model def textcnn():

kernel_size = [1, 3, 3, 5, 5]

acti = 'relu'

#可看做一个文件的api集为一句话,然后话中的词总量是6000

my_input = Input(shape=(inputLen,), dtype='int32')

emb = Embedding(len(tokenizer.word_index) + 1, 5, input_length=inputLen)(my_input)

emb = SpatialDropout1D(0.2)(emb) net = []

for kernel in kernel_size:

# 32个卷积核

con = Conv1D(32, kernel, activation=acti, padding="same")(emb)

# 滑动窗口大小是2,默认输出最后一维是通道数

con = MaxPool1D(2)(con)

net.append(con)

# print(net)

# input()

net = concatenate(net, axis =-1)

# net = concatenate(net)

# print(net)

# input()

net = Flatten()(net)

net = Dropout(0.5)(net)

net = Dense(256, activation='relu')(net)

net = Dropout(0.5)(net)

net = Dense(8, activation='softmax')(net)

model = Model(inputs=my_input, outputs=net)

return model # model = SequenceModel()

    model = textcnn()
    # metrics holds only the loss by default; with 'accuracy' added,
    # model.evaluate(...) returns the accuracy as well
    model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])
    # print(train_apis.shape)
    # print(train_labels.shape)

    # Split the training set into training and validation folds.
    # Note that the same model instance keeps training across all five folds.
    skf = StratifiedKFold(n_splits=5)
    for i, (train_index, valid_index) in enumerate(skf.split(train_apis, train_labels)):
        model.fit(train_apis[train_index], train_labels[train_index], epochs=10, batch_size=1000,
                  validation_data=(train_apis[valid_index], train_labels[valid_index]))
        print(train_index, valid_index)

    # loss, acc = model.evaluate(train_apis, train_labels)
    # print(loss)
    # print(acc)
    # print(model.predict(train_apis))
    test_apis = model.predict(test_apis)

    with open(my_result, 'wb') as f:
        pickle.dump(test_files, f)
        pickle.dump(test_apis, f)

    # print(len(test_files))
    # print(len(test_apis))

    result = []
    for i in range(len(test_files)):
        # test_apis[i] is a NumPy row (printed without commas);
        # tolist() turns it into a plain Python list (printed with commas)
        tmp = []
        a = test_apis[i].tolist()
        tmp.append(test_files[i])
        # Unlike append, extend adds several values at once
        tmp.extend(a)
        result.append(tmp)

    # newline='' keeps csv.writer from emitting blank rows on Windows
    with open(my_result_csv, 'w', newline='') as f:
        result_csv = csv.writer(f)
        result_csv.writerow(["file_id", "prob0", "prob1", "prob2", "prob3", "prob4", "prob5", "prob6", "prob7"])
        result_csv.writerows(result)
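To see how large the network gets, the tensor shapes of `textcnn()` can be traced by hand: 'same' convolutions keep the sequence length, each `MaxPool1D(2)` halves it, the five branches are concatenated along the channel axis, and `Flatten` feeds everything into `Dense(256)`. The helper below is a back-of-the-envelope sketch (its name and return format are my own), not output from Keras.

```python
def textcnn_shapes(input_len, n_branches=5, filters=32, pool=2, dense_units=256):
    """Trace the tensor shapes of the textcnn() model defined above (batch axis omitted)."""
    pooled_len = input_len // pool            # 'same' conv keeps the length, pooling divides it
    channels = n_branches * filters           # concatenate(axis=-1) stacks the branch channels
    flat = pooled_len * channels              # Flatten()
    dense_params = flat * dense_units + dense_units   # weights + biases of Dense(256)
    return {"concat": (pooled_len, channels), "flatten": flat, "dense_params": dense_params}


print(textcnn_shapes(100))
print(textcnn_shapes(5000))
```

For an input length of 100 the first dense layer has about 2 million parameters; for the length of 5000 used later in this post it grows to about 102 million, so almost all of the model's weights sit right after `Flatten`.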

Next I determined the maximum length of the raw per-file API sequences: 13264587.
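The maximum alone says little about how much a given cutoff truncates, so it helps to look at the length distribution before settling on `inputLen`. A small sketch, assuming each entry of `train_apis` is one space-separated string of API names (as the tokenizer call suggests); the toy data and the `length_stats` helper are illustrative only.

```python
import numpy as np


def length_stats(api_texts, cutoff):
    """Summarise sequence lengths and the share of files a cutoff would truncate."""
    lengths = np.array([len(text.split()) for text in api_texts])
    return {
        "max": int(lengths.max()),
        "median": float(np.median(lengths)),
        "truncated_share": float((lengths > cutoff).mean()),
    }


toy_apis = ["a b c", "a b", "a b c d e f g h"]   # stand-in for train_apis
stats = length_stats(toy_apis, cutoff=5)
print(stats)
```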

    import pickle
    import time
    import csv

    import numpy as np
    import xgboost as xgb
    from keras.preprocessing.sequence import pad_sequences
    from keras_preprocessing.text import Tokenizer
    from keras.models import Sequential, Model
    from keras.layers import (Dense, Embedding, Activation, merge, Input, Lambda, Reshape, LSTM, RNN,
                              CuDNNLSTM, SimpleRNNCell, SpatialDropout1D, Add, Maximum)
    from keras.layers import Conv1D, Flatten, Dropout, MaxPool1D, GlobalAveragePooling1D, concatenate, AveragePooling1D
    from keras import optimizers
    from keras import regularizers
    from keras.layers import BatchNormalization
    from keras.callbacks import TensorBoard, EarlyStopping, ModelCheckpoint
    from keras.utils import to_categorical
    from keras import backend as K
    from sklearn.model_selection import StratifiedKFold
    from sklearn.model_selection import train_test_split
    from sklearn.feature_extraction.text import TfidfVectorizer

    # Input pickles and output files
    my_security_train = './my_security_train.pkl'
    my_security_test = './my_security_test.pkl'
    my_result = './my_result1.pkl'
    my_result_csv = './my_result1.csv'
    # Every API sequence is padded or truncated to this many tokens
    inputLen = 5000

    # config = K.tf.ConfigProto()
    # # Allocate GPU memory on demand
    # config.gpu_options.allow_growth = True
    # session = K.tf.Session(config=config)

    # Load the pickled data into variables
    with open(my_security_train, 'rb') as f:
        train_labels = pickle.load(f)
        train_apis = pickle.load(f)

    with open(my_security_test, 'rb') as f:
        test_files = pickle.load(f)
        test_apis = pickle.load(f)

    # print(time.strftime("%Y-%m-%d-%H-%M-%S", time.localtime()))
    # tensorboard = TensorBoard('./Logs/', write_images=1, histogram_freq=1)
    # print(train_labels)

    # Convert the labels to a 1-D NumPy array (printed with spaces instead of commas)
    train_labels = np.asarray(train_labels)
    # print(train_labels)

    tokenizer = Tokenizer(num_words=None,
                          filters='!"#$%&()*+,-./:;<=>?@[\\]^_`{|}~\t\n',
                          lower=True,
                          split=" ",
                          char_level=False)
    # print(train_apis)

    # Fit the tokenizer vocabulary on both the training and the test set,
    # so that every API name that occurs later has an index
    tokenizer.fit_on_texts(train_apis)
    tokenizer.fit_on_texts(test_apis)
    # print(tokenizer.word_index)
    # Inspect the vocabulary built so far:
    # vocal = tokenizer.word_index

    # Map each API name to its integer index using the vocabulary
    train_apis = tokenizer.texts_to_sequences(train_apis)
    test_apis = tokenizer.texts_to_sequences(test_apis)
    # print(test_apis)

    # pad_sequences turns the ragged lists into a 2-D NumPy array (printed without commas).
    # Its defaults pad and truncate at the front ('pre'); both are set to 'post' here.
    train_apis = pad_sequences(train_apis, inputLen, padding='post', truncating='post')
    test_apis = pad_sequences(test_apis, inputLen, padding='post', truncating='post')
    # print(test_apis)


    def SequenceModel():
        # Sequential() is a stacked model: layers are added one on top of another to build the network
        model = Sequential()
        model.add(Dense(32, activation='relu', input_dim=inputLen))
        model.add(Dense(8, activation='softmax'))
        return model


    def lstm():
        my_input = Input(shape=(inputLen,), dtype='float64')
        # The embedding is the first layer of the network and reduces the dimensionality
        emb = Embedding(len(tokenizer.word_index) + 1, 5, input_length=inputLen)
        emb = emb(my_input)

        # Branch with kernel size 3
        net = Conv1D(16, 3, padding='same', kernel_initializer='glorot_uniform')(emb)
        net = BatchNormalization()(net)
        net = Activation('relu')(net)
        net = Conv1D(32, 3, padding='same', kernel_initializer='glorot_uniform')(net)
        net = BatchNormalization()(net)
        net = Activation('relu')(net)
        net = MaxPool1D(pool_size=4)(net)

        # Branch with kernel size 4
        net1 = Conv1D(16, 4, padding='same', kernel_initializer='glorot_uniform')(emb)
        net1 = BatchNormalization()(net1)
        net1 = Activation('relu')(net1)
        net1 = Conv1D(32, 4, padding='same', kernel_initializer='glorot_uniform')(net1)
        net1 = BatchNormalization()(net1)
        net1 = Activation('relu')(net1)
        net1 = MaxPool1D(pool_size=4)(net1)

        # Branch with kernel size 5
        net2 = Conv1D(16, 5, padding='same', kernel_initializer='glorot_uniform')(emb)
        net2 = BatchNormalization()(net2)
        net2 = Activation('relu')(net2)
        net2 = Conv1D(32, 5, padding='same', kernel_initializer='glorot_uniform')(net2)
        net2 = BatchNormalization()(net2)
        net2 = Activation('relu')(net2)
        net2 = MaxPool1D(pool_size=4)(net2)

        # Merge the three branches along the channel axis and feed them to the LSTM
        net = concatenate([net, net1, net2], axis=-1)
        net = CuDNNLSTM(256)(net)
        net = Dense(8, activation='softmax')(net)
        model = Model(inputs=my_input, outputs=net)
        return model


    def textcnn():
        kernel_size = [1, 3, 3, 5, 5]
        acti = 'relu'
        # Treat the API calls of one file as a single sentence of inputLen tokens
        my_input = Input(shape=(inputLen,), dtype='int32')
        emb = Embedding(len(tokenizer.word_index) + 1, 20, input_length=inputLen)(my_input)
        emb = SpatialDropout1D(0.2)(emb)

        net = []
        for kernel in kernel_size:
            # 32 convolution filters per branch
            con = Conv1D(32, kernel, activation=acti, padding="same")(emb)
            # Pooling window of size 2; the last axis of the output is the channel axis
            con = MaxPool1D(2)(con)
            net.append(con)
        # Concatenate the branches along the channel axis
        net = concatenate(net, axis=-1)
        net = Flatten()(net)
        net = Dropout(0.5)(net)
        net = Dense(256, activation='relu')(net)
        net = Dropout(0.5)(net)
        net = Dense(8, activation='softmax')(net)
        model = Model(inputs=my_input, outputs=net)
        return model


    # Accumulator for the test predictions of all folds (8 classes)
    test_result = np.zeros(shape=(len(test_apis), 8))
    # print(train_apis.shape)

    # print(train_labels.shape)

    # 5-fold cross-validation: split the training set into training and validation folds
    skf = StratifiedKFold(n_splits=5)
    for i, (train_index, valid_index) in enumerate(skf.split(train_apis, train_labels)):
        # model = SequenceModel()
        # A fresh model is built for every fold
        model = textcnn()
        # metrics holds only the loss by default; with 'accuracy' added,
        # model.evaluate(...) returns the accuracy as well
        model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])
        # Checkpoint rule: keep only the best weights of this fold
        model_save_path = './my_model/my_model_{}.h5'.format(str(i))
        checkpoint = ModelCheckpoint(model_save_path, save_best_only=True, save_weights_only=True)
        # Early-stopping rule
        earlystop = EarlyStopping(monitor='val_loss', min_delta=0, patience=5, verbose=0, mode='min', baseline=None,
                                  restore_best_weights=True)
        # Training saves checkpoints along the way and stops early
        model.fit(train_apis[train_index], train_labels[train_index], epochs=100, batch_size=1000,
                  validation_data=(train_apis[valid_index], train_labels[valid_index]),
                  callbacks=[checkpoint, earlystop])
        model.load_weights(model_save_path)
        # print(train_index, valid_index)
        test_tmpapis = model.predict(test_apis)
        test_result = test_result + test_tmpapis

    # loss, acc = model.evaluate(train_apis, train_labels)
    # print(loss)
    # print(acc)
    # print(model.predict(train_apis))

    # Average the predictions of the five fold models
    test_result = test_result / 5.0

    with open(my_result, 'wb') as f:
        pickle.dump(test_files, f)
        pickle.dump(test_result, f)

    # print(len(test_files))
    # print(len(test_apis))

    result = []
    for i in range(len(test_files)):
        # test_result[i] is a NumPy row (printed without commas);
        # tolist() turns it into a plain Python list (printed with commas)
        tmp = []
        a = test_result[i].tolist()
        tmp.append(test_files[i])
        # Unlike append, extend adds several values at once
        tmp.extend(a)
        result.append(tmp)

    # newline='' keeps csv.writer from emitting blank rows on Windows
    with open(my_result_csv, 'w', newline='') as f:
        result_csv = csv.writer(f)
        result_csv.writerow(["file_id", "prob0", "prob1", "prob2", "prob3", "prob4", "prob5", "prob6", "prob7"])
        result_csv.writerows(result)
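The second script trains one model per fold and averages their test predictions. A NumPy sketch of just that averaging step, with random stand-ins for `model.predict(test_apis)` (the `fake_predict` helper and the toy sizes are hypothetical):

```python
import numpy as np

n_folds, n_test, n_classes = 5, 4, 8
rng = np.random.default_rng(42)


def fake_predict(rng, n_rows, n_cols):
    """Stand-in for model.predict: rows of class probabilities that sum to 1."""
    logits = rng.normal(size=(n_rows, n_cols))
    exp = np.exp(logits - logits.max(axis=1, keepdims=True))
    return exp / exp.sum(axis=1, keepdims=True)


test_result = np.zeros((n_test, n_classes))
for fold in range(n_folds):
    test_result += fake_predict(rng, n_test, n_classes)   # accumulate each fold's prediction
test_result /= n_folds                                    # average over the folds

# The average of probability rows is again a probability row
print(test_result.sum(axis=1))
```

Because every fold contributes a valid probability row, the averaged rows can be written to the submission CSV without renormalising.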

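The result shows that, with early stopping added, training is cut off after about 20 epochs while the validation metrics have not yet saturated. How could this be improved?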
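How `patience` decides the stopping epoch can be reproduced without Keras. The sketch below mimics the rule "stop once `val_loss` has not improved for `patience` consecutive epochs" on a made-up loss curve; both the curve and the `stop_epoch` helper are assumptions for illustration, not the logs of this competition run.

```python
def stop_epoch(val_losses, patience, min_delta=0.0):
    """Return (epoch training stops at, best epoch), both 1-based, under a patience rule."""
    best, best_epoch, wait = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, best_epoch, wait = loss, epoch, 0   # improvement: reset the counter
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch              # patience exhausted
    return len(val_losses), best_epoch                # ran through all epochs


# Hypothetical curve: a noisy plateau after epoch 4, then a late improvement at epoch 12
curve = [0.90, 0.70, 0.60, 0.55, 0.56, 0.57, 0.56, 0.58, 0.56, 0.57, 0.56, 0.50, 0.49, 0.48]
print(stop_epoch(curve, patience=5))
print(stop_epoch(curve, patience=10))
```

On a curve like this, a small patience stops on the plateau while a larger one reaches the later improvement, so raising `patience` is one thing that could be tried. Whether the real validation curve behaves this way cannot be told from the numbers above.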

I also want to try padding at the front (pre-padding), as some solutions online do. Does that make a big difference?
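The difference between the two settings is easy to see on toy data. The function below re-implements the padding and truncating semantics of `pad_sequences` in NumPy for illustration (`pad` is a hypothetical helper, not the Keras function): 'pre' puts the zeros in front and, when truncating, keeps the end of the sequence; 'post' does the opposite.

```python
import numpy as np


def pad(sequences, maxlen, padding="pre", truncating="pre"):
    """Pad with zeros / truncate every sequence to exactly maxlen items."""
    out = np.zeros((len(sequences), maxlen), dtype="int32")
    for row, seq in enumerate(sequences):
        # 'pre' truncation drops the beginning, 'post' truncation drops the end
        seq = seq[-maxlen:] if truncating == "pre" else seq[:maxlen]
        if not seq:
            continue
        if padding == "pre":
            out[row, -len(seq):] = seq    # zeros in front
        else:
            out[row, :len(seq)] = seq     # zeros behind
    return out


seqs = [[1, 2, 3], [4, 5, 6, 7, 8, 9]]
print(pad(seqs, 4, padding="pre", truncating="pre"))
print(pad(seqs, 4, padding="post", truncating="post"))
```

With post-truncation the model only ever sees the first `inputLen` API calls of a file, with pre-truncation only the last ones, so for long traces the two settings feed it different parts of the behaviour.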
