Deep Learning and the Triumph of Empiricism


By Zachary Chase Lipton, July 2015

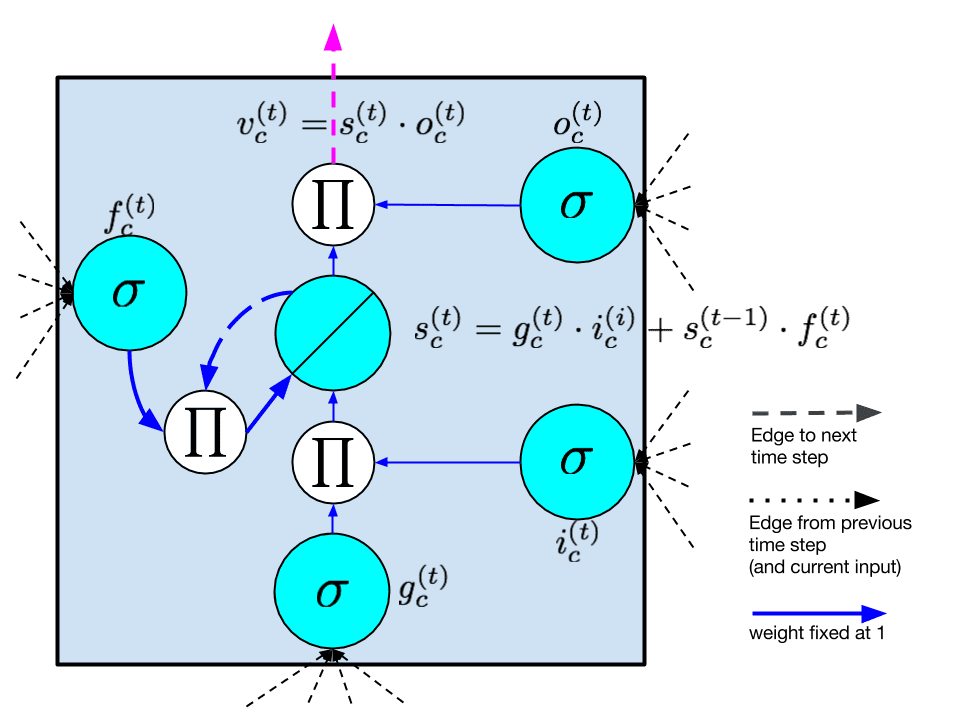

Deep learning is now the standard-bearer for many tasks in supervised machine learning. It could also be argued that deep learning has yielded the most practically useful algorithms in unsupervised machine learning in a few decades. The excitement stemming from these advances has provoked a flurry of research and sensational headlines from journalists. While I am wary of the hype, I too find the technology exciting, and recently joined the party, issuing a 30-page critical review on Recurrent Neural Networks (RNNs) for sequence learning.

But many in the machine learning research community are not fawning over the deepness. In fact, for many who fought to resuscitate artificial intelligence research by grounding it in the language of mathematics and protecting it with theoretical guarantees, deep learning represents a fad. Worse, to some it might seem to be a regression.[1]

In this article, I'll try to offer a high-level and even-handed analysis of the usefulness of theoretical guarantees and why they might not always be as practically useful as they are intellectually rewarding. More to the point, I'll offer arguments to explain why, after so many years of increasingly statistically sound machine learning, many of today's best-performing algorithms offer no theoretical guarantees.

Guarantee What?

A guarantee is a statement that can be made with mathematical certainty about the behavior, performance or complexity of an algorithm. All else being equal, we would love to say that given sufficient time our algorithm A can find a classifier H from some class of models {H1, H2, ...} that performs no worse than H*, where H* is the best classifier in the class. This is, of course, with respect to some fixed loss function L. Short of that, we'd love to bound the difference or ratio between the performance of H and H* by some constant. Short of such an absolute bound, we'd love to be able to prove that, with high probability, H and H* give similar values after running our algorithm for some fixed period of time.
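One way to write down the three kinds of guarantee described above is sketched here. The notation is my own assumption, not taken from any particular paper: R(H) stands for the expected loss of H under L, and c, ε and δ are placeholder constants.

```latex
% Assumed notation: R(H) is the expected loss of classifier H under the loss L,
% and H^* is the best classifier in the class \{H_1, H_2, \ldots\}.

% 1. Optimality within the class:
R(H) \le R(H^*)

% 2. A bounded gap or a bounded ratio, for constants \epsilon \ge 0 and c \ge 1:
R(H) - R(H^*) \le \epsilon
\qquad \text{or} \qquad
R(H) \le c \, R(H^*)

% 3. A high-probability bound, for a small failure probability \delta:
\Pr\bigl[ R(H) - R(H^*) \le \epsilon \bigr] \ge 1 - \delta
```

Note that every statement compares H only with H*, the best member of the chosen class, and says nothing about how good H* itself is.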

Many existing algorithms offer strong statistical guarantees. Linear regression admits an exact solution. Logistic regression is guaranteed to converge over time. Deep learning algorithms, generally, offer nothing in the way of guarantees. Given an arbitrarily bad starting point, I know of no theoretical proof that a neural network trained by some variant of SGD will necessarily improve over time and not be trapped in a local minimum. There is a flurry of recent work which suggests, reasonably, that saddle points outnumber local minima on the error surfaces of neural networks (an m-dimensional surface where m is the number of learned parameters, typically the weights on edges between nodes). However, this is not the same as proving that local minima do not exist or that they cannot be arbitrarily bad.
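To make the contrast concrete, here is a minimal sketch in plain Python. The first function computes the exact least-squares line for one-dimensional data. The second runs plain gradient descent (not SGD, and not a neural network) on a toy non-convex function that I made up for illustration, `w**4 - 3*w**2 + w`. The function names, learning rate and step count are all assumptions chosen for the example.

```python
def fit_line(xs, ys):
    """Exact least-squares fit of y = slope * x + intercept (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def toy_loss(w):
    """Hypothetical non-convex loss with two separate local minima."""
    return w ** 4 - 3 * w ** 2 + w


def toy_loss_gradient(w):
    """Derivative of toy_loss with respect to w."""
    return 4 * w ** 3 - 6 * w + 1


def gradient_descent(start, learning_rate=0.01, steps=2000):
    """Plain gradient descent on toy_loss from a given starting point."""
    w = start
    for _ in range(steps):
        w -= learning_rate * toy_loss_gradient(w)
    return w


# The closed form recovers the line regardless of any starting point.
slope, intercept = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])

# Gradient descent ends in a different minimum depending on where it starts.
w_left = gradient_descent(-2.0)
w_right = gradient_descent(2.0)
print(slope, intercept)
print(w_left, toy_loss(w_left))
print(w_right, toy_loss(w_right))
```

On this toy function the run started at 2.0 settles in the shallower of the two minima, so its final loss is worse than that of the run started at -2.0. Nothing in the procedure itself signals that a better solution exists elsewhere.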

Problems with Guarantees

Provable mathematical properties are obviously desirable. They may have even saved machine learning, giving succor at a time when the field of AI was ill-defined, over-promising, and under-delivering. And yet many of today's best algorithms offer nothing in the way of guarantees. How is this possible?

I'll explain several reasons in the following paragraphs. They include:

- Guarantees are typically relative to a small class of hypotheses.

- Guarantees are often restricted to worst-case analysis, but the real world seldom presents the worst case.

- Guarantees are often predicated on incorrect assumptions about data.

Selecting a Winner from a Weak Pool

To begin, theoretical guarantees usually assure that a hypothesis is close to the best hypothesis in some given class. This in no way guarantees that there exists a hypothesis in the given class capable of performing satisfactorily.

Here's a heavy-handed example: I desire a human editor to assist me in composing a document. Spell-check may come with guarantees about how it will behave. It will identify certain misspellings with 100% accuracy. But existing automated proofreading tools cannot provide the insight offered by an intelligent human. Of course, a human offers nothing in the way of mathematical guarantees. The human may fall asleep, ignore my emails, or respond nonsensically. Nevertheless, he or she is capable of expressing a far greater range of useful ideas than Clippy.

A cynical take might be that there are two ways to improve a theoretical guarantee. One is to improve the algorithm. Another is to weaken the hypothesis class of which it is a member. While neural networks offer little in the way of guarantees, they offer a far richer set of potential hypotheses than most better understood machine learning models. As heuristic learning techniques and more powerful computers have eroded the obstacles to effective learning, it seems clear that for many models, this increased expressiveness is essential for making predictions of practical utility.

The Worst Case May Not Matter

Guarantees are most often given in the worst case. By guaranteeing a result that is within a factor epsilon of optimal, we say that the worst case will be no worse than a factor epsilon. But in practice, the worst case scenario may never occur. Real world data is typically highly structured, and worst case scenarios may have a structure such that there is no overlap between a typical and pathological dataset. In these settings, the worst case bound still holds, but it may be the case that all algorithms perform much better. There may not be a reason to believe that the algorithm with the better worst case guarantee will have a better typical case performance.

Predicated on Provably Incorrect Assumptions

Another reason why models with theoretical soundness may not translate into real-world performance is that the assumptions about data necessary to produce theoretical results are often known to be false. Consider Latent Dirichlet Allocation (LDA) for example, a well understood and remarkably useful algorithm for topic modeling. Many theoretical proofs about LDA are predicated upon the assumption that a document is associated with a distribution over topics. Each topic is in turn associated with a distribution over all words in the vocabulary. The generative process then proceeds as follows. For each word in a document, first a topic is chosen stochastically according to the relative probabilities of each topic. Then, conditioned on the chosen topic, a word is chosen according to that topic's word distribution. This process repeats until all words are chosen.
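The generative story described above can be sketched in a few lines of standard-library Python. This is only an illustration of the assumed process, not an LDA inference algorithm. The tiny vocabulary, the two topics, the Dirichlet parameters and the function names are all made up for the example.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw a probability vector from a Dirichlet via normalized gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def generate_document(doc_topic_dist, topic_word_dists, vocab, length, rng):
    """Generate `length` words following the generative process described above."""
    topic_ids = range(len(topic_word_dists))
    words = []
    for _ in range(length):
        # First choose a topic according to the document's topic distribution.
        topic = rng.choices(topic_ids, weights=doc_topic_dist, k=1)[0]
        # Then choose a word from that topic's distribution over the vocabulary.
        word = rng.choices(vocab, weights=topic_word_dists[topic], k=1)[0]
        words.append(word)
    return words


rng = random.Random(0)
vocab = ["gene", "cell", "goal", "match"]  # hypothetical vocabulary
# One word distribution per topic, and one topic distribution for the document.
topic_word_dists = [sample_dirichlet([0.5] * len(vocab), rng) for _ in range(2)]
doc_topic_dist = sample_dirichlet([1.0, 1.0], rng)
document = generate_document(doc_topic_dist, topic_word_dists, vocab, 8, rng)
print(document)
```

Each word is drawn independently of its neighbors, and the document length is fixed before any word is generated.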

Clearly, this assumption does not hold on any real-world natural language dataset. In real documents, words are chosen contextually and depend highly on the sentences they are placed in. Additionally, document lengths aren't arbitrarily predetermined, although this may be the case in undergraduate coursework. However, given the assumption of such a generative process, many elegant proofs about theoretical properties of LDA hold.

To be clear, LDA is indeed a broadly useful, state-of-the-art algorithm. Further, I am convinced that theoretical investigation of the properties of algorithms, even under unrealistic assumptions, is a worthwhile and necessary step to improve our understanding and lay the groundwork for more general and powerful theorems later. In this article, I seek only to contextualize the nature of much known theory and to give intuition to data science practitioners about why the algorithms with the most favorable theoretical properties are not always the best performing empirically.

The Triumph of Empiricism

One might ask: if not guided entirely by theory, what allows methods like deep learning to prevail? Further, why are empirical methods backed by intuition so broadly successful now, even though they fell out of favor decades ago?

In answer to these questions, I believe that the existence of comparatively humongous, well-labeled datasets like ImageNet is responsible for the resurgence in heuristic methods. Given sufficiently large datasets, the risk of overfitting is low. Further, validating against test data offers a means to address the typical case, instead of focusing on the worst case. Additionally, the advances in parallel computing and memory size have made it possible to follow up on many hypotheses simultaneously with empirical experiments. Empirical studies backed by strong intuition offer a path forward when we reach the limits of our formal understanding.
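As a minimal sketch of what validating against test data means in practice, the snippet below fits a one-parameter threshold classifier on synthetic data and then measures its error on examples it never saw during fitting. The data generator, the noise level, the 400/200 split and all function names are assumptions made for illustration.

```python
import random


def make_data(n, rng):
    """Synthetic 1-D data: the label is 1 when x plus Gaussian noise is positive."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        y = 1 if x + rng.gauss(0.0, 0.2) > 0 else 0
        data.append((x, y))
    return data


def error_rate(threshold, data):
    """Fraction of examples misclassified by the rule 'predict 1 if x > threshold'."""
    wrong = sum(1 for x, y in data if (1 if x > threshold else 0) != y)
    return wrong / len(data)


def fit_threshold(train):
    """Pick the training point that, used as a threshold, minimizes training error."""
    candidates = sorted(x for x, _ in train)
    return min(candidates, key=lambda t: error_rate(t, train))


rng = random.Random(0)
data = make_data(600, rng)
train, test = data[:400], data[400:]  # held-out examples play no role in fitting

threshold = fit_threshold(train)
train_error = error_rate(threshold, train)
test_error = error_rate(threshold, test)
print(threshold, train_error, test_error)
```

The held-out error is an empirical estimate of performance on data drawn from the same distribution. It says something about the typical case for this data source and nothing about the worst case.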

Caveats

For all the success of deep learning in machine perception and natural language, one could reasonably argue that, by far, the three most valuable machine learning algorithms are linear regression, logistic regression, and k-means clustering, all of which are well understood theoretically. A reasonable counter-argument to the idea of a triumph of empiricism might be that by far the best algorithms are theoretically motivated and grounded, and that empiricism is responsible only for the newest breakthroughs, not the most significant.

Few Things Are Guaranteed

When attainable, theoretical guarantees are beautiful. They reflect clear thinking and provide deep insight into the structure of a problem. Given a working algorithm, a theory which explains its performance deepens understanding and provides a basis for further intuition. Given the absence of a working algorithm, theory offers a path of attack.

However, there is also beauty in the idea that well-founded intuitions paired with rigorous empirical study can yield consistently functioning systems that outperform better-understood models, and sometimes even humans, at many important tasks. Empiricism offers a path forward for applications where formal analysis is stifled, and potentially opens new directions that might eventually admit deeper theoretical understanding.

[1] Yes, corny pun.

Zachary Chase Lipton is a PhD student in the Computer Science Engineering department at the University of California, San Diego. Funded by the Division of Biomedical Informatics, he is interested in both theoretical foundations and applications of machine learning. In addition to his work at UCSD, he has interned at Microsoft Research Labs.


