自然语言14_Stemming words with NLTK

python机器学习-乳腺癌细胞挖掘(博主亲自录制视频)https://study.163.com/course/introduction.htm?courseId=1005269003&utm_campaign=commission&utm_source=cp-400000000398149&utm_medium=share

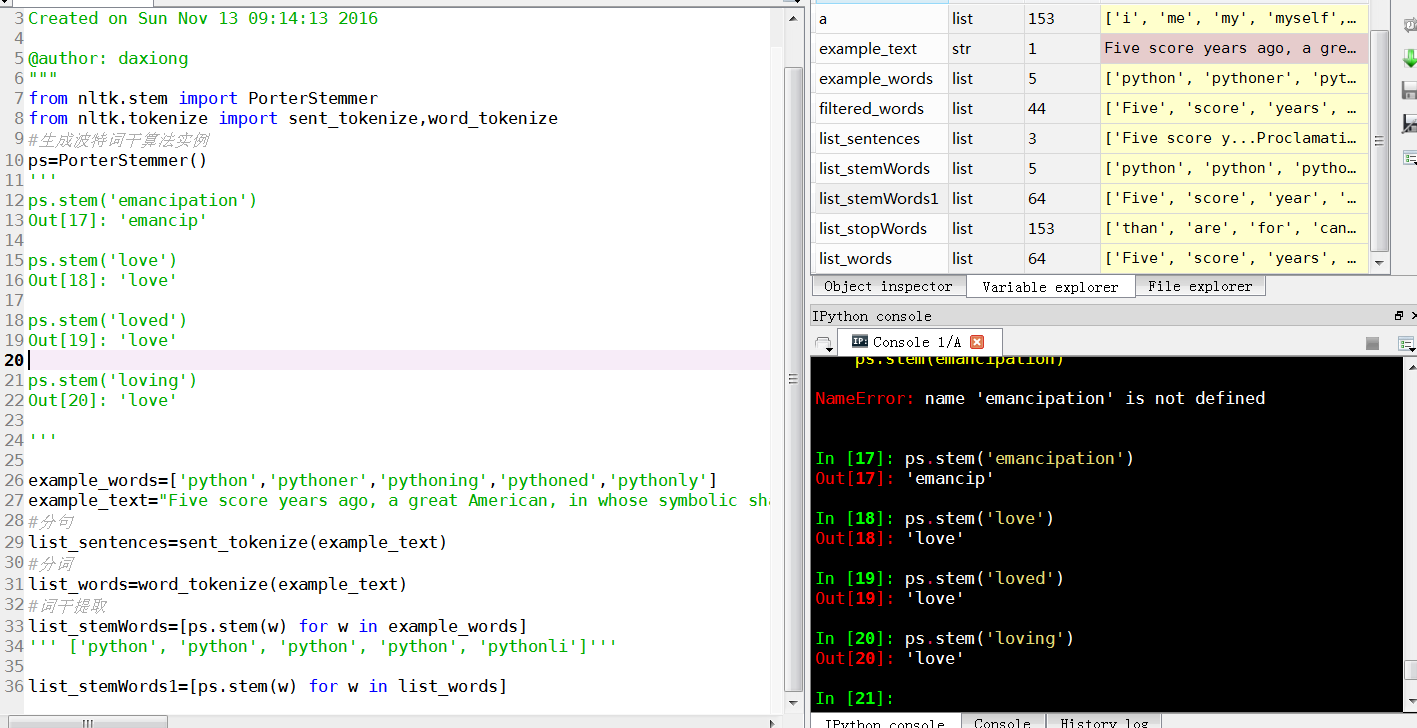

# -*- coding: utf-8 -*-

"""

Created on Sun Nov 13 09:14:13 2016 @author: daxiong

"""

from nltk.stem import PorterStemmer

from nltk.tokenize import sent_tokenize,word_tokenize

#生成波特词干算法实例

ps=PorterStemmer()

'''

ps.stem('emancipation')

Out[17]: 'emancip' ps.stem('love')

Out[18]: 'love' ps.stem('loved')

Out[19]: 'love' ps.stem('loving')

Out[20]: 'love' ''' example_words=['python','pythoner','pythoning','pythoned','pythonly']

example_text="Five score years ago, a great American, in whose symbolic shadow we stand today, signed the Emancipation Proclamation. This momentous decree came as a great beacon light of hope to millions of Negro slaves who had been seared in the flames of withering injustice. It came as a joyous daybreak to end the long night of bad captivity."

#分句

list_sentences=sent_tokenize(example_text)

#分词

list_words=word_tokenize(example_text)

#词干提取

list_stemWords=[ps.stem(w) for w in example_words]

''' ['python', 'python', 'python', 'python', 'pythonli']''' list_stemWords1=[ps.stem(w) for w in list_words]

Stemming words with NLTK

The idea of stemming is a sort of normalizing method. Many

variations of words carry the same meaning, other than when tense is

involved.

The reason why we stem is to shorten the lookup, and normalize sentences.

Consider:

I was riding in the car.

This sentence means the same thing. in the car is the same. I was is

the same. the ing denotes a clear past-tense in both cases, so is it

truly necessary to differentiate between ride and riding, in the case of

just trying to figure out the meaning of what this past-tense activity

was?

No, not really.

This is just one minor example, but imagine every word in the English

language, every possible tense and affix you can put on a word. Having

individual dictionary entries per version would be highly redundant and

inefficient, especially since, once we convert to numbers, the "value"

is going to be identical.

One of the most popular stemming algorithms is the Porter stemmer, which has been around since 1979.

First, we're going to grab and define our stemmer:

from nltk.stem import PorterStemmer

from nltk.tokenize import sent_tokenize, word_tokenize ps = PorterStemmer()

Now, let's choose some words with a similar stem, like:

example_words = ["python","pythoner","pythoning","pythoned","pythonly"]

Next, we can easily stem by doing something like:

for w in example_words:

print(ps.stem(w))

Our output:

python

python

python

python

pythonli

Now let's try stemming a typical sentence, rather than some words:

new_text = "It is important to by very pythonly while you are pythoning with python. All pythoners have pythoned poorly at least once."

words = word_tokenize(new_text) for w in words:

print(ps.stem(w))

Now our result is:

It

is

import

to

by

veri

pythonli

while

you

are

python

with

python

.

All

python

have

python

poorli

at

least

onc

.

Next up, we're going to discuss something a bit more advanced from the NLTK module, Part of Speech tagging, where we can use the NLTK module to identify the parts of speech for each word in a sentence.

自然语言14_Stemming words with NLTK的更多相关文章

- 自然语言处理(1)之NLTK与PYTHON

自然语言处理(1)之NLTK与PYTHON 题记: 由于现在的项目是搜索引擎,所以不由的对自然语言处理产生了好奇,再加上一直以来都想学Python,只是没有机会与时间.碰巧这几天在亚马逊上找书时发现了 ...

- 自然语言23_Text Classification with NLTK

QQ:231469242 欢迎喜欢nltk朋友交流 https://www.pythonprogramming.net/text-classification-nltk-tutorial/?compl ...

- 自然语言20_The corpora with NLTK

QQ:231469242 欢迎喜欢nltk朋友交流 https://www.pythonprogramming.net/nltk-corpus-corpora-tutorial/?completed= ...

- 自然语言19.1_Lemmatizing with NLTK(单词变体还原)

QQ:231469242 欢迎喜欢nltk朋友交流 https://www.pythonprogramming.net/lemmatizing-nltk-tutorial/?completed=/na ...

- 自然语言13_Stop words with NLTK

https://www.pythonprogramming.net/stop-words-nltk-tutorial/?completed=/tokenizing-words-sentences-nl ...

- 自然语言处理2.1——NLTK文本语料库

1.获取文本语料库 NLTK库中包含了大量的语料库,下面一一介绍几个: (1)古腾堡语料库:NLTK包含古腾堡项目电子文本档案的一小部分文本.该项目目前大约有36000本免费的电子图书. >&g ...

- python自然语言处理函数库nltk从入门到精通

1. 关于Python安装的补充 若在ubuntu系统中同时安装了Python2和python3,则输入python或python2命令打开python2.x版本的控制台:输入python3命令打开p ...

- Python自然语言处理实践: 在NLTK中使用斯坦福中文分词器

http://www.52nlp.cn/python%E8%87%AA%E7%84%B6%E8%AF%AD%E8%A8%80%E5%A4%84%E7%90%86%E5%AE%9E%E8%B7%B5-% ...

- 推荐《用Python进行自然语言处理》中文翻译-NLTK配套书

NLTK配套书<用Python进行自然语言处理>(Natural Language Processing with Python)已经出版好几年了,但是国内一直没有翻译的中文版,虽然读英文 ...

随机推荐

- 软件工程(FZU2015)增补作业

说明 张老师为FZU软件工程2015班级添加了一次增补作业,总分10分,deadline是2016/01/01-2016/01/03 前11次正式作业和练习的迭代评分见:http://www.cnbl ...

- openldap+phpadmin的搭建安装

1.概念介绍 LDAP是轻量目录访问协议,英文全称是Lightweight Directory Access Protocol,一般都简称为LDAP.它是基于X.500标准的,但是简单多了并且可以根据 ...

- 爱上MVC3系列~Html.BeginForm与Ajax.BeginForm

Html.BeginForm与Ajax.BeginForm都是MVC架构中的表单元素,它们从字面上可以看到区别,即Html.BeginForm是普通的表单提交,而Ajax.BeginForm是支持异步 ...

- mysql之路【第三篇】

1,查看表的结构 desc 表名; 查看表的详细结构 show create table; show create table \G; (加上G格式化输出), 2,修改表 2. ...

- HashMap Hashtable区别

http://blog.csdn.net/java2000_net/archive/2008/06/05/2512510.aspx 我们先看2个类的定义 public class Hashtable ...

- js json 对象相互转换

字符串转对象(strJSON代表json字符串) var obj = eval(strJSON); var obj = strJSON.parseJSON(); var obj = JSO ...

- jquery $.each 和for怎么跳出循环终止本次循环

1.for循环中我们使用continue:终止本次循环计入下一个循环,使用break终止整个循环. 2.而在jquery中 $.each则对应的使用return true 和return false. ...

- Java反编译插件JadClipse

Java反编译是很容易的,现在就介绍一个反编译插件,以后我们通过Ctrl+鼠标左键查看源码就容易得多了,不用再担心源码找不到了,配置过程很简单的. 准备: 1.下载JadClipse(jar文件,ec ...

- js 对象 copy 对象

function clone(myObj) { if (typeof (myObj) != 'object') return myObj; if (myObj == null) return myOb ...

- css设置background图片的位置实现居中

/* 例 1: 默认值 */ background-position: 0 0; /* 元素的左上角 */ /* 例 2: 把图片向右移动 */ background-position: 75px 0 ...