RabbitMQ Message Patterns (Part 2)

Goals:

RabbitMQ Message Patterns (Part 1): https://www.cnblogs.com/huangting/p/11994539.html

Consumer-side rate limiting

Message ACK and requeueing

TTL messages

Dead-letter queues

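Consumer-side rate limiting

What is consumer-side rate limiting?

Consider a scenario: the RabbitMQ server holds tens of thousands of unprocessed messages, and we start a single consumer client. The following happens:

A huge number of messages is pushed to the client all at once, but a single client cannot process that much data at the same time.

RabbitMQ's solution for consumer-side rate limiting

RabbitMQ provides a QoS (quality of service) feature: provided that automatic acknowledgement is turned off, once a given number of messages (the QoS value, set per consumer or per channel) are still unacknowledged, no new messages are delivered until some of them are acked.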

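void basicQos(int prefetchSize, int prefetchCount, boolean global) throws IOException;

prefetchSize: the maximum size of message content the server will deliver; 0 means no limit.

prefetchCount: tells RabbitMQ not to push more than N messages to a consumer at once. Once N messages are unacked, the consumer receives nothing further until one of them is acked.

global: true/false. Whether the setting applies to the whole channel; in other words, whether the limit is channel-level or consumer-level.

Notes:

RabbitMQ does not implement prefetchSize, so leave it at 0. The global flag is supported, though RabbitMQ gives it its own meaning (false applies the limit to each consumer on the channel, true shares one limit across all consumers on the channel); it is not explored further here.

prefetchCount only takes effect when no_ack=false, that is, with manual acknowledgement. With automatic acknowledgement these values have no effect.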
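To make the prefetch window concrete, here is a toy in-memory model in plain Java. It is only a sketch: `PrefetchSketch` and its methods are hypothetical names invented for illustration, not part of the RabbitMQ client, and it assumes a single consumer using manual acks.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Toy model of a queue that honours a prefetch limit. Not the RabbitMQ client API. */
class PrefetchSketch {
    private final Deque<String> ready = new ArrayDeque<>();  // messages waiting in the queue
    private final List<String> unacked = new ArrayList<>();  // delivered but not yet acked
    private final int prefetchCount;                          // 0 means "no limit"

    PrefetchSketch(int prefetchCount) {
        this.prefetchCount = prefetchCount;
    }

    void publish(String message) {
        ready.addLast(message);
    }

    /** Pushes as many messages as the prefetch window allows and returns them. */
    List<String> deliver() {
        List<String> delivered = new ArrayList<>();
        while (!ready.isEmpty() && (prefetchCount == 0 || unacked.size() < prefetchCount)) {
            String message = ready.removeFirst();
            unacked.add(message);
            delivered.add(message);
        }
        return delivered;
    }

    /** Acknowledges one outstanding message, which frees a slot in the window. */
    void ack(String message) {
        unacked.remove(message);
    }

    int readyCount() {
        return ready.size();
    }

    public static void main(String[] args) {
        PrefetchSketch queue = new PrefetchSketch(1);
        for (int i = 0; i < 5; i++) {
            queue.publish("msg-" + i);
        }
        List<String> first = queue.deliver();
        System.out.println("delivered " + first + ", still queued: " + queue.readyCount());
        queue.ack(first.get(0));
        System.out.println("after ack, delivered " + queue.deliver());
    }
}
```

With a prefetch count of 1, only the first message leaves the queue; the next one is delivered only after the ack, which is the behaviour the QoS setting is meant to provide.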

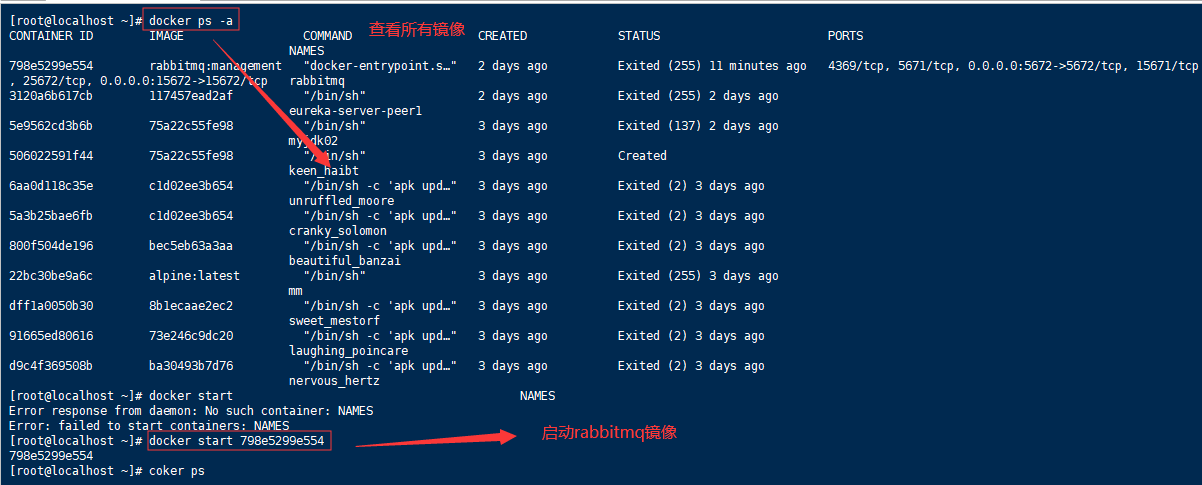

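First start the virtual machine, boot CentOS, and start the RabbitMQ image.

Otherwise running the project in IDEA later will fail, because the client cannot connect to the broker.

Custom consumer code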

package com.javaxh.rabbitmqapi.limit;

import com.rabbitmq.client.AMQP;
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.DefaultConsumer;
import com.rabbitmq.client.Envelope;

import java.io.IOException;

public class MyConsumer extends DefaultConsumer {

    private Channel channel;

    public MyConsumer(Channel channel) {
        super(channel);
        this.channel = channel;
    }

    @Override
    public void handleDelivery(String consumerTag, Envelope envelope,
                               AMQP.BasicProperties properties, byte[] body) throws IOException {
        System.err.println("-----------consume message----------");
        System.err.println("consumerTag: " + consumerTag);
        System.err.println("envelope: " + envelope);
        System.err.println("properties: " + properties);
        System.err.println("body: " + new String(body));

        // Manual ack of this single delivery (multiple = false).
        channel.basicAck(envelope.getDeliveryTag(), false);
    }
}

Consumer code:

package com.javaxh.rabbitmqapi.limit;

import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

public class Consumer {

    public static void main(String[] args) throws Exception {
        ConnectionFactory connectionFactory = new ConnectionFactory();
        connectionFactory.setHost("192.168.239.131");
        connectionFactory.setPort(5672);
        connectionFactory.setVirtualHost("/");

        Connection connection = connectionFactory.newConnection();
        Channel channel = connection.createChannel();

        String exchangeName = "test_qos_exchange";
        String queueName = "test_qos_queue";
        String routingKey = "qos.#";

        channel.exchangeDeclare(exchangeName, "topic", true, false, null);
        channel.queueDeclare(queueName, true, false, false, null);
        channel.queueBind(queueName, exchangeName, routingKey);

        // 1. Rate limiting: the first requirement is that autoAck is set to false.
        // prefetchSize = 0 (no size limit), prefetchCount = 1, global = false.
        channel.basicQos(0, 1, false);
        channel.basicConsume(queueName, false, new MyConsumer(channel));
    }
}

Producer code:

package com.javaxh.rabbitmqapi.limit;

import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

public class Producer {

    public static void main(String[] args) throws Exception {
        ConnectionFactory connectionFactory = new ConnectionFactory();
        connectionFactory.setHost("192.168.239.131");
        connectionFactory.setPort(5672);
        connectionFactory.setVirtualHost("/");

        Connection connection = connectionFactory.newConnection();
        Channel channel = connection.createChannel();

        String exchange = "test_qos_exchange";
        String routingKey = "qos.save";
        String msg = "Hello RabbitMQ QOS Message";

        // Publish five messages with mandatory = true and no extra properties.
        for (int i = 0; i < 5; i++) {
            channel.basicPublish(exchange, routingKey, true, null, msg.getBytes());
        }
    }
}

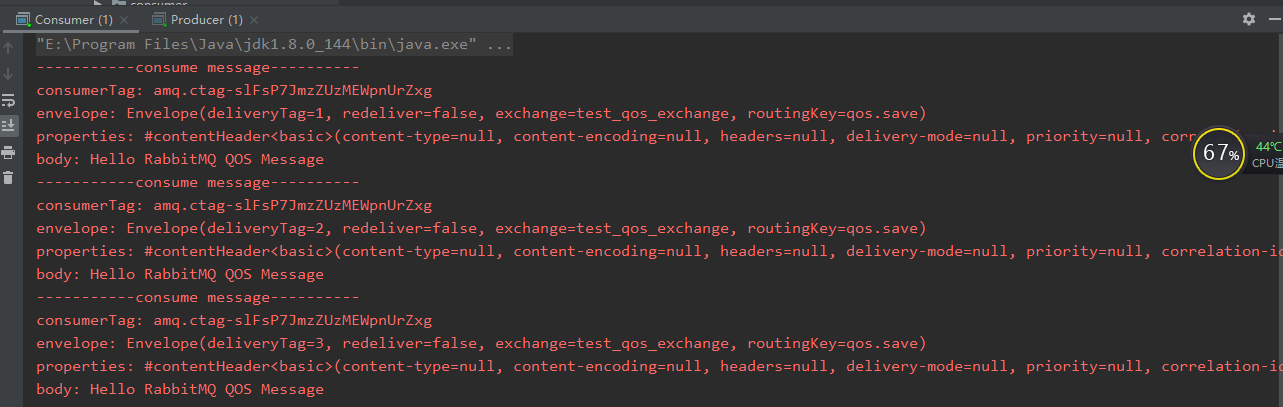

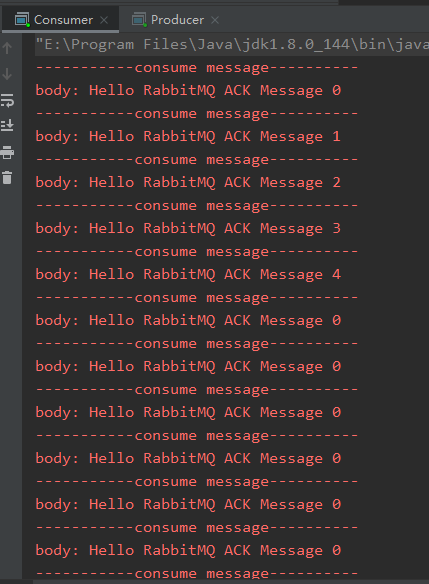

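Run the consumer first, then the producer.

Message ACK and requeueing

Manual ACK and NACK on the consumer side

When the consumer fails to process a message because of a business exception, we can log the failure and compensate afterwards.

For severe problems such as a server crash, we need manual ACK to guarantee that a message counts as consumed only after it has actually been processed.

Requeueing on the consumer side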

Custom consumer code

package com.javaxh.rabbitmqapi.ack;

import com.rabbitmq.client.AMQP;
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.DefaultConsumer;
import com.rabbitmq.client.Envelope;

import java.io.IOException;

public class MyConsumer extends DefaultConsumer {

    private Channel channel;

    public MyConsumer(Channel channel) {
        super(channel);
        this.channel = channel;
    }

    @Override
    public void handleDelivery(String consumerTag, Envelope envelope,
                               AMQP.BasicProperties properties, byte[] body) throws IOException {
        System.err.println("-----------consume message----------");
        System.err.println("body: " + new String(body));

        // Simulate slow processing.
        try {
            Thread.sleep(2000);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }

        if ((Integer) properties.getHeaders().get("num") == 0) {
            // Negative acknowledgement; requeue = true puts the message back in the queue.
            channel.basicNack(envelope.getDeliveryTag(), false, true);
        } else {
            channel.basicAck(envelope.getDeliveryTag(), false);
        }
    }
}

Consumer code:

package com.javaxh.rabbitmqapi.ack;

import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

public class Consumer {

    public static void main(String[] args) throws Exception {
        ConnectionFactory connectionFactory = new ConnectionFactory();
        connectionFactory.setHost("192.168.239.131");
        connectionFactory.setPort(5672);
        connectionFactory.setVirtualHost("/");

        Connection connection = connectionFactory.newConnection();
        Channel channel = connection.createChannel();

        String exchangeName = "test_ack_exchange";
        String queueName = "test_ack_queue";
        String routingKey = "ack.#";

        channel.exchangeDeclare(exchangeName, "topic", true, false, null);
        channel.queueDeclare(queueName, true, false, false, null);
        channel.queueBind(queueName, exchangeName, routingKey);

        // Manual acknowledgement requires autoAck = false.
        channel.basicConsume(queueName, false, new MyConsumer(channel));
    }
}

Producer code:

package com.javaxh.rabbitmqapi.ack;

import com.rabbitmq.client.AMQP;
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

import java.util.HashMap;
import java.util.Map;

public class Producer {

    public static void main(String[] args) throws Exception {
        ConnectionFactory connectionFactory = new ConnectionFactory();
        connectionFactory.setHost("192.168.239.131");
        connectionFactory.setPort(5672);
        connectionFactory.setVirtualHost("/");

        Connection connection = connectionFactory.newConnection();
        Channel channel = connection.createChannel();

        String exchange = "test_ack_exchange";
        String routingKey = "ack.save";

        for (int i = 0; i < 5; i++) {
            // The "num" header tells the consumer which message to nack (num == 0).
            Map<String, Object> headers = new HashMap<String, Object>();
            headers.put("num", i);

            AMQP.BasicProperties properties = new AMQP.BasicProperties.Builder()
                    .deliveryMode(2) // persistent
                    .contentEncoding("UTF-8")
                    .headers(headers)
                    .build();

            String msg = "Hello RabbitMQ ACK Message " + i;
            channel.basicPublish(exchange, routingKey, true, properties, msg.getBytes());
        }
    }
}

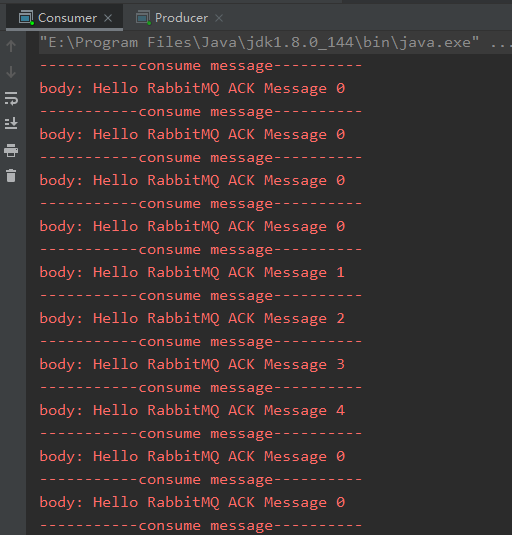

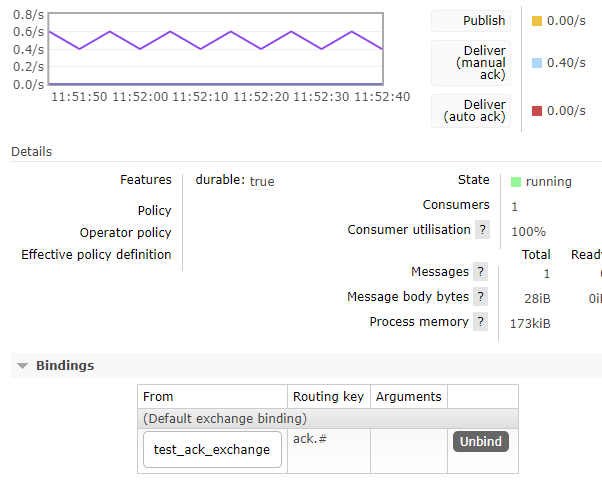

Run the consumer first, then the producer.

Then check the result in the RabbitMQ management console.
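The effect of `basicNack` with requeue=true in the consumer above can be illustrated with a small plain-Java model. This is a sketch under simplifying assumptions: `RequeueSketch` is a hypothetical name, the requeued message is simply appended to the tail of an in-memory queue (a real broker decides the position itself), and a delivery cap stops the loop.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Toy model of nack with requeue = true. Not the RabbitMQ client API. */
class RequeueSketch {

    /**
     * Delivers messages numbered 0..messageCount-1 for at most maxDeliveries
     * deliveries and returns the delivery log. Message 0 is always nacked and
     * requeued; every other message is acked.
     */
    static List<Integer> run(int messageCount, int maxDeliveries) {
        Deque<Integer> queue = new ArrayDeque<>();
        for (int num = 0; num < messageCount; num++) {
            queue.addLast(num);
        }
        List<Integer> deliveries = new ArrayList<>();
        while (!queue.isEmpty() && deliveries.size() < maxDeliveries) {
            int num = queue.removeFirst();
            deliveries.add(num);
            if (num == 0) {
                // nack + requeue: the message goes back into the queue
                // (simplified here: always at the tail).
                queue.addLast(num);
            }
            // Any other message is acked and is gone from the queue.
        }
        return deliveries;
    }

    public static void main(String[] args) {
        System.out.println(RequeueSketch.run(5, 10));
    }
}
```

In this model messages 1 to 4 are consumed once, while message 0 keeps coming back until the cap is hit. A message that can never be processed will loop like this indefinitely when it is always requeued.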

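TTL messages

TTL is short for Time To Live, that is, the lifetime of a message.

RabbitMQ supports a per-message expiration time, which can be specified when the message is published.

RabbitMQ also supports a per-queue expiration time. It is measured from the moment a message enters the queue; once a message has stayed longer than the queue's configured timeout, it is removed automatically.

Console-only demonstration (showing how queue-level TTL behaves)

With a queue-level TTL, every message in that queue gets the same lifetime.

Note: a per-message TTL is set on the message itself and is independent of any exchange, queue, or consumer settings.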
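The two TTL sources can be combined in a small plain-Java sketch. It assumes the rule from the RabbitMQ documentation that when both a per-message TTL (the `expiration` property, a string of milliseconds) and a per-queue TTL (the `x-message-ttl` queue argument) are present, the lower value applies. `TtlSketch` and its method names are hypothetical and only model the arithmetic; they are not client API.

```java
import java.util.OptionalLong;

/** Toy model of how message and queue TTL combine. Not the RabbitMQ client API. */
class TtlSketch {

    /** Parses the "expiration" property, which carries milliseconds as a string. */
    static OptionalLong parseExpiration(String expiration) {
        if (expiration == null || expiration.isEmpty()) {
            return OptionalLong.empty();
        }
        return OptionalLong.of(Long.parseLong(expiration));
    }

    /** Picks the lower TTL when both are set, otherwise whichever one is present. */
    static OptionalLong effectiveTtl(OptionalLong messageTtl, OptionalLong queueTtl) {
        if (messageTtl.isPresent() && queueTtl.isPresent()) {
            return OptionalLong.of(Math.min(messageTtl.getAsLong(), queueTtl.getAsLong()));
        }
        return messageTtl.isPresent() ? messageTtl : queueTtl;
    }

    /** A message with no TTL never expires; otherwise it expires once the TTL has elapsed. */
    static boolean isExpired(long enqueuedAtMillis, long nowMillis, OptionalLong ttl) {
        return ttl.isPresent() && nowMillis - enqueuedAtMillis >= ttl.getAsLong();
    }

    public static void main(String[] args) {
        OptionalLong messageTtl = parseExpiration("10000"); // as in the producer's .expiration("10000")
        OptionalLong queueTtl = OptionalLong.of(6000);      // assumed queue-level TTL for the demo
        OptionalLong ttl = effectiveTtl(messageTtl, queueTtl);
        System.out.println("effective TTL: " + ttl.getAsLong() + " ms");
        System.out.println("expired after 7 s: " + isExpired(0, 7000, ttl));
    }
}
```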

Consumer code

package com.javaxh.rabbitmqapi.ttl;

import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;
import com.rabbitmq.client.QueueingConsumer;

import java.util.Map;

// Note: QueueingConsumer is deprecated and no longer ships with amqp-client 5.x,
// so this class needs an older client version.
public class Consumer {

    public static void main(String[] args) throws Exception {
        // 1. Create and configure a ConnectionFactory
        ConnectionFactory connectionFactory = new ConnectionFactory();
        connectionFactory.setHost("192.168.239.131");
        connectionFactory.setPort(5672);
        connectionFactory.setVirtualHost("/");

        // 2. Create a connection from the factory
        Connection connection = connectionFactory.newConnection();

        // 3. Create a Channel from the connection
        Channel channel = connection.createChannel();

        // 4. Declare (create) a queue
        String queueName = "test001";
        channel.queueDeclare(queueName, true, false, false, null);

        // 5. Create the consumer
        QueueingConsumer queueingConsumer = new QueueingConsumer(channel);

        // 6. Start consuming on the channel (autoAck = true)
        channel.basicConsume(queueName, true, queueingConsumer);

        while (true) {
            // 7. Fetch the next message (blocks until one arrives)
            QueueingConsumer.Delivery delivery = queueingConsumer.nextDelivery();
            String msg = new String(delivery.getBody());
            System.err.println("Consumer: " + msg);
            Map<String, Object> headers = delivery.getProperties().getHeaders();
            System.err.println("headers get my1 value: " + headers.get("my1"));
            // Envelope envelope = delivery.getEnvelope();
        }
    }
}

Producer code:

package com.javaxh.rabbitmqapi.ttl;

import com.rabbitmq.client.AMQP;
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

import java.util.HashMap;
import java.util.Map;

public class Producer {

    public static void main(String[] args) throws Exception {
        // 1. Create and configure a ConnectionFactory
        ConnectionFactory connectionFactory = new ConnectionFactory();
        connectionFactory.setHost("192.168.239.131");
        connectionFactory.setPort(5672);
        connectionFactory.setVirtualHost("/");

        // 2. Create a connection from the factory
        Connection connection = connectionFactory.newConnection();

        // 3. Create a Channel from the connection
        Channel channel = connection.createChannel();

        Map<String, Object> headers = new HashMap<>();
        headers.put("my1", "111");
        headers.put("my2", "222");

        AMQP.BasicProperties properties = new AMQP.BasicProperties.Builder()
                .deliveryMode(2) // persistent
                .contentEncoding("UTF-8")
                .expiration("10000") // per-message TTL: 10 seconds, in milliseconds
                .headers(headers)
                .build();

        // 4. Send data through the Channel
        for (int i = 0; i < 5; i++) {
            String msg = "Hello RabbitMQ!";
            // Arguments: 1. exchange (default exchange), 2. routingKey (the queue name)
            channel.basicPublish("", "test001", properties, msg.getBytes());
        }

        // 5. Remember to close the channel and the connection
        channel.close();
        connection.close();
    }
}

Again, run the consumer first, then the producer:

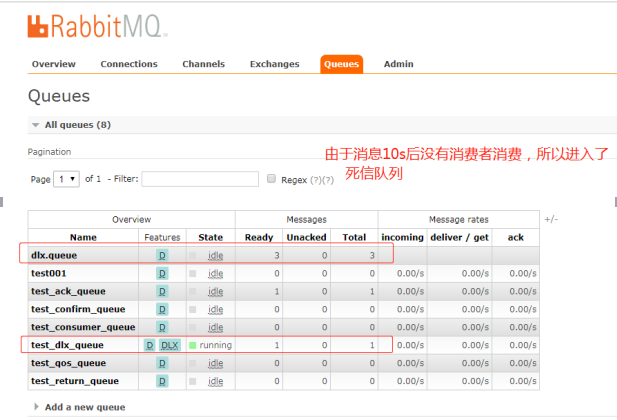

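Dead-letter queue

Dead-letter queue: DLX, Dead-Letter-Exchange

With a DLX, when a message becomes a dead letter (dead message) in a queue, it can be republished to another exchange, and that exchange is the DLX.

A message becomes a dead letter in the following cases

- The message is rejected (basic.reject/basic.nack) with requeue=false

- The message's TTL expires

- The queue reaches its maximum length

Characteristics of the dead-letter queue

A DLX is an ordinary exchange, no different from any other. It can be specified on any queue; in practice it is just a property set on that queue.

When a queue holds a dead letter, RabbitMQ automatically republishes the message to the configured exchange, from which it is routed to another queue.

You can consume from that queue and handle the messages as needed. This feature can make up for the immediate parameter, which RabbitMQ supported before 3.0.

Setting up a dead-letter queue

- First set up the exchange and queue for dead letters and bind them:

Exchange: dlx.exchange

Queue: dlx.queue

RoutingKey: #

- Then declare the normal exchange, queue, and binding as usual, adding one argument to the queue:

arguments.put("x-dead-letter-exchange", "dlx.exchange");

This way, when a message expires, is rejected with requeue=false, or the queue reaches its maximum length, the message is routed straight to the dead-letter queue.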
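The dead-lettering rules above can be written down as a small decision function. This is a plain-Java sketch, not broker code: `DeadLetterSketch`, `Event`, and `Fate` are hypothetical names, and the `deadLetterExchange` parameter stands in for the queue's `x-dead-letter-exchange` argument (null meaning the queue was declared without one).

```java
/** Toy decision model for dead-lettering. Not the RabbitMQ client API. */
class DeadLetterSketch {

    /** What happened to a message in the queue. */
    enum Event { ACKED, REJECTED_WITH_REQUEUE, REJECTED_WITHOUT_REQUEUE, TTL_EXPIRED, QUEUE_FULL }

    /** Where the message ends up. */
    enum Fate { REMOVED, REQUEUED, DROPPED, DEAD_LETTERED }

    /** The three cases listed above turn a message into a dead letter. */
    static boolean isDeadLetter(Event event) {
        return event == Event.REJECTED_WITHOUT_REQUEUE
                || event == Event.TTL_EXPIRED
                || event == Event.QUEUE_FULL;
    }

    /** Dead letters are republished only if the queue names a dead-letter exchange. */
    static Fate fateOf(Event event, String deadLetterExchange) {
        if (event == Event.ACKED) {
            return Fate.REMOVED;
        }
        if (event == Event.REJECTED_WITH_REQUEUE) {
            return Fate.REQUEUED;
        }
        // Everything left is a dead letter.
        return deadLetterExchange != null ? Fate.DEAD_LETTERED : Fate.DROPPED;
    }

    public static void main(String[] args) {
        for (Event event : Event.values()) {
            System.out.println(event + " -> " + fateOf(event, "dlx.exchange"));
        }
    }
}
```

The sketch makes one point explicit: without the `x-dead-letter-exchange` argument on the queue, the same three events simply discard the message.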

Custom consumer

package com.javaxh.rabbitmqapi.dlx;

import com.rabbitmq.client.AMQP;
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.DefaultConsumer;
import com.rabbitmq.client.Envelope;

import java.io.IOException;

public class MyConsumer extends DefaultConsumer {

    public MyConsumer(Channel channel) {
        super(channel);
    }

    @Override
    public void handleDelivery(String consumerTag, Envelope envelope,
                               AMQP.BasicProperties properties, byte[] body) throws IOException {
        System.err.println("-----------consume message----------");
        System.err.println("consumerTag: " + consumerTag);
        System.err.println("envelope: " + envelope);
        System.err.println("properties: " + properties);
        System.err.println("body: " + new String(body));
    }
}

Consumer:

package com.javaxh.rabbitmqapi.dlx;

import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

import java.util.HashMap;
import java.util.Map;

public class Consumer {

    public static void main(String[] args) throws Exception {
        ConnectionFactory connectionFactory = new ConnectionFactory();
        connectionFactory.setHost("192.168.239.131");
        connectionFactory.setPort(5672);
        connectionFactory.setVirtualHost("/");

        Connection connection = connectionFactory.newConnection();
        Channel channel = connection.createChannel();

        // An ordinary exchange, queue, and routing key
        String exchangeName = "test_dlx_exchange";
        String routingKey = "dlx.#";
        String queueName = "test_dlx_queue";

        channel.exchangeDeclare(exchangeName, "topic", true, false, null);

        Map<String, Object> arguments = new HashMap<String, Object>();
        arguments.put("x-dead-letter-exchange", "dlx.exchange");
        // The arguments map must be passed when declaring the queue
        channel.queueDeclare(queueName, true, false, false, arguments);
        channel.queueBind(queueName, exchangeName, routingKey);

        // Declare the dead-letter exchange and queue, and bind them
        channel.exchangeDeclare("dlx.exchange", "topic", true, false, null);
        channel.queueDeclare("dlx.queue", true, false, false, null);
        channel.queueBind("dlx.queue", "dlx.exchange", "#");

        // While this consumer runs, messages are delivered right away. To watch a
        // message expire and move to dlx.queue, stop the consumer once the
        // declarations above have been made.
        channel.basicConsume(queueName, true, new MyConsumer(channel));
    }
}

Producer code:

package com.javaxh.rabbitmqapi.dlx;

import com.rabbitmq.client.AMQP;
import com.rabbitmq.client.Channel;
import com.rabbitmq.client.Connection;
import com.rabbitmq.client.ConnectionFactory;

public class Producer {

    public static void main(String[] args) throws Exception {
        ConnectionFactory connectionFactory = new ConnectionFactory();
        connectionFactory.setHost("192.168.239.131");
        connectionFactory.setPort(5672);
        connectionFactory.setVirtualHost("/");

        Connection connection = connectionFactory.newConnection();
        Channel channel = connection.createChannel();

        String exchange = "test_dlx_exchange";
        String routingKey = "dlx.save";
        String msg = "Hello RabbitMQ DLX Message";

        for (int i = 0; i < 1; i++) {
            AMQP.BasicProperties properties = new AMQP.BasicProperties.Builder()
                    .deliveryMode(2) // persistent
                    .contentEncoding("UTF-8")
                    .expiration("10000") // per-message TTL: 10 seconds, in milliseconds
                    .build();
            channel.basicPublish(exchange, routingKey, true, properties, msg.getBytes());
        }
    }
}

Thanks for reading!
