Why’s Kafka So Fast

Kafka is well known for being very fast, much faster than most of its competitors. So what makes it so fast?

Avoid Random Disk Access

Kafka writes everything to disk in order, and consumers fetch data in order too, so disk access is always sequential rather than random. On traditional hard disks (HDD), sequential access is much faster than random access. Here is a comparison:

| Hardware | Sequential writes | Random writes |
|---|---|---|
| 6 × 7200 rpm SATA RAID-5 | 300 MB/s | 50 KB/s |
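To make the access pattern concrete, here is a minimal sketch of an append-only log in Python. It is an illustration only: the `AppendOnlyLog` class and its length-prefixed record layout are hypothetical and much simpler than Kafka's real segment format. The point is that the writer only ever adds bytes at the end of the file, and a reader only ever moves forward from a remembered position.

```python
import os
import struct
import tempfile


class AppendOnlyLog:
    """Toy append-only log (hypothetical, not Kafka's real segment format)."""

    _HEADER = struct.Struct(">I")  # 4-byte big-endian length prefix per record

    def __init__(self, path: str) -> None:
        self._path = path
        # Append mode: every write lands at the current end of the file.
        self._file = open(path, "ab")
        self._end = os.path.getsize(path)

    def append(self, payload: bytes) -> int:
        """Write one record at the end and return the byte position where it starts."""
        position = self._end
        self._file.write(self._HEADER.pack(len(payload)) + payload)
        self._file.flush()  # hand the bytes to the OS, which decides when to hit the disk
        self._end += self._HEADER.size + len(payload)
        return position

    def read_from(self, position: int = 0):
        """Yield every record from `position` to the end, strictly front to back."""
        with open(self._path, "rb") as reader:
            reader.seek(position)
            while True:
                header = reader.read(self._HEADER.size)
                if len(header) < self._HEADER.size:
                    break  # reached the end of the log
                (length,) = self._HEADER.unpack(header)
                yield reader.read(length)

    def close(self) -> None:
        self._file.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as directory:
        log = AppendOnlyLog(os.path.join(directory, "demo.log"))
        positions = [log.append(m) for m in (b"first", b"second", b"third")]
        # A consumer that remembers a position can resume from it and keep reading forward.
        for record in log.read_from(positions[1]):
            print(record)
        log.close()
```

Nothing in this sketch ever overwrites or seeks backwards while writing, which is the property that keeps the disk in its fast sequential mode.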

Kafka Writes Everything Onto The Disk Instead of Memory

Yes, you read that right. Kafka writes everything to disk instead of keeping it in memory. But wait a moment, isn’t memory supposed to be faster than disk? Typically yes, when the disk is accessed randomly. For sequential access, the difference is much smaller. Here is a comparison taken from https://queue.acm.org/detail.cfm?id=1563874.

As you can see, the difference is not that large. Still, sequential memory access is faster than sequential disk access, so why not choose memory? Because Kafka runs on the JVM, which brings two disadvantages:

1. The memory overhead of objects is very high, often doubling the size of the data stored (or worse).

2. Garbage collection becomes slower and more expensive as the amount of in-heap data grows, because the collector has more data to go through each time it looks for unused objects (garbage).

So writing to the file system may be better than holding the data in JVM memory. Even better, we can use memory-mapped files (MMAP) to make it faster.

Memory Mapped Files(MMAP)

Basically, a memory-mapped file (MMAP) maps the contents of a file on disk into memory. When we write something into the mapped memory, the OS flushes the change to disk some time later. So everything is faster because we are actually using memory, just in an indirect way.

This raises a question. Why would we use MMAP to write data to disk, only for it to be mapped back into memory? It seems like a roundabout route. Why not just write the data into memory directly? As we learned previously, Kafka runs on the JVM. If we kept the data in JVM memory directly, the memory overhead would be high and GC would become expensive. Mapped memory is managed by the OS and lives outside the JVM heap, so MMAP avoids both issues.

In Kafka itself, memory mapping is used for the index files that accompany each log segment, while the log data is written through ordinary file channels and benefits from the OS page cache in the same way.
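The mechanism can be sketched in a few lines of Python with the standard `mmap` module. This is only an illustration of memory mapping in general, not Kafka's code (Kafka is written in Java and Scala); the helper name `write_through_mmap` and the 4096-byte file size are assumptions made for the example.

```python
import mmap
import os
import tempfile


def write_through_mmap(path: str, payload: bytes, size: int = 4096) -> None:
    """Write `payload` at the start of a file by storing into mapped memory."""
    if len(payload) > size:
        raise ValueError("payload does not fit into the mapped region")
    # A file needs its final length before it can be mapped.
    with open(path, "wb") as f:
        f.truncate(size)
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), size) as mapped:
            # This is a plain memory write; no write() system call is issued here.
            mapped[0:len(payload)] = payload
            # Optional: ask the OS to push dirty pages to disk now instead of "sometime later".
            mapped.flush()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "mapped.bin")
        write_through_mmap(path, b"hello kafka")
        # Reading the file the ordinary way shows the bytes stored through the mapping.
        with open(path, "rb") as f:
            print(f.read(11))
```

The bytes written through the mapping are visible to any later ordinary read of the file, even though the program never called `write()` on it.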

Zero Copy

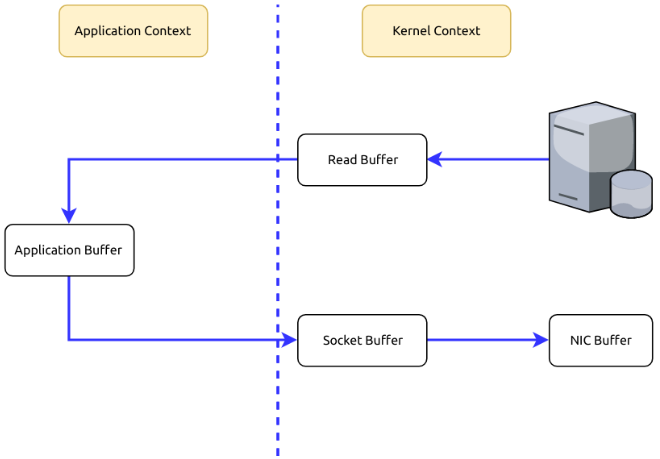

Suppose that we are reading data from a file and sending it over the network. The usual process has two steps.

1. To read the data, we copy it from the kernel context (the OS page cache) into the application context.

2. To send the data over the network, we copy it from the application context back into the kernel context (the socket buffer).

As you can see, it’s redundant to copy the data back and forth between the kernel context and the application context. Can we avoid it? Yes. With zero copy, the data moves directly from one kernel buffer to another without ever passing through the application context.
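As a rough illustration of the idea, the sketch below sends a file over a socket with Python's `socket.sendfile()`, which uses the operating system's `sendfile` call where it is available and falls back to ordinary sends otherwise. Kafka itself reaches the same system call through Java's `FileChannel.transferTo`. The helper names `send_file` and `receive_all`, the file name, and the local socket pair are assumptions made for the demo.

```python
import os
import socket
import tempfile


def send_file(sock: socket.socket, path: str) -> int:
    """Send a whole file over `sock` and return the number of bytes sent."""
    with open(path, "rb") as f:
        # No read() into a Python buffer here: where os.sendfile exists, the
        # kernel moves the bytes from the page cache to the socket itself.
        return sock.sendfile(f)


def receive_all(sock: socket.socket) -> bytes:
    """Read from `sock` until the peer closes its end."""
    chunks = []
    while True:
        chunk = sock.recv(65536)
        if not chunk:
            break
        chunks.append(chunk)
    return b"".join(chunks)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "segment.bin")
        with open(path, "wb") as f:
            f.write(b"x" * 4096)
        # A connected local socket pair stands in for a broker-to-consumer connection.
        sender, receiver = socket.socketpair()
        sent = send_file(sender, path)
        sender.close()
        received = receive_all(receiver)
        receiver.close()
        print(sent, len(received))
```

The demo keeps the payload small so that it fits into the socket buffer and a single thread can send first and receive afterwards.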

Batch Data

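Kafka producers do not send messages one by one. Messages are accumulated into batches, and a batch is sent once it reaches batch.size (or once linger.ms has elapsed). Assuming the bandwidth is 10MB/s, sending 10MB of data in one go is much faster than sending 100,000 messages one by one (assuming each message is 100 bytes), because every individual send pays its own request overhead.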
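The size-based half of that behaviour can be sketched as follows. This is a simplified model, not the Kafka producer: the `Batcher` class and its `send` callback are hypothetical, and the time-based trigger (linger.ms) is deliberately left out.

```python
from typing import Callable, List


class Batcher:
    """Toy size-based batcher (hypothetical; the real producer also flushes on a timer)."""

    def __init__(self, batch_size: int, send: Callable[[bytes], None]) -> None:
        self._batch_size = batch_size
        self._send = send
        self._buffer: List[bytes] = []
        self._buffered_bytes = 0

    def append(self, message: bytes) -> None:
        """Buffer one message and send the batch once it has reached batch_size bytes."""
        self._buffer.append(message)
        self._buffered_bytes += len(message)
        if self._buffered_bytes >= self._batch_size:
            self.flush()

    def flush(self) -> None:
        """Send whatever is buffered as a single request."""
        if not self._buffer:
            return
        self._send(b"".join(self._buffer))
        self._buffer.clear()
        self._buffered_bytes = 0


if __name__ == "__main__":
    requests: List[bytes] = []
    batcher = Batcher(batch_size=1000, send=requests.append)
    for _ in range(1000):
        batcher.append(b"m" * 100)  # 100-byte messages, as in the example above
    batcher.flush()  # push out any partial batch that is left over
    print(len(requests), "requests instead of 1000")
```

Each call to `send` stands for one network request, so the number of requests drops by roughly the number of messages that fit into one batch.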

