Bootstrap aggregating (Bagging), Ensemble, Neural Network

zh.wikipedia.org/wiki/Bagging算法

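The Bagging algorithm (bootstrap aggregating) is an ensemble learning algorithm in machine learning, first proposed by Leo Breiman in 1994. Bagging can be combined with other classification and regression algorithms to improve their accuracy and stability; by reducing the variance of the result, it also helps avoid overfitting.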

Given a … of size …

http://machine-learning.martinsewell.com/ensembles/bagging/

【bootstrap samples: sampling with replacement (random samples with replacement)】

Bagging (Breiman, 1996), a name derived from “bootstrap aggregation”, was the first effective method of ensemble learning and is one of the simplest methods of arching [1]. The meta-algorithm, which is a special case of model averaging, was originally designed for classification and is usually applied to decision tree models, but it can be used with any type of model for classification or regression. The method creates multiple versions of a training set by using the bootstrap, i.e. sampling with replacement. Each of these data sets is used to train a different model. The outputs of the models are combined by averaging (in the case of regression) or voting (in the case of classification) to create a single output. Bagging is only effective with unstable nonlinear models, i.e. models for which a small change in the training set can cause a significant change in the fitted model.
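The procedure described above (bootstrap the training set, train one model per sample, combine by voting) can be sketched in a few lines of plain Python. This is only an illustrative sketch, not Breiman's implementation: the one-split decision stump used as the base model, the toy 1-D data set, and the names `fit_stump`, `bagging_fit` and `bagging_predict` are all assumptions made for this example.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a one-split decision stump on 1-D data.

    Returns (threshold, left_label, right_label) with the fewest training errors.
    """
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    return best[1:]

def predict_stump(stump, x):
    t, left_label, right_label = stump
    return left_label if x <= t else right_label

def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train n_models stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: n draws with replacement from the training set.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagging_predict(models, x):
    """Classification: combine the individual predictions by majority vote."""
    votes = Counter(predict_stump(m, x) for m in models)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: class 0 on the left, class 1 on the right.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 6.0, 6.5, 7.0, 7.5, 8.0]
ys = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
models = bagging_fit(xs, ys)
print(bagging_predict(models, 1.0), bagging_predict(models, 7.0))
```

For regression, the vote in `bagging_predict` would be replaced by the mean of the individual predictions.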

https://www.packtpub.com/mapt/book/big_data_and_business_intelligence/9781787128576/7/ch07lvl1sec46/bagging--building-an-ensemble-of-classifiers-from-bootstrap-samples

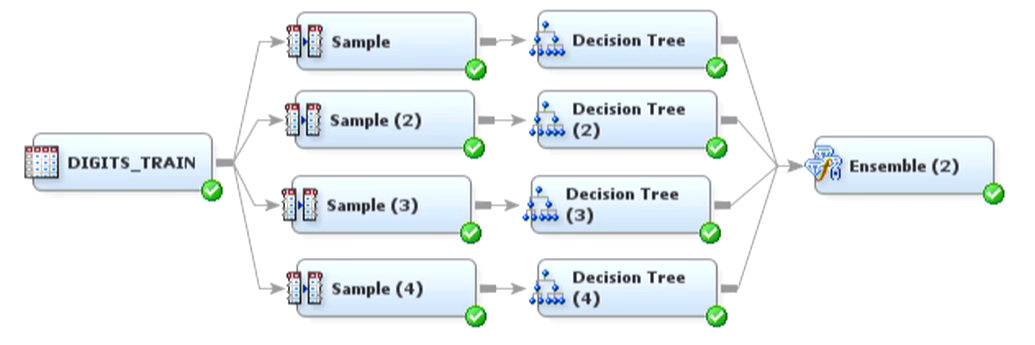

Bagging is an ensemble learning technique that is closely related to the MajorityVoteClassifier that we implemented in the previous section, as illustrated in the following diagram:

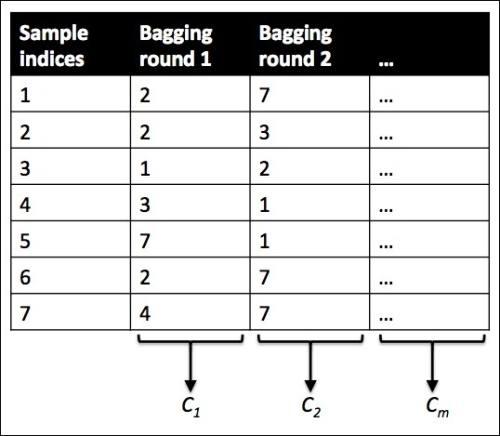

However, instead of using the same training set to fit the individual classifiers in the ensemble, we draw bootstrap samples (random samples with replacement) from the initial training set, which is why bagging is also known as bootstrap aggregating. To provide a more concrete example of how bootstrapping works, let's consider the example shown in the following figure. Here, we have seven different training instances (denoted as indices 1-7) that are sampled randomly with replacement in each round of bagging. Each bootstrap sample is then used to fit a classifier, which is most typically an unpruned decision tree:
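The seven-instance example can also be reproduced with a short script. This is a minimal sketch using only Python's standard `random` module; the helper name `bootstrap_indices`, the seed, and the number of rounds are arbitrary choices for illustration.

```python
import random

def bootstrap_indices(n, rng):
    """Draw n indices from 1..n uniformly at random, with replacement."""
    return [rng.randint(1, n) for _ in range(n)]

rng = random.Random(42)            # arbitrary seed, only for reproducibility
training_ids = list(range(1, 8))   # the seven training instances, indices 1-7

for round_no in range(1, 4):
    sample = bootstrap_indices(len(training_ids), rng)
    # Instances never drawn in this round are "out of bag" for this classifier.
    left_out = sorted(set(training_ids) - set(sample))
    print(f"round {round_no}: sample={sorted(sample)} left out={left_out}")
```

Every sample has the same size as the original set, but some indices repeat and others are missing. With seven instances, each one appears in a given sample with probability 1 - (6/7)^7, roughly two thirds.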


【LOWESS (locally weighted scatterplot smoothing)】

LOESS and LOWESS thus build on "classical" methods, such as linear and nonlinear least squares regression. They address situations in which the classical procedures do not perform well or cannot be effectively applied without undue labor. LOESS combines much of the simplicity of linear least squares regression with the flexibility of nonlinear regression. It does this by fitting simple models to localized subsets of the data to build up a function that describes the deterministic part of the variation in the data, point by point. In fact, one of the chief attractions of this method is that the data analyst is not required to specify a global function of any form to fit a model to the data, only to fit segments of the data.
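To make "fitting simple models to localized subsets of the data, point by point" concrete, here is a minimal sketch of a local linear smoother in plain Python. It makes several assumptions that are not in the text above: tricube weights, a window defined by the nearest fraction `frac` of the points, and no robustness iterations. The function name `lowess_point` is hypothetical, and this is not the reference LOWESS implementation.

```python
def lowess_point(xs, ys, x0, frac=0.5):
    """Estimate y at x0 from a weighted straight-line fit to nearby points."""
    n = len(xs)
    k = min(n, max(3, round(frac * n)))     # number of neighbours in the local window
    dists = sorted(abs(x - x0) for x in xs)
    # Window half-width: distance to the k-th nearest point, nudged up slightly
    # so that the k-th point keeps a tiny positive weight (avoids all-zero weights).
    h = dists[k - 1] * (1 + 1e-9) + 1e-12
    # Tricube weights: nearby points dominate, points outside the window get 0.
    ws = [max(0.0, 1 - (abs(x - x0) / h) ** 3) ** 3 for x in xs]
    sw = sum(ws)
    mx = sum(w * x for w, x in zip(ws, xs)) / sw
    my = sum(w * y for w, y in zip(ws, ys)) / sw
    sxx = sum(w * (x - mx) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - mx) * (y - my) for w, x, y in zip(ws, xs, ys))
    slope = sxy / sxx if sxx > 0 else 0.0
    # Weighted least-squares line evaluated at x0.
    return my + slope * (x0 - mx)

# Hypothetical data: a straight line with alternating +/-0.5 "noise".
xs = [float(i) for i in range(10)]
ys = [2 * x + 1 + (0.5 if i % 2 else -0.5) for i, x in enumerate(xs)]
smoothed = [lowess_point(xs, ys, x) for x in xs]
print([round(v, 2) for v in smoothed])
```

No global function is ever specified: each output value comes from its own small weighted regression, and the smoothed curve is just the collection of those local fits.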

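【Fit each local region point by point using local data; no global function is used to fit the model; local problems get local solutions】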

http://www.richardafolabi.com/blog/non-technical-introduction-to-random-forest-and-gradient-boosting-in-machine-learning.html

【The collective wisdom of many is likely more accurate than that of any one. Wisdom of the crowd – Aristotle, 300 BC】

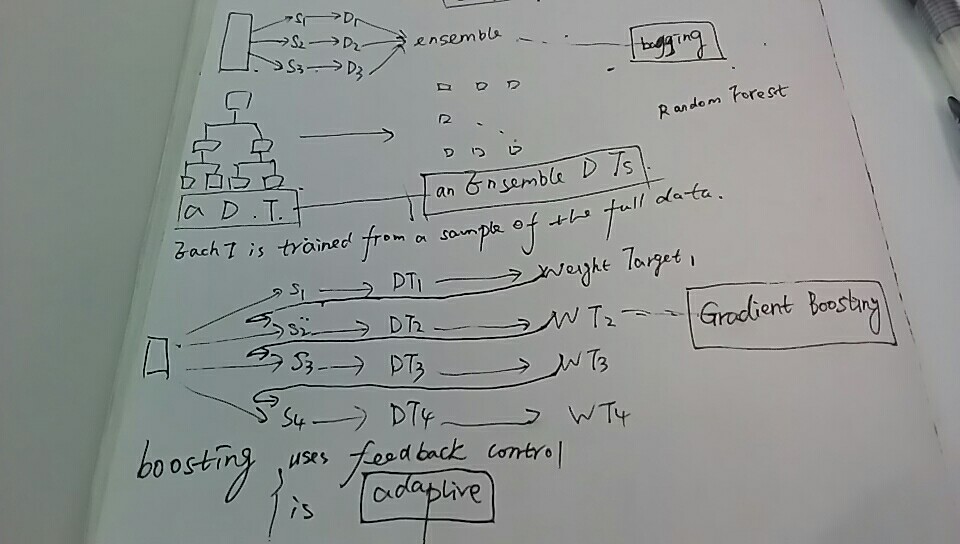

bagging

gradient boosting

- Ensemble models are great for producing robust, highly optimized models.

- Random Forest and Gradient Boosting are ensemble-based algorithms.

- Random Forest uses the bagging technique, while Gradient Boosting uses the boosting technique.

- Bagging builds its models on multiple random samples of the data, while boosting builds them by iterative refinement.

- Ensemble models are not easy to interpret, and they often work like a little black box.

- The number of algorithms combined should be kept to a minimum so that the prediction system remains reasonably tractable.
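The "iterative refinement" used by boosting, in contrast to bagging's independent random samples, can be sketched as follows. This is a simplified illustration of gradient boosting for squared error with one-split regression stumps; the learning rate, the number of rounds, the toy data, and the function names are assumptions for the example, not the algorithm as implemented in any particular library.

```python
def fit_regression_stump(xs, targets):
    """Best single split on 1-D data: returns (threshold, left_mean, right_mean)."""
    best = None
    for t in sorted(set(xs))[:-1]:          # a split must leave points on both sides
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    return best[1:]

def boost(xs, ys, n_rounds=50, learning_rate=0.3):
    """Each round fits a stump to the residuals left by the previous rounds."""
    base = sum(ys) / len(ys)                # start from the mean prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]   # what is still unexplained
        t, lm, rm = fit_regression_stump(xs, residuals)
        stumps.append((t, lm, rm))
        preds = [p + learning_rate * (lm if x <= t else rm) for x, p in zip(xs, preds)]
    return base, learning_rate, stumps

def boost_predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(lm if x <= t else rm for t, lm, rm in stumps)

# Hypothetical toy regression problem: y = x^2 on ten points.
xs = [float(i) for i in range(10)]
ys = [x * x for x in xs]
model = boost(xs, ys)
mse = sum((y - boost_predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(f"training MSE after boosting: {mse:.3f}")
```

The key difference from bagging is the dependency between rounds: here each stump is trained on the errors of the ensemble so far, whereas bagged models are trained independently on separate bootstrap samples and only combined at prediction time.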

