吴裕雄 python 机器学习-DMT(1)

import numpy as np

import operator as op from math import log def createDataSet():

dataSet = [[1, 1, 'yes'],

[1, 1, 'yes'],

[1, 0, 'no'],

[0, 1, 'no'],

[0, 1, 'no']]

labels = ['no surfacing','flippers']

return dataSet, labels dataSet,labels = createDataSet()

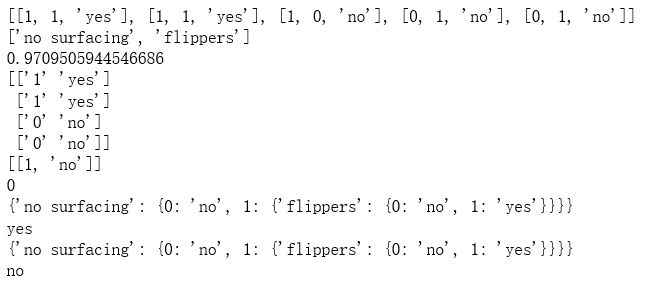

print(dataSet)

print(labels) def calcShannonEnt(dataSet):

labelCounts = {}

for featVec in dataSet:

currentLabel = featVec[-1]

if(currentLabel not in labelCounts.keys()):

labelCounts[currentLabel] = 0

labelCounts[currentLabel] += 1

shannonEnt = 0.0

rowNum = len(dataSet)

for key in labelCounts:

prob = float(labelCounts[key])/rowNum

shannonEnt -= prob * log(prob,2)

return shannonEnt shannonEnt = calcShannonEnt(dataSet)

print(shannonEnt) def splitDataSet(dataSet, axis, value):

retDataSet = []

for featVec in dataSet:

if(featVec[axis] == value):

reducedFeatVec = featVec[:axis]

reducedFeatVec.extend(featVec[axis+1:])

retDataSet.append(reducedFeatVec)

return retDataSet retDataSet = splitDataSet(dataSet,1,1)

print(np.array(retDataSet))

retDataSet = splitDataSet(dataSet,1,0)

print(retDataSet) def chooseBestFeatureToSplit(dataSet):

numFeatures = np.shape(dataSet)[1]-1

baseEntropy = calcShannonEnt(dataSet)

bestInfoGain = 0.0

bestFeature = -1

for i in range(numFeatures):

featList = [example[i] for example in dataSet]

uniqueVals = set(featList)

newEntropy = 0.0

for value in uniqueVals:

subDataSet = splitDataSet(dataSet, i, value)

prob = len(subDataSet)/float(len(dataSet))

newEntropy += prob * calcShannonEnt(subDataSet)

infoGain = baseEntropy - newEntropy

if (infoGain > bestInfoGain):

bestInfoGain = infoGain

bestFeature = i

return bestFeature bestFeature = chooseBestFeatureToSplit(dataSet)

print(bestFeature) def majorityCnt(classList):

classCount={}

for vote in classList:

if(vote not in classCount.keys()):

classCount[vote] = 0

classCount[vote] += 1

sortedClassCount = sorted(classCount.items(), key=op.itemgetter(1), reverse=True)

return sortedClassCount[0][0] def createTree(dataSet,labels):

classList = [example[-1] for example in dataSet]

if(classList.count(classList[0]) == len(classList)):

return classList[0]

if len(dataSet[0]) == 1:

return majorityCnt(classList)

bestFeat = chooseBestFeatureToSplit(dataSet)

bestFeatLabel = labels[bestFeat]

myTree = {bestFeatLabel:{}}

del(labels[bestFeat])

featValues = [example[bestFeat] for example in dataSet]

uniqueVals = set(featValues)

for value in uniqueVals:

subLabels = labels[:]

myTree[bestFeatLabel][value] = createTree(splitDataSet(dataSet, bestFeat, value),subLabels)

return myTree myTree = createTree(dataSet,labels)

print(myTree) def classify(inputTree,featLabels,testVec):

for i in inputTree.keys():

firstStr = i

break

secondDict = inputTree[firstStr]

featIndex = featLabels.index(firstStr)

key = testVec[featIndex]

valueOfFeat = secondDict[key]

if isinstance(valueOfFeat, dict):

classLabel = classify(valueOfFeat, featLabels, testVec)

else:

classLabel = valueOfFeat

return classLabel featLabels = ['no surfacing', 'flippers']

classLabel = classify(myTree,featLabels,[1,1])

print(classLabel) import pickle def storeTree(inputTree,filename):

fw = open(filename,'wb')

pickle.dump(inputTree,fw)

fw.close() def grabTree(filename):

fr = open(filename,'rb')

return pickle.load(fr) filename = "D:\\mytree.txt"

storeTree(myTree,filename)

mySecTree = grabTree(filename)

print(mySecTree) featLabels = ['no surfacing', 'flippers']

classLabel = classify(mySecTree,featLabels,[0,0])

print(classLabel)

吴裕雄 python 机器学习-DMT(1)的更多相关文章

- 吴裕雄 python 机器学习-DMT(2)

import matplotlib.pyplot as plt decisionNode = dict(boxstyle="sawtooth", fc="0.8" ...

- 吴裕雄 python 机器学习——分类决策树模型

import numpy as np import matplotlib.pyplot as plt from sklearn import datasets from sklearn.model_s ...

- 吴裕雄 python 机器学习——回归决策树模型

import numpy as np import matplotlib.pyplot as plt from sklearn import datasets from sklearn.model_s ...

- 吴裕雄 python 机器学习——线性判断分析LinearDiscriminantAnalysis

import numpy as np import matplotlib.pyplot as plt from matplotlib import cm from mpl_toolkits.mplot ...

- 吴裕雄 python 机器学习——逻辑回归

import numpy as np import matplotlib.pyplot as plt from matplotlib import cm from mpl_toolkits.mplot ...

- 吴裕雄 python 机器学习——ElasticNet回归

import numpy as np import matplotlib.pyplot as plt from matplotlib import cm from mpl_toolkits.mplot ...

- 吴裕雄 python 机器学习——Lasso回归

import numpy as np import matplotlib.pyplot as plt from sklearn import datasets, linear_model from s ...

- 吴裕雄 python 机器学习——岭回归

import numpy as np import matplotlib.pyplot as plt from sklearn import datasets, linear_model from s ...

- 吴裕雄 python 机器学习——线性回归模型

import numpy as np from sklearn import datasets,linear_model from sklearn.model_selection import tra ...

随机推荐

- DIV左、右布局

1.右边宽度固定,左边自适应 第一种(flex布局,不兼容IE9以下浏览器): <style> body { display: flex; } .left { background-col ...

- Bootstrap如何关闭弹窗

1.layer.closeAll()无法关闭弹窗的解决办法 使可以使用:parent.layer.closeAll() 2.layer.close()或者layer.closeAll()失效的情况下强 ...

- Python笔记:字典的fromkeys方法创建的初始value同内存地址问题

dict中的fromkeys()函数可以通过一个list来创建一个用同一初始value的dict. d = dict.fromkeys(["苹果", "菠萝"] ...

- js 数组的crud操作

增加push(); 向数组尾添加元素unshift(); 向数组头添加元素向数组指定下标添加元素:可以用Array提供的splice(); var arr = ['a','b','c']; arr.s ...

- 【IP代理】国内省市域名代理

最近遇到一个测试问题,就是投放时需要按地域投放,所以需要对指定的IP地址范围内的地方投放才有效. 所以,就调查了下IP代理的方式,一个是SSR,这个好像只能代理国外的域名方式,另外一个就是百度搜索IP ...

- 通俗理解cookies,sessionStorage,localStorage的区别

sessionStorage .localStorage 和 cookie 之间的区别共同点:都是保存在浏览器端,且同源的. 区别:cookie数据始终在同源的http请求中携带(即使不需要),即co ...

- 关系型数据库和NoSQL数据库

一.数据库排名和流行趋势 1.1 Complete ranking 链接: https://db-engines.com/en/ranking 在这个网站列出了所有数据库的排名,还可以看到所属数据库类 ...

- Java命令运行.class文件

class使用全名(点号间隔):java headfirst.designpatterns.proxy.gumball.GumballMonitorTestDrive

- java 获得系统当前时间

import org.junit.Test; import java.text.SimpleDateFormat; import java.util.Calendar; import java.uti ...

- 星号三角形 I python

'''星号三角形 I描述读入一个整数N,N是奇数,输出由星号字符组成的等边三角形,要求:第1行1个星号,第2行3个星号,第3行5个星号,依次类推,最后一行共N的星号.输入示例1:3输出示例2: * * ...