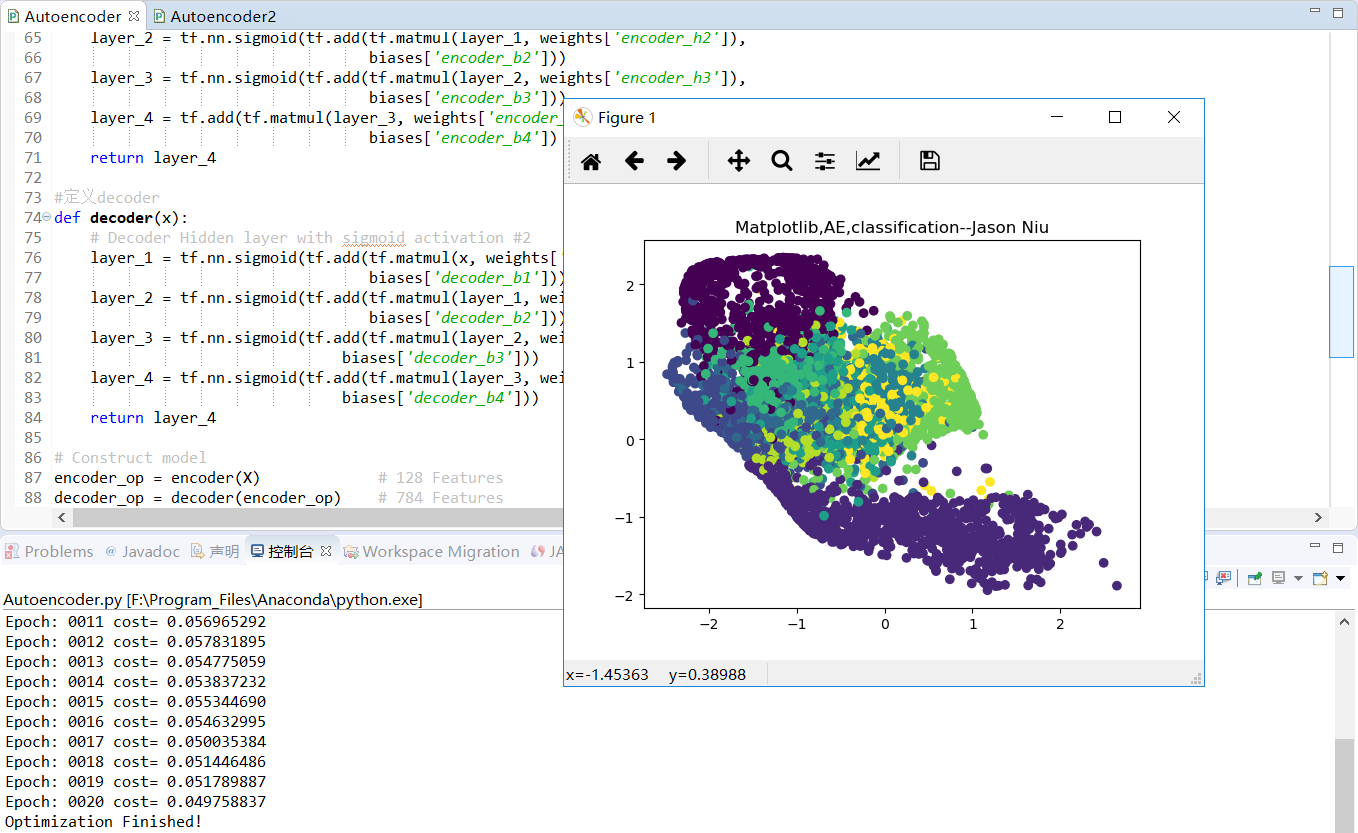

TF之AE:AE实现TF自带数据集AE的encoder之后decoder之前的非监督学习分类—Jason niu

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt #Import MNIST data

from tensorflow.examples.tutorials.mnist import input_data

mnist=input_data.read_data_sets("/niu/mnist_data/",one_hot=False) # Parameter

learning_rate = 0.001

training_epochs = 20

batch_size = 256

display_step = 1

examples_to_show = 10 # Network Parameters

n_input = 784 # MNIST data input (img shape: 28*28像素即784个特征值) #tf Graph input(only pictures)

X=tf.placeholder("float", [None,n_input]) # hidden layer settings

n_hidden_1 = 128

n_hidden_2 = 64

n_hidden_3 = 10

n_hidden_4 = 2 weights = {

'encoder_h1': tf.Variable(tf.random_normal([n_input,n_hidden_1])),

'encoder_h2': tf.Variable(tf.random_normal([n_hidden_1,n_hidden_2])),

'encoder_h3': tf.Variable(tf.random_normal([n_hidden_2,n_hidden_3])),

'encoder_h4': tf.Variable(tf.random_normal([n_hidden_3,n_hidden_4])), 'decoder_h1': tf.Variable(tf.random_normal([n_hidden_4,n_hidden_3])),

'decoder_h2': tf.Variable(tf.random_normal([n_hidden_3,n_hidden_2])),

'decoder_h3': tf.Variable(tf.random_normal([n_hidden_2,n_hidden_1])),

'decoder_h4': tf.Variable(tf.random_normal([n_hidden_1, n_input])),

}

biases = {

'encoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),

'encoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),

'encoder_b3': tf.Variable(tf.random_normal([n_hidden_3])),

'encoder_b4': tf.Variable(tf.random_normal([n_hidden_4])), 'decoder_b1': tf.Variable(tf.random_normal([n_hidden_3])),

'decoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),

'decoder_b3': tf.Variable(tf.random_normal([n_hidden_1])),

'decoder_b4': tf.Variable(tf.random_normal([n_input])),

} def encoder(x):

# Encoder Hidden layer with sigmoid activation #1

layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),

biases['encoder_b1']))

layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),

biases['encoder_b2']))

layer_3 = tf.nn.sigmoid(tf.add(tf.matmul(layer_2, weights['encoder_h3']),

biases['encoder_b3']))

layer_4 = tf.add(tf.matmul(layer_3, weights['encoder_h4']),

biases['encoder_b4'])

return layer_4 #定义decoder

def decoder(x):

# Decoder Hidden layer with sigmoid activation #2

layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),

biases['decoder_b1']))

layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),

biases['decoder_b2']))

layer_3 = tf.nn.sigmoid(tf.add(tf.matmul(layer_2, weights['decoder_h3']),

biases['decoder_b3']))

layer_4 = tf.nn.sigmoid(tf.add(tf.matmul(layer_3, weights['decoder_h4']),

biases['decoder_b4']))

return layer_4 # Construct model

encoder_op = encoder(X) # 128 Features

decoder_op = decoder(encoder_op) # 784 Features # Prediction

y_pred = decoder_op #After

# Targets (Labels) are the input data.

y_true = X #Before cost = tf.reduce_mean(tf.pow(y_true - y_pred, 2))

optimizer = tf.train.AdamOptimizer(learning_rate).minimize(cost) # Launch the graph

with tf.Session() as sess: sess.run(tf.global_variables_initializer())

total_batch = int(mnist.train.num_examples/batch_size)

# Training cycle

for epoch in range(training_epochs):

# Loop over all batches

for i in range(total_batch):

batch_xs, batch_ys = mnist.train.next_batch(batch_size) # max(x) = 1, min(x) = 0

# Run optimization op (backprop) and cost op (to get loss value)

_, c = sess.run([optimizer, cost], feed_dict={X: batch_xs})

# Display logs per epoch step

if epoch % display_step == 0:

print("Epoch:", '%04d' % (epoch+1),

"cost=", "{:.9f}".format(c)) print("Optimization Finished!") encode_result = sess.run(encoder_op,feed_dict={X:mnist.test.images})

plt.scatter(encode_result[:,0],encode_result[:,1],c=mnist.test.labels)

plt.title('Matplotlib,AE,classification--Jason Niu')

plt.show()

TF之AE:AE实现TF自带数据集AE的encoder之后decoder之前的非监督学习分类—Jason niu的更多相关文章

- TF之AE:AE实现TF自带数据集数字真实值对比AE先encoder后decoder预测数字的精确对比—Jason niu

import tensorflow as tf import numpy as np import matplotlib.pyplot as plt #Import MNIST data from t ...

- TF:利用TF的train.Saver载入曾经训练好的variables(W、b)以供预测新的数据—Jason niu

import tensorflow as tf import numpy as np W = tf.Variable(np.arange(6).reshape((2, 3)), dtype=tf.fl ...

- TF:利用sklearn自带数据集使用dropout解决学习中overfitting的问题+Tensorboard显示变化曲线—Jason niu

import tensorflow as tf from sklearn.datasets import load_digits #from sklearn.cross_validation impo ...

- 对抗生成网络-图像卷积-mnist数据生成(代码) 1.tf.layers.conv2d(卷积操作) 2.tf.layers.conv2d_transpose(反卷积操作) 3.tf.layers.batch_normalize(归一化操作) 4.tf.maximum(用于lrelu) 5.tf.train_variable(训练中所有参数) 6.np.random.uniform(生成正态数据

1. tf.layers.conv2d(input, filter, kernel_size, stride, padding) # 进行卷积操作 参数说明:input输入数据, filter特征图的 ...

- TF之RNN:实现利用scope.reuse_variables()告诉TF想重复利用RNN的参数的案例—Jason niu

import tensorflow as tf # 22 scope (name_scope/variable_scope) from __future__ import print_function ...

- TF之RNN:TF的RNN中的常用的两种定义scope的方式get_variable和Variable—Jason niu

# tensorflow中的两种定义scope(命名变量)的方式tf.get_variable和tf.Variable.Tensorflow当中有两种途径生成变量 variable import te ...

- TF之RNN:matplotlib动态演示之基于顺序的RNN回归案例实现高效学习逐步逼近余弦曲线—Jason niu

import tensorflow as tf import numpy as np import matplotlib.pyplot as plt BATCH_START = 0 TIME_STEP ...

- TF之RNN:TensorBoard可视化之基于顺序的RNN回归案例实现蓝色正弦虚线预测红色余弦实线—Jason niu

import tensorflow as tf import numpy as np import matplotlib.pyplot as plt BATCH_START = 0 TIME_STEP ...

- TF之RNN:基于顺序的RNN分类案例对手写数字图片mnist数据集实现高精度预测—Jason niu

import tensorflow as tf from tensorflow.examples.tutorials.mnist import input_data mnist = input_dat ...

随机推荐

- Confluence 6 手动安装语言包和找到更多语言包

手动安装语言包 希望以手动的方式按照语言包,你需要按照下面描述的方式上传语言包.一旦你安装成功后,语言包插件将会默认启用. 插件通常以 JAR 或者 OBR(OSGi Bundle Repositor ...

- ionic3 点击input 弹出白色遮罩 遮挡上部内容

在Manifest中的activity里设置android:windowSoftInputMode为adjustPan,默认为adjustResize,当前窗口的内容将自动移动以便当前焦点从不被键盘覆 ...

- matalb 产生信号源 AM调制解调 FM调制解调

%%%%%%%%%%%%%%%%%%%%%%%%%%% %AM调制解调系统 %%%%%%%%%%%%%%%%%%%%%%%%%%% clear; clf; close all Fs=800000;%采 ...

- redis-cluster集群搭建

Redis3.0版本之前,可以通过Redis Sentinel(哨兵)来实现高可用 ( HA ),从3.0版本之后,官方推出了Redis Cluster,它的主要用途是实现数据分片(Data Sha ...

- 性能测试四十九:ngrinder压测平台

下载地址:https://sourceforge.net/projects/ngrinder/files/ ngrinder工作原理:这里的controller就是ngrinder平台 部署(以win ...

- Springboot+MyBatis+JPA集成

1.前言 Springboot最近可谓是非常的火,本人也在项目中尝到了甜头.之前一直使用Springboot+JPA,用了一段时间发现JPA不是太灵活,也有可能是我不精通JPA,总之为了多学学Sp ...

- SSM + Android 网络文件上传下载

SSM + Android 网络交互的那些事 2016年12月14日 17:58:36 ssm做为后台与android交互,相信只要是了解过的人都知道一些基本的数据交互,向json,对象,map的交互 ...

- txt提取相同内容、不同内容

findstr >相同部分.txt findstr >%~n2多余部分.txt findstr >%~n1多余部分.txt

- docker日志清理

前言:docker运行久了,会发现它的映射磁盘空间爆满,尤其是yum安装的docker的 解决方法: 1. 用脚本清理,一般yum安装的docker,其存储空间一般都在/var/lib/docker/ ...

- Android 中的缓存

AsimpleCache 1.它可以缓存什么东西? 普通的字符串. json. 序列化的java对象 字节数字. 2.主要特色 1:轻,轻到只有一个JAVA文件. 2:可配置,可以配置缓存路径,缓存 ...