Regularization —— linear regression

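This section practices how to use the regularization term. In some machine learning models, if there are many parameters but only a few training samples, the trained model easily overfits. The cost function therefore includes a term that "penalizes" the parameters so that they do not grow too large. Smaller parameters mean a simpler model, and a simpler model is less likely to overfit.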

Regularized linear regression

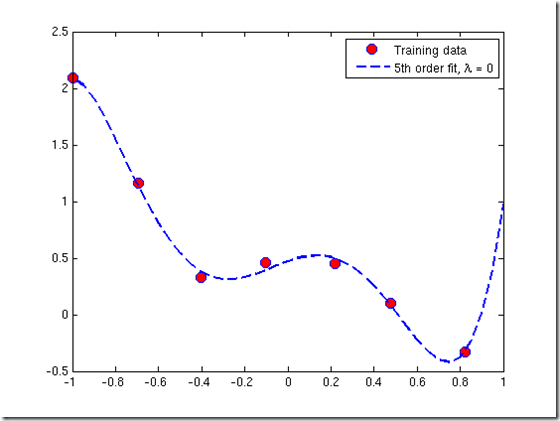

From looking at this plot, it seems that fitting a straight line might be too simple of an approximation. Instead, we will try fitting a higher-order polynomial to the data to capture more of the variations in the points.

Let's try a fifth-order polynomial. Our hypothesis will be

$$h_\theta(x) = \theta_0 + \theta_1 x + \theta_2 x^2 + \theta_3 x^3 + \theta_4 x^4 + \theta_5 x^5$$

This means that we have a hypothesis of six features, because $x^0, x^1, \ldots, x^5$ are now all features of our regression. Notice that even though we are producing a polynomial fit, we still have a linear regression problem because the hypothesis is linear in each feature.
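A minimal Python sketch of the hypothesis $h_\theta(x) = \theta_0 + \theta_1 x + \cdots + \theta_5 x^5$ may make the "linear in each feature" point concrete: the scalar $x$ is first mapped to the feature vector $[x^0, x^1, \ldots, x^5]$, and the prediction is then just a dot product with $\theta$. The names `poly_features` and `hypothesis` are illustrative, not part of the exercise.

```python
def poly_features(x, degree=5):
    """Map a scalar x to the feature vector [x^0, x^1, ..., x^degree]."""
    return [x ** k for k in range(degree + 1)]


def hypothesis(theta, x):
    """h_theta(x) = theta_0 + theta_1*x + ... + theta_n*x^n.

    Nonlinear in x, but linear in theta: it is a dot product of theta
    with the polynomial feature vector.
    """
    features = poly_features(x, degree=len(theta) - 1)
    return sum(t * f for t, f in zip(theta, features))


# theta = [1, 0, 2, 0, 0, 0] encodes h(x) = 1 + 2x^2
print(hypothesis([1, 0, 2, 0, 0, 0], 3.0))  # 19.0
```

Because only the feature vector depends on $x$, any solver for ordinary linear regression can fit this polynomial unchanged.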

Since we are fitting a 5th-order polynomial to a data set of only 7 points, over-fitting is likely to occur. To guard against this, we will use regularization in our model.

Recall that in regularization problems, the goal is to minimize the following cost function with respect to $\theta$:

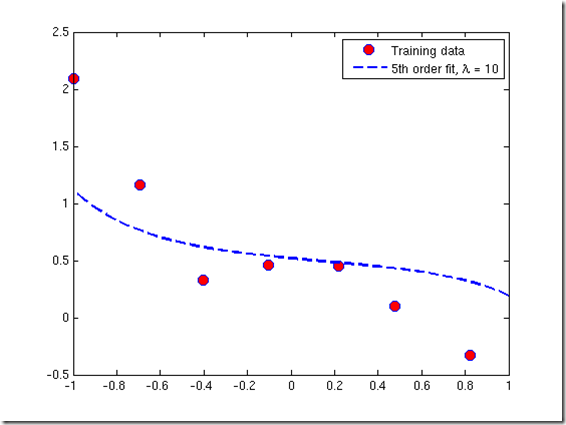

The regularization parameter $\lambda$ is a control on your fitting parameters. As the magnitudes of the fitting parameters increase, there will be an increasing penalty on the cost function. This penalty is dependent on the squares of the parameters as well as the magnitude of $\lambda$. Also, notice that the summation after $\lambda$ does not include $\theta_0^2$.
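Assuming image 2 shows the standard regularized cost for this exercise, $J(\theta) = \frac{1}{2m}\left[\sum_{i=1}^{m} \left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + \lambda \sum_{j=1}^{n} \theta_j^2\right]$, a direct Python sketch looks like the following. The function name `regularized_cost` is hypothetical; the point it illustrates is that the penalty sum starts at $\theta_1$, so the intercept $\theta_0$ is never penalized.

```python
def regularized_cost(theta, xs, ys, lam):
    """J(theta) = 1/(2m) * [sum_i (h(x_i) - y_i)^2 + lam * sum_{j>=1} theta_j^2].

    Assumes a polynomial hypothesis h(x) = sum_k theta_k * x^k.
    theta_0 (the intercept) is deliberately left out of the penalty.
    """
    m = len(xs)
    squared_error = 0.0
    for x, y in zip(xs, ys):
        h = sum(t * x ** k for k, t in enumerate(theta))  # polynomial hypothesis
        squared_error += (h - y) ** 2
    penalty = sum(t ** 2 for t in theta[1:])  # skip theta_0
    return (squared_error + lam * penalty) / (2 * m)


# Perfect fit, but theta_1 = 2 is penalized: (0 + 1 * 2^2) / (2 * 2) = 1.0
print(regularized_cost([1.0, 2.0], [0.0, 1.0], [1.0, 3.0], lam=1.0))   # 1.0
# A large intercept alone costs nothing, whatever lambda is
print(regularized_cost([5.0, 0.0], [0.0, 1.0], [5.0, 5.0], lam=10.0))  # 0.0
```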

The larger $\lambda$ is, the simpler the trained model becomes, because the penalty term carries more weight in the cost.

Normal equations

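Now we will find the best parameters of our model using the normal equations. Recall that the normal equations solution to regularized linear regression is

$$\theta = \left(X^T X + \lambda \begin{bmatrix} 0 & & & \\ & 1 & & \\ & & \ddots & \\ & & & 1 \end{bmatrix}\right)^{-1} X^T \vec{y}$$

The matrix following $\lambda$ is an $(n+1) \times (n+1)$ diagonal matrix with a zero in the upper left and ones down the other diagonal entries. (Remember that $n$ is the number of features, not counting the intercept term.) The vector $\vec{y}$ and the matrix $X$ have the same definition they had for unregularized regression:

$$X = \begin{bmatrix} (x^{(1)})^T \\ \vdots \\ (x^{(m)})^T \end{bmatrix}, \qquad \vec{y} = \begin{bmatrix} y^{(1)} \\ \vdots \\ y^{(m)} \end{bmatrix}$$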

Using this equation, find values for $\theta$ using the three regularization parameters below:

a. $\lambda = 0$ (this is the same case as non-regularized linear regression)
b. $\lambda = 1$
c. $\lambda = 10$
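The regularized normal equation can be sketched in dependency-free Python for the three values of $\lambda$. This is a minimal sketch under stated assumptions, not the MATLAB code of this post: `solve` is a hand-written Gaussian elimination with partial pivoting, `regularized_normal_equation` is a hypothetical helper, and the seven data points are made-up illustrative values, not the contents of `ex5Linx.dat`/`ex5Liny.dat`. The diagonal matrix $\mathrm{diag}(0, 1, \ldots, 1)$ is applied by adding $\lambda$ to every diagonal entry of $X^T X$ except the first.

```python
import math


def solve(a, b):
    """Solve a @ z = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    z = [0.0] * n  # back substitution
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * z[c] for c in range(r + 1, n))
        z[r] = (m[r][n] - s) / m[r][r]
    return z


def regularized_normal_equation(xs, ys, lam, degree=5):
    """theta = (X^T X + lam * diag(0, 1, ..., 1))^{-1} X^T y."""
    X = [[x ** k for k in range(degree + 1)] for x in xs]  # design matrix, rows (x^(i))^T
    p = degree + 1  # n + 1 columns, including the intercept
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    for j in range(1, p):  # upper-left entry stays 0: theta_0 is not penalized
        A[j][j] += lam
    b = [sum(row[i] * y for row, y in zip(X, ys)) for i in range(p)]
    return solve(A, b)  # solve the system rather than forming the inverse


# Made-up illustrative data: 7 points on [-1, 1] (NOT the exercise's data files)
xs = [-1 + i / 3 for i in range(7)]
ys = [x ** 2 + 0.1 * (-1) ** i for i, x in enumerate(xs)]

norms = []
for lam in (0.0, 1.0, 10.0):
    theta = regularized_normal_equation(xs, ys, lam)
    penalized_norm = math.sqrt(sum(t * t for t in theta[1:]))  # norm of theta_1..theta_5
    norms.append(penalized_norm)
    print(f"lambda={lam:g}  theta={[round(t, 3) for t in theta]}  norm={penalized_norm:.4f}")
```

On data like this, the norm of the penalized coefficients $\theta_1, \ldots, \theta_5$ should shrink as $\lambda$ grows, consistent with the observation that a larger $\lambda$ yields a simpler model. Solving the linear system instead of inverting it is a common numerical choice; it computes the same $\theta$ as the formula.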

Code

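```matlab
clc, clear

% Load the data
x = load('ex5Linx.dat');
y = load('ex5Liny.dat');

% Plot the original data
plot(x, y, 'o', 'MarkerEdgeColor', 'b', 'MarkerFaceColor', 'r')

% Turn the single feature into the training-sample matrix [1 x x^2 ... x^5]
x = [ones(length(x), 1) x x.^2 x.^3 x.^4 x.^5];
[m, n] = size(x);
n = n - 1;   % number of features, not counting the intercept term

% Compute the parameters theta (sida) and plot the fitted curves
rm = diag([0; ones(n, 1)]);   % the matrix that follows lambda
lamda = [0 1 10]';
colortype = {'g', 'b', 'r'};
sida = zeros(n + 1, 3);       % initialize theta, one column per lambda
xrange = linspace(min(x(:, 2)), max(x(:, 2)))';
hold on;
for i = 1:3
    % Regularized normal equation; backslash solves the system without forming inv()
    sida(:, i) = (x'*x + lamda(i) .* rm) \ (x'*y);
    norm_sida = norm(sida(:, i))   % 2-norm of theta for this lambda
    yrange = [ones(size(xrange)) xrange xrange.^2 xrange.^3, ...
              xrange.^4 xrange.^5] * sida(:, i);
    plot(xrange', yrange, char(colortype(i)))
end
legend('training data', '\lambda=0', '\lambda=1', '\lambda=10')  % \lambda is rendered by the TeX interpreter
hold off
```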
