spark streaming基本概念一

在学习spark streaming时,建议先学习和掌握RDD。spark streaming无非是针对流式数据处理这个场景,在RDD基础上做了一层封装,简化流式数据处理过程。

spark streaming 引入一些新的概念和方法,本文将介绍这方面的知识。主要包括以下几点:

- 初始化流上下文

- Discretized Streams离散数据流

- Input DStreams and Receivers

- Transformations on DStreams

- Output Operations on DStreams

- DataFrame and SQL Operations

Basic Concepts

Initializing StreamingContext

SparkConf conf = new SparkConf().setAppName(appName).setMaster(master);

JavaStreamingContext ssc = new JavaStreamingContext(conf, new Duration(1000));

./bin/spark-submit \

--class <main-class> \

--master <master-url> \

--deploy-mode <deploy-mode> \

--conf <key>=<value> \

... # other options

<application-jar> \

[application-arguments]

- Run on a Spark standalone cluster in client deploy mode

./bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master spark://207.184.161.138:7077 \

--executor-memory 20G \

--total-executor-cores 100 \

/path/to/examples.jar \

1000

Only one StreamingContext can be active in a JVM at the same time.**==在虚拟机中一次只能运行一个StreamingContext.

stop() on StreamingContext also stops the SparkContext. To stop only the StreamingContext, set the optional parameter of stop() called stopSparkContext to false.

stop()默认停止StreamingContext时同时停止SparkContext。通过参数可设置只停止StreamingContext。

A SparkContext can be re-used to create multiple StreamingContexts, as long as the previous StreamingContext is stopped (without stopping the SparkContext) before the next StreamingContext is created.

SparkContext 可以创建多个StreamingContexts,只要前一个StreamingContext已停止。

Discretized Streams (DStreams)

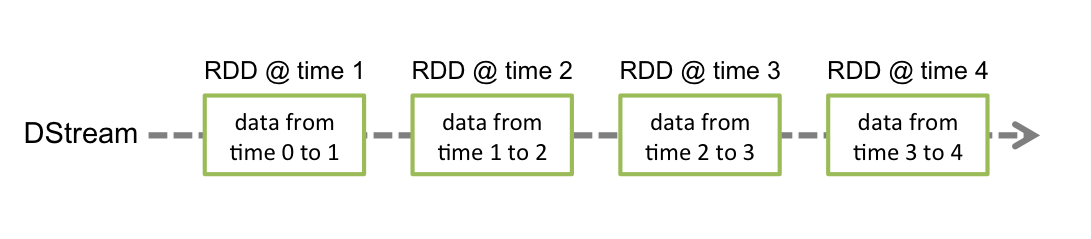

a DStream is represented by a continuous series of RDDs, which is Spark’s abstraction of an immutable, distributed dataset.

DStream离散数据流是不可变的、分布式数据集的抽象,它代表一系列连续的RDDs.

Input DStreams and Receivers

两种数据源

- 基本数据源:文件、socket

- 高级数据源: Kafka, Flume, Kinesis

But note that a Spark worker/executor is a long-running task, hence it occupies one of the cores allocated to the Spark Streaming application. Therefore, it is important to remember that a Spark Streaming application needs to be allocated enough cores (or threads, if running locally) to process the received data, as well as to run the receiver(s).

spark的worker是长期运行的任务,需要占据cpu的一个核。所以在集群上使用时,需要有足够的多cpu核,在本地运行时,需要足够多的线程。所以spark对CPU核数、内存有要求。

- 注意事项

- when running locally, always use “local[n]” as the master URL, where n > number of receivers to run (see Spark Properties for information on how to set the master). 运行本地模式时,n > 接收者数量。

- Extending the logic to running on a cluster, the number of cores allocated to the Spark Streaming application must be more than the number of receivers. Otherwise the system will receive data, but not be able to process it.在集群运行时,分配给spark的核数>接收者数量,否则系统只能接收数据,不能处理数据。

Receiver Reliability

两类接收者:

- 可靠接收者:数据源允许确认转换的数据。

- 不可靠接收者

Transformations on DStreams

A few of these transformations are worth discussing in more detail.

UpdateStateByKey Operation

在更新的时候可以保持任意的状态。

have to do two steps.

- Define the state - The state can be an arbitrary data type.

- Define the state update function - Specify with a function how to update the state using the previous state and the new values from an input stream.

Transform Operation

arbitrary RDD-to-RDD functions to be applied on a DStream.在DStream中可以使用任意的RDD-to-RDD函数。因为DStream本身是对RDD的封装,所以RDD的转换,DStream都支持。

This allows you to do time-varying RDD operations, that is, RDD operations, number of partitions, broadcast variables, etc. can be changed between batches. 允许执行基于时间变化的RDD操作,比如在批处理中 操作RDD,分组的数量、广播变量等。

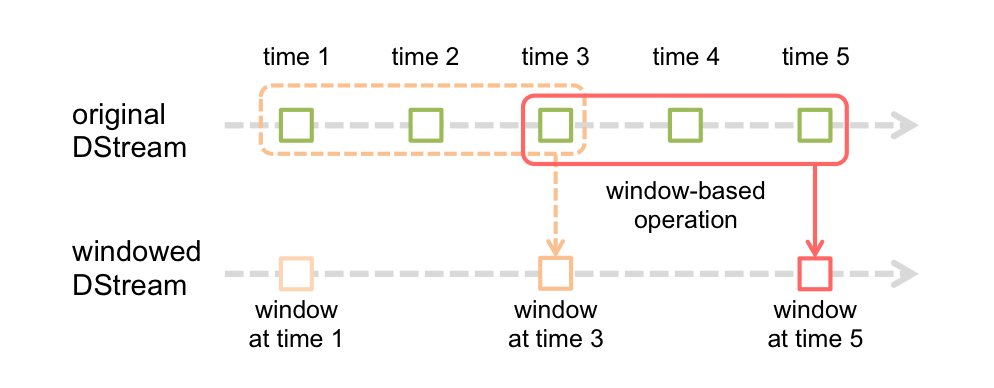

Window Operations

window operation needs to specify two parameters.

- window length - The duration of the window (3 in the figure).

- sliding interval - The interval at which the window operation is performed (2 in the figure).

Join Operations

- Stream-stream joins

- Stream-dataset joins

Output Operations on DStreams

Output operations allow DStream’s data to be pushed out to external systems like a database or a file systems.输出操作运行数据流推送到外部系统,比如数据库或者文件。

Design Patterns for using foreachRDD

dstream.foreachRDD(rdd -> {

rdd.foreachPartition(partitionOfRecords -> {

// ConnectionPool is a static, lazily initialized pool of connections

Connection connection = ConnectionPool.getConnection();

while (partitionOfRecords.hasNext()) {

connection.send(partitionOfRecords.next());

}

ConnectionPool.returnConnection(connection); // return to the pool for future reuse

});

});

Other points to remember:

DStreams are executed lazily by the output operations, just like RDDs are lazily executed by RDD actions. Specifically, RDD actions inside the DStream output operations force the processing of the received data. Hence, if your application does not have any output operation, or has output operations like dstream.foreachRDD() without any RDD action inside them, then nothing will get executed. The system will simply receive the data and discard it.数据流根据输出操作进行延迟处理。所以必须要有输出操作或者 在forechRDD()中有RDD的动作。

By default, output operations are executed one-at-a-time. And they are executed in the order they are defined in the application.输出操作一次一个。

DataFrame and SQL Operations

- Dataset is a new interface added in Spark 1.6 that provides the benefits of RDDs (strong typing, ability to use powerful lambda functions) with the benefits of Spark SQL’s optimized execution engine. A Dataset can be constructed from JVM objects and then manipulated using functional transformations (map, flatMap, filter, etc.).

DateSet吸收了RDD的优点,和sparksql优化执行引擎的好处。通过JVM Objects构造Dataset。

SparkSession

SparkSession spark = SparkSession

.builder()

.appName("Java Spark SQL basic example")

.config("spark.some.config.option", "some-value")

.getOrCreate();

- DataFrame: Untyped Dataset Operations (aka DataFrame Operations)

无类型的Dataset

Dataset<Row> sqlDF = spark.sql("SELECT * FROM people");

Each RDD is converted to a DataFrame, registered as a temporary table and then queried using SQL.将RDD转换成DataFrame,注册一个临时表,然后使用sql查询。

参考文献

spark streaming基本概念一的更多相关文章

- Spark Streaming核心概念与编程

Spark Streaming核心概念与编程 1. 核心概念 StreamingContext Create StreamingContext import org.apache.spark._ im ...

- Spark Streaming基础概念

为了更好地理解Spark Streaming 子框架的处理机制,必须得要自己弄清楚这些最基本概念. 1.离散流(Discretized Stream,DStream):这是Spark Streamin ...

- 通过案例对 spark streaming 透彻理解三板斧之二:spark streaming运行机制

本期内容: 1. Spark Streaming架构 2. Spark Streaming运行机制 Spark大数据分析框架的核心部件: spark Core.spark Streaming流计算. ...

- Spark Streaming笔记

Spark Streaming学习笔记 liunx系统的习惯创建hadoop用户在hadoop根目录(/home/hadoop)上创建如下目录app 存放所有软件的安装目录 app/tmp 存放临时文 ...

- 【慕课网实战】Spark Streaming实时流处理项目实战笔记十之铭文升级版

铭文一级: 第八章:Spark Streaming进阶与案例实战 updateStateByKey算子需求:统计到目前为止累积出现的单词的个数(需要保持住以前的状态) java.lang.Illega ...

- 【慕课网实战】Spark Streaming实时流处理项目实战笔记九之铭文升级版

铭文一级: 核心概念:StreamingContext def this(sparkContext: SparkContext, batchDuration: Duration) = { this(s ...

- 大数据开发实战:Spark Streaming流计算开发

1.背景介绍 Storm以及离线数据平台的MapReduce和Hive构成了Hadoop生态对实时和离线数据处理的一套完整处理解决方案.除了此套解决方案之外,还有一种非常流行的而且完整的离线和 实时数 ...

- Spark Streaming和Kafka集成深入浅出

写在前面 本文主要介绍Spark Streaming基本概念.kafka集成.Offset管理 本文主要介绍Spark Streaming基本概念.kafka集成.Offset管理 一.概述 Spar ...

- Spark踩坑记——Spark Streaming+Kafka

[TOC] 前言 在WeTest舆情项目中,需要对每天千万级的游戏评论信息进行词频统计,在生产者一端,我们将数据按照每天的拉取时间存入了Kafka当中,而在消费者一端,我们利用了spark strea ...

随机推荐

- 使用PowerShell 修改DNS并加入域中

运行环境:Windows Server 2012 R2 此powershell脚本为自动修改本机DNS并加入到域中 但有的时候会提示[本地计算机当前不是域的一部分.请重新执行脚本!]错误,如遇到该错误 ...

- (4.35)sql server清理过期文件【转】

在SQL Server中, 一般是用维护计划实现删除过期文件.不过直接用脚本也是可以的,而且更灵活. 下面介绍三种方法, 新建一个作业, 在作业的步骤里加上相关的脚本就可以了. --1. xp_del ...

- Web循环监控Java调用 / Java调用.net wcf接口

背景介紹 (Background Introduction) 目前有一些报表客户抱怨打不开 报表执行过程过长,5.8.10秒甚至更多 不能及时发现和掌握服务器web站点情况 用戶需求(User Req ...

- java 基础(一)-实验楼

一.标识符:区分大小写由数字.大小写字母.$._ 组成,不能由数字开头.各种变量.方法和类等要素命名时使用的字符序列称.凡是自己可以起名字的地方都叫标识符.不可以使用关键字和保留字,但能包含关键字和保 ...

- 阿里云云计算ACP专业认证考试

阿里云云计算专业认证(Alibaba Cloud Certified Professional,ACP)是面向使用阿里云云计算产品的架构.开发.运维类人员的专业技术认证. 更多阿里云云计算ACP专业认 ...

- SpringBoot起飞系列-国际化(六)

一.前言 国际化这个功能可能我们不常用,但是在有需要的地方还是必须要上的,今天我们就来看一下怎么在我们的web开发中配置国际化,让我们的网站可以根据语言来展示不同的形式.本文接续上一篇SpringBo ...

- tp5 post接到的json被转义 问题解决

今天做项目的时候前端需要可以保存可变数据, 然后原样返回给前端 接口 $data =input('post.');//用户唯一标识$goods = $data['goods']; $shopcuxia ...

- pt工具

percona-toolkit简介percona-toolkit是一组高级命令行工具的集合,用来执行各种通过手工执行非常复杂和麻烦的mysql任务和系统任务,这些任务包括: 检查master和slav ...

- 一个小时前,美国主流媒体,头条,谷歌两位创始人突然宣布退下来,把万亿美元的帝国交给Sundar Pichai

一个小时前,美国各大主流媒体头条,谷歌两位创始人,放弃了万亿美元的帝国控制权,交给了CEO Sundar Pichai.

- 养成一个SQL好习惯

要知道sql语句,我想我们有必要知道sqlserver查询分析器怎么执行我么sql语句的,我么很多人会看执行计划,或者用profile来监视和调优查询语句或者存储过程慢的原因,但是如果我们知道查询分析 ...