Optimization Algorithms

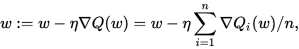

1. Stochastic Gradient Descent

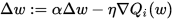

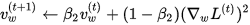

2. SGD With Momentum

Stochastic gradient descent with momentum remembers the update Δ w at each iteration, and determines the next update as a linear combination of the gradient and the previous update:

Unlike in classical stochastic gradient descent, it tends to keep traveling in the same direction, preventing oscillations.

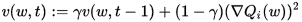

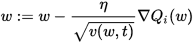

3. RMSProp

RMSProp (for Root Mean Square Propagation) is also a method in which the learning rate is adapted for each of the parameters. The idea is to divide the learning rate for a weight by a running average of the magnitudes of recent gradients for that weight. So, first the running average is calculated in terms of means square,

where,

And the parameters are updated as,

RMSProp has shown excellent adaptation of learning rate in different applications. RMSProp can be seen as a generalization of Rprop and is capable to work with mini-batches as well opposed to only full-batches.

4. The Adam Algorithm

Adam (short for Adaptive Moment Estimation) is an update to the RMSProp optimizer. In this optimization algorithm, running averages of both the gradients and the second moments of the gradients are used. Given parameters

where

参考链接:Wikipedia。

Optimization Algorithms的更多相关文章

- (转) An overview of gradient descent optimization algorithms

An overview of gradient descent optimization algorithms Table of contents: Gradient descent variants ...

- An overview of gradient descent optimization algorithms

原文地址:An overview of gradient descent optimization algorithms An overview of gradient descent optimiz ...

- 课程二(Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization),第二周(Optimization algorithms) —— 2.Programming assignments:Optimization

Optimization Welcome to the optimization's programming assignment of the hyper-parameters tuning spe ...

- 优化算法动画演示Alec Radford's animations for optimization algorithms

Alec Radford has created some great animations comparing optimization algorithms SGD, Momentum, NAG, ...

- [C2W2] Improving Deep Neural Networks : Optimization algorithms

第二周:优化算法(Optimization algorithms) Mini-batch 梯度下降(Mini-batch gradient descent) 本周将学习优化算法,这能让你的神经网络运行 ...

- 【论文翻译】An overiview of gradient descent optimization algorithms

这篇论文最早是一篇2016年1月16日发表在Sebastian Ruder的博客.本文主要工作是对这篇论文与李宏毅课程相关的核心部分进行翻译. 论文全文翻译: An overview of gradi ...

- Coursera Deep Learning 2 Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization - week2, Optimization algorithms

Gradient descent Batch Gradient Decent, Mini-batch gradient descent, Stochastic gradient descent 还有很 ...

- An overview of gradient descent optimization algorithms (更新到Adam)

Momentum:解快了收敛速度,同时也减弱了SGD的波动 NAG: 减速了Momentum更新参数太快 Adagrad: 出现频率较低参数采用较大的更新,对于出现频率较高的参数采用较小的,不共用一个 ...

- 最佳化常用测试函数 Optimization Test functions

http://www.sfu.ca/~ssurjano/optimization.html The functions listed below are some of the common func ...

随机推荐

- 消息中间件RabbitMq的代码使用案例

消费者: ---------------------- 构造初始化: public RabbitMqReceiver(String host, int port, String username, S ...

- Vue常见问题集中

a.VScode保持vue语法高亮的方式: 1.安装插件:vetur.打开VScode,Ctrl + P 然后输入 ext install vetur 然后回车点安装即可. 2.在 VSCode中使用 ...

- new与malloc有什么区别

转自http://www.cnblogs.com/QG-whz/p/5140930.html 前言 几个星期前去面试C++研发的实习岗位,面试官问了个问题: new与malloc有什么区别? 这是个老 ...

- Spring Boot 面试总结(一)

1.使用 Spring Boot 前景? 多年来,随着新功能的增加,spring变得越来越复杂.只需访问https://spring.io/projects页面,我们就会看到可以在我们的应用程序中使用 ...

- 老贾的幸福生活day 04

变量 变量名的规则: 变量名由字母,数字,下划线组成 变量名不能以数字开头 变量名要具有可描述性 变量名要区分大小写 变量名禁止使用python关键字 变量名不能使用中文和拼音 变量名推荐写法: 驼峰 ...

- Elastic Search中mapping的问题

Mapping在ES中是非常重要的一个概念.决定了一个index中的field使用什么数据格式存储,使用什么分词器解析,是否有子字段,是否需要copy to其他字段等.Mapping决定了index中 ...

- Elastic Search中Query String常见语法

1 搜索所有数据timeout参数:是超时时长定义.代表每个节点上的每个shard执行搜索时最多耗时多久.不会影响响应的正常返回.只会影响返回响应中的数据数量.如:索引a中,有10亿数据.存储在5个s ...

- 怎样理解 DOM 的三种层级关系

除了根节点,其他节点都有三种层级关系. 父节点关系(parentNode):直接的那个上级节点 子节点关系(childNodes):直接的下级节点 同级节点关系(sibling):拥有同一个父节点的节 ...

- C语言中signed和unsigned理解

一直在学java,今天开始研究ACM的算法题,需要用到C语言,发现好多知识点都不清楚了,看来以后要多多总结~ signed意思为有符号的,也就是第一个位代表正负,剩余的代表大小,例如:signed i ...

- Navicat 12的安装与使用(附加破解)

地址https://blog.csdn.net/tomos428/article/details/80483450?tdsourcetag=s_pctim_aiomsg