matlab(7) Regularized logistic regression : mapFeature(将feature增多) and costFunctionReg

Regularized logistic regression : mapFeature(将feature增多) and costFunctionReg

ex2_reg.m文件中的部分内容

%% =========== Part 1: Regularized Logistic Regression ============

% In this part, you are given a dataset with data points that are not

% linearly separable. However, you would still like to use logistic

% regression to classify the data points.

%

% To do so, you introduce more features to use -- in particular, you add

% polynomial features to our data matrix (similar to polynomial

% regression).

%

% Add Polynomial Features

% Note that mapFeature also adds a column of ones for us, so the intercept

% term is handled

X = mapFeature(X(:,1), X(:,2)); %调用下面的mapFeature.m文件中的mapFeature(X1,X2)函数

%将只有x1,x2feature map成一个有28个feature的6次的多项式  ,这样就能画出更复杂的decision boundary, 但同时也有可能带来overfitting的结果(取决于λ的值)

,这样就能画出更复杂的decision boundary, 但同时也有可能带来overfitting的结果(取决于λ的值)

% 调用完后X变为118*28(118个example,28个属性,包括前面的1做为一列)的矩阵

% Initialize fitting parameters

initial_theta = zeros(size(X, 2), 1); %initial_theta: 28*1

% Set regularization parameter lambda to 1

lambda = 1; % λ=1;当λ=0时表示不正则化(No regularization ),这时会出现overfitting;当λ=100时会出现Too much regularization(Underfitting)

% Compute and display initial cost and gradient for regularized logistic

% regression

[cost, grad] = costFunctionReg(initial_theta, X, y, lambda); %调用costFunctionReg.m文件中的costFunctionReg(theta, X, y, lambda)函数

fprintf('Cost at initial theta (zeros): %f\n', cost); %计算initial theta (zeros)时的cost 值

fprintf('\nProgram paused. Press enter to continue.\n');

pause;

mapFeature.m文件

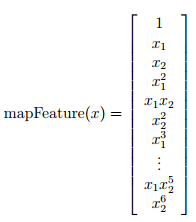

function out = mapFeature(X1, X2)

% MAPFEATURE Feature mapping function to polynomial features

%

% MAPFEATURE(X1, X2) maps the two input features

% to quadratic features used in the regularization exercise.

%

% Returns a new feature array with more features, comprising of

% X1, X2, X1.^2, X2.^2, X1*X2, X1*X2.^2, etc..

%

% Inputs X1, X2 must be the same size

%

degree = 6; %map the features into all polynomial terms of x1 and x2 up to the sixth power

out = ones(size(X1(:,1)));

for i = 1:degree

for j = 0:i

out(:, end+1) = (X1.^(i-j)).*(X2.^j);

end

end

end

costFunctionReg.m文件

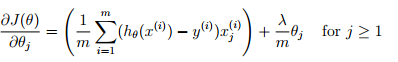

function [J, grad] = costFunctionReg(theta, X, y, lambda)

%COSTFUNCTIONREG Compute cost and gradient for logistic regression with regularization

% J = COSTFUNCTIONREG(theta, X, y, lambda) computes the cost of using

% theta as the parameter for regularized logistic regression and the

% gradient of the cost w.r.t. to the parameters.

% Initialize some useful values

m = length(y); % number of training examples

% You need to return the following variables correctly

J = 0;

grad = zeros(size(theta));

% ====================== YOUR CODE HERE ======================

% Instructions: Compute the cost of a particular choice of theta.

% You should set J to the cost.

% Compute the partial derivatives and set grad to the partial

% derivatives of the cost w.r.t. each parameter in theta

J = 1/m*(-1*y'*log(sigmoid(X*theta)) - (ones(1,m)-y')*log(ones(m,1)-sigmoid(X*theta)))...

+ lambda/(2*m) * (theta(2:end,:))' * theta(2:end,:); %Note that you should not regularize the parameter θ0.

the regularized cost function,

the regularized cost function,

grad = 1/m * (X' * (sigmoid(X*theta) - y)) + (lambda/m)*theta; %

grad(1) = 1/m * (X(:,1))' * (sigmoid(X*theta) - y); %

% Note that you should not regularize the parameter θ0.

% =============================================================

end

matlab(7) Regularized logistic regression : mapFeature(将feature增多) and costFunctionReg的更多相关文章

- matlab(6) Regularized logistic regression : plot data(画样本图)

Regularized logistic regression : plot data(画样本图) ex2data2.txt 0.051267,0.69956,1-0.092742,0.68494, ...

- matlab(8) Regularized logistic regression : 不同的λ(0,1,10,100)值对regularization的影响,对应不同的decision boundary\ 预测新的值和计算模型的精度predict.m

不同的λ(0,1,10,100)值对regularization的影响\ 预测新的值和计算模型的精度 %% ============= Part 2: Regularization and Accur ...

- machine learning(15) --Regularization:Regularized logistic regression

Regularization:Regularized logistic regression without regularization 当features很多时会出现overfitting现象,图 ...

- Regularized logistic regression

要解决的问题是,给出了具有2个特征的一堆训练数据集,从该数据的分布可以看出它们并不是非常线性可分的,因此很有必要用更高阶的特征来模拟.例如本程序中个就用到了特征值的6次方来求解. Data To be ...

- 编程作业2.2:Regularized Logistic regression

题目 在本部分的练习中,您将使用正则化的Logistic回归模型来预测一个制造工厂的微芯片是否通过质量保证(QA),在QA过程中,每个芯片都会经过各种测试来保证它可以正常运行.假设你是这个工厂的产品经 ...

- 吴恩达机器学习笔记22-正则化逻辑回归模型(Regularized Logistic Regression)

针对逻辑回归问题,我们在之前的课程已经学习过两种优化算法:我们首先学习了使用梯度下降法来优化代价函数

- Stanford机器学习---第三讲. 逻辑回归和过拟合问题的解决 logistic Regression & Regularization

原文:http://blog.csdn.net/abcjennifer/article/details/7716281 本栏目(Machine learning)包括单参数的线性回归.多参数的线性回归 ...

- Andrew Ng机器学习编程作业:Logistic Regression

编程作业文件: machine-learning-ex2 1. Logistic Regression (逻辑回归) 有之前学生的数据,建立逻辑回归模型预测,根据两次考试结果预测一个学生是否有资格被大 ...

- ML 逻辑回归 Logistic Regression

逻辑回归 Logistic Regression 1 分类 Classification 首先我们来看看使用线性回归来解决分类会出现的问题.下图中,我们加入了一个训练集,产生的新的假设函数使得我们进行 ...

随机推荐

- linux 给运行程序指定动态库路径

1. 连接和运行时库文件搜索路径到设置 库文件在连接(静态库和共享 库)和运行(仅限于使用共享库的程序)时被使用,其搜索路径是在系统中进行设置的.一般 Linux 系统把 /lib 和 /usr/li ...

- scau 10692 XYM-入门之道

题目:http://paste.ubuntu.com/14157516/ 思路:判断一个西瓜,看看能不能直接吃完,如果能,就吃了.但是:如果不能,就要分成两半,就这样分割,不用以为要用到n维数组,用一 ...

- java微服务的统一配置中心

为了更好的管理应用的配置,也为了不用每次更改配置都重启应用,我们可以使用配置中心 关于eureka的服务注册和rabbitMQ的安装使用(自动更新配置需要用到rabbitMQ)这里不赘述,只关注配置中 ...

- Gulp-构建工具 相关内容整理

Gulp- 简介 Automate and enhance your workflow | 用自动化构建工具增强你的工作流程 Gulp 是什么? gulp是前端开发过程中一种基于流的代码构建工具,是自 ...

- 2019年6月12日——开始记录并分享学习心得——Python3.7中对列表进行排序

Python中对列表的排序按照是排序是否可以恢复分为:永久性排序和临时排序. Python中对列表的排序可以按照使用函数的不同可以分为:sort( ), sorted( ), reverse( ). ...

- 从零开始学Flask框架-005

表单 Flask-WTF 项目结构 pip install flask-wtf 为了实现CSRF 保护,Flask-WTF 需要程序设置一个密钥.Flask-WTF 使用这个密钥生成加密令牌,再用令牌 ...

- Z算法板子

给定一个串$s$, $Z$算法可以$O(n)$时间求出一个$z$数组 $z_i$表示$s[i...n]$与$s$的前缀匹配的最长长度, 下标从$0$开始 void init(char *s, int ...

- shell 学习笔记2-shell-test

一.字符串测试表达式 前面一篇介绍:什么是shell,shell变量请参考: shell 学习笔记1-什么是shell,shell变量 1.字符串测试表达式参数 字符串需要用""引 ...

- 20190705-记IIS发布.NET CORE框架系统之所遇

新手在IIS上发布.NET CORE框架的系统之注意事项 序:本篇随笔是我的处子笔,只想记录自己觉得在系统发布过程中比较重要的步骤,一来,忝作自己的学习笔记,以备不时之需,二来,也希望可以帮助有需要的 ...

- opencv Cascade Classifier Training中 opencv_annotation命令

在win7 cmd中试了官方的指令opencv_annotation --annotations=/path/to/annotations/file.txt --images=/path/to/ima ...