Paper Reading - Show, Attend and Tell: Neural Image Caption Generation with Visual Attention ( ICML 2015 )

Link of the Paper: https://arxiv.org/pdf/1502.03044.pdf

Main Points:

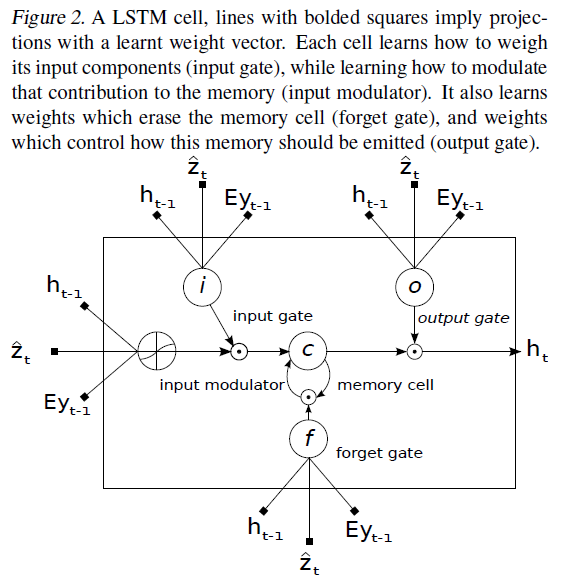

- Encoder-Decoder Framework: Encoder uses a convolutional neural network to extract a set of feature vectors which the authors refer to as annotation vectors. The extractor produces L vectors, each of which is a D-dimensional representation corresponding to a part of the image. a = { a1, ..., aL }, ai ∈ RD. In order to obtain a correspondence between the feature vectors and portions of the 2-D image, they extract features from a lower convolutional layer unlike previous work which instead used a fully connected layer. This allows the decoder to selectively focus on certain parts of an image by weighting a subset of all the feature vectors. Decoder uses a LSTM network to produce a caption by generating one word at every time step conditioned on a context vector, the previous hidden state and the previously generated words.

- Two attention-based image caption generators under a common framework: a "soft" deterministic attention mechanism trainable by standard back-propagation methods; and a "hard" stochastic attention mechanism trainable by maximizing an approximate variational lower bound or equivalently by Reinforce.

Other Key Points:

- Rather than compress an entire image into a static representation, attention allows for salient features to dynamically come to the forefront as needed. This is especially important when there is a lot of clutter in an image. Using representations ( such as those from the very top layer of a conv net ) that distill information in image down to the most salient objects is one effective solution that has been widely adopted in previous work. Unfortunately, this has one potential drawback of losing information which could be useful for richer, more descriptive captions. Using lower-level representation can help preserve this information.

Paper Reading - Show, Attend and Tell: Neural Image Caption Generation with Visual Attention ( ICML 2015 )的更多相关文章

- [Paper Reading] Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

论文链接:https://arxiv.org/pdf/1502.03044.pdf 代码链接:https://github.com/kelvinxu/arctic-captions & htt ...

- 论文笔记:Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

Show, Attend and Tell: Neural Image Caption Generation with Visual Attention 2018-08-10 10:15:06 Pap ...

- 论文:Show, Attend and Tell: Neural Image Caption Generation with Visual Attention-阅读总结

Show, Attend and Tell: Neural Image Caption Generation with Visual Attention-阅读总结 笔记不能简单的抄写文中的内容,得有自 ...

- Paper Reading - Show and Tell: A Neural Image Caption Generator ( CVPR 2015 )

Link of the Paper: https://arxiv.org/abs/1411.4555 Main Points: A generative model ( NIC, GoogLeNet ...

- [Paper Reading] Show and Tell: A Neural Image Caption Generator

论文链接:https://arxiv.org/pdf/1411.4555.pdf 代码链接:https://github.com/karpathy/neuraltalk & https://g ...

- [Paper Reading] Image Captioning using Deep Neural Architectures (arXiv: 1801.05568v1)

Main Contributions: A brief introduction about two different methods (retrieval based method and gen ...

- Paper Reading - CNN+CNN: Convolutional Decoders for Image Captioning

Link of the Paper: https://arxiv.org/abs/1805.09019 Innovations: The authors propose a CNN + CNN fra ...

- Paper Reading: Stereo DSO

开篇第一篇就写一个paper reading吧,用markdown+vim写东西切换中英文挺麻烦的,有些就偷懒都用英文写了. Stereo DSO: Large-Scale Direct Sparse ...

- Paper Reading - Mind’s Eye: A Recurrent Visual Representation for Image Caption Generation ( CVPR 2015 )

Link of the Paper: https://ieeexplore.ieee.org/document/7298856/ A Correlative Paper: Learning a Rec ...

随机推荐

- 课时53.video标签第二种格式(掌握)

由于视频数据非常非常的重要,所以五大浏览器厂商都不愿意支持别人都视频格式,所以导致了没有一种视频格式是所有浏览器都支持的,这个时候W3C为了解决这个问题,所以推出了第二种video标签的格式 如何查看 ...

- Lodash数组篇

概念简述 lodash 是一个类库 Lodash 通过降低 array.number.objects.string 等等的使用难度从而让 JavaScript 变得更简单 用法 let _ = re ...

- java ssm 后台框架平台 项目源码 websocket IM quartz springmvc

A代码编辑器,在线模版编辑,仿开发工具编辑器,pdf在线预览,文件转换编码B 集成代码生成器 [正反双向](单表.主表.明细表.树形表,快速开发利器)+快速表单构建器freemaker模版技术 ,0个 ...

- [转]收集Oracle UNDO诊断信息脚本

使用该脚本可收集与undo相关的信息,在undo表空间出问题时可使用该脚本来诊断. 使用方法: 1.将脚本拷贝到服务器,创建文件保存,文件名可随意取,例如:diag.out 2.以sys用户登录数据库 ...

- Oracle 触发器(二)

Oracle触发器详解 触发器是许多关系数据库系统都提供的一项技术.在oracle系统里,触发器类似过程和函数,都有声明,执行和异常处理过程的PL/SQL块. 8.1 触发器类型 触发器在数据库里 ...

- LightOJ 1118--Incredible Molecules(两圆相交)

1118 - Incredible Molecules PDF (English) Statistics Forum Time Limit: 0.5 second(s) Memory Lim ...

- QueryableHelper

using System; using System.Collections.Generic; using System.Linq; using System.Linq.Expressions; us ...

- python3的下载与安装

python3的下载与安装 1.首先,从Python官方网站:http://python.org/getit/ ,下载Windows的安装包 ython官网有几个下载文件,有什么区别?Python 3 ...

- 从python2.x到python3.x进阶突破

1.p2是重复代码,语言不同,不支持中文;p3则相反,其中代码不重复,语言用的相同的,并且是支持中文的. 2.p2中input中输入数字输出数字,输入字符串必须自己手动加引号才行;p3中input输出 ...

- Java语法糖 : try-with-resources

先了解几个背景知识 什么是语法糖 语法糖是在语言中增加的某种语法,在不影响功能的情况下为程序员提供更方便的使用方式. 什么是资源 使用之后需要释放或者回收的都可以称为资源,比如JDBC的connect ...