Event Recommendation Engine Challenge Step-by-Step Analysis: Step 5

I. Before You Read

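This article builds on:

Event Recommendation Engine Challenge Step-by-Step Analysis: Step 1

Event Recommendation Engine Challenge Step-by-Step Analysis: Step 2

Event Recommendation Engine Challenge Step-by-Step Analysis: Step 3

Event Recommendation Engine Challenge Step-by-Step Analysis: Step 4

Please read those four articles first.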

II. Activity / Event Popularity Data

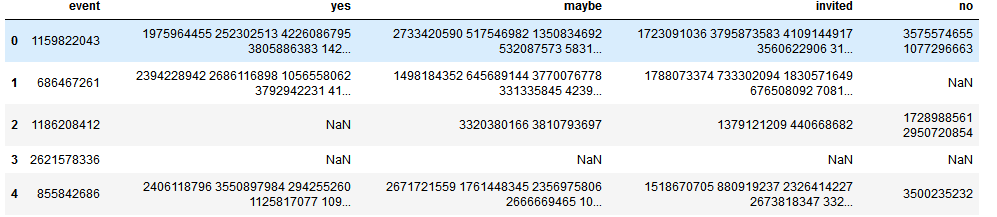

This step uses the event_attendees.csv.gz file, so let's take a look at it first:

```python
import pandas as pd

df_events_attendees = pd.read_csv('event_attendees.csv.gz', compression='gzip')
df_events_attendees.head()
```

Example output (this file records the attendance information of each event):

1) Variable definitions

nevents: the total number of events in train.csv and test.csv, here 13418

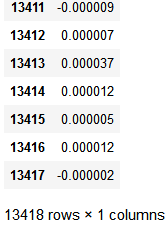

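self.eventPopularity: a sparse matrix of shape (nevents, 1). For each event it stores the number of "yes" responses minus the number of "no" responses in the file shown above. Concretely, the file is processed line by line: look up the event's index, count the space-separated entries in the yes column, subtract the number of space-separated entries in the no column, and finally normalize the column.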
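A minimal sketch of this popularity computation, run on two hypothetical rows that follow the event_attendees.csv column order (event, yes, maybe, invited, no). It reuses the original `split(' ')` counting and, for comparison, `str.split()` with no argument: in Python `''.split(' ')` returns `['']`, so the original code counts an empty cell as one user, while `''.split()` returns `[]`. The toy rows and the `popularity` helper are illustrative assumptions, not part of the original code.

```python
import numpy as np
import scipy.sparse as ss
from sklearn.preprocessing import normalize

# Hypothetical toy rows in the event_attendees.csv layout: event,yes,maybe,invited,no
rows = [
    "e1,u1 u2 u3,u4,,u5",
    "e2,u6,,u7,",
]

def popularity(rows, split_fn):
    """Return yes-count minus no-count per event, L1-normalized over the column."""
    pop = ss.dok_matrix((len(rows), 1))
    for i, line in enumerate(rows):
        cols = line.strip().split(',')
        pop[i, 0] = len(split_fn(cols[1])) - len(split_fn(cols[4]))
    return normalize(pop, norm='l1', axis=0).toarray().ravel()

# As in the original code: an empty "no" cell still counts as 1
original = popularity(rows, lambda s: s.split(' '))
# Alternative: str.split() counts an empty cell as 0
alternative = popularity(rows, lambda s: s.split())
print(original, alternative)
```

For e2 the two variants disagree (0 versus 1 before normalization), which then shifts every normalized value in the column.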

```python
import pandas as pd
import scipy.io as sio

eventPopularity = sio.mmread('EA_eventPopularity').todense()
pd.DataFrame(eventPopularity)
```

Example output:

Complete code for step 5:

```python
from collections import defaultdict
import locale
import pycountry
import scipy.sparse as ss
import scipy.io as sio
import itertools
# import cPickle
# From Python 3 on, cPickle has been replaced by _pickle
import _pickle as cPickle
import scipy.spatial.distance as ssd
import datetime
from sklearn.preprocessing import normalize
import gzip
import numpy as np
import hashlib


# Process user-event association data
class ProgramEntities:
    """
    We only care about users and events that appear in train and test,
    so we focus on processing this association data.
    Statistics: train and test contain 3391 users and 13418 events in total.
    """
    def __init__(self):
        # Collect the distinct users and events in the training and test sets
        uniqueUsers = set()  # all distinct users: 3391
        uniqueEvents = set()  # all distinct events: 13418
        eventsForUser = defaultdict(set)  # user -> events the user interacted with
        usersForEvent = defaultdict(set)  # event -> users who interacted with it
        for filename in ['train.csv', 'test.csv']:
            f = open(filename)
            f.readline()  # skip the header line
            for line in f:
                cols = line.strip().split(',')
                uniqueUsers.add(cols[0])
                uniqueEvents.add(cols[1])
                eventsForUser[cols[0]].add(cols[1])
                usersForEvent[cols[1]].add(cols[0])
            f.close()

        self.userEventScores = ss.dok_matrix((len(uniqueUsers), len(uniqueEvents)))
        self.userIndex = dict()
        self.eventIndex = dict()
        for i, u in enumerate(uniqueUsers):
            self.userIndex[u] = i
        for i, e in enumerate(uniqueEvents):
            self.eventIndex[e] = i

        ftrain = open('train.csv')
        ftrain.readline()
        for line in ftrain:
            cols = line.strip().split(',')
            i = self.userIndex[cols[0]]
            j = self.eventIndex[cols[1]]
            # score = interested - not_interested (columns 4 and 5 of train.csv)
            self.userEventScores[i, j] = int(cols[4]) - int(cols[5])
        ftrain.close()
        sio.mmwrite('PE_userEventScores', self.userEventScores)

        # To avoid unnecessary computation, find all associated users and associated events.
        # Associated users: user pairs that acted on at least one common event.
        # Associated events: event pairs that at least one common user acted on.
        self.uniqueUserPairs = set()
        self.uniqueEventPairs = set()
        for event in uniqueEvents:
            users = usersForEvent[event]
            if len(users) > 2:  # note: '> 2' (kept from the original) skips events with exactly 2 users
                self.uniqueUserPairs.update(itertools.combinations(users, 2))
        for user in uniqueUsers:
            events = eventsForUser[user]
            if len(events) > 2:
                self.uniqueEventPairs.update(itertools.combinations(events, 2))
        # print(self.userIndex)
        with open('PE_userIndex.pkl', 'wb') as fout:
            cPickle.dump(self.userIndex, fout)
        with open('PE_eventIndex.pkl', 'wb') as fout:
            cPickle.dump(self.eventIndex, fout)
```
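To make "associated user pairs" concrete, here is a small sketch on hypothetical toy data (the user and event IDs are made up). It mirrors the `itertools.combinations` step in `ProgramEntities` and shows the effect of the `> 2` threshold used in the original code; `user_pairs` is an illustrative helper, not part of the original.

```python
import itertools
from collections import defaultdict

# Hypothetical (user, event) rows standing in for the first two columns of train.csv/test.csv
rows = [("u1", "e1"), ("u2", "e1"), ("u3", "e1"), ("u4", "e2"), ("u5", "e2")]

usersForEvent = defaultdict(set)
for user, event in rows:
    usersForEvent[event].add(user)

def user_pairs(threshold):
    """Collect user pairs from events that have more than `threshold` users."""
    pairs = set()
    for event, users in usersForEvent.items():
        if len(users) > threshold:
            # sorted() only makes the pair order deterministic for this demo
            pairs.update(itertools.combinations(sorted(users), 2))
    return pairs

print(sorted(user_pairs(2)))  # threshold used in the original code
print(sorted(user_pairs(1)))  # also keeps events with exactly two users
```

The original code builds pairs from unordered sets, so whether a pair comes out as (a, b) or (b, a) is arbitrary; the similarity code writes both `[i, j]` and `[j, i]`, so the orientation does not matter there.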

```python
# Data cleaning class
class DataCleaner:
    def __init__(self):
        # Helper maps for converting strings to numeric values
        # Load locales
        self.localeIdMap = defaultdict(int)
        for i, l in enumerate(locale.locale_alias.keys()):
            self.localeIdMap[l] = i + 1
        # Load countries
        self.countryIdMap = defaultdict(int)
        ctryIdx = defaultdict(int)
        for i, c in enumerate(pycountry.countries):
            self.countryIdMap[c.name.lower()] = i + 1
            # pycountry names the US "United States", so the original check
            # c.name.lower() == 'usa' never matched; compare ISO alpha-2 codes instead
            if c.alpha_2 == 'US':
                ctryIdx['US'] = i
            if c.alpha_2 == 'CA':
                ctryIdx['CA'] = i
        # Map US states and Canadian provinces to their country's ID
        for cc in ctryIdx.keys():
            for s in pycountry.subdivisions.get(country_code=cc):
                self.countryIdMap[s.name.lower()] = ctryIdx[cc] + 1
        self.genderIdMap = defaultdict(int, {'male': 1, 'female': 2})

    # Handle locale IDs
    def getLocaleId(self, locstr):
        # localeIdMap is a defaultdict(int), so if locstr.lower() is not a key, the default int 0 is returned
        return self.localeIdMap[locstr.lower()]

    # Handle birthyear
    def getBirthYearInt(self, birthYear):
        try:
            return 0 if birthYear == 'None' else int(birthYear)
        except ValueError:
            return 0

    # Handle gender
    def getGenderId(self, genderStr):
        return self.genderIdMap[genderStr]

    # Handle joinedAt: keep only year and month (month is not zero-padded)
    def getJoinedYearMonth(self, dateString):
        dttm = datetime.datetime.strptime(dateString, "%Y-%m-%dT%H:%M:%S.%fZ")
        return "".join([str(dttm.year), str(dttm.month)])

    # Handle location: the country name follows the last double space;
    # the "+ 2" offset skips both spaces of that separator
    def getCountryId(self, location):
        if isinstance(location, str) and len(location.strip()) > 0 and location.rfind('  ') > -1:
            return self.countryIdMap[location[location.rindex('  ') + 2:].lower()]
        else:
            return 0

    # Handle timezone
    def getTimezoneInt(self, timezone):
        try:
            return int(timezone)
        except ValueError:
            return 0

    def getFeatureHash(self, value):
        if len(value.strip()) == 0:
            return -1
        else:
            # return int(hashlib.sha224(value).hexdigest()[0:4], 16) raises under Python 3:
            # TypeError: Unicode-objects must be encoded before hashing
            return int(hashlib.sha224(value.encode('utf-8')).hexdigest()[0:4], 16)  # Python 3 requires encoding first

    def getFloatValue(self, value):
        if len(value.strip()) == 0:
            return 0.0
        else:
            return float(value)
```
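A small sketch of what `getFeatureHash` does to a string field such as city or state, written as a standalone function (`feature_hash` is a hypothetical name; the logic is copied from the method above, and "Paris" is just a sample value). Taking the first 4 hex digits of SHA-224 maps every non-empty string into one of 65,536 buckets, and empty fields become -1.

```python
import hashlib

def feature_hash(value):
    """Same logic as DataCleaner.getFeatureHash: the first 4 hex digits of SHA-224,
    i.e. an integer bucket in [0, 65535]; blank strings map to -1."""
    if len(value.strip()) == 0:
        return -1
    return int(hashlib.sha224(value.encode('utf-8')).hexdigest()[0:4], 16)

for city in ["Paris", "paris", "  "]:
    print(repr(city), feature_hash(city))
```

Unlike Python's built-in `hash()` for strings, which is randomized per process, SHA-224 gives the same bucket on every run. The hash is case-sensitive, and with only 65,536 buckets, distinct values can collide.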

```python
# User-user similarity matrix
class Users:
    """
    Build the user/user similarity matrix
    """
    def __init__(self, programEntities, sim=ssd.correlation):  # ssd.correlation(u, v): correlation distance, i.e. 1 - Pearson correlation of u and v
        cleaner = DataCleaner()
        nusers = len(programEntities.userIndex.keys())  # 3391
        # print(nusers)
        fin = open('users.csv')
        colnames = fin.readline().strip().split(',')  # 7 columns
        self.userMatrix = ss.dok_matrix((nusers, len(colnames) - 1))  # sparse matrix with 6 feature columns
        for line in fin:
            cols = line.strip().split(',')
            # The original author's comment here said "only consider users that appear in train.csv",
            # but userIndex contains the users of both train.csv and test.csv, so this check
            # keeps users from both files
            if cols[0] in programEntities.userIndex:
                i = programEntities.userIndex[cols[0]]  # index of this user
                self.userMatrix[i, 0] = cleaner.getLocaleId(cols[1])  # locale
                self.userMatrix[i, 1] = cleaner.getBirthYearInt(cols[2])  # birthyear, missing values filled with 0
                self.userMatrix[i, 2] = cleaner.getGenderId(cols[3])  # gender
                self.userMatrix[i, 3] = cleaner.getJoinedYearMonth(cols[4])  # joinedAt
                self.userMatrix[i, 4] = cleaner.getCountryId(cols[5])  # location
                self.userMatrix[i, 5] = cleaner.getTimezoneInt(cols[6])  # timezone
        fin.close()
        # Normalize each column
        self.userMatrix = normalize(self.userMatrix, norm='l1', axis=0, copy=False)
        sio.mmwrite('US_userMatrix', self.userMatrix)

        # Compute the user similarity matrix (used later)
        self.userSimMatrix = ss.dok_matrix((nusers, nusers))  # (3391, 3391)
        for i in range(0, nusers):
            self.userSimMatrix[i, i] = 1.0
        for u1, u2 in programEntities.uniqueUserPairs:
            i = programEntities.userIndex[u1]
            j = programEntities.userIndex[u2]
            if (i, j) not in self.userSimMatrix:
                # row i, e.g. [[0.00028123, 0.00029847, 0.00043592, 0.00035208, 0, 0.00032346]]
                # row j, e.g. [[0.00028123, 0.00029742, 0.00043592, 0.00035208, 0, -0.00032346]]
                # scipy.spatial.distance expects 1-D vectors, so flatten the (1, n) rows
                usim = sim(self.userMatrix.getrow(i).toarray().ravel(),
                           self.userMatrix.getrow(j).toarray().ravel())
                self.userSimMatrix[i, j] = usim
                self.userSimMatrix[j, i] = usim
        sio.mmwrite('US_userSimMatrix', self.userSimMatrix)
```
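A quick check of what `ssd.correlation` actually returns, on made-up vectors: it is a correlation *distance* (1 minus the Pearson correlation, in [0, 2]), not the correlation coefficient itself, so the values stored in `userSimMatrix` are distances. The second half shows why rows taken from a sparse matrix are flattened before the call.

```python
import numpy as np
import scipy.sparse as ss
import scipy.spatial.distance as ssd

u = np.array([1.0, 2.0, 3.0, 4.0])
v = np.array([2.0, 4.0, 6.0, 8.0])  # perfectly positively correlated with u
w = np.array([4.0, 3.0, 2.0, 1.0])  # perfectly negatively correlated with u

# ssd.correlation returns 1 - Pearson correlation: a distance in [0, 2]
d_uv = ssd.correlation(u, v)
d_uw = ssd.correlation(u, w)
pearson_uw = np.corrcoef(u, w)[0, 1]
print(d_uv, d_uw, 1 - pearson_uw)  # d_uw equals 1 - pearson_uw

# Rows pulled out of a sparse matrix are 2-D with shape (1, n);
# flatten them to 1-D before passing them to scipy.spatial.distance functions
m = ss.csr_matrix(np.vstack([u, w]))
r0 = m.getrow(0).toarray().ravel()
r1 = m.getrow(1).toarray().ravel()
d_rows = ssd.correlation(r0, r1)
print(r0.shape, d_rows)
```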

```python
# Mining users' social relationships
class UserFriends:
    """
    Find each user's friends. The idea is very simple:
    1) If you have more friends, you may be outgoing and more likely to attend events.
    2) If your friends attend an event, you may follow them and attend too.
    """
    def __init__(self, programEntities):
        nusers = len(programEntities.userIndex.keys())  # 3391
        self.numFriends = np.zeros((nusers))  # array([0., 0., ..., 0.]): number of friends of each user
        self.userFriends = ss.dok_matrix((nusers, nusers))
        fin = gzip.open('user_friends.csv.gz')
        print('Header In User_friends.csv.gz:', fin.readline())
        ln = 0
        # Read user_friends.csv.gz line by line.
        # Check whether the user in the first column is in userIndex; only those users matter to us.
        # Get that user's index and number of friends.
        # For each friend who is also in userIndex, get the friend's index and look up
        # the friend's response to each event in userEventScores.
        # score is the friend's average score over all events.
        # The userFriends matrix records the score between a user and each friend.
        # E.g. user 851286067 (index 1750) appears in test.csv and has 2151 friends in user_friends.csv.gz,
        # so its share of friends is 2151 / sumNumFriends (3731377.0) = 0.0005764627910822198
        for line in fin:
            if ln % 200 == 0:
                print('Loading line:', ln)
            cols = line.decode().strip().split(',')
            user = cols[0]
            if user in programEntities.userIndex:
                friends = cols[1].split(' ')  # this user's friend list
                i = programEntities.userIndex[user]
                self.numFriends[i] = len(friends)
                for friend in friends:
                    if friend in programEntities.userIndex:
                        j = programEntities.userIndex[friend]
                        # the objective of this score is to infer the degree to
                        # and direction in which this friend will influence the
                        # user's decision, so we sum the user/event score for
                        # this user across all training events
                        eventsForUser = programEntities.userEventScores.getrow(j).todense()  # the friend's response to each event: 0, 1, or -1
                        # print(eventsForUser.sum(), np.shape(eventsForUser)[1])
                        # score is the friend's average over all 13418 events
                        score = eventsForUser.sum() / np.shape(eventsForUser)[1]  # np.shape(eventsForUser)[1] == 13418
                        # print(score)
                        self.userFriends[i, j] += score
                        self.userFriends[j, i] += score
            ln += 1
        fin.close()
        # Normalize the array
        sumNumFriends = self.numFriends.sum(axis=0)  # total number of friends over all users
        # print(sumNumFriends)
        self.numFriends = self.numFriends / sumNumFriends  # each user's share of the total friend count
        sio.mmwrite('UF_numFriends', np.matrix(self.numFriends))
        self.userFriends = normalize(self.userFriends, norm='l1', axis=0, copy=False)
        sio.mmwrite('UF_userFriends', self.userFriends)
```
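A toy sketch of the two quantities `UserFriends` computes, using a hypothetical 3-user by 4-event score matrix and made-up friend counts: a friend's score is the sum of that friend's event scores divided by the *total* number of events, and `numFriends` becomes each user's share of all friends. `friend_score` is an illustrative helper, not part of the original code.

```python
import numpy as np
import scipy.sparse as ss

# Hypothetical user-event scores (3 users x 4 events), values in {-1, 0, 1},
# standing in for PE_userEventScores (interested - not_interested)
scores = ss.dok_matrix((3, 4))
scores[1, 0] = 1
scores[1, 2] = 1
scores[2, 3] = -1
scores = scores.tocsr()

def friend_score(j):
    """Average of user j's scores over all events, as computed in UserFriends."""
    row = scores.getrow(j).toarray()
    return row.sum() / row.shape[1]

print(friend_score(1))  # (1 + 1) / 4
print(friend_score(2))  # -1 / 4

# Each user's share of the total friend count, as in numFriends / sumNumFriends
numFriends = np.array([2151.0, 849.0, 1000.0])  # hypothetical friend counts
shares = numFriends / numFriends.sum()
print(shares)
```

Because the denominator is the total number of events (13418 in the real data) rather than the number of events the friend responded to, real friend scores are very small before the column normalization.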

```python
# Build event-event similarity data
class Events:
    """
    Build event-event similarity. Note that there are two kinds of similarity:
    1) eventPropSim: computed from event attributes (start time, city, state, zip,
       country, lat, lon), only for event pairs that share a user (uniqueEventPairs),
       similar in spirit to collaborative filtering
    2) eventContSim: computed from the event content itself
       (the 100 content columns, columns 9-108 of events.csv)
    psim and csim return distances (1 - correlation, 1 - cosine similarity).
    """
    def __init__(self, programEntities, psim=ssd.correlation, csim=ssd.cosine):
        cleaner = DataCleaner()
        fin = gzip.open('events.csv.gz')
        fin.readline()  # skip header
        nevents = len(programEntities.eventIndex)
        print(nevents)  # 13418
        self.eventPropMatrix = ss.dok_matrix((nevents, 7))
        self.eventContMatrix = ss.dok_matrix((nevents, 100))
        ln = 0
        for line in fin:
            # if ln > 10:
            #     break
            cols = line.decode().strip().split(',')
            eventId = cols[0]
            if eventId in programEntities.eventIndex:
                i = programEntities.eventIndex[eventId]
                self.eventPropMatrix[i, 0] = cleaner.getJoinedYearMonth(cols[2])  # start_time
                self.eventPropMatrix[i, 1] = cleaner.getFeatureHash(cols[3])  # city
                self.eventPropMatrix[i, 2] = cleaner.getFeatureHash(cols[4])  # state
                self.eventPropMatrix[i, 3] = cleaner.getFeatureHash(cols[5])  # zip
                self.eventPropMatrix[i, 4] = cleaner.getFeatureHash(cols[6])  # country
                self.eventPropMatrix[i, 5] = cleaner.getFloatValue(cols[7])  # lat
                self.eventPropMatrix[i, 6] = cleaner.getFloatValue(cols[8])  # lon
                for j in range(9, 109):
                    self.eventContMatrix[i, j - 9] = cols[j]
            ln += 1
        fin.close()
        self.eventPropMatrix = normalize(self.eventPropMatrix, norm='l1', axis=0, copy=False)
        sio.mmwrite('EV_eventPropMatrix', self.eventPropMatrix)
        self.eventContMatrix = normalize(self.eventContMatrix, norm='l1', axis=0, copy=False)
        sio.mmwrite('EV_eventContMatrix', self.eventContMatrix)

        # calculate similarity between event pairs based on the two matrices
        self.eventPropSim = ss.dok_matrix((nevents, nevents))
        self.eventContSim = ss.dok_matrix((nevents, nevents))
        for e1, e2 in programEntities.uniqueEventPairs:
            i = programEntities.eventIndex[e1]
            j = programEntities.eventIndex[e2]
            if not ((i, j) in self.eventPropSim):
                epsim = psim(self.eventPropMatrix.getrow(i).toarray().ravel(),
                             self.eventPropMatrix.getrow(j).toarray().ravel())
                if np.isnan(epsim):
                    epsim = 0
                self.eventPropSim[i, j] = epsim
                self.eventPropSim[j, i] = epsim
            if not ((i, j) in self.eventContSim):
                # If either vector is all zeros, the cosine distance is nan, e.g.
                #   a = np.array([0, 1, 1, 1, 0, 0, 0, 1, 0, 0])
                #   b = np.array([0, 0, 0, 0, 0, 0, 0, 0, 0, 0])
                #   scipy.spatial.distance.cosine(a, b)
                # emits (at dist = 1.0 - uv / np.sqrt(uu * vv) in scipy/spatial/distance.py):
                #   RuntimeWarning: invalid value encountered in double_scalars
                ecsim = csim(self.eventContMatrix.getrow(i).toarray().ravel(),
                             self.eventContMatrix.getrow(j).toarray().ravel())
                if np.isnan(ecsim):
                    ecsim = 0
                self.eventContSim[i, j] = ecsim
                self.eventContSim[j, i] = ecsim
        sio.mmwrite('EV_eventPropSim', self.eventPropSim)
        sio.mmwrite('EV_eventContSim', self.eventContSim)


class EventAttendees:
    """
    Count how many people attend and do not attend each event,
    in preparation for the event popularity feature
    """
    def __init__(self, programEntities):
        nevents = len(programEntities.eventIndex)  # 13418
        self.eventPopularity = ss.dok_matrix((nevents, 1))
        f = gzip.open('event_attendees.csv.gz')
        f.readline()  # skip header
        for line in f:
            cols = line.decode().strip().split(',')
            eventId = cols[0]
            if eventId in programEntities.eventIndex:
                i = programEntities.eventIndex[eventId]
                # number of "yes" minus number of "no" (column 1 = yes, column 4 = no),
                # i.e. attendees minus non-attendees
                self.eventPopularity[i, 0] = len(cols[1].split(' ')) - len(cols[4].split(' '))
        f.close()
        self.eventPopularity = normalize(self.eventPopularity, norm='l1', axis=0, copy=False)
        sio.mmwrite('EA_eventPopularity', self.eventPopularity)


def data_prepare():
    """
    Compute and generate all data, stored as matrices or in other forms,
    to ease later feature extraction and modeling
    """
    print('Step 1: collecting user and event information...')
    pe = ProgramEntities()
    print('Step 1 done...\n')
    print('Step 2: computing user similarity and storing it as a matrix...')
    Users(pe)
    print('Step 2 done...\n')
    print('Step 3: computing user social-relationship information and storing it...')
    UserFriends(pe)
    print('Step 3 done...\n')
    print('Step 4: computing event similarity and storing it as a matrix...')
    Events(pe)
    print('Step 4 done...\n')
    print('Step 5: computing event popularity...')
    EventAttendees(pe)
    print('Step 5 done...\n')


# Run the data preparation
data_prepare()
```
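The `np.isnan(...)` guards in `Events` exist because a cosine distance involving an all-zero vector is undefined. A minimal standalone version of that guard, assuming the same replace-nan-with-0 policy as the original (`safe_cosine` is a hypothetical name), reusing the vectors from the code comment:

```python
import numpy as np
import scipy.spatial.distance as ssd

def safe_cosine(a, b):
    """Cosine distance that returns 0 instead of nan when a vector is all zeros,
    mirroring the np.isnan(...) guard in the Events class."""
    with np.errstate(invalid='ignore', divide='ignore'):
        d = ssd.cosine(a, b)
    return 0.0 if np.isnan(d) else float(d)

a = np.array([0, 1, 1, 1, 0, 0, 0, 1, 0, 0], dtype=float)
b = np.zeros(10)
print(safe_cosine(a, b))  # nan from SciPy replaced by 0.0
print(safe_cosine(a, a))  # identical vectors: distance 0
```

Note that 0 is also the distance between two identical vectors, so after this replacement later code cannot tell "undefined" apart from "identical".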

This completes the data preprocessing and saving.

Next, we look at feature construction: Event Recommendation Engine Challenge Step-by-Step Analysis: Step 6
