Sending Filebeat output to multiple Elasticsearch indices

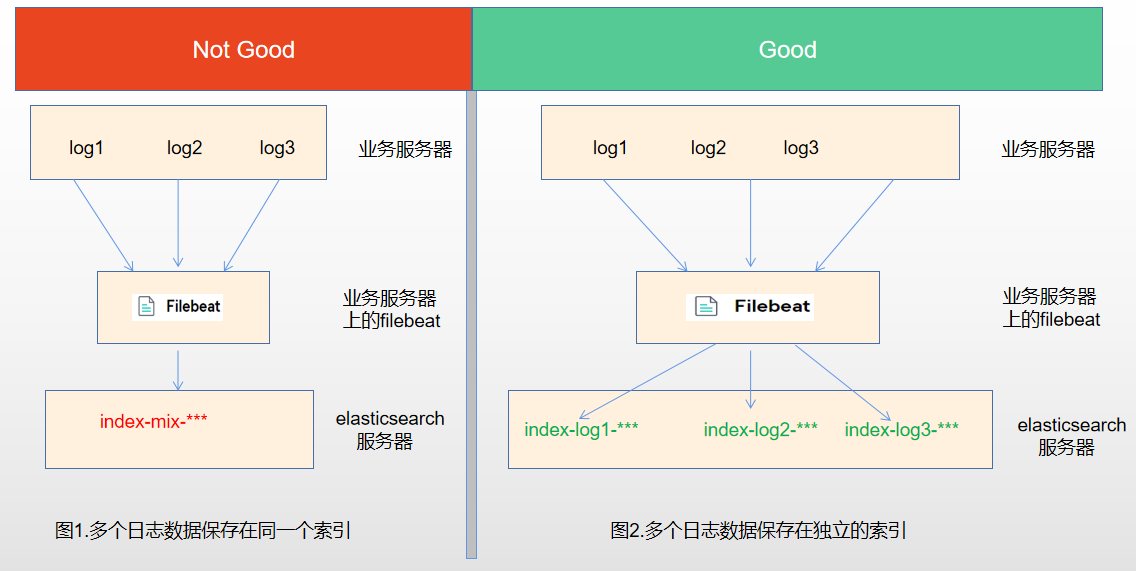

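- Collecting all logs from one server into a single Elasticsearch index has a significant drawback: if several services run on that server and each writes its own log, all of those logs end up mixed together in the same index, as shown in Figure 1.

- Collecting the logs from one server into separate Elasticsearch indices keeps each service's logs in its own index, as shown in Figure 2.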

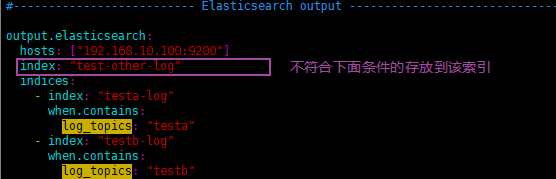

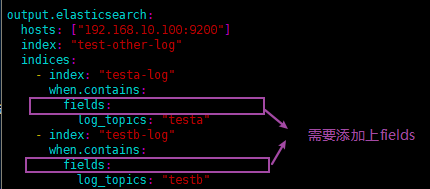

- Data from testa.log is stored in the testa-log index

- Data from testb.log is stored in the testb-log index

- Data from every other log (neither testa.log nor testb.log) is stored in the test-other-log index
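The three rules above come down to a handful of settings. Below is a minimal sketch of just those settings, not a complete filebeat.yml. It assumes the custom field is named `log_topics` and is promoted to the top level of the event with `fields_under_root: true`. The paths, host address, template and ILM settings are copied from the full file that follows.

```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /root/test/testa.log
    fields:
      log_topics: "testa"       # custom tag used for routing
    fields_under_root: true     # place log_topics at the top level of the event
  - type: log
    enabled: true
    paths:
      - /root/test/testb.log
    fields:
      log_topics: "testb"
    fields_under_root: true
  - type: log
    enabled: true
    paths:
      - /root/test/testc.log
    fields:
      log_topics: "testc"       # matches no rule below, so it goes to the fallback index
    fields_under_root: true

# Settings kept from the full file
setup.template.name: "prod-file*"
setup.template.pattern: "prod-file*"
setup.ilm.enabled: false

output.elasticsearch:
  hosts: ["192.168.10.100:9200"]
  index: "test-other-log"       # used when none of the indices rules match
  indices:
    - index: "testa-log"
      when.contains:
        log_topics: "testa"
    - index: "testb-log"
      when.contains:
        log_topics: "testb"
```

Events from testc.log carry `log_topics: "testc"`, which matches neither rule, so they fall through to the `index` setting.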

    ###################### Filebeat Configuration Example #########################

    # This file is an example configuration file highlighting only the most common
    # options. The filebeat.reference.yml file from the same directory contains all the
    # supported options with more comments. You can use it as a reference.
    #
    # You can find the full configuration reference here:
    # https://www.elastic.co/guide/en/beats/filebeat/index.html

    # For more available modules and options, please see the filebeat.reference.yml sample
    # configuration file.

    #=========================== Filebeat inputs =============================

    filebeat.inputs:

    # Each - is an input. Most options can be set at the input level, so
    # you can use different inputs for various configurations.

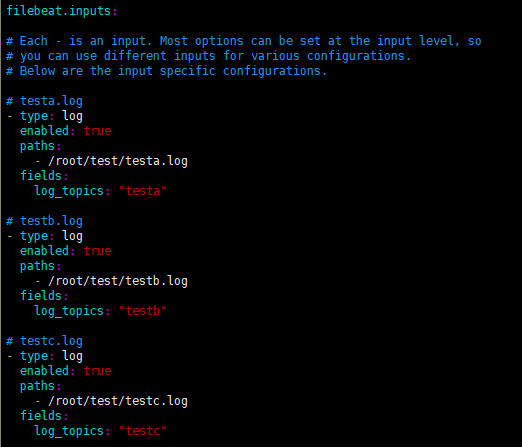

    # Below are the input specific configurations.

    # testa.log
    - type: log
      enabled: true
      paths:
        - /root/test/testa.log
      fields:
        log_topics: "testa"
      fields_under_root: true

    # testb.log
    - type: log
      enabled: true
      paths:
        - /root/test/testb.log
      fields:
        log_topics: "testb"
      fields_under_root: true

    # testc.log
    - type: log
      enabled: true
      paths:
        - /root/test/testc.log
      fields:
        log_topics: "testc"
      fields_under_root: true

      # Exclude lines. A list of regular expressions to match. It drops the lines that are
      # matching any regular expression from the list.
      #exclude_lines: ['^DBG']

      # Include lines. A list of regular expressions to match. It exports the lines that are
      # matching any regular expression from the list.
      #include_lines: ['^ERR', '^WARN']

      # Exclude files. A list of regular expressions to match. Filebeat drops the files that
      # are matching any regular expression from the list. By default, no files are dropped.
      #exclude_files: ['.gz$']

      # Optional additional fields. These fields can be freely picked
      # to add additional information to the crawled log files for filtering
      #fields:
      #  level: debug
      #  review: 1

      ### Multiline options

      # Multiline can be used for log messages spanning multiple lines. This is common
      # for Java Stack Traces or C-Line Continuation

      # The regexp Pattern that has to be matched. The example pattern matches all lines starting with [
      #multiline.pattern: ^\[

      # Defines if the pattern set under pattern should be negated or not. Default is false.
      #multiline.negate: false

      # Match can be set to "after" or "before". It is used to define if lines should be append to a pattern
      # that was (not) matched before or after or as long as a pattern is not matched based on negate.
      # Note: After is the equivalent to previous and before is the equivalent to to next in Logstash
      #multiline.match: after

    #============================= Filebeat modules ===============================

    filebeat.config.modules:
      # Glob pattern for configuration loading
      path: ${path.config}/modules.d/*.yml

      # Set to true to enable config reloading
      reload.enabled: true

      # Period on which files under path should be checked for changes
      #reload.period: 10s

    #==================== Elasticsearch template setting ==========================

    setup.template.settings:
      index.number_of_shards: 1
      #index.codec: best_compression
      #_source.enabled: false

    setup.template.name: "prod-file*"
    setup.template.pattern: "prod-file*"
    setup.ilm.enabled: false

    #================================ General =====================================

    # The name of the shipper that publishes the network data. It can be used to group
    # all the transactions sent by a single shipper in the web interface.
    #name:

    # The tags of the shipper are included in their own field with each
    # transaction published.
    #tags: ["service-X", "web-tier"]

    # Optional fields that you can specify to add additional information to the
    # output.
    #fields:
    #  env: staging

    #============================== Dashboards =====================================
    # These settings control loading the sample dashboards to the Kibana index. Loading
    # the dashboards is disabled by default and can be enabled either by setting the
    # options here or by using the `setup` command.
    #setup.dashboards.enabled: false

    # The URL from where to download the dashboards archive. By default this URL
    # has a value which is computed based on the Beat name and version. For released
    # versions, this URL points to the dashboard archive on the artifacts.elastic.co
    # website.
    #setup.dashboards.url:

    #============================== Kibana =====================================

    # Starting with Beats version 6.0.0, the dashboards are loaded via the Kibana API.
    # This requires a Kibana endpoint configuration.
    setup.kibana:

      # Kibana Host
      # Scheme and port can be left out and will be set to the default (http and 5601)
      # In case you specify and additional path, the scheme is required: http://localhost:5601/path
      # IPv6 addresses should always be defined as: https://[2001:db8::1]:5601
      #host: "localhost:5601"

      # Kibana Space ID
      # ID of the Kibana Space into which the dashboards should be loaded. By default,
      # the Default Space will be used.
      #space.id:

    #============================= Elastic Cloud ==================================

    # These settings simplify using Filebeat with the Elastic Cloud (https://cloud.elastic.co/).

    # The cloud.id setting overwrites the `output.elasticsearch.hosts` and
    # `setup.kibana.host` options.
    # You can find the `cloud.id` in the Elastic Cloud web UI.
    #cloud.id:

    # The cloud.auth setting overwrites the `output.elasticsearch.username` and
    # `output.elasticsearch.password` settings. The format is `<user>:<pass>`.
    #cloud.auth:

    #================================ Outputs =====================================

    # Configure what output to use when sending the data collected by the beat.

    #-------------------------- Elasticsearch output ------------------------------
    #output.elasticsearch:
    #  hosts: ["192.168.10.30:9200"]
    #  index: "testlog-666"

    #output.elasticsearch:
    #  hosts: ["192.168.10.30:9200"]
    #  indices:
    #    - index: "testa-log"
    #      when.contains:
    #        log_topics: "testa"
    #    - index: "testb-log"
    #      when.contains:
    #        log_topics: "testb"

    output.elasticsearch:
      hosts: ["192.168.10.100:9200"]
      index: "test-other-log"
      indices:
        - index: "testa-log"
          when.contains:
            log_topics: "testa"
        - index: "testb-log"
          when.contains:
            log_topics: "testb"

    #----------------------------- Logstash output --------------------------------
    #output.logstash:
      # The Logstash hosts
      #hosts: ["localhost:5044"]

      # Optional SSL. By default is off.
      # List of root certificates for HTTPS server verifications
      #ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]

      # Certificate for SSL client authentication
      #ssl.certificate: "/etc/pki/client/cert.pem"

      # Client Certificate Key
      #ssl.key: "/etc/pki/client/cert.key"

    #================================ Processors =====================================

    # Configure processors to enhance or manipulate events generated by the beat.

    #================================ Logging =====================================

    # Sets log level. The default log level is info.
    # Available log levels are: error, warning, info, debug
    #logging.level: debug

    # At debug level, you can selectively enable logging only for some components.
    # To enable all selectors use ["*"]. Examples of other selectors are "beat",
    # "publish", "service".
    #logging.selectors: ["*"]

    #============================== Xpack Monitoring ===============================
    # filebeat can export internal metrics to a central Elasticsearch monitoring
    # cluster. This requires xpack monitoring to be enabled in Elasticsearch. The
    # reporting is disabled by default.

    # Set to true to enable the monitoring reporter.
    #monitoring.enabled: false

    # Uncomment to send the metrics to Elasticsearch. Most settings from the
    # Elasticsearch output are accepted here as well.
    # Note that the settings should point to your Elasticsearch *monitoring* cluster.
    # Any setting that is not set is automatically inherited from the Elasticsearch
    # output configuration, so if you have the Elasticsearch output configured such
    # that it is pointing to your Elasticsearch monitoring cluster, you can simply
    # uncomment the following line.
    #monitoring.elasticsearch:

    #================================= Migration ==================================

    # This allows to enable 6.7 migration aliases
    #migration.6_to_7.enabled: true

The second configuration is the same file without `fields_under_root`. The custom field then stays nested under `fields`, so the routing conditions address it there:

    ###################### Filebeat Configuration Example #########################

    # This file is an example configuration file highlighting only the most common
    # options. The filebeat.reference.yml file from the same directory contains all the
    # supported options with more comments. You can use it as a reference.
    #
    # You can find the full configuration reference here:
    # https://www.elastic.co/guide/en/beats/filebeat/index.html

    # For more available modules and options, please see the filebeat.reference.yml sample
    # configuration file.

    #=========================== Filebeat inputs =============================

    filebeat.inputs:

    # Each - is an input. Most options can be set at the input level, so
    # you can use different inputs for various configurations.
    # Below are the input specific configurations.

    # testa.log
    - type: log
      enabled: true
      paths:
        - /root/test/testa.log
      fields:
        log_topics: "testa"

    # testb.log
    - type: log
      enabled: true
      paths:
        - /root/test/testb.log
      fields:
        log_topics: "testb"

    # testc.log
    - type: log
      enabled: true
      paths:
        - /root/test/testc.log
      fields:
        log_topics: "testc"

      # Exclude lines. A list of regular expressions to match. It drops the lines that are
      # matching any regular expression from the list.
      #exclude_lines: ['^DBG']

      # Include lines. A list of regular expressions to match. It exports the lines that are
      # matching any regular expression from the list.
      #include_lines: ['^ERR', '^WARN']

      # Exclude files. A list of regular expressions to match. Filebeat drops the files that
      # are matching any regular expression from the list. By default, no files are dropped.
      #exclude_files: ['.gz$']

      # Optional additional fields. These fields can be freely picked
      # to add additional information to the crawled log files for filtering
      #fields:
      #  level: debug
      #  review: 1

      ### Multiline options

      # Multiline can be used for log messages spanning multiple lines. This is common
      # for Java Stack Traces or C-Line Continuation

      # The regexp Pattern that has to be matched. The example pattern matches all lines starting with [
      #multiline.pattern: ^\[

      # Defines if the pattern set under pattern should be negated or not. Default is false.
      #multiline.negate: false

      # Match can be set to "after" or "before". It is used to define if lines should be append to a pattern
      # that was (not) matched before or after or as long as a pattern is not matched based on negate.
      # Note: After is the equivalent to previous and before is the equivalent to to next in Logstash
      #multiline.match: after

    #============================= Filebeat modules ===============================

    filebeat.config.modules:
      # Glob pattern for configuration loading
      path: ${path.config}/modules.d/*.yml

      # Set to true to enable config reloading
      reload.enabled: true

      # Period on which files under path should be checked for changes
      #reload.period: 10s

    #==================== Elasticsearch template setting ==========================

    setup.template.settings:
      index.number_of_shards: 1
      #index.codec: best_compression
      #_source.enabled: false

    setup.template.name: "prod-file*"
    setup.template.pattern: "prod-file*"
    setup.ilm.enabled: false

    #================================ General =====================================

    # The name of the shipper that publishes the network data. It can be used to group
    # all the transactions sent by a single shipper in the web interface.
    #name:

    # The tags of the shipper are included in their own field with each
    # transaction published.
    #tags: ["service-X", "web-tier"]

    # Optional fields that you can specify to add additional information to the
    # output.
    #fields:
    #  env: staging

    #============================== Dashboards =====================================
    # These settings control loading the sample dashboards to the Kibana index. Loading
    # the dashboards is disabled by default and can be enabled either by setting the
    # options here or by using the `setup` command.
    #setup.dashboards.enabled: false

    # The URL from where to download the dashboards archive. By default this URL
    # has a value which is computed based on the Beat name and version. For released
    # versions, this URL points to the dashboard archive on the artifacts.elastic.co
    # website.
    #setup.dashboards.url:

    #============================== Kibana =====================================

    # Starting with Beats version 6.0.0, the dashboards are loaded via the Kibana API.
    # This requires a Kibana endpoint configuration.
    setup.kibana:

      # Kibana Host
      # Scheme and port can be left out and will be set to the default (http and 5601)
      # In case you specify and additional path, the scheme is required: http://localhost:5601/path
      # IPv6 addresses should always be defined as: https://[2001:db8::1]:5601
      #host: "localhost:5601"

      # Kibana Space ID
      # ID of the Kibana Space into which the dashboards should be loaded. By default,
      # the Default Space will be used.
      #space.id:

    #============================= Elastic Cloud ==================================

    # These settings simplify using Filebeat with the Elastic Cloud (https://cloud.elastic.co/).

    # The cloud.id setting overwrites the `output.elasticsearch.hosts` and
    # `setup.kibana.host` options.
    # You can find the `cloud.id` in the Elastic Cloud web UI.
    #cloud.id:

    # The cloud.auth setting overwrites the `output.elasticsearch.username` and
    # `output.elasticsearch.password` settings. The format is `<user>:<pass>`.
    #cloud.auth:

    #================================ Outputs =====================================

    # Configure what output to use when sending the data collected by the beat.

    #-------------------------- Elasticsearch output ------------------------------
    #output.elasticsearch:
    #  hosts: ["192.168.10.30:9200"]
    #  index: "testlog-666"

    #output.elasticsearch:
    #  hosts: ["192.168.10.30:9200"]
    #  indices:
    #    - index: "testa-log"
    #      when.contains:
    #        log_topics: "testa"
    #    - index: "testb-log"
    #      when.contains:
    #        log_topics: "testb"

    output.elasticsearch:
      hosts: ["192.168.10.100:9200"]
      index: "test-other-log"
      indices:
        - index: "testa-log"
          when.contains:
            fields:
              log_topics: "testa"
        - index: "testb-log"
          when.contains:
            fields:
              log_topics: "testb"

    #----------------------------- Logstash output --------------------------------
    #output.logstash:
      # The Logstash hosts
      #hosts: ["localhost:5044"]

      # Optional SSL. By default is off.
      # List of root certificates for HTTPS server verifications
      #ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]

      # Certificate for SSL client authentication
      #ssl.certificate: "/etc/pki/client/cert.pem"

      # Client Certificate Key
      #ssl.key: "/etc/pki/client/cert.key"

    #================================ Processors =====================================

    # Configure processors to enhance or manipulate events generated by the beat.

    #================================ Logging =====================================

    # Sets log level. The default log level is info.
    # Available log levels are: error, warning, info, debug
    #logging.level: debug

    # At debug level, you can selectively enable logging only for some components.
    # To enable all selectors use ["*"]. Examples of other selectors are "beat",
    # "publish", "service".
    #logging.selectors: ["*"]

    #============================== Xpack Monitoring ===============================
    # filebeat can export internal metrics to a central Elasticsearch monitoring
    # cluster. This requires xpack monitoring to be enabled in Elasticsearch. The
    # reporting is disabled by default.

    # Set to true to enable the monitoring reporter.
    #monitoring.enabled: false

    # Uncomment to send the metrics to Elasticsearch. Most settings from the
    # Elasticsearch output are accepted here as well.
    # Note that the settings should point to your Elasticsearch *monitoring* cluster.
    # Any setting that is not set is automatically inherited from the Elasticsearch
    # output configuration, so if you have the Elasticsearch output configured such
    # that it is pointing to your Elasticsearch monitoring cluster, you can simply
    # uncomment the following line.
    #monitoring.elasticsearch:

    #================================= Migration ==================================

    # This allows to enable 6.7 migration aliases
    #migration.6_to_7.enabled: true

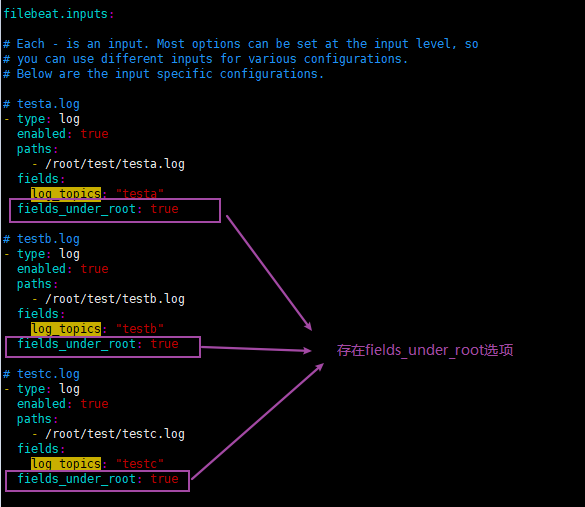

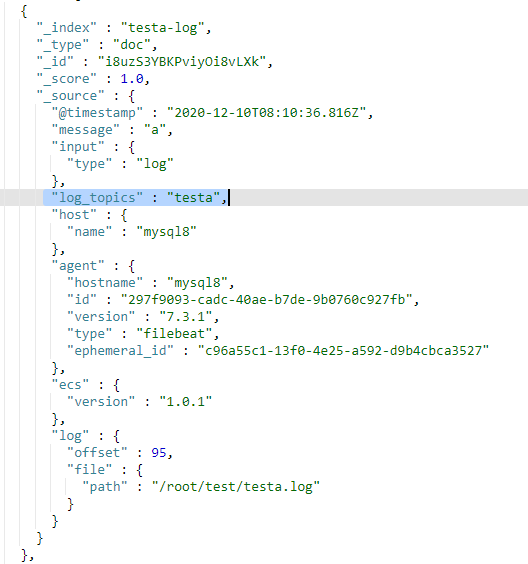

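- If `fields_under_root` is true, the custom fields are stored at the top level of the output document. If a custom field has the same name as a field that Filebeat adds itself, the custom field overwrites it.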

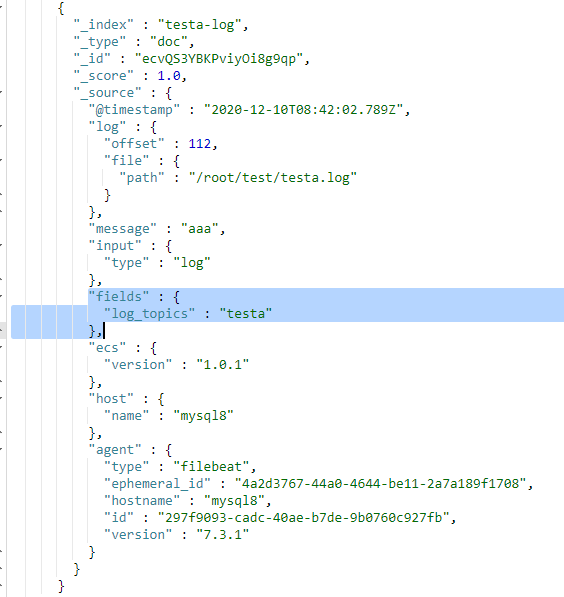

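- If `fields_under_root` is false or not set, the custom fields are stored one level down, under a `fields` sub-dictionary of the output document.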
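Where the custom field sits in the event decides how a routing condition has to name it. The sketch below shows both spellings side by side, using the `log_topics` field and host from the configurations above. The dotted key `fields.log_topics` is assumed to be equivalent to the nested `fields:` / `log_topics:` form that the original file uses. Only one of the two rules is needed in practice.

```yaml
output.elasticsearch:
  hosts: ["192.168.10.100:9200"]
  index: "test-other-log"
  indices:
    # Variant A: the input sets fields_under_root: true,
    # so log_topics is a top-level field of the event.
    - index: "testa-log"
      when.contains:
        log_topics: "testa"
    # Variant B: fields_under_root is false or unset,
    # so the field is reached through the fields sub-dictionary.
    - index: "testa-log"
      when.contains:
        fields.log_topics: "testa"
```

A condition that names the wrong location does not match, and the event then goes to the fallback `index` (here `test-other-log`).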
