Pedestrian detection with HOG features on a Raspberry Pi with OpenCV

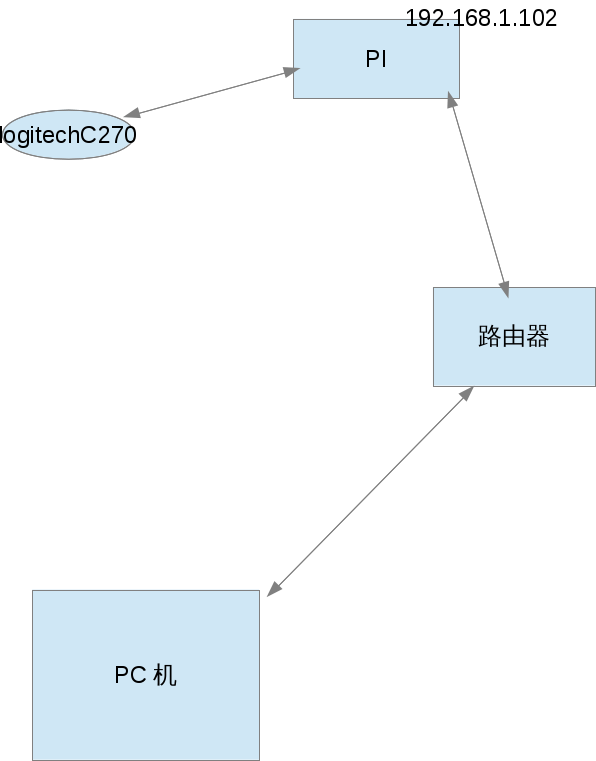

For remote control of the Raspberry Pi camera, see my earlier post: http://www.cnblogs.com/yuliyang/p/3561209.html

References: http://answers.opencv.org/question/133/how-do-i-access-an-ip-camera/

http://blog.youtueye.com/work/opencv-hog-peopledetector-trainning.html

Environment: OpenCV 2.4.x (OpenCV has no 2.8 release), Debian, Qt

Code:

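Run it with the path to the model file as the only argument:

```
yuliyang@debian-yuliyang:~/build-peopledetect-桌面-Debug$ ./peopledetect /home/yuliyang/OLTbinaries/INRIAPerson/HOG/model_4BiSVMLight.alt
```

`model_4BiSVMLight.alt` is the model file in the HOG folder of the INRIAPerson pedestrian detection package. The program reads it as a linear SVM and passes the weights to `HOGDescriptor::setSVMDetector`.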
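Why the model file has to match the detector: with OpenCV's default `HOGDescriptor` parameters (64x128 window, 16x16 blocks, 8x8 block stride, 8x8 cells, 9 bins) each window yields a 3780-element descriptor, and OpenCV's check accepts a detector vector of that length, optionally plus one bias element. The OpenCV-free sketch below computes that length and evaluates a linear score the way the loaded vector is used (dot product with the weights, plus the last element), which is why the loader appends `-linearbias`. The function names `hog_descriptor_size` and `linear_score` are hypothetical, and the sketch assumes the window, block, stride and cell sizes divide evenly.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Length of the HOG descriptor of one detection window (sizes in pixels).
// Defaults mirror OpenCV's default HOGDescriptor parameters.
std::size_t hog_descriptor_size(int win_w = 64, int win_h = 128,
                                int block_w = 16, int block_h = 16,
                                int stride_w = 8, int stride_h = 8,
                                int cell_w = 8, int cell_h = 8,
                                int nbins = 9)
{
    assert(win_w >= block_w && win_h >= block_h);
    int blocks_x = (win_w - block_w) / stride_w + 1;               // 7 with the defaults
    int blocks_y = (win_h - block_h) / stride_h + 1;               // 15 with the defaults
    int cells_per_block = (block_w / cell_w) * (block_h / cell_h); // 4 with the defaults
    return static_cast<std::size_t>(blocks_x) * blocks_y * cells_per_block * nbins;
}

// Linear SVM score of one window: w . x + b, where `detector` holds the
// weights followed by a single bias term (the loader stores -linearbias there).
// A window counts as a detection when the score reaches hitThreshold.
double linear_score(const std::vector<float>& detector, const std::vector<float>& x)
{
    assert(detector.size() == x.size() + 1);
    double s = detector.back();
    for (std::size_t i = 0; i < x.size(); ++i)
        s += static_cast<double>(detector[i]) * x[i];
    return s;
}
```

With the defaults, `hog_descriptor_size()` gives 7 x 15 blocks x 4 cells x 9 bins = 3780, so a correctly sized model vector has 3781 entries.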

```cpp
#include <stdio.h>
#include <string.h>
#include <iostream>
#include <vector>

#include "opencv2/imgproc/imgproc.hpp"
#include "opencv2/objdetect/objdetect.hpp"
#include "opencv2/highgui/highgui.hpp"

using namespace std;
using namespace cv;

// Load a linear SVM from a binary SVMLight model file (e.g. model_4BiSVMLight.alt)
// and return it in the layout HOGDescriptor::setSVMDetector() expects:
// the feature weights followed by one bias term (-linearbias).
// Several header fields are stored as C `long`; this assumes sizeof(long) on the
// running machine matches the machine that wrote the file (4 bytes on 32-bit ARM
// Linux such as the Pi, 8 bytes on 64-bit Linux).
vector<float> load_lear_model(const char* model_file)
{
    vector<float> detector;
    FILE* modelfl = fopen(model_file, "rb");
    if (modelfl == NULL)
    {
        cout << "Unable to open the modelfile" << endl;
        return detector;
    }

    // 10-byte version string; the extra zero byte keeps strcmp() in bounds
    char version_buffer[11] = {0};
    if (!fread(version_buffer, sizeof(char), 10, modelfl))
    {
        cout << "Unable to read version" << endl;
        fclose(modelfl);
        return detector;
    }
    if (strcmp(version_buffer, "V6.01"))
    {
        cout << "Version of model-file does not match version of svm_classify!" << endl;
        fclose(modelfl);
        return detector;
    }

    // read version number
    int version = 0;
    if (!fread(&version, sizeof(int), 1, modelfl))
    {
        cout << "Unable to read version number" << endl;
        fclose(modelfl);
        return detector;
    }
    if (version < 200)
    {
        cout << "Does not support model file compiled for light version" << endl;
        fclose(modelfl);
        return detector;
    }

    long kernel_type;
    fread(&kernel_type, sizeof(long), 1, modelfl);
    { // kernel parameters: read and ignored
        long poly_degree;
        fread(&poly_degree, sizeof(long), 1, modelfl);
        double rbf_gamma;
        fread(&rbf_gamma, sizeof(double), 1, modelfl);
        double coef_lin;
        fread(&coef_lin, sizeof(double), 1, modelfl);
        double coef_const;
        fread(&coef_const, sizeof(double), 1, modelfl);
        long l;                         // length of the custom-kernel string
        fread(&l, sizeof(long), 1, modelfl);
        fseek(modelfl, l, SEEK_CUR);    // skip the string itself
    }

    long totwords;                      // number of features (weights)
    fread(&totwords, sizeof(long), 1, modelfl);
    { // document and support-vector counts: read and ignored
        long totdoc;
        fread(&totdoc, sizeof(long), 1, modelfl);
        long sv_num;
        fread(&sv_num, sizeof(long), 1, modelfl);
    }

    double linearbias = 0.0;
    fread(&linearbias, sizeof(double), 1, modelfl);

    if (kernel_type == 0) // linear kernel: the file also stores the weight vector
    {
        vector<double> linearwt(totwords + 1);
        fread(&linearwt[0], sizeof(double), totwords + 1, modelfl);
        for (long i = 0; i < totwords; i++)
            detector.push_back((float)linearwt[i]);
        detector.push_back((float)-linearbias);
    }
    else
    {
        cout << "Only supports linear SVM model files" << endl;
    }

    fclose(modelfl);
    return detector;
}

int main(int argc, char** argv)
{
    if (argc < 2)
    {
        printf("Usage: %s <linear SVMLight model file>\n", argv[0]);
        return -1;
    }

    // MJPEG stream from the Pi; to view it in a browser, open
    // http://192.168.1.102:8001/?action=stream
    // The "dummy=param.mjpg" suffix is the trick from the answers.opencv.org
    // thread cited above, so that the URL is opened as an MJPEG stream.
    VideoCapture cap;
    cap.open("http://192.168.1.102:8001/?action=stream?dummy=param.mjpg");
    if (!cap.isOpened())
        return -1;
    // may have no effect on an HTTP stream
    cap.set(CV_CAP_PROP_FRAME_WIDTH, 320);
    cap.set(CV_CAP_PROP_FRAME_HEIGHT, 240);

    HOGDescriptor hog;
    // built-in alternative: hog.setSVMDetector(HOGDescriptor::getDefaultPeopleDetector());
    vector<float> detector = load_lear_model(argv[1]);
    if (detector.empty())
        return -1;
    hog.setSVMDetector(detector);

    namedWindow("people detector", 1);
    Mat img;
    while (true)
    {
        cap >> img;
        if (img.empty())
            continue;

        // hitThreshold 0, winStride 4x4, padding 0x0, scale step 1.05, groupThreshold 2.
        // Lower hitThreshold/groupThreshold for a higher hit rate (and more false alarms).
        vector<Rect> found, found_filtered;
        hog.detectMultiScale(img, found, 0, Size(4,4), Size(0,0), 1.05, 2);

        // drop detections that lie entirely inside another detection
        size_t i, j;
        for (i = 0; i < found.size(); i++)
        {
            Rect r = found[i];
            for (j = 0; j < found.size(); j++)
                if (j != i && (r & found[j]) == r)
                    break;
            if (j == found.size())
                found_filtered.push_back(r);
        }

        // HOG boxes are slightly larger than the person, so shrink them for display
        for (i = 0; i < found_filtered.size(); i++)
        {
            Rect r = found_filtered[i];
            r.x += cvRound(r.width*0.1);
            r.width = cvRound(r.width*0.8);
            r.y += cvRound(r.height*0.06);
            r.height = cvRound(r.height*0.9);
            rectangle(img, r.tl(), r.br(), Scalar(0,255,0), 2);
        }

        imshow("people detector", img);
        if (waitKey(20) >= 0)   // any key quits
            break;
    }
    return 0;
}
```
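The two loops after `detectMultiScale` are plain geometry: a box is discarded when it lies entirely inside another detection (`(r & found[j]) == r`), and each kept box is shrunk because HOG boxes come out slightly larger than the person. The OpenCV-free sketch below restates that logic under stated assumptions: `Box` is a hypothetical stand-in for `cv::Rect`, `inside` is equivalent to the intersection test only for non-empty boxes, and `std::lround` (which rounds halves away from zero) replaces `cvRound`, so results may differ on exact .5 ties. The shrink factors are the ones from the live-stream loop.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Rect: top-left corner plus size.
struct Box { int x, y, width, height; };

// True if `inner` lies entirely inside `outer`.
bool inside(const Box& inner, const Box& outer)
{
    return inner.x >= outer.x && inner.y >= outer.y &&
           inner.x + inner.width  <= outer.x + outer.width &&
           inner.y + inner.height <= outer.y + outer.height;
}

// Keep only boxes that are not nested inside another detection.
std::vector<Box> drop_nested(const std::vector<Box>& found)
{
    std::vector<Box> kept;
    for (std::size_t i = 0; i < found.size(); ++i) {
        bool nested = false;
        for (std::size_t j = 0; j < found.size() && !nested; ++j)
            nested = (j != i) && inside(found[i], found[j]);
        if (!nested)
            kept.push_back(found[i]);
    }
    return kept;
}

// Shrink a box for display: 10% off the left and right,
// 6% off the top and 4% off the bottom.
Box shrink(Box r)
{
    assert(r.width >= 0 && r.height >= 0);
    r.x += static_cast<int>(std::lround(r.width * 0.1));   // uses the original width
    r.width = static_cast<int>(std::lround(r.width * 0.8));
    r.y += static_cast<int>(std::lround(r.height * 0.06)); // uses the original height
    r.height = static_cast<int>(std::lround(r.height * 0.9));
    return r;
}
```

Like the loop in the program, this filter discards both copies of two identical boxes, since each lies inside the other; OpenCV's own grouping inside `detectMultiScale` (`groupThreshold` = 2 here) normally merges such duplicates first.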

