(转)A Beginner's Guide To Understanding Convolutional Neural Networks Part 2

A Beginner's Guide To Understanding Convolutional Neural Networks Part 2

Introduction

In this post, we’ll go into a lot more of the specifics of ConvNets. Disclaimer: Now, I do realize that some of these topics are quite complex and could be made in whole posts by themselves. In an effort to remain concise yet retain comprehensiveness, I will provide links to research papers where the topic is explained in more detail.

Stride and Padding

Alright, let’s look back at our good old conv layers. Remember the filters, the receptive fields, the convolving? Good. Now, there are 2 main parameters that we can change to modify the behavior of each layer. After we choose the filter size, we also have to choose the stride and the padding.

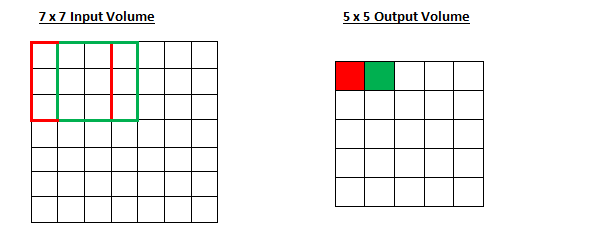

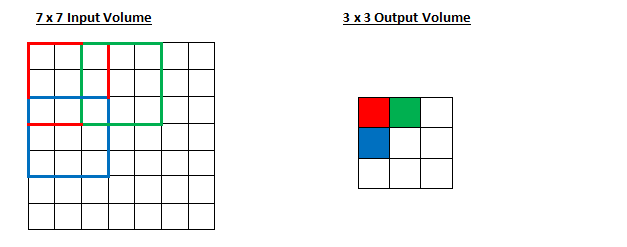

Stride controls how the filter convolves around the input volume. In the example we had in part 1, the filter convolves around the input volume by shifting one unit at a time. The amount by which the filter shifts is the stride. In that case, the stride was implicitly set at 1. Stride is normally set in a way so that the output volume is an integer and not a fraction. Let’s look at an example. Let’s imagine a 7 x 7 input volume, a 3 x 3 filter (Disregard the 3rd dimension for simplicity), and a stride of 1. This is the case that we’re accustomed to.

Same old, same old, right? See if you can try to guess what will happen to the output volume as the stride increases to 2.

So, as you can see, the receptive field is shifting by 2 units now and the output volume shrinks as well. Notice that if we tried to set our stride to 3, then we’d have issues with spacing and making sure the receptive fields fit on the input volume. Normally, programmers will increase the stride if they want receptive fields to overlap less and if they want smaller spatial dimensions.

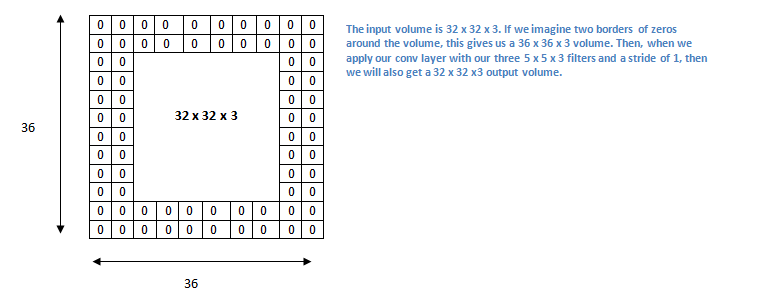

Now, let’s take a look at padding. Before getting into that, let’s think about a scenario. What happens when you apply three 5 x 5 x 3 filters to a 32 x 32 x 3 input volume? The output volume would be 28 x 28 x 3. Notice that the spatial dimensions decrease. As we keep applying conv layers, the size of the volume will decrease faster than we would like. In the early layers of our network, we want to preserve as much information about the original input volume so that we can extract those low level features. Let’s say we want to apply the same conv layer but we want the output volume to remain 32 x 32 x 3. To do this, we can apply a zero padding of size 2 to that layer. Zero padding pads the input volume with zeros around the border. If we think about a zero padding of two, then this would result in a 36 x 36 x 3 input volume.

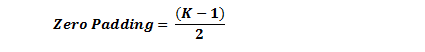

If you have a stride of 1 and if you set the size of zero padding to

where K is the filter size, then the input and output volume will always have the same spatial dimensions.

The formula for calculating the output size for any given conv layer is

where O is the output height/length, W is the input height/length, K is the filter size, P is the padding, and S is the stride.

Choosing Hyperparameters

How do we know how many layers to use, how many conv layers, what are the filter sizes, or the values for stride and padding? These are not trivial questions and there isn’t a set standard that is used by all researchers. This is because the network will largely depend on the type of data that you have. Data can vary by size, complexity of the image, type of image processing task, and more. When looking at your dataset, one way to think about how to choose the hyperparameters is to find the right combination that creates abstractions of the image at a proper scale.

ReLU (Rectified Linear Units) Layers

After each conv layer, it is convention to apply a nonlinear layer (or activation layer) immediately afterward.The purpose of this layer is to introduce nonlinearity to a system that basically has just been computing linear operations during the conv layers (just element wise multiplications and summations).In the past, nonlinear functions like tanh and sigmoid were used, but researchers found out that ReLU layers work far better because the network is able to train a lot faster (because of the computational efficiency) without making a significant difference to the accuracy. It also helps to alleviate the vanishing gradient problem, which is the issue where the lower layers of the network train very slowly because the gradient decreases exponentially through the layers (Explaining this might be out of the scope of this post, but see here and here for good descriptions). The ReLU layer applies the function f(x) = max(0, x) to all of the values in the input volume. In basic terms, this layer just changes all the negative activations to 0.This layer increases the nonlinear properties of the model and the overall network without affecting the receptive fields of the conv layer.

Paper by the great Geoffrey Hinton (aka the father of deep learning).

Pooling Layers

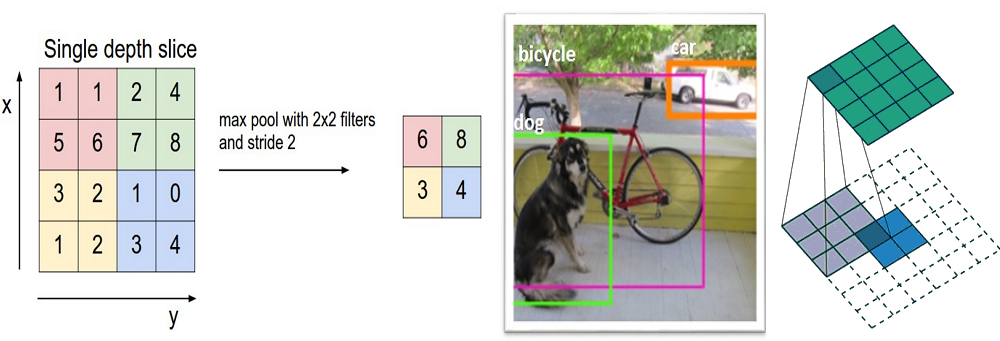

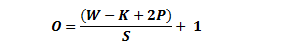

After some ReLU layers, programmers may choose to apply a pooling layer. It is also referred to as a downsampling layer. In this category, there are also several layer options, with maxpooling being the most popular. This basically takes a filter (normally of size 2x2) and a stride of the same length. It then applies it to the input volume and outputs the maximum number in every subregion that the filter convolves around.

Other options for pooling layers are average pooling and L2-norm pooling. The intuitive reasoning behind this layer is that once we know that a specific feature is in the original input volume (there will be a high activation value), its exact location is not as important as its relative location to the other features. As you can imagine, this layer drastically reduces the spatial dimension (the length and the width change but not the depth) of the input volume. This serves two main purposes. The first is that the amount of parameters or weights is reduced by 75%, thus lessening the computation cost. The second is that it will controloverfitting. This term refers to when a model is so tuned to the training examples that it is not able to generalize well for the validation and test sets. A symptom of overfitting is having a model that gets 100% or 99% on the training set, but only 50% on the test data.

Dropout Layers

Now, dropout layers have a very specific function in neural networks. In the last section, we discussed the problem of overfitting, where after training, the weights of the network are so tuned to the training examples they are given that the network doesn’t perform well when given new examples. The idea of dropout is simplistic in nature. This layer “drops out” a random set of activations in that layer by setting them to zero in the forward pass. Simple as that. Now, what are the benefits of such a simple and seemingly unnecessary and counterintuitive process? Well, in a way, it forces the network to be redundant. By that I mean the network should be able to provide the right classification or output for a specific example even if some of the activations are dropped out. It makes sure that the network isn’t getting too “fitted” to the training data and thus helps alleviate the overfitting problem. An important note is that this layer is only used during training, and not during test time.

Paper by Geoffrey Hinton.

Network in Network Layers

A network in network layer refers to a conv layer where a 1 x 1 size filter is used. Now, at first look, you might wonder why this type of layer would even be helpful since receptive fields are normally larger than the space they map to. However, we must remember that these 1x1 convolutions span a certain depth, so we can think of it as a 1 x 1 x N convolution where N is the number of filters applied in the layer. Effectively, this layer is performing a N-D element-wise multiplication where N is the depth of the input volume into the layer.

Paper by Min Lin.

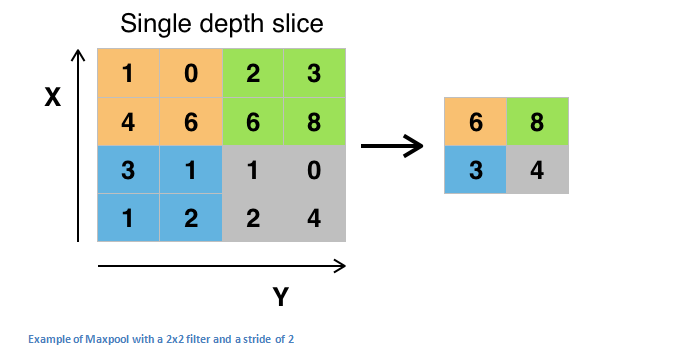

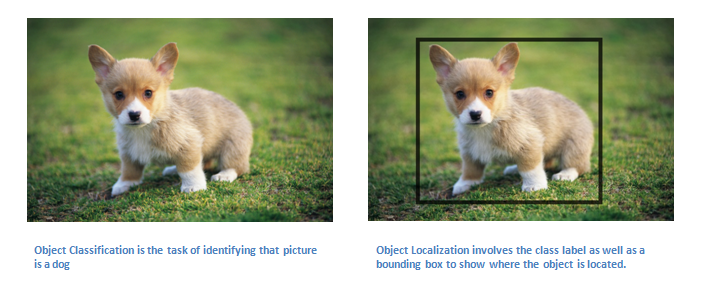

Classification, Localization, Detection, Segmentation

In the example we used in Part 1 of this series, we looked at the task of image classification. This is the process of taking an input image and outputting a class number out of a set of categories. However, when we take a task like object localization, our job is not only to produce a class label but also a bounding box that describes where the object is in the picture.

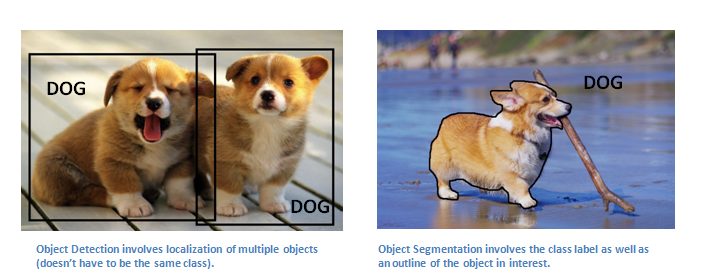

We also have the task of object detection, where localization needs to be done on all of the objects in the image. Therefore, you will have multiple bounding boxes and multiple class labels.

Finally, we also have object segmentation where the task is to output a class label as well as an outline of every object in the input image.

More detail on how these are implemented to come in Part 3, but for those who can’t wait…

Detection/ Localization: RCNN, Fast RCNN, Faster RCNN, MultiBox, Bayesian Optimization, Multi-region, RCNN Minus R, Image Windows

Segmentation: Semantic Seg, Unconstrained Video, Shape Guided, Object Regions, Shape Sharing

Yeah, there’s a lot more.

Transfer Learning

Now, a common misconception in the DL community is that without a Google-esque amount of data, you can’t possibly hope to create effective deep learning models. While data is a critical part of creating the network, the idea of transfer learning has helped to lessen the data demands. Transfer learning is the process of taking a pre-trained model (the weights and parameters of a network that has been trained on a large dataset by somebody else) and “fine-tuning” the model with your own dataset. The idea is that this pre-trained model will act as a feature extractor. You will remove the last layer of the network and replace it with your own classifier (depending on what your problem space is). You then freeze the weights of all the other layers and train the network normally (Freezing the layers means not changing the weights during gradient descent/optimization).

Let’s investigate why this works. Let’s say the pre-trained model that we’re talking about was trained on ImageNet (For those that aren’t familiar, ImageNet is a dataset that contains 14 million images with over 1,000 classes). When we think about the lower layers of the network, we know that they will detect features like edges and curves. Now, unless you have a very unique problem space and dataset, your network is going to need to detect curves and edges as well. Rather than training the whole network through a random initialization of weights, we can use the weights of the pre-trained model (and freeze them) and focus on the more important layers (ones that are higher up) for training. If your dataset is quite different than something like ImageNet, then you’d want to train more of your layers and freeze only a couple of the low layers.

Paper by Yoshua Bengio (another deep learning pioneer).

Paper by Ali Sharif Razavian.

Paper by Jeff Donahue.

Data Augmentation Techniques

By now, we’re all probably numb to the importance of data in ConvNets, so let’s talk about ways that you can make your existing dataset even larger, just with a couple easy transformations. Like we’ve mentioned before, when a computer takes an image as an input, it will take in an array of pixel values. Let’s say that the whole image is shifted left by 1 pixel. To you and me, this change is imperceptible. However, to a computer, this shift can be fairly significant as the classification or label of the image doesn’t change, while the array does. Approaches that alter the training data in ways that change the array representation while keeping the label the same are known as data augmentation techniques. They are a way to artificially expand your dataset. Some popular augmentations people use are grayscales, horizontal flips, vertical flips, random crops, color jitters, translations, rotations, and much more. By applying just a couple of these transformations to your training data, you can easily double or triple the number of training examples.

Dueces.

(转)A Beginner's Guide To Understanding Convolutional Neural Networks Part 2的更多相关文章

- A Beginner's Guide To Understanding Convolutional Neural Networks(转)

A Beginner's Guide To Understanding Convolutional Neural Networks Introduction Convolutional neural ...

- (转)A Beginner's Guide To Understanding Convolutional Neural Networks

Adit Deshpande CS Undergrad at UCLA ('19) Blog About A Beginner's Guide To Understanding Convolution ...

- A Beginner's Guide To Understanding Convolutional Neural Networks Part One (CNN)笔记

原文链接:https://adeshpande3.github.io/adeshpande3.github.io/A-Beginner's-Guide-To-Understanding-Convolu ...

- [转] Understanding Convolutional Neural Networks for NLP

http://www.wildml.com/2015/11/understanding-convolutional-neural-networks-for-nlp/ 讲CNN以及其在NLP的应用,非常 ...

- Understanding Convolutional Neural Networks for NLP

When we hear about Convolutional Neural Network (CNNs), we typically think of Computer Vision. CNNs ...

- [转]An Intuitive Explanation of Convolutional Neural Networks

An Intuitive Explanation of Convolutional Neural Networks https://ujjwalkarn.me/2016/08/11/intuitive ...

- An Intuitive Explanation of Convolutional Neural Networks

https://ujjwalkarn.me/2016/08/11/intuitive-explanation-convnets/ An Intuitive Explanation of Convolu ...

- 一目了然卷积神经网络 - An Intuitive Explanation of Convolutional Neural Networks

An Intuitive Explanation of Convolutional Neural Networks 原文地址:https://ujjwalkarn.me/2016/08/11/intu ...

- 卷积神经网络用于视觉识别Convolutional Neural Networks for Visual Recognition

Table of Contents: Architecture Overview ConvNet Layers Convolutional Layer Pooling Layer Normalizat ...

随机推荐

- MySQL中like的使用方法

Like的运用场合主要在模糊查询的时候,一般以查询字符串居多,这里据一些例子来说他的一般用法: <1>查询name字段中包含有“明”字的:例 select * from table1 wh ...

- PHP中函数的使用

函数是一种可以在任何被需要的时候执行的代码块函数的定义 1.函数是一个被命名的独立的代码段 2.它执行特殊的任务 3.并可以给调用它的程序返回值 函数的优点: 1.提高程序的重 ...

- 转载大神的dfs讲解

http://acm.hdu.edu.cn/forum/read.php?tid=6158 看完明白了好多! 关于剪枝,没有剪枝的搜索不太可能,这题老刘上课的时候讲过两个剪枝,一个是奇偶剪枝,一个是路 ...

- MongoDB索引、聚合

用$where可以执行任意的js作为查询的一部分. db.foo.find({"$where" : function(){ for(var current in ...

- 走过路过不要错过~教你用java抓取网页中你想要的东东~~

学习了正则之后,打算用java玩一玩,所以就决定用它来实现一个好玩的idea import java.io.BufferedReader; import java.io.IOException; im ...

- 一个简单的tr:hover效果

昨天,搞项目的时候,在一个小问题上卡了40分钟,现在想想,还是平时比较少去注意一些细节,经过这次,一定要去多注意细节了. 好了废话不多说,我现在说明下遇到的问题,一个表格中,要求是当鼠标滑过每一行时, ...

- 找不到请求的 .Net Framework Data Provider。可能没有安装.

学习中遇到的问题: 找不到请求的 .Net Framework Data Provider.可能没有安装. 找到的解决方法 解决方法: 安装Microsoft SQL Server Compact 4 ...

- iOS-申请开发证书流程

1.开发者证书(分为开发和发布两种,类型为ios Development,ios Distribution),这个是最基础的,不论是真机调试,还是上传到appstore都是需要的,是一个基证书,用来证 ...

- 作业6 分析项目的NABCD和项目的产品Backlog

项目scrum:邵家文 NABCD模型分析 N(Need 需求)根据采访用户下面可以得出用户的基本需求:1.小孩说:我想要做适合自己能力的四则运算2.小孩说:我想这个四则运算软件里面的题目越做越提高自 ...

- 【题解】【BT】【Leetcode】Populating Next Right Pointers in Each Node

Given a binary tree struct TreeLinkNode { TreeLinkNode *left; TreeLinkNode *right; TreeLinkNode *nex ...