Sparse Autoencoder(二)

Gradient checking and advanced optimization

In this section, we describe a method for numerically checking the derivatives computed by your code to make sure that your implementation is correct. Carrying out the derivative checking procedure described here will significantly increase your confidence in the correctness of your code.

Suppose we want to minimize

as a function of

. For this example, suppose

, so that

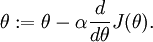

. In this 1-dimensional case, one iteration of gradient descent is given by

Suppose also that we have implemented some function

that purportedly computes

, so that we implement gradient descent using the update

.

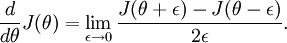

Recall the mathematical definition of the derivative as

Thus, at any specific value of

, we can numerically approximate the derivative as follows:

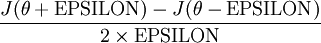

Thus, given a function

that is supposedly computing

, we can now numerically verify its correctness by checking that

The degree to which these two values should approximate each other will depend on the details of

. But assuming

, you'll usually find that the left- and right-hand sides of the above will agree to at least 4 significant digits (and often many more).

Suppose we have a function

that purportedly computes

; we'd like to check if

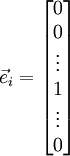

is outputting correct derivative values. Let

, where

is the

-th basis vector (a vector of the same dimension as

, with a "1" in the

-th position and "0"s everywhere else). So,

is the same as

, except its

-th element has been incremented by EPSILON. Similarly, let

be the corresponding vector with the

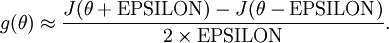

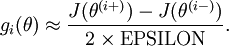

-th element decreased by EPSILON. We can now numerically verify

's correctness by checking, for each

, that:

参数为向量,为了验证每一维的计算正确性,可以控制其他变量

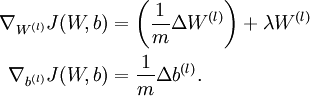

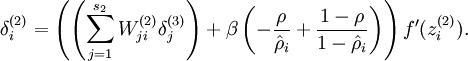

When implementing backpropagation to train a neural network, in a correct implementation we will have that

This result shows that the final block of psuedo-code in Backpropagation Algorithm is indeed implementing gradient descent. To make sure your implementation of gradient descent is correct, it is usually very helpful to use the method described above to numerically compute the derivatives of

, and thereby verify that your computations of

and

are indeed giving the derivatives you want.

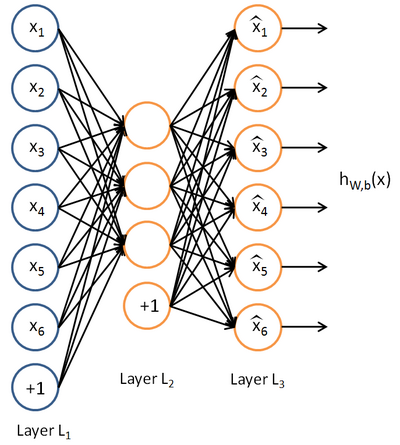

Autoencoders and Sparsity

Anautoencoder neural network is an unsupervised learning algorithm that applies backpropagation, setting the target values to be equal to the inputs. I.e., it uses

.

Here is an autoencoder:

we will write

to denote the activation of this hidden unit when the network is given a specific input

. Further, let

be the average activation of hidden unit

(averaged over the training set). We would like to (approximately) enforce the constraint

where

is a sparsity parameter, typically a small value close to zero (say

). In other words, we would like the average activation of each hidden neuron

to be close to 0.05 (say). To satisfy this constraint, the hidden unit's activations must mostly be near 0.

To achieve this, we will add an extra penalty term to our optimization objective that penalizes

deviating significantly from

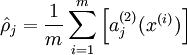

. Many choices of the penalty term will give reasonable results. We will choose the following:

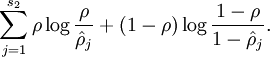

Here,

is the number of neurons in the hidden layer, and the index

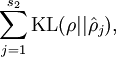

is summing over the hidden units in our network. If you are familiar with the concept of KL divergence, this penalty term is based on it, and can also be written

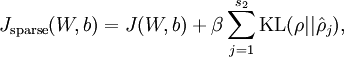

Our overall cost function is now

where

is as defined previously, and

controls the weight of the sparsity penalty term. The term

(implicitly) depends on

also, because it is the average activation of hidden unit

, and the activation of a hidden unit depends on the parameters

.

Visualizing a Trained Autoencoder

Consider the case of training an autoencoder on

images, so that

. Each hidden unit

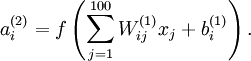

computes a function of the input:

We will visualize the function computed by hidden unit

---which depends on the parameters

(ignoring the bias term for now)---using a 2D image. In particular, we think of

as some non-linear feature of the input

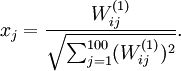

If we suppose that the input is norm constrained by

, then one can show (try doing this yourself) that the input which maximally activates hidden unit

is given by setting pixel

(for all 100 pixels,

) to

By displaying the image formed by these pixel intensity values, we can begin to understand what feature hidden unit

is looking for.

对一幅图像进行Autoencoder ,前面的隐藏结点一般捕获的是边缘等初级特征,越靠后隐藏结点捕获的特征语义更深。

Sparse Autoencoder(二)的更多相关文章

- DL二(稀疏自编码器 Sparse Autoencoder)

稀疏自编码器 Sparse Autoencoder 一神经网络(Neural Networks) 1.1 基本术语 神经网络(neural networks) 激活函数(activation func ...

- Deep Learning 1_深度学习UFLDL教程:Sparse Autoencoder练习(斯坦福大学深度学习教程)

1前言 本人写技术博客的目的,其实是感觉好多东西,很长一段时间不动就会忘记了,为了加深学习记忆以及方便以后可能忘记后能很快回忆起自己曾经学过的东西. 首先,在网上找了一些资料,看见介绍说UFLDL很不 ...

- (六)6.5 Neurons Networks Implements of Sparse Autoencoder

一大波matlab代码正在靠近.- -! sparse autoencoder的一个实例练习,这个例子所要实现的内容大概如下:从给定的很多张自然图片中截取出大小为8*8的小patches图片共1000 ...

- UFLDL实验报告2:Sparse Autoencoder

Sparse Autoencoder稀疏自编码器实验报告 1.Sparse Autoencoder稀疏自编码器实验描述 自编码神经网络是一种无监督学习算法,它使用了反向传播算法,并让目标值等于输入值, ...

- 七、Sparse Autoencoder介绍

目前为止,我们已经讨论了神经网络在有监督学习中的应用.在有监督学习中,训练样本是有类别标签的.现在假设我们只有一个没有带类别标签的训练样本集合 ,其中 .自编码神经网络是一种无监督学习算法,它使用 ...

- CS229 6.5 Neurons Networks Implements of Sparse Autoencoder

sparse autoencoder的一个实例练习,这个例子所要实现的内容大概如下:从给定的很多张自然图片中截取出大小为8*8的小patches图片共10000张,现在需要用sparse autoen ...

- 【DeepLearning】Exercise:Sparse Autoencoder

Exercise:Sparse Autoencoder 习题的链接:Exercise:Sparse Autoencoder 注意点: 1.训练样本像素值需要归一化. 因为输出层的激活函数是logist ...

- Sparse AutoEncoder简介

1. AutoEncoder AutoEncoder是一种特殊的三层神经网络, 其输出等于输入:\(y^{(i)}=x^{(i)}\), 如下图所示: 亦即AutoEncoder想学到的函数为\(f_ ...

- Exercise:Sparse Autoencoder

斯坦福deep learning教程中的自稀疏编码器的练习,主要是参考了 http://www.cnblogs.com/tornadomeet/archive/2013/03/20/2970724 ...

随机推荐

- <Sicily>Fibonacci 2

一.题目描述 In the Fibonacci integer sequence, F0 = 0, F1 = 1, and Fn = Fn-1 + Fn-2 for n ≥ 2. For exampl ...

- 重复执行shell脚本。

while ((1)); do gclient runhooks; sleep 2; donewhile ((1)); do ifconfig; sleep 1; done

- php数据类型及运算

数据类型: 标量类型: int(intege), float, string, bool 复合类型: array, object 特殊类型: null, resouce进制转换十进制转二进制decb ...

- vue项目的一些最佳实践提炼和经验总结

项目组织结构 ajax数据请求的封装和api接口的模块化管理 第三方库按需加载 利用less的深度选择器优雅覆盖当前页面UI库组件的样式 webpack实时打包进度 vue组件中选项的顺序 路由的懒加 ...

- centeros 7配置mailx使用外部smtp服务器发送邮件

发送邮件的两种方式: 1.连接现成的smtp服务器去发送(此方法比较简单,直接利用现有的smtp服务器比如qq.新浪.网易等邮箱,只需要直接配置mail.rc文件即可实现) 2.自己搭建私有的smtp ...

- 洛谷 P1293 班级聚会

P1293 班级聚会 题目描述 毕业25年以后,我们的主人公开始准备同学聚会.打了无数电话后他终于搞到了所有同学的地址.他们有些人仍在本城市,但大多数人分散在其他的城市.不过,他发现一个巧合,所有地址 ...

- Hadoop2 伪分布式部署

一.简单介绍 二.安装部署 三.执行hadoop样例并測试部署环境 四.注意的地方 一.简单介绍 Hadoop是一个由Apache基金会所开发的分布式系统基础架构,Hadoop的框架最核心的设计就是: ...

- [Teamcenter 2007 开发实战] 调用web service

前言 在TC的服务端开发中, 能够使用gsoap 来调用web service. 怎样使用 gsoap , 參考 gsoap 实现 C/C++ 调用web service 接下来介绍怎样在TC中进行 ...

- CodeForces 550E Brackets in Implications(构造)

[题目链接]:click here~~ [题目大意]给定一个逻辑运算符号a->b:当前仅当a为1b为0值为0,其余为1,构造括号.改变运算优先级使得最后结果为0 [解题思路]: todo~~ / ...

- 从Linux系统内存逐步认识Android应用内存

总述 Android应用程序被限制了内存使用上限,一般为16M或24M(具体看系统设置),当应用的使用内存超过这个上限时,就会被系统认为内存泄漏,被kill掉.所以在Android开发时,管理好内存的 ...