矩池云上安装 NVCaffe教程

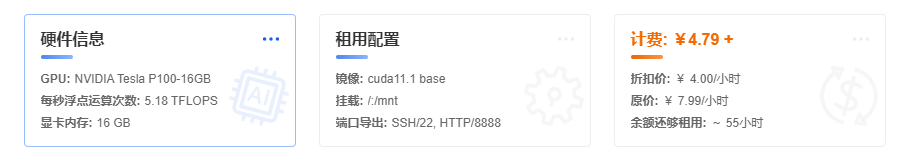

使用的是P100,cuda11.1base镜像

创建虚拟环境

conda create -n py36 python=3.6

conda deactivate

conda activate py36

安装依赖包

apt update

apt-get install libopencv-dev libopenblas-dev libopenblas-base libhdf5-dev protobuf-compiler libgoogle-glog-dev libgflags-dev libprotobuf-dev libboost-dev libleveldb-dev liblmdb-dev libturbojpeg0-dev libboost-filesystem-dev libboost-system-dev libboost-thread-dev libboost-regex-dev libsnappy-dev

下载NVIDIA caffe

cd /home/

# 官方链接wget https://github.com/NVIDIA/caffe/archive/refs/tags/v0.17.4.tar.gz

我这里用了镜像来下载

wget https://download.fastgit.org/NVIDIA/caffe/archive/refs/tags/v0.17.4.tar.gz

tar -xvf v0.17.4.tar.gz

cd caffe-0.17.4

for req in $(cat python/requirements.txt); do pip install $req; done

pip install --upgrade google-api-python-client

cp Makefile.config.example Makefile.config

修改Makefile.config

直接复制进去,保存即可。

## Refer to http://caffe.berkeleyvision.org/installation.html

# Contributions simplifying and improving our build system are welcome!

# cuDNN acceleration switch (uncomment to build with cuDNN).

# cuDNN version 6 or higher is required.

USE_CUDNN := 1

# NCCL acceleration switch (uncomment to build with NCCL)

# See https://github.com/NVIDIA/nccl

USE_NCCL := 1

# Builds tests with 16 bit float support in addition to 32 and 64 bit.

# TEST_FP16 := 1

# uncomment to disable IO dependencies and corresponding data layers

# USE_OPENCV := 0

# USE_LEVELDB := 0

# USE_LMDB := 0

# Uncomment and set accordingly if you're using OpenCV 3/4

OPENCV_VERSION := 3

# To customize your choice of compiler, uncomment and set the following.

# N.B. the default for Linux is g++ and the default for OSX is clang++

# CUSTOM_CXX := g++

# CUDA directory contains bin/ and lib/ directories that we need.

CUDA_DIR := /usr/local/cuda

# On Ubuntu 14.04, if cuda tools are installed via

# "sudo apt-get install nvidia-cuda-toolkit" then use this instead:

# CUDA_DIR := /usr

# CUDA architecture setting: going with all of them.

CUDA_ARCH := -gencode arch=compute_60,code=sm_60 \

-gencode arch=compute_61,code=sm_61 \

-gencode arch=compute_70,code=sm_70 \

-gencode arch=compute_75,code=sm_75 \

-gencode arch=compute_75,code=compute_75

# BLAS choice:

# atlas for ATLAS

# mkl for MKL

# open for OpenBlas - default, see https://github.com/xianyi/OpenBLAS

BLAS := open

# Custom (MKL/ATLAS/OpenBLAS) include and lib directories.

BLAS_INCLUDE := /opt/OpenBLAS/include/

BLAS_LIB := /opt/OpenBLAS/lib/

# Homebrew puts openblas in a directory that is not on the standard search path

# BLAS_INCLUDE := $(shell brew --prefix openblas)/include

# BLAS_LIB := $(shell brew --prefix openblas)/lib

# This is required only if you will compile the matlab interface.

# MATLAB directory should contain the mex binary in /bin.

# MATLAB_DIR := /usr/local

# MATLAB_DIR := /Applications/MATLAB_R2012b.app

# NOTE: this is required only if you will compile the python interface.

# We need to be able to find Python.h and numpy/arrayobject.h.

#PYTHON_INCLUDE := /usr/include/python2.7 \

# /usr/lib/python2.7/dist-packages/numpy/core/include

# Anaconda Python distribution is quite popular. Include path:

# Verify anaconda location, sometimes it's in root.

# ANACONDA_HOME := $(HOME)/anaconda

# PYTHON_INCLUDE := $(ANACONDA_HOME)/include \

# $(ANACONDA_HOME)/include/python2.7 \

# $(ANACONDA_HOME)/lib/python2.7/site-packages/numpy/core/include \

# Uncomment to use Python 3 (default is Python 2)

PYTHON_LIBRARIES := boost_python3 python3.6m

PYTHON_INCLUDE := /root/miniconda3/envs/py36/include/python3.6m \

/root/miniconda3/envs/py36/lib/python3.6/site-packages/numpy/core/include

# We need to be able to find libpythonX.X.so or .dylib.

PYTHON_LIB := /root/miniconda3/envs/py36/lib

# PYTHON_LIB := $(ANACONDA_HOME)/lib

# Homebrew installs numpy in a non standard path (keg only)

# PYTHON_INCLUDE += $(dir $(shell python -c 'import numpy.core; print(numpy.core.__file__)'))/include

# PYTHON_LIB += $(shell brew --prefix numpy)/lib

# Uncomment to support layers written in Python (will link against Python libs)

WITH_PYTHON_LAYER := 1

# Whatever else you find you need goes here.

INCLUDE_DIRS := $(PYTHON_INCLUDE) /usr/local/include /usr/include/hdf5/serial

LIBRARY_DIRS := $(PYTHON_LIB) /usr/local/lib /usr/lib /usr/lib/x86_64-linux-gnu/hdf5/serial

# If Homebrew is installed at a non standard location (for example your home directory) and you use it for general dependencies

# INCLUDE_DIRS += $(shell brew --prefix)/include

# LIBRARY_DIRS += $(shell brew --prefix)/lib

# Uncomment to use `pkg-config` to specify OpenCV library paths.

# (Usually not necessary -- OpenCV libraries are normally installed in one of the above $LIBRARY_DIRS.)

# USE_PKG_CONFIG := 1

BUILD_DIR := build

DISTRIBUTE_DIR := distribute

# Uncomment for debugging. Does not work on OSX due to https://github.com/BVLC/caffe/issues/171

# DEBUG := 1

# The ID of the GPU that 'make runtest' will use to run unit tests.

TEST_GPUID := 0

# enable pretty build (comment to see full commands)

Q ?= @

# shared object suffix name to differentiate branches

LIBRARY_NAME_SUFFIX := -nv

想自己找到上面修改的路径,可以使用下面的命令查找

python -c "from distutils.sysconfig import get_python_inc; print(get_python_inc())"

python -c "import distutils.sysconfig as sysconfig; print(sysconfig.get_config_var('LIBDIR'))"

find /root/miniconda3/envs/py36/lib/ -name numpy

设置环境变量

export PYTHONPATH=/home/caffe-0.17.4/python/:$PYTHONPATH

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/root/miniconda3/envs/py36/lib

开始编译

make clean

make all -j12

make pycaffe -j12

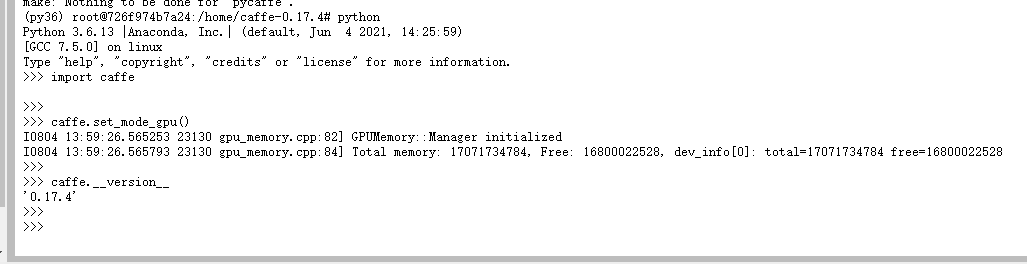

使用python环境测试

python

import caffe

caffe.set_mode_gpu()

caffe.__version__

使用官方examples测试

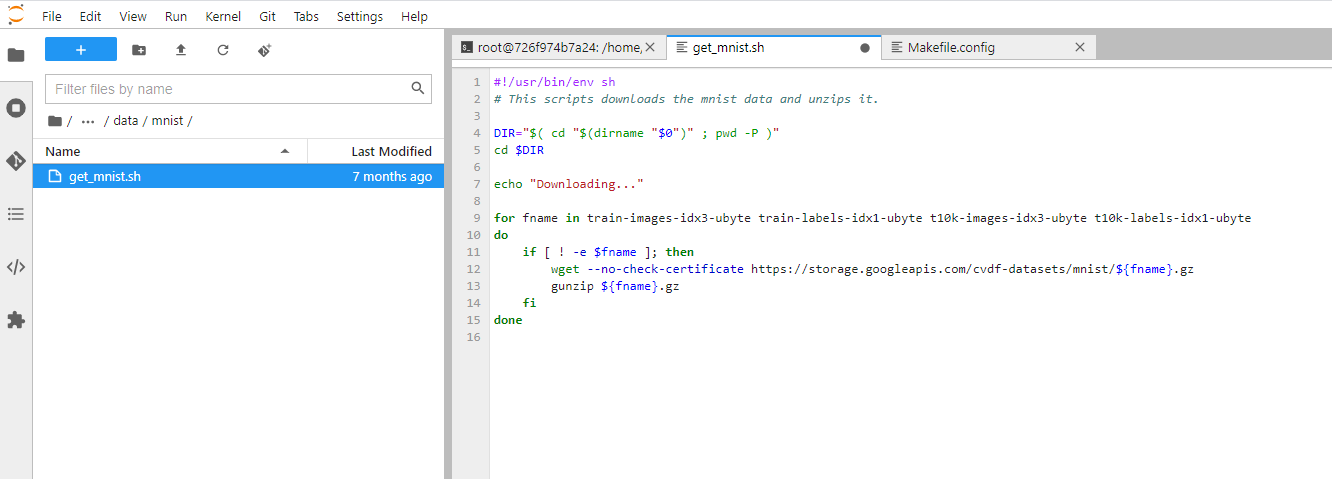

#!/usr/bin/env sh

# This scripts downloads the mnist data and unzips it.

DIR="$( cd "$(dirname "$0")" ; pwd -P )"

cd $DIR

echo "Downloading..."

for fname in train-images-idx3-ubyte train-labels-idx1-ubyte t10k-images-idx3-ubyte t10k-labels-idx1-ubyte

do

if [ ! -e $fname ]; then

wget --no-check-certificate https://storage.googleapis.com/cvdf-datasets/mnist/${fname}.gz

gunzip ${fname}.gz

fi

done

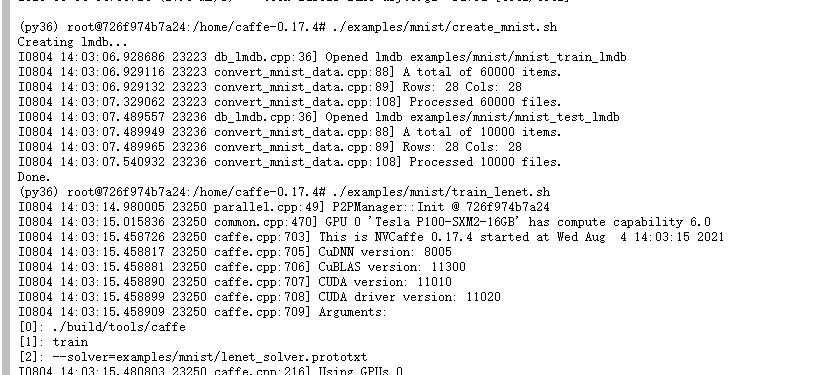

./data/mnist/get_mnist.sh

./examples/mnist/create_mnist.sh

./examples/mnist/train_lenet.sh

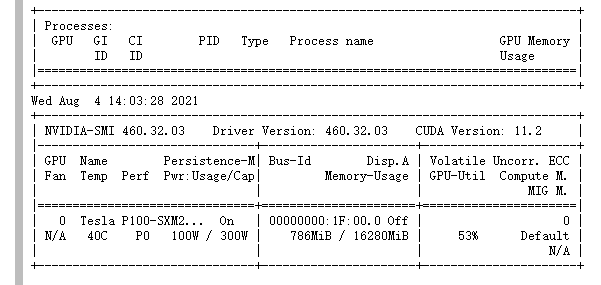

查看显存使用率

nvidia-smi -l 5

参考文章

https://stackoverflow.com/questions/36183486/importerror-no-module-named-google

https://github.com/xianyi/OpenBLAS/issues/1114

https://pypi.org/project/scipy/0.17.0/

https://github.com/NVIDIA/caffe/releases/tag/v0.17.4

矩池云上安装 NVCaffe教程的更多相关文章

- 矩池云上安装ikatago及远程链接教程

https://github.com/kinfkong/ikatago-resources/tree/master/dockerfiles 从作者的库中可以看到,该程序支持cuda9.2.cuda10 ...

- 矩池云上安装yolov4 darknet教程

这里我是用PyTorch 1.8.1来安装的 拉取仓库 官方仓库 git clone https://github.com/AlexeyAB/darknet 镜像仓库 git clone https: ...

- 矩池云上安装yolov5并测试教程

官方仓库:https://github.com/ultralytics/yolov5 官方文档:https://docs.ultralytics.com/quick-start/ 此案例我是租用了k8 ...

- 矩池云上安装及使用Milvus教程

选择cuda10.1的镜像 更新源及拷贝文件到本地 apt-get update cp -r /public/database/milvus/ / cd /milvus/ cp ./lib/* /us ...

- 矩池云上安装caffe gpu教程

选用CUDA10.0镜像 添加nvidia-cuda和修改apt源 curl -fsSL https://mirrors.aliyun.com/nvidia-cuda/ubuntu1804/x86_6 ...

- 矩池云上编译安装dlib库

方法一(简单) 矩池云上的k80因为内存问题,请用其他版本的GPU去进行编译,保存环境后再在k80上用. 准备工作 下载dlib的源文件 进入python的官网,点击PyPi选项,搜索dilb,再点击 ...

- 矩池云上使用nvidia-smi命令教程

简介 nvidia-smi全称是NVIDIA System Management Interface ,它是一个基于NVIDIA Management Library(NVML)构建的命令行实用工具, ...

- 在矩池云上复现 CVPR 2018 LearningToCompare_FSL 环境

这是 CVPR 2018 的一篇少样本学习论文:Learning to Compare: Relation Network for Few-Shot Learning 源码地址:https://git ...

- 矩池云上TensorBoard/TensorBoardX配置说明

Tensorflow用户使用TensorBoard 矩池云现在为带有Tensorflow的镜像默认开启了6006端口,那么只需要在租用后使用命令启动即可 tensorboard --logdir lo ...

随机推荐

- Gitee 自已提交的代码提交人头像为他人、码云上独自开发的项目显示为 2 个开发者

简介 自己写的代码提交到码云(Gitee)上却变成了两个人,一个被正确的代码提交统计了,另一个却没有,并且确信自己输入的Gitee账号是自己绑定的邮箱,具体如下: 解决办法 查看自己的用户名 git ...

- NSPredicate类,指定过滤器的条件---董鑫

/* 比较和逻辑运算符 就像前面的例子中使用了==操作符,NSPredicate还支持>, >=, <, <=, !=, <>,还支持AND, OR, NOT(或写 ...

- 类(静态)变量和类(静态)static方法以及main方法、代码块,final方法的使用,单例设计模式

类的加载:时间 1.创建对象实例(new 一个新对象时) 2.创建子类对象实例,父类也会被加载 3.使用类的静态成员时(静态属性,静态方法) 一.static 静态变量:类变量,静态属性(会被该类的所 ...

- 他人学习Python感悟

作者:王一 链接:https://www.zhihu.com/question/26235428/answer/36568428 来源:知乎 著作权归作者所有.商业转载请联系作者获得授权,非商业转载请 ...

- 基于ASP.NET Core 5.0使用RabbitMQ消息队列实现事件总线(EventBus)

文章阅读请前先参考看一下 https://www.cnblogs.com/hudean/p/13858285.html 安装RabbitMQ消息队列软件与了解C#中如何使用RabbitMQ 和 htt ...

- 为什么大厂前端监控都在用GIF做埋点?

什么是前端监控? 它指的是通过一定的手段来获取用户行为以及跟踪产品在用户端的使用情况,并以监控数据为基础,为产品优化指明方向,为用户提供更加精确.完善的服务. 如果这篇文章有帮助到你,️关注+点赞️鼓 ...

- 03并发编程(多道技术+进程理论+进程join方法)

目录 03 并发编程 03 并发编程

- 从 MMU 看内存管理

在计算机早期的时候,计算机是无法将大于内存大小的应用装入内存的,因为计算机读写应用数据是直接通过总线来对内存进行直接操作的,对于写操作来说,计算机会直接将地址写入内存:对于读操作来说,计算机会直接读取 ...

- .NET 固定时间窗口算法实现(无锁线程安全)

一.前言 最近有一个生成 APM TraceId 的需求,公司的APM系统的 TraceId 的格式为:APM AgentId+毫秒级时间戳+自增数字,根据此规则生成的 Id 可以保证全局唯一(有 N ...

- 自学linux(常用命令)STEP3

tty tty 可以查看当前处于哪一个系统中. 比如我在图形化界面输入 tty: alt+ctrl+F3切换到命令行: linux命令 linux命令,一般都是 命令+选项+参数,这种格式,为了防止选 ...