Sparse Autoencoder (Part 1)

Neural Networks

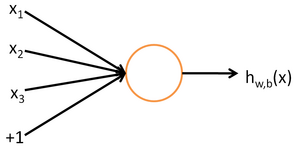

We will use the following diagram to denote a single neuron:

This "neuron" is a computational unit that takes as input x1,x2,x3 (and a +1 intercept term), and outputs

, where

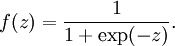

is called the activation function. In these notes, we will choose

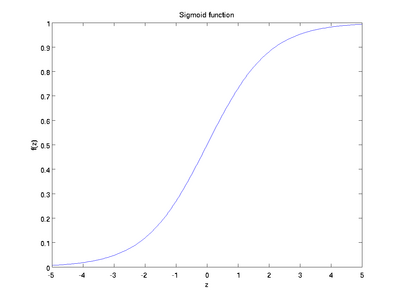

to be the sigmoid function:

Thus, our single neuron corresponds exactly to the input-output mapping defined by logistic regression.

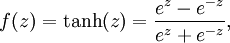

Although these notes will use the sigmoid function, it is worth noting that another common choice for $f$ is the hyperbolic tangent, or tanh, function:

$$f(z) = \tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}}.$$

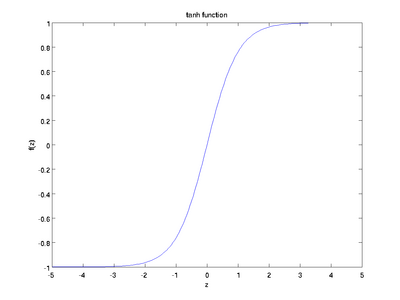

Here are plots of the sigmoid and tanh functions:

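Finally, one identity that will be useful later: if $f(z) = 1/(1 + \exp(-z))$ is the sigmoid function, then its derivative is given by $f'(z) = f(z)\,(1 - f(z))$, since $f'(z) = \frac{e^{-z}}{(1 + e^{-z})^2} = \frac{1}{1 + e^{-z}}\left(1 - \frac{1}{1 + e^{-z}}\right)$.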
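The identity is easy to check numerically. The sketch below uses plain Python with only the standard `math` module and compares $f(z)(1 - f(z))$ against a central finite difference at a few points; the helper names `sigmoid_prime` and `finite_difference` are illustrative and not part of the original notes.

```python
import math


def sigmoid(z: float) -> float:
    """Sigmoid activation f(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z: float) -> float:
    """Derivative of the sigmoid via the identity f'(z) = f(z) * (1 - f(z))."""
    fz = sigmoid(z)
    return fz * (1.0 - fz)


def finite_difference(f, z: float, eps: float = 1e-6) -> float:
    """Central-difference approximation (f(z + eps) - f(z - eps)) / (2 * eps)."""
    return (f(z + eps) - f(z - eps)) / (2.0 * eps)


if __name__ == "__main__":
    for z in (-4.0, -1.0, 0.0, 0.5, 3.0):
        print(f"z={z:+.1f}  identity={sigmoid_prime(z):.6f}  "
              f"finite difference={finite_difference(sigmoid, z):.6f}")
```

At $z = 0$ the identity gives $0.5 \cdot 0.5 = 0.25$, the maximum slope of the sigmoid.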

Either the sigmoid function or the tanh function can be used to perform the nonlinear mapping.

Neural Network model

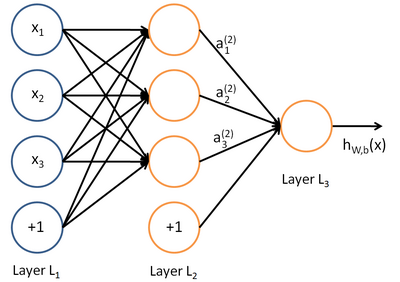

A neural network is put together by hooking together many of our simple "neurons," so that the output of a neuron can be the input of another. For example, here is a small neural network:

In this figure, we have used circles to also denote the inputs to the network. The circles labeled "+1" are called bias units, and correspond to the intercept term. The leftmost layer of the network is called the input layer, and the rightmost layer the output layer (which, in this example, has only one node). The middle layer of nodes is called the hidden layer, because its values are not observed in the training set. We also say that our example neural network has 3 input units (not counting the bias unit), 3 hidden units, and 1 output unit.

Our neural network has parameters $(W, b) = (W^{(1)}, b^{(1)}, W^{(2)}, b^{(2)})$, where we write $W_{ij}^{(l)}$ to denote the parameter (or weight) associated with the connection between unit $j$ in layer $l$ and unit $i$ in layer $l + 1$. (Note the order of the indices.) Also, $b_i^{(l)}$ is the bias associated with unit $i$ in layer $l + 1$.

We will write

to denote the activation (meaning output value) of unit i in layer l. For l = 1, we also use

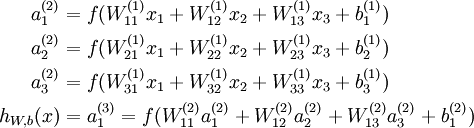

to denote the i-th input. Given a fixed setting of the parameters W,b, our neural network defines a hypothesis hW,b(x) that outputs a real number. Specifically, the computation that this neural network represents is given by:

Each layer computes a linear combination of its inputs followed by a nonlinear mapping.

In the sequel, we also let $z_i^{(l)}$ denote the total weighted sum of inputs to unit $i$ in layer $l$, including the bias term (e.g., $z_i^{(2)} = \sum_{j=1}^{3} W_{ij}^{(1)} x_j + b_i^{(1)}$), so that $a_i^{(l)} = f(z_i^{(l)})$.

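Note that this easily lends itself to a more compact notation. Specifically, if we extend the activation function $f(\cdot)$ to apply to vectors in an element-wise fashion (i.e., $f([z_1, z_2, z_3]) = [f(z_1), f(z_2), f(z_3)]$), then we can write the equations above more compactly as:

$$\begin{aligned}
z^{(2)} &= W^{(1)} x + b^{(1)} \\
a^{(2)} &= f(z^{(2)}) \\
z^{(3)} &= W^{(2)} a^{(2)} + b^{(2)} \\
h_{W,b}(x) &= a^{(3)} = f(z^{(3)})
\end{aligned}$$

We call this step forward propagation.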
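Forward propagation for the 3-3-1 network can be sketched in a few lines of plain Python. This assumes the parameters are stored as nested lists, with row $i$ of $W^{(l)}$ holding the weights into unit $i$ of layer $l+1$ (the index order noted earlier). The function name `forward` and the example parameter values are illustrative only.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(W1, b1, W2, b2, x):
    """Forward propagation for the 3-3-1 network.

    W1[i][j] is W_ij^(1): the weight from input unit j to hidden unit i
    (row = destination unit, column = source unit).
    """
    # z^(2) = W^(1) x + b^(1),  a^(2) = f(z^(2))
    z2 = [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(W1, b1)]
    a2 = [sigmoid(z) for z in z2]
    # z^(3) = W^(2) a^(2) + b^(2),  h_{W,b}(x) = a^(3) = f(z^(3))
    z3 = [sum(w * aj for w, aj in zip(row, a2)) + b for row, b in zip(W2, b2)]
    a3 = [sigmoid(z) for z in z3]
    return z2, a2, z3, a3


if __name__ == "__main__":
    W1 = [[1.0, 0.0, 0.0],
          [0.0, 1.0, 0.0],
          [0.0, 0.0, 1.0]]
    b1 = [0.0, 0.0, 0.0]
    W2 = [[1.0, 1.0, 1.0]]
    b2 = [0.0]
    _, a2, _, a3 = forward(W1, b1, W2, b2, [1.0, 0.0, 0.0])
    print("hidden activations:", a2)  # [f(1), f(0), f(0)], i.e. about [0.731, 0.5, 0.5]
    print("h_W,b(x):", a3[0])
```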

Backpropagation Algorithm

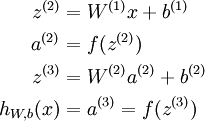

For a single training example $(x, y)$, we define the cost function with respect to that single example to be:

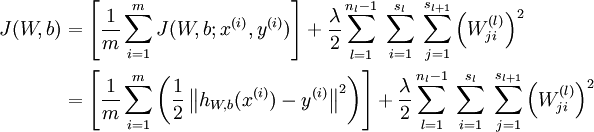

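This is a (one-half) squared-error cost function. Given a training set $\{(x^{(1)}, y^{(1)}), \ldots, (x^{(m)}, y^{(m)})\}$ of $m$ examples, we then define the overall cost function to be:

$$\begin{aligned}
J(W,b) &= \left[\frac{1}{m}\sum_{k=1}^{m} J\left(W,b;x^{(k)},y^{(k)}\right)\right] + \frac{\lambda}{2}\sum_{l=1}^{n_l-1}\sum_{i=1}^{s_l}\sum_{j=1}^{s_{l+1}}\left(W_{ji}^{(l)}\right)^2 \\
&= \left[\frac{1}{m}\sum_{k=1}^{m}\left(\frac{1}{2}\left\|h_{W,b}\left(x^{(k)}\right) - y^{(k)}\right\|^2\right)\right] + \frac{\lambda}{2}\sum_{l=1}^{n_l-1}\sum_{i=1}^{s_l}\sum_{j=1}^{s_{l+1}}\left(W_{ji}^{(l)}\right)^2
\end{aligned}$$

Here $n_l$ is the number of layers, $s_l$ is the number of units in layer $l$ (not counting the bias unit), and $\lambda$ is the weight decay parameter, which controls the relative importance of the two terms. $J(W,b;x,y)$ is the squared-error cost with respect to a single example; $J(W,b)$ is the overall cost function, which includes the weight decay term.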
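To make the two terms of $J(W,b)$ concrete, here is a small sketch that evaluates the overall cost from network outputs that have already been computed. It assumes each `outputs[k]` is $h_{W,b}(x^{(k)})$ from a forward pass, that `lam` stands for the weight decay coefficient, and that biases are excluded from the decay term; the function names and the numbers in the example are made up for illustration.

```python
def example_cost(h, y):
    """J(W,b;x,y) = 1/2 * ||h_{W,b}(x) - y||^2 for one example."""
    return 0.5 * sum((hi - yi) ** 2 for hi, yi in zip(h, y))


def overall_cost(outputs, targets, weights, lam):
    """J(W,b): average per-example cost plus (lam / 2) * sum of squared weights.

    outputs[k] is assumed to be h_{W,b}(x^(k)) from a forward pass;
    weights is the list of matrices W^(1), ..., W^(n_l - 1).
    Biases are not passed in because weight decay does not apply to them.
    """
    m = len(outputs)
    data_term = sum(example_cost(h, y) for h, y in zip(outputs, targets)) / m
    decay_term = 0.5 * lam * sum(w * w for W in weights for row in W for w in row)
    return data_term + decay_term


if __name__ == "__main__":
    outputs = [[0.5], [1.0]]
    targets = [[0.0], [1.0]]
    weights = [[[1.0, 2.0]]]
    print(overall_cost(outputs, targets, weights, lam=0.1))  # 0.0625 + 0.25 = 0.3125
```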

Our goal is to minimize $J(W,b)$ as a function of $W$ and $b$. To train our neural network, we will initialize each parameter $W_{ij}^{(l)}$ and each $b_i^{(l)}$ to a small random value near zero (say according to a $\mathcal{N}(0, \epsilon^2)$ distribution for some small $\epsilon$, say 0.01), and then apply an optimization algorithm such as batch gradient descent. Finally, note that it is important to initialize the parameters randomly, rather than to all 0's. If all the parameters start off at identical values, then all the hidden layer units will end up learning the same function of the input (more formally, $W_{ij}^{(1)}$ will be the same for all values of $i$, so that $a_1^{(2)} = a_2^{(2)} = a_3^{(2)} = \ldots$ for any input $x$). The random initialization serves the purpose of symmetry breaking.
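A minimal sketch of this initialization, assuming plain Python lists and the standard `random` module (`Random.gauss(mu, sigma)` draws from $\mathcal{N}(\mu, \sigma^2)$). The helper names `init_params` and `hidden_activations` are hypothetical. The example contrasts identical (all-zero) parameters, where every hidden unit computes the same value, with random ones.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(layer_sizes, eps=0.01, seed=None):
    """Draw every W_ij^(l) and b_i^(l) from Normal(0, eps^2).

    layer_sizes lists the units per layer, bias units excluded, e.g. [3, 3, 1].
    """
    rng = random.Random(seed)
    weights, biases = [], []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights.append([[rng.gauss(0.0, eps) for _ in range(n_in)] for _ in range(n_out)])
        biases.append([rng.gauss(0.0, eps) for _ in range(n_out)])
    return weights, biases


def hidden_activations(W1, b1, x):
    """a^(2) = f(W^(1) x + b^(1))."""
    return [sigmoid(sum(w * xj for w, xj in zip(row, x)) + b) for row, b in zip(W1, b1)]


if __name__ == "__main__":
    x = [0.2, -0.7, 1.5]
    # Identical (here all-zero) parameters: every hidden unit computes the same value.
    zeros_W1 = [[0.0] * 3 for _ in range(3)]
    print(hidden_activations(zeros_W1, [0.0] * 3, x))  # [0.5, 0.5, 0.5]
    # Random initialization gives each hidden unit a different starting function.
    weights, biases = init_params([3, 3, 1], eps=0.01, seed=0)
    print(hidden_activations(weights[0], biases[0], x))
```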

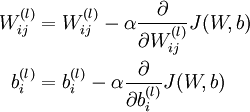

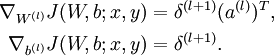

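One iteration of gradient descent updates the parameters $W, b$ as follows:

$$\begin{aligned}
W_{ij}^{(l)} &:= W_{ij}^{(l)} - \alpha \frac{\partial}{\partial W_{ij}^{(l)}} J(W,b) \\
b_i^{(l)} &:= b_i^{(l)} - \alpha \frac{\partial}{\partial b_i^{(l)}} J(W,b)
\end{aligned}$$

where $\alpha$ is the learning rate. Once the per-example derivatives are available (they are computed by backpropagation, described below), the derivatives of the overall cost function are:

$$\begin{aligned}
\frac{\partial}{\partial W_{ij}^{(l)}} J(W,b) &= \left[\frac{1}{m}\sum_{k=1}^{m} \frac{\partial}{\partial W_{ij}^{(l)}} J\left(W,b;x^{(k)},y^{(k)}\right)\right] + \lambda W_{ij}^{(l)} \\
\frac{\partial}{\partial b_i^{(l)}} J(W,b) &= \frac{1}{m}\sum_{k=1}^{m} \frac{\partial}{\partial b_i^{(l)}} J\left(W,b;x^{(k)},y^{(k)}\right)
\end{aligned}$$

The two lines above differ slightly because weight decay is applied to $W$ but not $b$.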
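The asymmetry between $W$ and $b$ can be seen in a one-layer update sketch. It assumes `grad_W` and `grad_b` already hold the averaged data-term derivatives for layer $l$, and that `alpha` and `lam` are the learning rate and weight decay coefficient; `gradient_step` is a hypothetical name.

```python
def gradient_step(W, b, grad_W, grad_b, alpha, lam):
    """One gradient-descent update of a single layer's parameters.

    grad_W[i][j] and grad_b[i] are assumed to hold the averaged data-term
    derivatives (1/m) * sum_k dJ(W,b;x^(k),y^(k))/dW_ij and .../db_i.
    The weight decay contribution lam * W_ij is added for W only.
    """
    new_W = [[w - alpha * (g + lam * w) for w, g in zip(w_row, g_row)]
             for w_row, g_row in zip(W, grad_W)]
    new_b = [bi - alpha * g for bi, g in zip(b, grad_b)]
    return new_W, new_b


if __name__ == "__main__":
    # With a zero data gradient, only the weight shrinks; the bias is unchanged.
    new_W, new_b = gradient_step([[1.0]], [1.0], [[0.0]], [0.0], alpha=0.5, lam=0.1)
    print(new_W, new_b)
```

With a zero data gradient the weight moves from 1.0 to roughly 0.95 while the bias stays at 1.0, which is exactly the effect of decaying $W$ but not $b$.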

The intuition behind the backpropagation algorithm is as follows. Given a training example (x,y), we will first run a "forward pass" to compute all the activations throughout the network, including the output value of the hypothesis hW,b(x). Then, for each node i in layer l, we would like to compute an "error term"

that measures how much that node was "responsible" for any errors in our output.

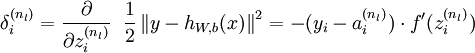

For an output node, we can directly measure the difference between the network's activation and the true target value, and use that to define

(where layer nl is the output layer). For hidden units, we will compute

based on a weighted average of the error terms of the nodes that uses

as an input. In detail, here is the backpropagation algorithm:

- 1,Perform a feedforward pass, computing the activations for layers L2, L3, and so on up to the output layer

.

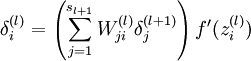

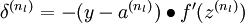

2,For each output unit i in layer nl (the output layer), set

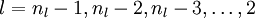

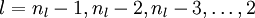

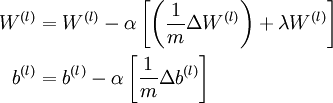

For

For each node i in layer l, set

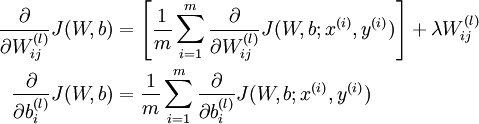

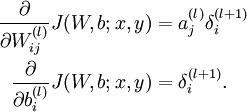

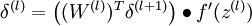

4,Compute the desired partial derivatives, which are given as:
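Steps 1 to 4 can be sketched unit by unit in plain Python for a sigmoid network of any depth. This is an illustrative sketch, not the notes' own code: it assumes 0-based lists where `weights[l]` holds $W^{(l+1)}$ of the text, and the names `backprop_single` and `example_cost` are hypothetical. The example checks one derivative against a central finite difference of $J(W,b;x,y)$, a common way to validate a backpropagation implementation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    """f'(z) = f(z) * (1 - f(z)) for the sigmoid."""
    s = sigmoid(z)
    return s * (1.0 - s)


def backprop_single(weights, biases, x, y):
    """Unit-by-unit backpropagation for one example (x, y), following steps 1-4.

    Lists are 0-based: weights[l] and biases[l] hold W^(l+1) and b^(l+1) of the
    text, and a[l], z[l] belong to layer l + 1 (a[0] is the input x).
    """
    # Step 1: feedforward pass, remembering z and a for every layer.
    a, z = [list(x)], [None]
    for W, b in zip(weights, biases):
        z_next = [sum(W[i][j] * a[-1][j] for j in range(len(a[-1]))) + b[i]
                  for i in range(len(W))]
        z.append(z_next)
        a.append([sigmoid(zi) for zi in z_next])
    out = len(a) - 1  # list index of the output layer n_l

    delta = [None] * len(a)
    # Step 2: output units, delta_i = -(y_i - a_i) * f'(z_i).
    delta[out] = [-(y[i] - a[out][i]) * sigmoid_prime(z[out][i])
                  for i in range(len(a[out]))]
    # Step 3: hidden layers, from layer n_l - 1 back to layer 2 (list index 1).
    for l in range(out - 1, 0, -1):
        W = weights[l]  # maps layer l to layer l + 1 (list indices)
        delta[l] = [sum(W[j][i] * delta[l + 1][j] for j in range(len(W)))
                    * sigmoid_prime(z[l][i]) for i in range(len(a[l]))]
    # Step 4: dJ/dW_ij = a_j * delta_i (next layer); dJ/db_i = delta_i (next layer).
    grad_W = [[[a[l][j] * delta[l + 1][i] for j in range(len(a[l]))]
               for i in range(len(delta[l + 1]))] for l in range(out)]
    grad_b = [list(delta[l + 1]) for l in range(out)]
    return grad_W, grad_b


def example_cost(weights, biases, x, y):
    """J(W,b;x,y) = 1/2 * ||h_{W,b}(x) - y||^2, used for the finite-difference check."""
    a = list(x)
    for W, b in zip(weights, biases):
        a = [sigmoid(sum(w * aj for w, aj in zip(row, a)) + bi) for row, bi in zip(W, b)]
    return 0.5 * sum((ai - yi) ** 2 for ai, yi in zip(a, y))


if __name__ == "__main__":
    weights = [[[0.1, -0.2, 0.3], [0.05, 0.4, -0.1], [-0.3, 0.2, 0.1]],
               [[0.2, -0.5, 0.3]]]
    biases = [[0.01, -0.02, 0.03], [0.1]]
    x, y = [0.5, -1.0, 2.0], [1.0]
    grad_W, grad_b = backprop_single(weights, biases, x, y)
    # Compare dJ/dW_11^(1) from backprop with a central finite difference.
    eps = 1e-6
    weights[0][0][0] += eps
    plus = example_cost(weights, biases, x, y)
    weights[0][0][0] -= 2 * eps
    minus = example_cost(weights, biases, x, y)
    weights[0][0][0] += eps
    print(grad_W[0][0][0], (plus - minus) / (2 * eps))
```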

We will use "$\bullet$" to denote the element-wise product operator (denoted ".*" in Matlab or Octave, and also called the Hadamard product), so that if $a = b \bullet c$, then $a_i = b_i c_i$. Similar to how we extended the definition of $f(\cdot)$ to apply element-wise to vectors, we also do the same for $f'(\cdot)$ (so that $f'([z_1, z_2, z_3]) = [f'(z_1), f'(z_2), f'(z_3)]$).
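Both element-wise operations are one-liners over Python lists. The sketch below is illustrative (the names `hadamard` and `sigmoid_prime_vec` are hypothetical) and assumes the sigmoid as $f$.

```python
import math


def hadamard(u, v):
    """Element-wise (Hadamard) product: (u • v)_i = u_i * v_i."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return [ui * vi for ui, vi in zip(u, v)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime_vec(z):
    """f'(.) applied element-wise: f'([z1, z2, z3]) = [f'(z1), f'(z2), f'(z3)]."""
    return [sigmoid(zi) * (1.0 - sigmoid(zi)) for zi in z]


print(hadamard([1.0, 2.0, 3.0], [4.0, 5.0, 6.0]))  # [4.0, 10.0, 18.0]
print(sigmoid_prime_vec([0.0, 0.0]))               # [0.25, 0.25]
```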

The algorithm can then be written:

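1. Perform a feedforward pass, computing the activations for layers $L_2$, $L_3$, up to the output layer $L_{n_l}$, using the equations defining the forward propagation steps.
2. For the output layer (layer $n_l$), set

$$\delta^{(n_l)} = -\left(y - a^{(n_l)}\right) \bullet f'\left(z^{(n_l)}\right)$$

3. For $l = n_l - 1, n_l - 2, n_l - 3, \ldots, 2$, set

$$\delta^{(l)} = \left(\left(W^{(l)}\right)^T \delta^{(l+1)}\right) \bullet f'\left(z^{(l)}\right)$$

4. Compute the desired partial derivatives:

$$\begin{aligned}
\nabla_{W^{(l)}} J(W,b;x,y) &= \delta^{(l+1)} \left(a^{(l)}\right)^T \\
\nabla_{b^{(l)}} J(W,b;x,y) &= \delta^{(l+1)}
\end{aligned}$$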
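The vectorized steps map onto a handful of vector helpers. To stay dependency-free, this sketch implements matrix-vector products, the transposed product $(W^{(l)})^T \delta^{(l+1)}$, and the outer product $\delta^{(l+1)} (a^{(l)})^T$ with plain lists; in Matlab or Octave these would be single expressions. All helper names are hypothetical, `weights[l]` again holds $W^{(l+1)}$ (0-based), and the sigmoid is assumed.

```python
import math


def sigmoid_vec(z):
    """f applied element-wise to a vector."""
    return [1.0 / (1.0 + math.exp(-zi)) for zi in z]


def matvec(W, v):
    """Matrix-vector product W v."""
    return [sum(w * vj for w, vj in zip(row, v)) for row in W]


def matTvec(W, v):
    """Transposed product (W^T) v, without materializing W^T."""
    return [sum(W[j][i] * v[j] for j in range(len(W))) for i in range(len(W[0]))]


def outer(u, v):
    """Outer product u v^T."""
    return [[ui * vj for vj in v] for ui in u]


def hadamard(u, v):
    """Element-wise product u • v."""
    return [ui * vi for ui, vi in zip(u, v)]


def vec_add(u, v):
    return [ui + vi for ui, vi in zip(u, v)]


def backprop_vectorized(weights, biases, x, y):
    """Vectorized backpropagation for one example; weights[l] is W^(l+1) (0-based)."""
    # Step 1: forward pass, storing z^(l) and a^(l) for every layer.
    a, z = [list(x)], [None]
    for W, b in zip(weights, biases):
        z.append(vec_add(matvec(W, a[-1]), b))
        a.append(sigmoid_vec(z[-1]))
    out = len(a) - 1

    def f_prime(l):
        """f'(z^(l)) element-wise, for the sigmoid."""
        return [s * (1.0 - s) for s in sigmoid_vec(z[l])]

    delta = [None] * len(a)
    # Step 2: delta^(n_l) = -(y - a^(n_l)) • f'(z^(n_l))
    delta[out] = hadamard([-(yi - ai) for yi, ai in zip(y, a[out])], f_prime(out))
    # Step 3: delta^(l) = ((W^(l))^T delta^(l+1)) • f'(z^(l)), hidden layers only
    for l in range(out - 1, 0, -1):
        delta[l] = hadamard(matTvec(weights[l], delta[l + 1]), f_prime(l))
    # Step 4: grad_W^(l) = delta^(l+1) (a^(l))^T, grad_b^(l) = delta^(l+1)
    grads_W = [outer(delta[l + 1], a[l]) for l in range(out)]
    grads_b = [list(delta[l + 1]) for l in range(out)]
    return grads_W, grads_b


if __name__ == "__main__":
    # One input, one sigmoid output, all parameters zero: a = 0.5, f'(z) = 0.25.
    gW, gb = backprop_vectorized([[[0.0]]], [[0.0]], [1.0], [1.0])
    print(gW, gb)  # [[[-0.125]]] [[-0.125]]
```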

Implementation note: In steps 2 and 3 above, we need to compute $f'(z_i^{(l)})$ for each value of $i$. Assuming $f(z)$ is the sigmoid activation function, we would already have $a_i^{(l)}$ stored away from the forward pass through the network. Thus, using the expression that we worked out earlier for $f'(z)$, we can compute this as $f'(z_i^{(l)}) = a_i^{(l)}\left(1 - a_i^{(l)}\right)$.
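A tiny illustration of the shortcut, assuming the sigmoid: the derivative is computed from the cached activations without any further calls to `exp`, and it matches the value obtained by re-evaluating $f$ at $z$. The helper name `sigmoid_prime_from_activation` is hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime_from_activation(a):
    """f'(z) for the sigmoid, computed from the stored activation a = f(z)."""
    return a * (1.0 - a)


z_values = [-2.0, 0.0, 1.5]
activations = [sigmoid(z) for z in z_values]  # cached during the forward pass
cached = [sigmoid_prime_from_activation(a) for a in activations]
recomputed = [sigmoid(z) * (1.0 - sigmoid(z)) for z in z_values]  # calls exp() again
print(cached == recomputed)  # True
```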

Finally, we are ready to describe the full gradient descent algorithm. In the pseudo-code below, $\Delta W^{(l)}$ is a matrix (of the same dimension as $W^{(l)}$), and $\Delta b^{(l)}$ is a vector (of the same dimension as $b^{(l)}$). Note that in this notation, "$\Delta W^{(l)}$" is a matrix, and in particular it isn't "$\Delta$ times $W^{(l)}$." We implement one iteration of batch gradient descent as follows:

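1. Set $\Delta W^{(l)} := 0$, $\Delta b^{(l)} := 0$ (matrix/vector of zeros) for all $l$.
2. For $i = 1$ to $m$,
   - Use backpropagation to compute $\nabla_{W^{(l)}} J(W,b;x^{(i)},y^{(i)})$ and $\nabla_{b^{(l)}} J(W,b;x^{(i)},y^{(i)})$.
   - Set $\Delta W^{(l)} := \Delta W^{(l)} + \nabla_{W^{(l)}} J(W,b;x^{(i)},y^{(i)})$.
   - Set $\Delta b^{(l)} := \Delta b^{(l)} + \nabla_{b^{(l)}} J(W,b;x^{(i)},y^{(i)})$.
3. Update the parameters:

$$\begin{aligned}
W^{(l)} &= W^{(l)} - \alpha\left[\left(\frac{1}{m}\Delta W^{(l)}\right) + \lambda W^{(l)}\right] \\
b^{(l)} &= b^{(l)} - \alpha\left[\frac{1}{m}\Delta b^{(l)}\right]
\end{aligned}$$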
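Putting the pieces together, the following sketch runs steps 1 to 3 on a toy problem. It is a dependency-free illustration, not the notes' implementation: it assumes a sigmoid network stored as nested lists (`weights[l]` is $W^{(l+1)}$, 0-based), computes $f'$ from cached activations, and trains on a hypothetical four-example data set (logical OR) with made-up values for `alpha` and `lam`. All function names are illustrative.

```python
import math
import random


def sigmoid_vec(z):
    return [1.0 / (1.0 + math.exp(-zi)) for zi in z]


def forward(weights, biases, x):
    """Return [a^(1), a^(2), ..., a^(n_l)], with a^(1) = x."""
    acts = [list(x)]
    for W, b in zip(weights, biases):
        z = [sum(w * aj for w, aj in zip(row, acts[-1])) + bi for row, bi in zip(W, b)]
        acts.append(sigmoid_vec(z))
    return acts


def backprop(weights, biases, x, y):
    """Per-example gradients; f'(z) is computed as a * (1 - a) from stored activations."""
    acts = forward(weights, biases, x)
    out = len(acts) - 1
    delta = [-(yi - ai) * ai * (1.0 - ai) for yi, ai in zip(y, acts[out])]
    grads_W, grads_b = [None] * out, [None] * out
    for l in range(out - 1, -1, -1):
        # delta currently holds the error terms of list layer l + 1.
        grads_W[l] = [[di * aj for aj in acts[l]] for di in delta]
        grads_b[l] = list(delta)
        if l > 0:  # propagate the error term one layer back (skip the input layer)
            W = weights[l]
            delta = [sum(W[j][i] * delta[j] for j in range(len(W))) * ai * (1.0 - ai)
                     for i, ai in enumerate(acts[l])]
    return grads_W, grads_b


def overall_cost(weights, biases, data, lam):
    """J(W,b): mean one-half squared error plus (lam / 2) * sum of squared weights."""
    errors = [0.5 * sum((h - yi) ** 2 for h, yi in zip(forward(weights, biases, x)[-1], y))
              for x, y in data]
    decay = 0.5 * lam * sum(w * w for W in weights for row in W for w in row)
    return sum(errors) / len(errors) + decay


def batch_gradient_descent_step(weights, biases, data, alpha, lam):
    """One iteration of batch gradient descent, following steps 1-3."""
    # Step 1: Delta W^(l) := 0, Delta b^(l) := 0 for all l.
    dW = [[[0.0] * len(row) for row in W] for W in weights]
    db = [[0.0] * len(b) for b in biases]
    # Step 2: accumulate the per-example gradients from backpropagation.
    for x, y in data:
        gW, gb = backprop(weights, biases, x, y)
        for l in range(len(weights)):
            for i in range(len(dW[l])):
                db[l][i] += gb[l][i]
                for j in range(len(dW[l][i])):
                    dW[l][i][j] += gW[l][i][j]
    # Step 3: update; weight decay applies to W but not to b.
    m = len(data)
    new_weights = [[[w - alpha * (d / m + lam * w) for w, d in zip(w_row, d_row)]
                    for w_row, d_row in zip(W, D)] for W, D in zip(weights, dW)]
    new_biases = [[bi - alpha * (d / m) for bi, d in zip(b, D)]
                  for b, D in zip(biases, db)]
    return new_weights, new_biases


if __name__ == "__main__":
    rng = random.Random(0)
    sizes = [2, 2, 1]  # hypothetical toy network: 2 inputs, 2 hidden units, 1 output
    weights = [[[rng.gauss(0.0, 0.01) for _ in range(n_in)] for _ in range(n_out)]
               for n_in, n_out in zip(sizes[:-1], sizes[1:])]
    biases = [[rng.gauss(0.0, 0.01) for _ in range(n_out)] for n_out in sizes[1:]]
    # Hypothetical toy data set (logical OR), not from the original notes.
    data = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
            ([1.0, 0.0], [1.0]), ([1.0, 1.0], [1.0])]
    print("initial cost:", overall_cost(weights, biases, data, lam=1e-4))
    for _ in range(500):
        weights, biases = batch_gradient_descent_step(weights, biases, data,
                                                      alpha=1.0, lam=1e-4)
    print("cost after 500 iterations:", overall_cost(weights, biases, data, lam=1e-4))
```

Accumulating into $\Delta W^{(l)}$ and dividing by $m$ only once at the end mirrors the pseudo-code; a vectorized library would typically process the whole batch as one matrix instead.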
