ELK Fundamentals

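Search engines

Indexing component: acquire data --> build documents --> analyze documents --> index documents (inverted index)

Search component: user search interface --> build query (convert what the user typed into a query object that can be processed) --> run the query --> present results

Indexing component: Lucene. Core concepts, with their rough relational-database equivalents:

Index: database

Type: table

Document: row

Mapping:

Lucene handles only document analysis. It does not acquire data or build documents, so another tool must build the documents before Lucene can do its job.

The most important part of document analysis is tokenization: splitting the whole document into individual words.

Search components:

Solr: traditionally runs on a single machine

Elasticsearch: runs distributed (an "elastic" search engine), spread across multiple nodes

A search engine consists of two parts:

1. search: the search component

The user-facing interface. It accepts the user's request, converts it into a form the search algorithm can execute, and returns the results to the user.

2. index: the indexing component

Analyzes and transforms the raw data, restructuring it into a form the search algorithm can search.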

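3. Implementing an inverted index

1. First, build documents from the raw data

1 winter is coming

2 our is the big

3 the pig is big

2. Build an inverted index from the documents

term    freq  documents
winter  1     1
big     2     2,3
is      3     1,2,3
our     1     2

At query time, the keyword is looked up in the inverted index with a hash algorithm, and the IDs of the documents that contain it are returned to the client.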
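The two steps above can be sketched in a few lines of Python. This is a minimal illustration, not how Lucene is implemented: tokenization is a plain whitespace split, and a Python dict (itself a hash table) stands in for the term dictionary. The function names `build_inverted_index` and `search` are made up for this example.

```python
from collections import defaultdict

# The three sample documents from the text, keyed by document ID.
docs = {
    1: "winter is coming",
    2: "our is the big",
    3: "the pig is big",
}

def build_inverted_index(documents):
    """Map each term to the sorted list of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        # Assumption: whitespace tokenization; real analyzers do much more.
        for term in text.lower().split():
            index[term].add(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}

def search(index, keyword):
    """Return the IDs of documents containing the keyword (empty list if none)."""
    return index.get(keyword.lower(), [])

index = build_inverted_index(docs)
print(search(index, "big"))   # [2, 3]
print(search(index, "is"))    # [1, 2, 3]
print(len(index["is"]))       # document frequency of "is": 3
```

The lookups reproduce the `big` and `is` rows of the table: the list length is the frequency column and the list itself is the documents column.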

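Two use cases for ELK:

1. Site-wide log storage and analysis 2. Site-wide search

Differences between ELK and Hadoop:

Hadoop can only do offline (batch) computation

File system: HDFS

Data storage: HBase

Distributed computation: MapReduce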

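Elasticsearch installation and configuration

Edit the relevant configuration

1. Adjust the initial JVM memory allocation in /etc/elasticsearch/jvm.options

2. Sections of the main configuration file, /etc/elasticsearch/elasticsearch.yml

Cluster section: identifies whether a node is a member of the current cluster

Node section: the unique identifier of this node within the cluster

Paths section: where logs and data are stored

Memory section: memory management settings

Network section: network interface settings

Discovery section: the protocols used to determine cluster membership

Gateway section: gateway settings

Various section: other miscellaneous settings

Verify the installation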

[root@wi]# curl -XGET http://192.168.74.128:9200

{

"name" : "192.168.74.128",

"cluster_name" : "myels",

"cluster_uuid" : "Qq0ms0ncQle85Wm27STTHg",

"version" : {

"number" : "5.6.10",

"build_hash" : "b727a60",

"build_date" : "2018-06-06T15:48:34.860Z",

"build_snapshot" : false,

"lucene_version" : "6.6.1"

},

"tagline" : "You Know, for Search"

}

Create an index

[root@192 logs]# curl -XPUT http://192.168.74.128:9200/myindex

{"acknowledged":true,"shards_acknowledged":true,"index":"myindex"}

View the index's shard information

[root@192 logs]# curl -XGET http://192.168.74.128:9200/_cat/shards

myindex 4 p STARTED 0 162b 192.168.74.128 192.168.74.128

myindex 4 r STARTED 0 162b 192.168.74.129 192.168.74.129

myindex 1 r STARTED 0 162b 192.168.74.128 192.168.74.128

myindex 1 p STARTED 0 162b 192.168.74.129 192.168.74.129

myindex 3 r STARTED 0 162b 192.168.74.128 192.168.74.128

myindex 3 p STARTED 0 162b 192.168.74.129 192.168.74.129

myindex 2 p STARTED 0 162b 192.168.74.128 192.168.74.128

myindex 2 r STARTED 0 162b 192.168.74.129 192.168.74.129

myindex 0 p STARTED 0 162b 192.168.74.128 192.168.74.128

myindex 0 r STARTED 0 162b 192.168.74.129 192.168.74.129
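In this output, `p` marks a primary shard and `r` a replica, and each replica sits on a different node from its primary. The sketch below summarizes that per node. It assumes the default `_cat/shards` column order (index, shard, prirep, state, docs, store, ip, node) and uses a few rows copied from the output above. `parse_shards` is a helper invented for this example.

```python
from collections import Counter

# A few rows copied from the _cat/shards output above.
cat_shards = """\
myindex 4 p STARTED 0 162b 192.168.74.128 192.168.74.128
myindex 4 r STARTED 0 162b 192.168.74.129 192.168.74.129
myindex 1 r STARTED 0 162b 192.168.74.128 192.168.74.128
myindex 1 p STARTED 0 162b 192.168.74.129 192.168.74.129
"""

def parse_shards(text):
    """Split each row into the default _cat/shards columns."""
    columns = ["index", "shard", "prirep", "state", "docs", "store", "ip", "node"]
    return [dict(zip(columns, line.split())) for line in text.splitlines() if line.strip()]

rows = parse_shards(cat_shards)

# Count primaries and replicas held by each node.
per_node = Counter((row["node"], row["prirep"]) for row in rows)
for (node, kind), count in sorted(per_node.items()):
    print(node, "primary" if kind == "p" else "replica", count)
```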

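Logstash installation and configuration

Centralizes, forwards, and stores data; highly plugin-driven

1. Input plugins (logs, Redis)

2. Filter plugins

3. Output plugins

Logstash can act as an agent that collects data locally, turns it into documents, and outputs them to Elasticsearch.

Logstash can also act as a server that gathers the data collected by the individual Logstash agents, normalizes the format and turns it into documents in one place, and then sends it to Elasticsearch.

Logstash installs to /usr/share/logstash by default. This directory is not on the system PATH, so the absolute path must be given when starting the service.

IP address database: MaxMind GeoLite2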

[root@ bin]# ./logstash -f /etc/logstash/conf.d/test1.conf

jjjj

{

"@version" => "",

"host" => "192.168.1.4",

"@timestamp" => --19T08::.449Z,

"message" => "jjjj"

}

Logstash configuration file format

input{ } filter { } output{ }

Logstash built-in patterns

less /usr/share/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-4.1./patterns/

input {

file{

start_position => "end"

path => ["/var/log/httpd/access_log"]

}

}

filter {

grok {

match => {"message" => "%{IP:client}" }

}

}

output {

stdout {

codec => rubydebug

}

}

filter {

# Logstash ships with many built-in plugin modules

grok {

match => {"message" => "%{HTTPD_COMBINEDLOG}" }

}

}
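To see what a grok expression such as `%{IP:client}` does, it helps to think of it as a named regular-expression capture that adds a field to the event. The Python sketch below imitates that under simplifying assumptions: the pattern covers IPv4 only and does not range-check octets (grok's real IP pattern is stricter and also handles IPv6), and the log line is a hypothetical sample.

```python
import re

# Simplified stand-in for grok's IP pattern: IPv4 only, no range check on octets.
IPV4 = r"(?:\d{1,3}\.){3}\d{1,3}"

# %{IP:client} roughly becomes a named capture group called "client".
pattern = re.compile(rf"(?P<client>{IPV4})")

# Hypothetical access-log line, used only for illustration.
line = '192.168.74.1 - - [19/Sep/2018:11:39:00 +0800] "GET / HTTP/1.1" 200 612'

event = {"message": line}
match = pattern.search(line)
if match:
    # grok adds the captured fields to the event next to the original message.
    event.update(match.groupdict())

print(event["client"])
```

A composite pattern such as `%{HTTPD_COMBINEDLOG}` works the same way, only with many named captures (client IP, timestamp, request, status, and so on) in one expression.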

Basic configuration file skeleton

input {

file{

start_position => "end"

path => ["/var/log/httpd/access_log"]

}

}

filter {

grok {

match => {"message" => "%{HTTPD_COMBINEDLOG}" }

remove_field => "message"

}

date {

match => ["timestamp","dd/MMM/YYYY:H:m:s Z"]

remove_field => "timestamp"

}

geoip {

source => "clientip"

target => "geoip"

database => "/etc/logstash/geoip/GeoLite2-City.mmdb"

}

}

output {

elasticsearch {

hosts => ["http://192.168.74.128:9200","http://192.168.74.129:9200"]

index => "logstash-%{+YYYY.MM.dd}"

document_type => "apache_logs"

}

}

Logstash collecting logs from a file (the configuration above)
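The `date` filter and the `index => "logstash-%{+YYYY.MM.dd}"` setting work together: the parsed log time becomes `@timestamp`, and the daily index name is formatted from it. Logstash keeps `@timestamp` in UTC, so an event logged shortly after local midnight can land in the previous day's index. A rough Python equivalent follows. It uses `strptime` directives in place of the Joda-style pattern in the config, the sample timestamps are hypothetical, and `%b` assumes an English locale.

```python
from datetime import datetime, timezone

def parse_apache_timestamp(value):
    """Parse an Apache access-log timestamp such as 19/Sep/2018:11:39:00 +0800."""
    return datetime.strptime(value, "%d/%b/%Y:%H:%M:%S %z")

def daily_index_name(ts, prefix="logstash-"):
    """Build the index name the way logstash-%{+YYYY.MM.dd} would, using UTC."""
    return prefix + ts.astimezone(timezone.utc).strftime("%Y.%m.%d")

ts = parse_apache_timestamp("19/Sep/2018:11:39:00 +0800")  # hypothetical sample value
print(ts.astimezone(timezone.utc).isoformat())
print(daily_index_name(ts))
```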

input {

beats {

port =>

}

}

filter {

grok {

match => { "message" => "%{HTTPD_COMBINEDLOG}" }

remove_field => "message"

}

date {

match => ["timestamp","dd/MMM/YYYY:H:m:s Z"]

remove_field => "timestamp"

}

geoip {

source => "clientip"

target => "geoip"

database => "/etc/logstash/geoip/GeoLite2-City.mmdb"

}

}

output {

elasticsearch {

hosts => ["http://192.168.74.128:9200/","http://192.168.74.129:9200/"]

index => "logstash-%{+YYYY.MM.dd}-33"

document_type => "apache_logs"

}

}

Logstash collecting data from Filebeat (the configuration above)

input {

redis {

data_type => "list"

db =>

host => "192.168.74.129"

port =>

key => "filebeat"

password => "food"

}

}

filter {

grok {

match => { "message" => "%{HTTPD_COMBINEDLOG}" }

remove_field => "message"

}

date {

match => ["timestamp","dd/MMM/YYYY:H:m:s Z"]

remove_field => "timestamp"

}

geoip {

source => "clientip"

target => "geoip"

database => "/etc/logstash/geoip/GeoLite2-City.mmdb"

}

}

output {

elasticsearch {

hosts => ["http://192.168.74.128:9200/","http://192.168.74.129:9200/"]

index => "logstash-%{+YYYY.MM.dd}"

document_type => "apache_logs"

}

}

Logstash reading from Redis (the configuration above)

The Logstash server configuration file supports if conditionals

filter {

if [path] =~ "access" {

grok {

match => {"message" => "%{IP:client}" }

}

}

if [geo][city] == "bj" {

}

}

if conditional settings (the example above)
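In Logstash conditionals, `=~` tests a field against a regular expression and `==` tests equality, and nested fields are addressed as `[outer][inner]`. The sketch below mirrors those two checks in Python on a dict-shaped event. The `route` function and the tag names are hypothetical and exist only to make the outcome visible.

```python
import re

def route(event):
    """Mimic two Logstash conditionals: =~ (regex match) and == (equality)."""
    tags = []
    # if [path] =~ "access" { ... }
    if re.search("access", event.get("path", "")):
        tags.append("access_log")
    # if [geo][city] == "bj" { ... }
    if event.get("geo", {}).get("city") == "bj":
        tags.append("beijing")
    return tags

print(route({"path": "/var/log/httpd/access_log", "geo": {"city": "bj"}}))
print(route({"path": "/var/log/httpd/error_log"}))
```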

Filebeat installation and configuration

A reference file with examples of every option Filebeat supports is located at: /etc/filebeat/filebeat.full.yml

#-------------------------- Elasticsearch output ------------------------------
#output.elasticsearch:
  # Array of hosts to connect to.
  #hosts: ["192.168.74.128:9200","192.168.74.129:9200"]

  # Optional protocol and basic auth credentials.
  #protocol: "https"
  #username: "elastic"
  #password: "changeme"

#----------------------------- Logstash output --------------------------------
#output.logstash:
  # The Logstash hosts
  #hosts: ["192.168.74.128:5044"]

  # Optional SSL. By default is off.
  # List of root certificates for HTTPS server verifications
  #ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]

  # Certificate for SSL client authentication
  #ssl.certificate: "/etc/pki/client/cert.pem"

  # Client Certificate Key
  #ssl.key: "/etc/pki/client/cert.key"

#----------- Redis output -------------------
output.redis:
  # Boolean flag to enable or disable the output module.
  enabled: true

  # The list of Redis servers to connect to. If load balancing is enabled, the
  # events are distributed to the servers in the list. If one server becomes
  # unreachable, the events are distributed to the reachable servers only.
  hosts: ["192.168.74.129:6379"]

  # The Redis port to use if hosts does not contain a port number. The default
  # is .
  port:

  # The name of the Redis list or channel the events are published to. The
  # default is filebeat.
  key: filebeat

  # The password to authenticate with. The default is no authentication.
  password: food

  # The Redis database number where the events are published. The default is .
  db:

  # The Redis data type to use for publishing events. If the data type is list,
  # the Redis RPUSH command is used. If the data type is channel, the Redis
  # PUBLISH command is used. The default value is list.
  datatype: list

  # The number of workers to use for each host configured to publish events to
  # Redis. Use this setting along with the loadbalance option. For example, if
  # you have hosts and workers, in total workers are started ( for each
  # host).
  worker:

  # If set to true and multiple hosts or workers are configured, the output
  # plugin load balances published events onto all Redis hosts. If set to false,
  # the output plugin sends all events to only one host (determined at random)
  # and will switch to another host if the currently selected one becomes
  # unreachable. The default value is true.
  loadbalance: true

  # The Redis connection timeout in seconds. The default is seconds.
  timeout: 5s

  # The number of times to retry publishing an event after a publishing failure.
  # After the specified number of retries, the events are typically dropped.
  # Some Beats, such as Filebeat, ignore the max_retries setting and retry until
  # all events are published. Set max_retries to a value less than to retry
  # until all events are published. The default is .
  #max_retries:

  # The maximum number of events to bulk in a single Redis request or pipeline.
  # The default is .
  #bulk_max_size:

  # The URL of the SOCKS5 proxy to use when connecting to the Redis servers. The
  # value must be a URL with a scheme of socks5://.
  #proxy_url:

  # This option determines whether Redis hostnames are resolved locally when
  # using a proxy. The default value is false, which means that name resolution
  # occurs on the proxy server.
  #proxy_use_local_resolver: false

  # Enable SSL support. SSL is automatically enabled, if any SSL setting is set.
  #ssl.enabled: true

  # Configure SSL verification mode. If `none` is configured, all server hosts
  # and certificates will be accepted. In this mode, SSL based connections are
  # susceptible to man-in-the-middle attacks. Use only for testing. Default is
  # `full`.
  #ssl.verification_mode: full

  # List of supported/valid TLS versions. By default all TLS versions 1.0 up to
  # 1.2 are enabled.
  #ssl.supported_protocols: [TLSv1., TLSv1., TLSv1.]

  # Optional SSL configuration options. SSL is off by default.
  # List of root certificates for HTTPS server verifications
  #ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]

  # Certificate for SSL client authentication
  #ssl.certificate: "/etc/pki/client/cert.pem"

  # Client Certificate Key
  #ssl.key: "/etc/pki/client/cert.key"

  # Optional passphrase for decrypting the Certificate Key.
  #ssl.key_passphrase: ''

  # Configure cipher suites to be used for SSL connections
  #ssl.cipher_suites: []

  # Configure curve types for ECDHE based cipher suites
  #ssl.curve_types: []

  # Configure what types of renegotiation are supported. Valid options are
  # never, once, and freely. Default is never.
  #ssl.renegotiation: never

Filebeat shipping data to Redis (the configuration above)
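With `datatype: list`, the Redis key behaves as a simple queue between the two tools: Filebeat appends events with RPUSH (as the comments in the reference file state), and a list-type Logstash `redis` input removes them from the same key. The sketch below imitates that hand-off entirely in memory. `FakeRedisList` is a made-up stand-in, not a Redis client, and the event shape is reduced to a single `message` field for illustration.

```python
import json
from collections import deque

class FakeRedisList:
    """In-memory stand-in for one Redis list key; not a real Redis client."""

    def __init__(self):
        self._items = deque()

    def rpush(self, value):
        # Producer side: append to the tail, like RPUSH.
        self._items.append(value)

    def lpop(self):
        # Consumer side: take from the head, like LPOP; None when empty.
        return self._items.popleft() if self._items else None

queue = FakeRedisList()  # plays the role of the "filebeat" key

# Filebeat side: each event is serialized as JSON and pushed.
for message in ["line 1", "line 2"]:
    queue.rpush(json.dumps({"message": message}))

# Logstash side: drain the list in arrival order.
events = []
while (raw := queue.lpop()) is not None:
    events.append(json.loads(raw))

print([e["message"] for e in events])
```

Because producers push at one end and the consumer pops at the other, events leave the queue in the order they arrived, and the key on both sides (`key: filebeat` in Filebeat, `key => "filebeat"` in Logstash) must match.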

Kibana installation and configuration

Kibana is a standalone web server and can be installed on any host by itself.

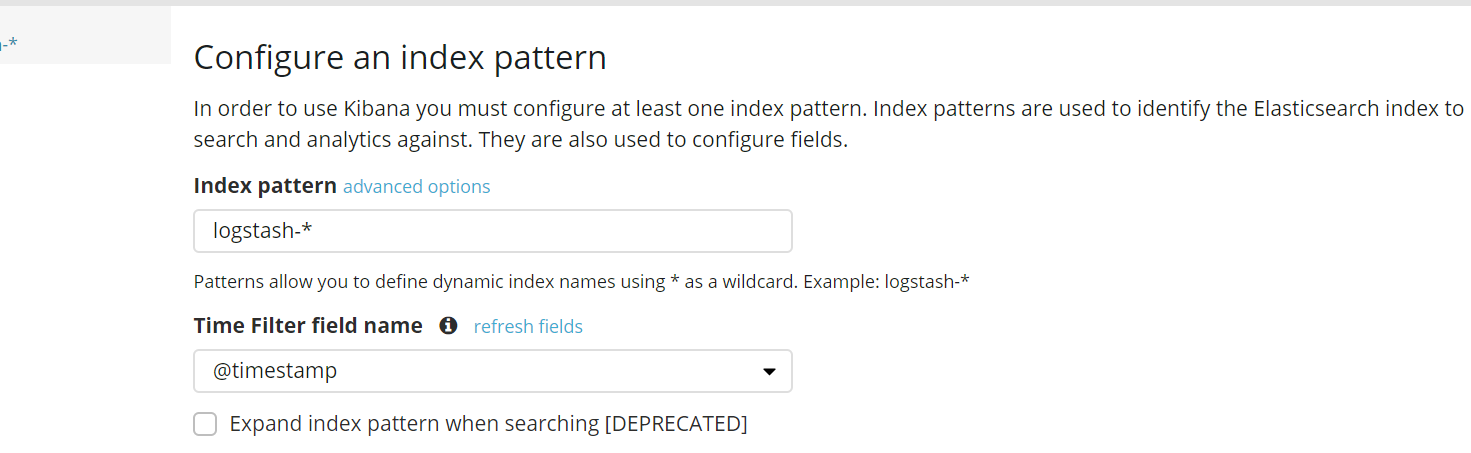

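The first time you open Kibana, you must manually specify which indices (databases) in the Elasticsearch cluster to load. This is done through an index pattern.

@timestamp: the time each record was generated; records are sorted by the value of this field.

Check that the process is running: ps aux

Check that the port is listening: ss -tnl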

Logstash regex matching examples

\[[A-Z ]*\]\[(?<logtime>[-]{,}\/[-]{}\/[-]{} [-]{}\:[-]{}\:[-]{}\.[-]{})\].*\[rid:(?<rid>[a-z0-9A-Z\s.]*),sid:(?<sid>[a-z0-9A-Z\s.]*),uid:(?<uid>[a-z0-9A-Z\s.]*),tid:(?<tid>[a-z0-9A-Z\s.]*),swjg:(?<swjg>[a-z0-9A-Z\s.]*)\] (?:timecost:(?<timecost>[-]*)){,},(?:url:(?<url>(.*?[^,]),)).*

"url": "http://99.13.82.233:8080/api/common/basecode/datamapinvalues,",

\[[A-Z ]*\]\[(?<logtime>[-]{,}\/[-]{}\/[-]{} [-]{}\:[-]{}\:[-]{}\.[-]{})\].*\[rid:(?<rid>[a-z0-9A-Z\s.]*),sid:(?<sid>[a-z0-9A-Z\s.]*),uid:(?<uid>[a-z0-9A-Z\s.]*),tid:(?<tid>[a-z0-9A-Z\s.]*),swjg:(?<swjg>[a-z0-9A-Z\s.]*)\] (?:timecost:(?<timecost>[-]*)){,},(?:url:(?<url>(.*?[^,])),).*

"url": "http://99.13.82.233:8080/api/common/basecode/datamapinvalues",

\[[A-Z ]*\]\[(?<logtime>[-]{,}\/[-]{}\/[-]{} [-]{}\:[-]{}\:[-]{}\.[-]{})\].*\[rid:(?<rid>[a-z0-9A-Z\s.]*),sid:(?<sid>[a-z0-9A-Z\s.]*),uid:(?<uid>[a-z0-9A-Z\s.]*),tid:(?<tid>[a-z0-9A-Z\s.]*),swjg:(?<swjg>[a-z0-9A-Z\s.]*)\] (?:timecost:(?<timecost>[-]*)){,},(((?:resturl):(?<resturl>(.*?[^,])),)|((?:url):(?<url>(.*?[^,])),)).*

"url": "http://99.13.82.233:8080/api/common/basecode/datamapinvalues",

"resturl": "http://99.13.82.233:8080/api/common/basecode/datamapinvalues",

\[[A-Z ]*\]\[(?<logtime>[-]{,}\/[-]{}\/[-]{} [-]{}\:[-]{}\:[-]{}\.[-]{})\].*\[rid:(?<rid>[a-z0-9A-Z\s.]*),sid:(?<sid>[a-z0-9A-Z\s.]*),uid:(?<uid>[a-z0-9A-Z\s.]*),tid:(?<tid>[a-z0-9A-Z\s.]*),swjg:(?<swjg>[a-z0-9A-Z\s.]*)\] (?:timecost:(?<timecost>[-]*)){,},(((?:resturl):(?<resturl>(.*?[^,])),)|((?:url):(?<url>(.*?[^,])),)|.*).*

"url": "http://99.13.82.233:8080/api/common/basecode/datamapinvalues",

"resturl": "http://99.13.82.233:8080/api/common/basecode/datamapinvalues",

If neither url nor resturl is present:

{

"uid": "b1133",

"swjg": "3232.2",

"rid": "",

"logtime": "2018/09/19 11:39:00.098",

"tid": "nh3211111.2",

"timecost": "",

"sid": ""

}
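The same idea can be tried outside Logstash with Python's `re` module, which writes named groups as `(?P<name>...)` rather than `(?<name>...)`. The sketch below is an approximation under stated assumptions, not a copy of the patterns above: the digit counts in `logtime` are inferred from the sample value `2018/09/19 11:39:00.098`, `timecost` is assumed to be numeric, and both log lines are hypothetical, built from the field values in the output shown above.

```python
import re

# Assumption: digit counts inferred from the sample logtime value in the text.
LOG_PATTERN = re.compile(
    r"\[[A-Z ]*\]"
    r"\[(?P<logtime>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}\.\d{3})\]"
    r".*\[rid:(?P<rid>[a-zA-Z0-9\s.]*),sid:(?P<sid>[a-zA-Z0-9\s.]*),"
    r"uid:(?P<uid>[a-zA-Z0-9\s.]*),tid:(?P<tid>[a-zA-Z0-9\s.]*),"
    r"swjg:(?P<swjg>[a-zA-Z0-9\s.]*)\] "
    r"(?:timecost:(?P<timecost>\d*))?,"
    # Optional trailing field: either resturl or url, captured without the comma.
    r"(?:(?:resturl:(?P<resturl>[^,]+),)|(?:url:(?P<url>[^,]+),))?"
)

def parse(line):
    """Return the captured fields, dropping groups that did not take part in the match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    return {name: value for name, value in match.groupdict().items() if value is not None}

# Hypothetical log lines assembled from the field values shown in the text.
prefix = "[INFO ][2018/09/19 11:39:00.098] handler [rid:,sid:,uid:b1133,tid:nh3211111.2,swjg:3232.2] timecost:,"
with_url = prefix + "url:http://99.13.82.233:8080/api/common/basecode/datamapinvalues,rest"
without_url = prefix + "rest"

print(parse(with_url)["url"])
print(sorted(parse(without_url)))
```

Capturing the URL as `[^,]+` and placing the comma outside the group is what keeps the trailing comma out of the field, and making the whole `url`/`resturl` alternative optional is what lets lines without either field still match.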
