深度增强学习--A3C

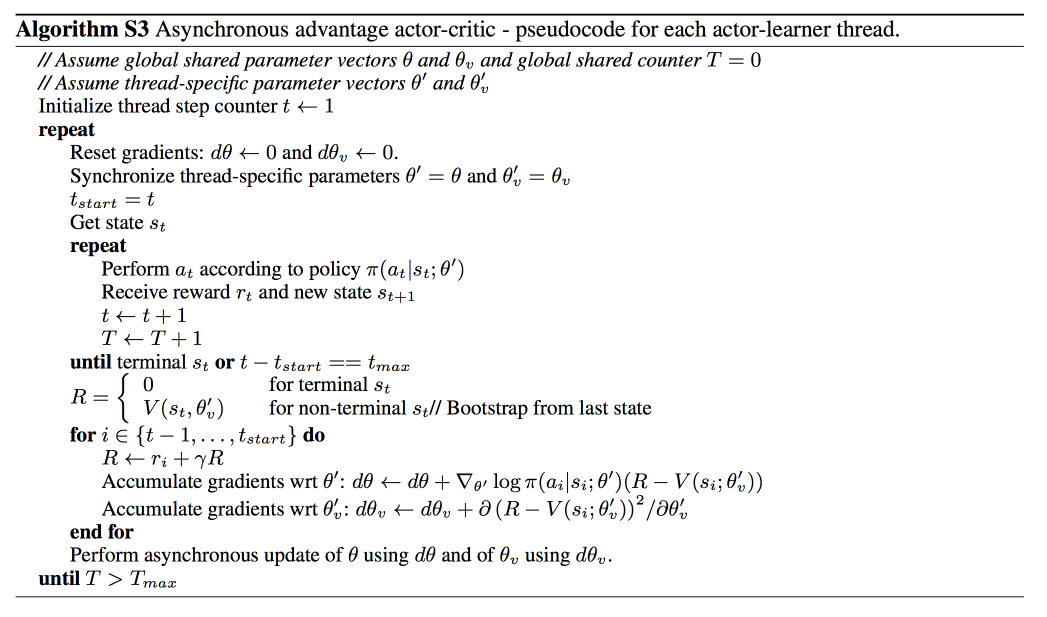

它会创建多个并行的环境, 让多个拥有副结构的 agent 同时在这些并行环境上更新主结构中的参数. 并行中的 agent 们互不干扰, 而主结构的参数更新受到副结构提交更新的不连续性干扰, 所以更新的相关性被降低, 收敛性提高

import threading

import numpy as np

import tensorflow as tf

import pylab

import time

import gym

from keras.layers import Dense, Input

from keras.models import Model

from keras.optimizers import Adam

from keras import backend as K # global variables for threading

episode = 0

scores = [] EPISODES = 2000 # This is A3C(Asynchronous Advantage Actor Critic) agent(global) for the Cartpole

# In this example, we use A3C algorithm

class A3CAgent:

def __init__(self, state_size, action_size, env_name):

# get size of state and action

self.state_size = state_size

self.action_size = action_size # get gym environment name

self.env_name = env_name # these are hyper parameters for the A3C

self.actor_lr = 0.001

self.critic_lr = 0.001

self.discount_factor = .99

self.hidden1, self.hidden2 = 24, 24

self.threads = 8 #8个线程并行 # create model for actor and critic network

self.actor, self.critic = self.build_model() # method for training actor and critic network

self.optimizer = [self.actor_optimizer(), self.critic_optimizer()] self.sess = tf.InteractiveSession()

K.set_session(self.sess)

self.sess.run(tf.global_variables_initializer()) # approximate policy and value using Neural Network

# actor -> state is input and probability of each action is output of network

# critic -> state is input and value of state is output of network

# actor and critic network share first hidden layer

def build_model(self):

state = Input(batch_shape=(None, self.state_size))

shared = Dense(self.hidden1, input_dim=self.state_size, activation='relu', kernel_initializer='glorot_uniform')(state) actor_hidden = Dense(self.hidden2, activation='relu', kernel_initializer='glorot_uniform')(shared)

action_prob = Dense(self.action_size, activation='softmax', kernel_initializer='glorot_uniform')(actor_hidden) value_hidden = Dense(self.hidden2, activation='relu', kernel_initializer='he_uniform')(shared)

state_value = Dense(1, activation='linear', kernel_initializer='he_uniform')(value_hidden) actor = Model(inputs=state, outputs=action_prob)

critic = Model(inputs=state, outputs=state_value) actor._make_predict_function()

critic._make_predict_function() actor.summary()

critic.summary() return actor, critic # make loss function for Policy Gradient

# [log(action probability) * advantages] will be input for the back prop

# we add entropy of action probability to loss

def actor_optimizer(self):

action = K.placeholder(shape=(None, self.action_size))

advantages = K.placeholder(shape=(None, )) policy = self.actor.output good_prob = K.sum(action * policy, axis=1)

eligibility = K.log(good_prob + 1e-10) * K.stop_gradient(advantages)

loss = -K.sum(eligibility) entropy = K.sum(policy * K.log(policy + 1e-10), axis=1) actor_loss = loss + 0.01*entropy optimizer = Adam(lr=self.actor_lr)

updates = optimizer.get_updates(self.actor.trainable_weights, [], actor_loss)

train = K.function([self.actor.input, action, advantages], [], updates=updates)

return train # make loss function for Value approximation

def critic_optimizer(self):

discounted_reward = K.placeholder(shape=(None, )) value = self.critic.output loss = K.mean(K.square(discounted_reward - value)) optimizer = Adam(lr=self.critic_lr)

updates = optimizer.get_updates(self.critic.trainable_weights, [], loss)

train = K.function([self.critic.input, discounted_reward], [], updates=updates)

return train # make agents(local) and start training

def train(self):

# self.load_model('./save_model/cartpole_a3c.h5')

agents = [Agent(i, self.actor, self.critic, self.optimizer, self.env_name, self.discount_factor,

self.action_size, self.state_size) for i in range(self.threads)]#建立8个local agent for agent in agents:

agent.start() while True:

time.sleep(20) plot = scores[:]

pylab.plot(range(len(plot)), plot, 'b')

pylab.savefig("./save_graph/cartpole_a3c.png") self.save_model('./save_model/cartpole_a3c.h5') def save_model(self, name):

self.actor.save_weights(name + "_actor.h5")

self.critic.save_weights(name + "_critic.h5") def load_model(self, name):

self.actor.load_weights(name + "_actor.h5")

self.critic.load_weights(name + "_critic.h5") # This is Agent(local) class for threading

class Agent(threading.Thread):

def __init__(self, index, actor, critic, optimizer, env_name, discount_factor, action_size, state_size):

threading.Thread.__init__(self) self.states = []

self.rewards = []

self.actions = [] self.index = index

self.actor = actor

self.critic = critic

self.optimizer = optimizer

self.env_name = env_name

self.discount_factor = discount_factor

self.action_size = action_size

self.state_size = state_size # Thread interactive with environment

def run(self):

global episode

env = gym.make(self.env_name)

while episode < EPISODES:

state = env.reset()

score = 0

while True:

action = self.get_action(state)

next_state, reward, done, _ = env.step(action)

score += reward self.memory(state, action, reward) state = next_state if done:

episode += 1

print("episode: ", episode, "/ score : ", score)

scores.append(score)

self.train_episode(score != 500)

break # In Policy Gradient, Q function is not available.

# Instead agent uses sample returns for evaluating policy

def discount_rewards(self, rewards, done=True):

discounted_rewards = np.zeros_like(rewards)

running_add = 0

if not done:

running_add = self.critic.predict(np.reshape(self.states[-1], (1, self.state_size)))[0]

for t in reversed(range(0, len(rewards))):

running_add = running_add * self.discount_factor + rewards[t]

discounted_rewards[t] = running_add

return discounted_rewards # save <s, a ,r> of each step

# this is used for calculating discounted rewards

def memory(self, state, action, reward):

self.states.append(state)

act = np.zeros(self.action_size)

act[action] = 1

self.actions.append(act)

self.rewards.append(reward) # update policy network and value network every episode

def train_episode(self, done):

discounted_rewards = self.discount_rewards(self.rewards, done) values = self.critic.predict(np.array(self.states))

values = np.reshape(values, len(values)) advantages = discounted_rewards - values self.optimizer[0]([self.states, self.actions, advantages])

self.optimizer[1]([self.states, discounted_rewards])

self.states, self.actions, self.rewards = [], [], [] def get_action(self, state):

policy = self.actor.predict(np.reshape(state, [1, self.state_size]))[0]

return np.random.choice(self.action_size, 1, p=policy)[0] if __name__ == "__main__":

env_name = 'CartPole-v1'

env = gym.make(env_name) state_size = env.observation_space.shape[0]

action_size = env.action_space.n env.close() global_agent = A3CAgent(state_size, action_size, env_name)

global_agent.train()

深度增强学习--A3C的更多相关文章

- 深度增强学习--DPPO

PPO DPPO介绍 PPO实现 代码DPPO

- 深度增强学习--DDPG

DDPG DDPG介绍2 ddpg输出的不是行为的概率, 而是具体的行为, 用于连续动作 (continuous action) 的预测 公式推导 推导 代码实现的gym的pendulum游戏,这个游 ...

- 深度增强学习--DQN的变形

DQN的变形 double DQN prioritised replay dueling DQN

- 深度增强学习--Actor Critic

Actor Critic value-based和policy-based的结合 实例代码 import sys import gym import pylab import numpy as np ...

- 深度增强学习--Policy Gradient

前面都是value based的方法,现在看一种直接预测动作的方法 Policy Based Policy Gradient 一个介绍 karpathy的博客 一个推导 下面的例子实现的REINFOR ...

- 深度增强学习--Deep Q Network

从这里开始换个游戏演示,cartpole游戏 Deep Q Network 实例代码 import sys import gym import pylab import random import n ...

- 常用增强学习实验环境 II (ViZDoom, Roboschool, TensorFlow Agents, ELF, Coach等) (转载)

原文链接:http://blog.csdn.net/jinzhuojun/article/details/78508203 前段时间Nature上发表的升级版Alpha Go - AlphaGo Ze ...

- 马里奥AI实现方式探索 ——神经网络+增强学习

[TOC] 马里奥AI实现方式探索 --神经网络+增强学习 儿时我们都曾有过一个经典游戏的体验,就是马里奥(顶蘑菇^v^),这次里约奥运会闭幕式,日本作为2020年东京奥运会的东道主,安倍最后也已经典 ...

- 增强学习 | AlphaGo背后的秘密

"敢于尝试,才有突破" 2017年5月27日,当今世界排名第一的中国棋手柯洁与AlphaGo 2.0的三局对战落败.该事件标志着最新的人工智能技术在围棋竞技领域超越了人类智能,借此 ...

随机推荐

- Python+Selenium 自动化实现实例-Css捕捉元素的几种方法

#coding=utf-8 from selenium import webdriverimport timedriver = webdriver.Chrome()driver.get("h ...

- LeetCode解题报告—— N-Queens && Edit Distance

1. N-Queens The n-queens puzzle is the problem of placing n queens on an n×n chessboard such that no ...

- TP-LINK路由器设置内网的一台电脑在外网可以远程操控

1.[IP和MAC绑定]--[静态ARP绑定设置]对MAC和IP进行绑定 2.[转发规则]--[DMZ主机],选择启用并把刚才设置的内网IP填入 3.直接访问路由器的外网IP就可以直接访问绑定的MAC ...

- 追忆似水流年sed

sed是一种非交互式的流编辑器,默认情况下并不会修改源文件内容,只是把命令的结果输出处理单位:行功能:查找替换.添加.插入.删除适用场景:常规编辑器难以胜任的文本:大文件(几百兆):有规律的文本修改操 ...

- 对TDD原则的理解

1,在编写好失败的单元测试之前,不要编写任何产品代码 如果不先写测试,那么各个函数就会耦合在一起,最后变得无法测试 如果后写测试,你也许能对大块大块的代码进行测试,但是无法对每个函数进行测 ...

- phpstorm如何进行文件或者文件夹重命名

1.phpstorm的重构 1.1重命名 在phpstorm中,右键点击我们要进行修改的文件,然后又一项重构,我们就可以进行对文件的重命名. 接下来点击重命名进行文件或者文件夹的重新命名. 在框中输入 ...

- Spring源码阅读入门指引

本文大概的对IOC和AOP进行了解,入门先到这一点便已经有了大概的印象了,详细内容请看下文. AD: 本文说明2点: 1.阅读源码的入口在哪里? 2.入门前必备知识了解:IOC和AOP 一.我们从哪里 ...

- 树形dp入门(poj 2342 Anniversary party)

题意: 某公司要举办一次晚会,但是为了使得晚会的气氛更加活跃,每个参加晚会的人都不希望在晚会中见到他的直接上司,现在已知每个人的活跃指数和上司关系(当然不可能存在环),求邀请哪些人(多少人)来能使得晚 ...

- BZOJ 1877 [SDOI2009]晨跑(多条不交叉最短路)

[题目链接] http://www.lydsy.com/JudgeOnline/problem.php?id=1877 [题目大意] 找出最多有几条点不重复的从1到N的路,并且要求在满足这个条件的情况 ...

- 【最大权森林/Kruskal】POJ3723-Conscription

[题目大意] 招募m+n个人每人需要花费$10000,给出一些关系,征募某个人的费用是原价-已招募人中和他亲密值的最大值,求最小费用. [思路] 人与人之间的亲密值越大,花费越少,即求出最大权森林,可 ...