DeepLearning - Forard & Backward Propogation

In the previous post I go through basic 1-layer Neural Network with sigmoid activation function, including

How to get sigmoid function from a binary classification problem?

NN is still an optimization problem, so what's the target to optimize? - cost function

How does model learn?- gradient descent

Work flow of NN? - Backward/Forward propagation

Now let's get deeper to 2-layers Neural Network, from where you can have as many hidden layers as you want. Also let's try to vectorize everything.

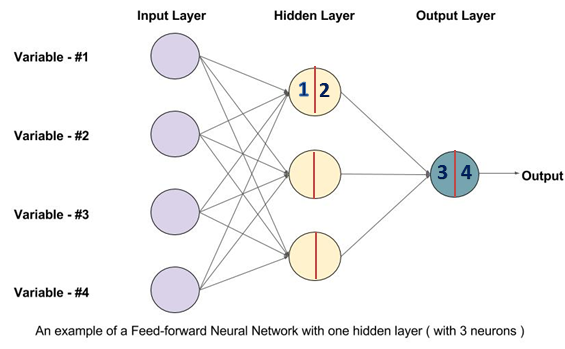

1. The architecture of 2-layers shallow NN

Below is the architecture of 2-layers NN, including input layer, one hidden layer and one output layer. The input layer is not counted.

(1) Forward propagation

In each neuron, there are 2 activities going on after take in the input from previous hidden layer:

- a linear transformation of the input

- a non-linear activation function applied after

Then the ouput will pass to the next hidden layer as input.

From input layer to output layer, do above computation layer by layer is forward propagation. It tries to map each input \(x \in R^n\) to $ y$.

For each training sample, the forward propagation is defined as following:

\(x \in R^{n*1}\) denotes the input data. In the picture n = 4.

\((w^{[1]} \in R^{k*n},b^{[1]}\in R^{k*1})\) is the parameter in the first hidden layer. Here k = 3.

\((w^{[2]} \in R^{1*k},b^{[2]}\in R^{1*1})\) is the parameter in the output layer. The output is a binary variable with 1 dimension.

\((z^{[1]} \in R^{k*1},z^{[2]}\in R^{1*1})\) is the intermediate output after linear transformation in the hidden and output layer.

\((a^{[1]} \in R^{k*1},a^{[2]}\in R^{1*1})\) is the output from each layer. To make it more generalize we can use \(a^{[0]} \in R^n\) to denote \(x\)

*Here we use \(g(x)\) as activation function for hidden layer, and sigmoid \(\sigma(x)\) for output layer. we will discuss what are the available activation functions \(g(x)\) out there in the following post. What happens in forward propagation is following:

\([1]\) \(z^{[1]} = {w^{[1]}} a^{[0]} + b^{[1]}\)

\([2]\) \(a^{[1]} = g((z^{[1]} ) )\)

\([3]\) \(z^{[2]} = {w^{[2]}} a^{[1]} + b^{[2]}\)

\([4]\) \(a^{[2]} = \sigma(z^{[2]} )\)

(2) Backward propagation

After forward propagation, for each training sample \(x\) is done ,we will have a prediction \(\hat{y}\). Comparing \(\hat{y}\) with \(y\), we then use the error between prediction and real value to update the parameter via gradient descent.

Backward propagation is passing the gradient descent from output layer back to input layer using chain rule like below. The deduction is in the previous post.

\[ \frac{\partial L(a,y)}{\partial w} =

\frac{\partial L(a,y)}{\partial a} \cdot

\frac{\partial a}{\partial z} \cdot

\frac{\partial z}{\partial w}\]

\([4]\) \(dz^{[2]} = a^{[2]} - y\)

\([3]\) \(dw^{[2]} = dz^{[2]} a^{[1]T}\)

\([3]\) \(db^{[2]} = dz^{[2]}\)

\([2]\) \(dz^{[1]} = da^{[1]} * g^{[1]'}(z[1]) = w^{[2]T} dz^{[2]}* g^{[1]'}(z[1])\)

\([1]\) \(dw^{[1]} = dz^{[1]} a^{[0]T}\)

\([1]\) \(db^{[1]} = dz^{[1]}\)

2. Vectorize and Generalize your NN

Let's derive the vectorize representation of the above forward and backward propagation. The usage of vector is to speed up the computation. We will talk about this again in batch gradient descent.

\(w^{[1]},b^{[1]}, w^{[2]}, b^{[2]}\) stays the same. Generally \(w^{[i]}\) has dimension \((h_{i},h_{i-1})\) and \(b^{[i]}\) has dimension \((h_{i},1)\)

\(Z^{[1]} \in R^{k*m}, Z^{[2]} \in R^{1*m}, A^{[0]} \in R^{n*m}, A^{[1]} \in R^{k*m}, A^{[2]}\in R^{1*m}\) where \(A^{[0]}\)is the input vector, each column is one training sample.

(1) Forward propogation

Follow above logic, vectorize representation is below:

\([1]\) \(Z^{[1]} = {w^{[1]}} A^{[0]} + b^{[1]}\)

\([2]\) \(A^{[1]} = g((Z^{[1]} ) )\)

\([3]\) \(Z^{[2]} = {w^{[2]}} A^{[1]} + b^{[2]}\)

\([4]\) \(A^{[2]} = \sigma(Z^{[2]} )\)

Have you noticed that the dimension above is not a exact matched?

\({w^{[1]}} A^{[0]}\) has dimension \((k,m)\), \(b^{[1]}\) has dimension \((k,1)\).

However Python will take care of this for you with Broadcasting. Basically it will replicate the lower dimension to the higher dimension. Here \(b^{[1]}\) will be replicated m times to become \((k,m)\)

(1) Backward propogation

Same as above, backward propogation will be:

\([4]\) \(dZ^{[2]} = A^{[2]} - Y\)

\([3]\) \(dw^{[2]} =\frac{1}{m} dZ^{[2]} A^{[1]T}\)

\([3]\) \(db^{[2]} = \frac{1}{m} \sum{dZ^{[2]}}\)

\([2]\) \(dZ^{[1]} = dA^{[1]} * g^{[1]'}(z[1]) = w^{[2]T} dZ^{[2]}* g^{[1]'}(z[1])\)

\([1]\) \(dw^{[1]} = \frac{1}{m} dZ^{[1]} A^{[0]T}\)

\([1]\) \(db^{[1]} = \frac{1}{m} \sum{dZ^{[1]} }\)

In the next post, I will talk about some other details in NN, like hyper parameter, activation function.

To be continued.

Reference

- Ian Goodfellow, Yoshua Bengio, Aaron Conrville, "Deep Learning"

- Deeplearning.ai https://www.deeplearning.ai/

DeepLearning - Forard & Backward Propogation的更多相关文章

- Deeplearning - Overview of Convolution Neural Network

Finally pass all the Deeplearning.ai courses in March! I highly recommend it! If you already know th ...

- DeepLearning - Regularization

I have finished the first course in the DeepLearnin.ai series. The assignment is relatively easy, bu ...

- Coursera机器学习+deeplearning.ai+斯坦福CS231n

日志 20170410 Coursera机器学习 2017.11.28 update deeplearning 台大的机器学习课程:台湾大学林轩田和李宏毅机器学习课程 Coursera机器学习 Wee ...

- DeepLearning - Overview of Sequence model

I have had a hard time trying to understand recurrent model. Compared to Ng's deep learning course, ...

- back propogation 的线代描述

参考资料: 算法部分: standfor, ufldl : http://ufldl.stanford.edu/wiki/index.php/UFLDL_Tutorial 一文弄懂BP:https: ...

- DeepLearning Intro - sigmoid and shallow NN

This is a series of Machine Learning summary note. I will combine the deep learning book with the de ...

- 用纯Python实现循环神经网络RNN向前传播过程(吴恩达DeepLearning.ai作业)

Google TensorFlow程序员点赞的文章! 前言 目录: - 向量表示以及它的维度 - rnn cell - rnn 向前传播 重点关注: - 如何把数据向量化的,它们的维度是怎么来的 ...

- 吴恩达DeepLearning.ai的Sequence model作业Dinosaurus Island

目录 1 问题设置 1.1 数据集和预处理 1.2 概览整个模型 2. 创建模型模块 2.1 在优化循环中梯度裁剪 2.2 采样 3. 构建语言模型 3.1 梯度下降 3.2 训练模型 4. 结论 ...

- Sql Server 聚集索引扫描 Scan Direction的两种方式------FORWARD 和 BACKWARD

最近发现一个分页查询存储过程中的的一个SQL语句,当聚集索引列的排序方式不同的时候,效率差别达到数十倍,让我感到非常吃惊 由此引发出来分页查询的情况下对大表做Clustered Scan的时候, 不同 ...

随机推荐

- 【js】深拷贝和浅拷贝区别,以及实现深拷贝的方式

一.区别:简单点来说,就是假设B复制了A,当修改A时,看B是否会发生变化,如果B也跟着变了,说明这是浅拷贝,如果B没变,那就是深拷贝. 此篇文章中也会简单阐述到栈堆,基本数据类型与引用数据类型,因为这 ...

- MYSQL 8.0.11 安装过程及 Navicat 链接时遇到的问题

参考博客:https://blog.csdn.net/WinstonLau/article/details/78666423 我的系统和软件版本是这样的: 系统环境:win7.64位 MySQL版本: ...

- form表单的一个页面多个上传按钮实例

/* * 图片上传 */ @RequestMapping("/uploadFile") @ResponseBody public String uploadFile(@Reques ...

- operator.attrgetter() 进行对象排序

## 使用operator.attrgetter() 进行对象排序 from operator import attrgetter class Student: def __init__(self, ...

- 我的Tmux学习笔记

0. 修改指令前缀 // ~/.tmux.conf ubind C-b set -g prefix C-a 1. 新建会话 tmux tmux new -s session-name // 可以设置会 ...

- Xshell配色方案推荐

使用方法: 新建mycolor.xcs文件 复制粘贴如下代码,将文件导入,修改自己喜欢的字体即可 [mycolor] text=00ff80 cyan(bold)=00ffff text(bold)= ...

- MAVLink功能开发,移植教程。

MAVLink功能开发 -----------------本文由"智御电子"提供,同时提供视频移植教程,以便电子爱好者交流学习.---------------- 1.MAVLink ...

- linux 通过 openconnect 来连接学校内网

参考 http://xingda1989.iteye.com/blog/1969908 https://blog.csdn.net/edin_blackpoint/article/details/70 ...

- HTTPS相关知识以及在golang中的应用

最近简单学习了HTTPS,并在golang中实践了一下,现在把学到的知识记录下来,方便以后查看,如果有幸能帮到有需要的人就更好了,如果有错误欢迎留言指出. 一些简单的概念,可以自行百度百科 HTTPS ...

- R语言爬虫:爬取百度百科词条

抓取目标:抓取花儿与少年的百度百科中成员信息 url <- "http://baike.baidu.com/item/%E8%8A%B1%E5%84%BF%E4%B8%8E%E5%B0 ...