Optimal Value Functions and Optimal Policy

Optimal Value Function is how much reward the best policy can get from a state s, which is the best senario given state s. It can be defined as:

Value Function and Optimal State-Value Function

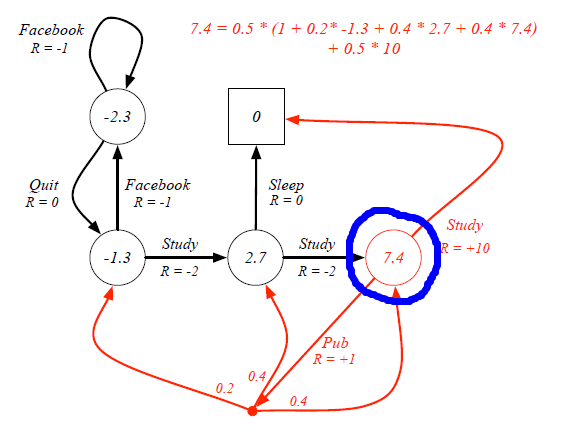

Let's see firstly compare Value Function with Optimal Value Function. For example, in the student study case, the value function for the blue circle state under 50:50 policy is 7.4.

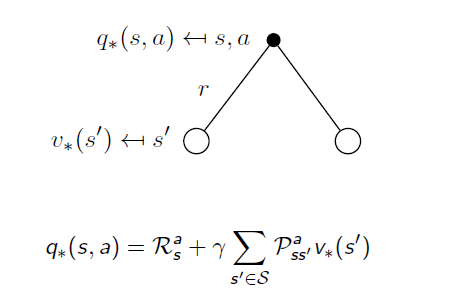

However, when we consider the Optimal State-Value function, 'branches' that may prevent us from getting the best scores are proned. For instance, the optimal senario for the blue circle state is having 100% probability to continue his study rather than going to pub.

Optimal Action-Value Function

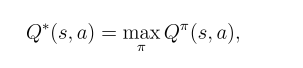

Then we move to Action-Value Function, and the following equation also reveals the Optimal Action-Value Function is from the policy who gives the best Action Returns.

The Optimal Action-Value Function is strongly related to Optimal State-Value Function by:

The equation means when action a is taken at state s, what the best return is. At this condition, the probability of reaching each state and the immediate reward is determined, so the only variable is the State-Value function . Therefore it is obvious that obtaining the Optimal State-Value function is equivalent to holding the Optimal Action-Value Function.

Conversely, the Optimal State-Value function is the best combination of Action and the following states with Optimal State-value Functions:

Still in the student example, when we know the Optimal State-Value Function, the Optimal Action-Value Function can be calculated as:

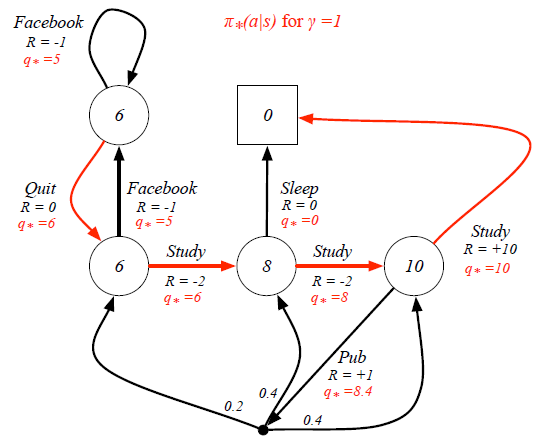

Finally we can derive the best policy from the Optimal Action-Value Function:

This means the policy only picks up the best action at every state rather than having a probability distribution. This deterministic policy is the goal of Reinforcement Learning, as it will guide the action to complete the task.

Optimal Value Functions and Optimal Policy的更多相关文章

- Reinforcement Learning: An Introduction读书笔记(3)--finite MDPs

> 目 录 < Agent–Environment Interface Goals and Rewards Returns and Episodes Policies and Val ...

- Machine Learning——吴恩达机器学习笔记(酷

[1] ML Introduction a. supervised learning & unsupervised learning 监督学习:从给定的训练数据集中学习出一个函数(模型参数), ...

- RL_Learning

Key Concepts in RL 标签(空格分隔): RL_learning OpenAI Spinning Up原址 states and observations (状态和观测) action ...

- Massively parallel supercomputer

A novel massively parallel supercomputer of hundreds of teraOPS-scale includes node architectures ba ...

- Factoextra R Package: Easy Multivariate Data Analyses and Elegant Visualization

factoextra is an R package making easy to extract and visualize the output of exploratory multivaria ...

- 深度学习课程笔记(七):模仿学习(imitation learning)

深度学习课程笔记(七):模仿学习(imitation learning) 2017.12.10 本文所涉及到的 模仿学习,则是从给定的展示中进行学习.机器在这个过程中,也和环境进行交互,但是,并没有显 ...

- DP Intro - OBST

http://radford.edu/~nokie/classes/360/dp-opt-bst.html Overview Optimal Binary Search Trees - Problem ...

- [C5] Andrew Ng - Structuring Machine Learning Projects

About this Course You will learn how to build a successful machine learning project. If you aspire t ...

- Reinforcement Learning Index Page

Reinforcement Learning Posts Step-by-step from Markov Property to Markov Decision Process Markov Dec ...

随机推荐

- 洛谷 - P4567 - 文本编辑器 - 无旋Treap

https://www.luogu.org/problem/P4567 事实证明无旋Treap是不是不可能会比Splay快? #include<bits/stdc++.h> using n ...

- HDOJ 1150 Machine Schedule

版权声明:来自: 码代码的猿猿的AC之路 http://blog.csdn.net/ck_boss https://blog.csdn.net/u012797220/article/details/3 ...

- 使用IP在局域网内访问System.Net.HttpListenerException:“拒绝访问。”

记录一下,自己写的程序之前运行没有遇到这个问题,突然遇到这个问题,找了一圈没有找到有效的解决方案,到最后发现,以管理员身份运行程序即可.简单记录一下. 还有就是 .UseUrls("http ...

- HTML拖放元素

实现来回拖放图片 <!DOCTYPE HTML> <html> <title>来回拖放元素</title> <meta charset=" ...

- 03机器学习实战之决策树scikit-learn实现

sklearn.tree.DecisionTreeClassifier 基于 scikit-learn 的决策树分类模型 DecisionTreeClassifier 进行的分类运算 http://s ...

- cf2c(模拟退火 步长控制

https://www.luogu.org/problem/CF2C 题意:在平面上有三个没有公共部分的圆,求平面上一点使得到三个圆的切线的夹角相等.(若没答案满足条件,则不打印 思路:可用模拟退火算 ...

- uiautomatorviewer不能直接截取手机屏幕信息

本身可以用sdk——>tools里自带的ui automator viewer截取如果截取不了,采用以下方法: 新建一个文本文档,名字自己起如uni.bat(注意把后缀给改成.bat) adb ...

- uboot学习之五-----uboot如何启动Linux内核

uboot和内核到底是什么?uboot实质就是一个复杂的裸机程序:uboot可以被配置也可以做移植: 操作系统内核本身就是一个裸机程序,和我们学的uboot和其他裸机程序没有本质的区别:区别就是我们操 ...

- Codeforces 图论题板刷(2000~2400)

前言 首先先刷完这些在说 题单 25C Roads in Berland 25D Roads not only in Berland 9E Interestring graph and Apples ...

- 函数柯里化(Currying)小实践

什么是函数柯里化 在计算机科学中,柯里化(Currying)是把接受多个参数的函数变换成接受一个单一参数(最初函数的第一个参数)的函数,并且返回接受余下的参数且返回结果的新函数的技术.这个技术由 Ch ...