Machine learning 第8周编程作业 K-means and PCA

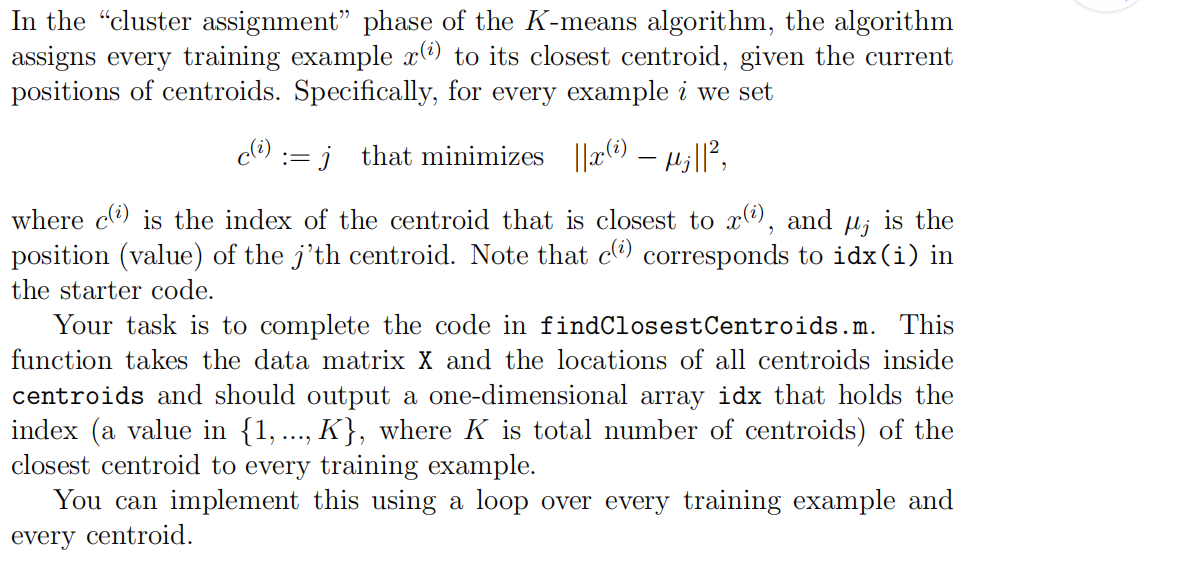

1.findClosestCentroids

function idx = findClosestCentroids(X, centroids)

%FINDCLOSESTCENTROIDS computes the centroid memberships for every example

% idx = FINDCLOSESTCENTROIDS (X, centroids) returns the closest centroids

% in idx for a dataset X where each row is a single example. idx = m x 1

% vector of centroid assignments (i.e. each entry in range [1..K])

% % Set K

K = size(centroids, 1); % You need to return the following variables correctly.

idx = zeros(size(X,1), 1); % ====================== YOUR CODE HERE ======================

% Instructions: Go over every example, find its closest centroid, and store

% the index inside idx at the appropriate location.

% Concretely, idx(i) should contain the index of the centroid

% closest to example i. Hence, it should be a value in the

% range 1..K

%

% Note: You can use a for-loop over the examples to compute this.

% for i=1:size(X,1),

for j=1:K,

dis(j)=sum( (centroids(j,:)-X(i,:)).^2, 2 );

endfor

[t,idx(i)]=min(dis);

endfor % ============================================================= end

2.computerCentroids

function centroids = computeCentroids(X, idx, K)

%COMPUTECENTROIDS returns the new centroids by computing the means of the

%data points assigned to each centroid.

% centroids = COMPUTECENTROIDS(X, idx, K) returns the new centroids by

% computing the means of the data points assigned to each centroid. It is

% given a dataset X where each row is a single data point, a vector

% idx of centroid assignments (i.e. each entry in range [1..K]) for each

% example, and K, the number of centroids. You should return a matrix

% centroids, where each row of centroids is the mean of the data points

% assigned to it.

% % Useful variables

[m n] = size(X); % You need to return the following variables correctly.

centroids = zeros(K, n); % ====================== YOUR CODE HERE ======================

% Instructions: Go over every centroid and compute mean of all points that

% belong to it. Concretely, the row vector centroids(i, :)

% should contain the mean of the data points assigned to

% centroid i.

%

% Note: You can use a for-loop over the centroids to compute this.

% for i=1:K,

ALL=0;

cnt=sum(idx==i);

temp=find(idx==i);

for j=1:numel(temp),

ALL=ALL+X(temp(j),:);

endfor

centroids(i,:)=ALL/cnt;

endfor % ============================================================= end

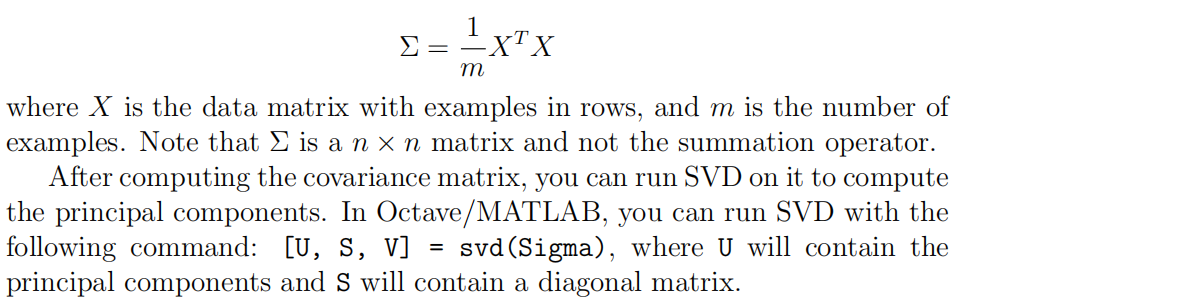

3.pca

function [U, S] = pca(X)

%PCA Run principal component analysis on the dataset X

% [U, S, X] = pca(X) computes eigenvectors of the covariance matrix of X

% Returns the eigenvectors U, the eigenvalues (on diagonal) in S

% % Useful values

[m, n] = size(X); % You need to return the following variables correctly.

U = zeros(n);

S = zeros(n); % ====================== YOUR CODE HERE ======================

% Instructions: You should first compute the covariance matrix. Then, you

% should use the "svd" function to compute the eigenvectors

% and eigenvalues of the covariance matrix.

%

% Note: When computing the covariance matrix, remember to divide by m (the

% number of examples).

% Sigma=(X'*X)./m;

[U,S,V]=svd(Sigma); % ========================================================================= end

4.projectData

function Z = projectData(X, U, K)

%PROJECTDATA Computes the reduced data representation when projecting only

%on to the top k eigenvectors

% Z = projectData(X, U, K) computes the projection of

% the normalized inputs X into the reduced dimensional space spanned by

% the first K columns of U. It returns the projected examples in Z.

% % You need to return the following variables correctly.

Z = zeros(size(X, 1), K); % ====================== YOUR CODE HERE ======================

% Instructions: Compute the projection of the data using only the top K

% eigenvectors in U (first K columns).

% For the i-th example X(i,:), the projection on to the k-th

% eigenvector is given as follows:

% x = X(i, :)';

% projection_k = x' * U(:, k);

% U_reduce=U(:,1:K);

Z=X*U_reduce; % ============================================================= end

5.recoverData

function X_rec = recoverData(Z, U, K)

%RECOVERDATA Recovers an approximation of the original data when using the

%projected data

% X_rec = RECOVERDATA(Z, U, K) recovers an approximation the

% original data that has been reduced to K dimensions. It returns the

% approximate reconstruction in X_rec.

% % You need to return the following variables correctly.

X_rec = zeros(size(Z, 1), size(U, 1)); % ====================== YOUR CODE HERE ======================

% Instructions: Compute the approximation of the data by projecting back

% onto the original space using the top K eigenvectors in U.

%

% For the i-th example Z(i,:), the (approximate)

% recovered data for dimension j is given as follows:

% v = Z(i, :)';

% recovered_j = v' * U(j, 1:K)';

%

% Notice that U(j, 1:K) is a row vector.

%

X_rec=Z*U(:,1:K)'; % ============================================================= end

Machine learning 第8周编程作业 K-means and PCA的更多相关文章

- Machine learning 第7周编程作业 SVM

1.Gaussian Kernel function sim = gaussianKernel(x1, x2, sigma) %RBFKERNEL returns a radial basis fun ...

- Machine learning第6周编程作业

1.linearRegCostFunction: function [J, grad] = linearRegCostFunction(X, y, theta, lambda) %LINEARREGC ...

- Machine learning 第5周编程作业

1.Sigmoid Gradient function g = sigmoidGradient(z) %SIGMOIDGRADIENT returns the gradient of the sigm ...

- Machine learning第四周code 编程作业

1.lrCostFunction: 和第三周的那个一样的: function [J, grad] = lrCostFunction(theta, X, y, lambda) %LRCOSTFUNCTI ...

- c++ 西安交通大学 mooc 第十三周基础练习&第十三周编程作业

做题记录 风影影,景色明明,淡淡云雾中,小鸟轻灵. c++的文件操作已经好玩起来了,不过掌握好控制结构显得更为重要了. 我这也不做啥题目分析了,直接就题干-代码. 总结--留着自己看 1. 流是指从一 ...

- 吴恩达深度学习第4课第3周编程作业 + PIL + Python3 + Anaconda环境 + Ubuntu + 导入PIL报错的解决

问题描述: 做吴恩达深度学习第4课第3周编程作业时导入PIL包报错. 我的环境: 已经安装了Tensorflow GPU 版本 Python3 Anaconda 解决办法: 安装pillow模块,而不 ...

- 吴恩达深度学习第2课第2周编程作业 的坑(Optimization Methods)

我python2.7, 做吴恩达深度学习第2课第2周编程作业 Optimization Methods 时有2个坑: 第一坑 需将辅助文件 opt_utils.py 的 nitialize_param ...

- Machine Learning - 第7周(Support Vector Machines)

SVMs are considered by many to be the most powerful 'black box' learning algorithm, and by posing构建 ...

- Machine Learning – 第2周(Linear Regression with Multiple Variables、Octave/Matlab Tutorial)

Machine Learning – Coursera Octave for Microsoft Windows GNU Octave官网 GNU Octave帮助文档 (有900页的pdf版本) O ...

随机推荐

- 2018.10.12 NOIP训练 01 串(倍增+hash)

传送门 一道挺不错的倍增. 其实就是处理出每个数连向的下一个数. 由于每个点只会出去一条边,所以倍增就可以了. 开始和zxyzxyzxy口胡了一波O(n+m)O(n+m)O(n+m)假算法,后来发现如 ...

- 2018.09.25 poj2068 Nim(博弈论+dp)

传送门 题意简述:m个石子,有两个队每队n个人循环取,每个人每次取石子有数量限制,取最后一块的输,问先手能否获胜. 博弈论+dp. 我们令f[i][j]f[i][j]f[i][j]表示当前第i个人取石 ...

- 2018.08.16 洛谷P2029 跳舞(线性dp)

传送门 简单的线性dp" role="presentation" style="position: relative;">dpdp. 直接推一推 ...

- python编码(七)

本文中,以'哈'来解释作示例解释所有的问题,“哈”的各种编码如下: 1. UNICODE (UTF8-16),C854:2. UTF-8,E59388:3. GBK,B9FE. 一.python中的s ...

- webservice大文件怎么传输

版权所有 2009-2018荆门泽优软件有限公司 保留所有权利 官方网站:http://www.ncmem.com/ 产品首页:http://www.ncmem.com/webapp/up6.2/in ...

- 重大发现 springmvc Controller 高级接收参数用法

1. 数组接收 @RequestMapping(value="deleteRole.json") @ResponseBody public Object deleteRole(S ...

- 13.A={1,2,3,5}和为10的问题

题目:集合A={1,2,3,5},从中任取几个数相加等于10,并打印各得哪几个数?补充参照:http://www.cnblogs.com/tinaluo/p/5294341.html上午弄明白了幂集的 ...

- SPATIALINDEX_LIBRARY Cmake

https://libspatialindex.org/ QGIS:https://github.com/qgis/QGIS/blob/master/cmake/FindSpatialindex.cm ...

- PriorityQueue源码分析

PriorityQueue其实是一个优先队列,和先进先出(FIFO)的队列的区别在于,优先队列每次出队的元素都是优先级最高的元素.那么怎么确定哪一个元素的优先级最高呢,jdk中使用堆这么一 ...

- Linux 输入输出(I/O)重定向

目录 1.概念 Linux 文件描述符 2.输出重定向 格式 示例 注意 3.输入重定向 格式 示例 4.自定义输入输出设备 解释 示例 最后说两句 1.概念 在解释什么是重定向之前,先来说说什么是文 ...