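[Original] Big Data Fundamentals: Oozie (1) Introduction and Source Code Walkthrough

Oozie 4.3

I Introduction

1 Official site

Apache Oozie Workflow Scheduler for Hadoop

A workflow scheduler for the Hadoop ecosystem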

Overview

Oozie is a workflow scheduler system to manage Apache Hadoop jobs.

Oozie Workflow jobs are Directed Acyclical Graphs (DAGs) of actions.

Oozie Coordinator jobs are recurrent Oozie Workflow jobs triggered by time (frequency) and data availability.

Oozie is integrated with the rest of the Hadoop stack supporting several types of Hadoop jobs out of the box (such as Java map-reduce, Streaming map-reduce, Pig, Hive, Sqoop and Distcp) as well as system specific jobs (such as Java programs and shell scripts).

Oozie is a scalable, reliable and extensible system.

2 Deployment

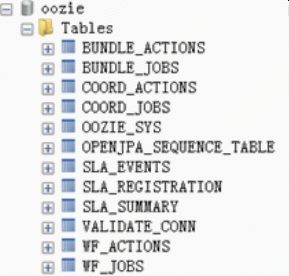

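3 Database tables

wf_jobs: workflow job instances

wf_actions: workflow action (task) instances

coord_jobs: coordinator job instances

coord_actions: coordinator action instances

4 Concepts

- Control Node: a node that marks the start or end of a workflow, or decides its execution path (start, end, kill, decision, fork/join)

- Action Node: a computation task executed by the workflow, i.e. a task; supported types include HDFS, MapReduce, Java, Shell, SSH, Pig, Hive, E-Mail, Sub-Workflow, Sqoop and Distcp

- Workflow: control nodes plus a series of action nodes, i.e. the workflow itself

- Coordinator: triggers workflows on a configured schedule (for example a cron expression), optionally gated on data availability, i.e. scheduling

- Bundle: manages a group of coordinators as a batch, so they can be started and stopped together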
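To make the relationship between control nodes, action nodes and a workflow concrete, here is a minimal sketch of a workflow modelled as a directed graph, with a check that it contains no cycle. The `Node` class and `is_acyclic` function are hypothetical names invented for this illustration; they are not Oozie classes.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified model; these are not Oozie's real classes.
CONTROL_KINDS = {"start", "end", "kill", "decision", "fork", "join"}


@dataclass
class Node:
    name: str
    kind: str  # one of CONTROL_KINDS, or "action"
    transitions: list = field(default_factory=list)  # names of the next nodes

    @property
    def is_control(self):
        return self.kind in CONTROL_KINDS


def is_acyclic(nodes):
    """Return True if the transitions between nodes contain no cycle."""
    by_name = {n.name: n for n in nodes}
    state = {}  # node name -> "visiting" or "done"

    def visit(name):
        if state.get(name) == "done":
            return True
        if state.get(name) == "visiting":
            return False  # reached a node that is still on the DFS stack
        state[name] = "visiting"
        ok = all(visit(t) for t in by_name[name].transitions)
        state[name] = "done"
        return ok

    return all(visit(n.name) for n in nodes)


# A small workflow: start -> fork -> two parallel actions -> join -> end
workflow = [
    Node("start", "start", ["fork1"]),
    Node("fork1", "fork", ["hive-step", "shell-step"]),
    Node("hive-step", "action", ["join1"]),
    Node("shell-step", "action", ["join1"]),
    Node("join1", "join", ["end"]),
    Node("end", "end"),
]
```

In this sketch only the two `action` nodes do real work; everything else steers the execution path.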

II Source code walkthrough

1 Startup

All configured services are loaded:

ServicesLoader.contextInitialized

Services.init

Services.loadServices (oozie.services, oozie.services.ext)
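The loading step can be pictured roughly as follows. This is a sketch under stated assumptions: the two configuration keys come from the call trace above, but the `Service` class, the `load_services` function and the rule that services are initialized in list order are simplifications made for illustration, not Oozie's actual implementation.

```python
class Service:
    """Hypothetical stand-in for a service that has an init hook."""

    def __init__(self, name):
        self.name = name
        self.initialized = False

    def init(self):
        self.initialized = True


def load_services(conf):
    """Read class names from both config keys and initialize them in order."""
    names = []
    for key in ("oozie.services", "oozie.services.ext"):
        raw = conf.get(key, "")
        names += [n.strip() for n in raw.split(",") if n.strip()]

    services = {}
    for name in names:
        svc = Service(name)  # the real server would instantiate the named class
        svc.init()
        services[name] = svc
    return services


conf = {
    "oozie.services": "org.apache.oozie.service.SchedulerService, "
                      "org.apache.oozie.service.JPAService",
    "oozie.services.ext": "org.apache.oozie.service.ZKLocksService",
}
services = load_services(conf)
```

Keeping the extension list under a separate key means the default list does not have to be copied just to add one service.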

Service hierarchy:

Service

org.apache.oozie.service.SchedulerService,

org.apache.oozie.service.InstrumentationService,

org.apache.oozie.service.MemoryLocksService,

org.apache.oozie.service.UUIDService,

org.apache.oozie.service.ELService,

org.apache.oozie.service.AuthorizationService,

org.apache.oozie.service.UserGroupInformationService,

org.apache.oozie.service.HadoopAccessorService,

org.apache.oozie.service.JobsConcurrencyService,

org.apache.oozie.service.URIHandlerService,

org.apache.oozie.service.DagXLogInfoService,

org.apache.oozie.service.SchemaService,

org.apache.oozie.service.LiteWorkflowAppService,

org.apache.oozie.service.JPAService,

org.apache.oozie.service.StoreService,

org.apache.oozie.service.SLAStoreService,

org.apache.oozie.service.DBLiteWorkflowStoreService,

org.apache.oozie.service.CallbackService,

org.apache.oozie.service.ActionService,

org.apache.oozie.service.ShareLibService,

org.apache.oozie.service.CallableQueueService,

org.apache.oozie.service.ActionCheckerService,

org.apache.oozie.service.RecoveryService,

org.apache.oozie.service.PurgeService,

org.apache.oozie.service.CoordinatorEngineService,

org.apache.oozie.service.BundleEngineService,

org.apache.oozie.service.DagEngineService,

org.apache.oozie.service.CoordMaterializeTriggerService,

org.apache.oozie.service.StatusTransitService,

org.apache.oozie.service.PauseTransitService,

org.apache.oozie.service.GroupsService,

org.apache.oozie.service.ProxyUserService,

org.apache.oozie.service.XLogStreamingService,

org.apache.oozie.service.JvmPauseMonitorService,

org.apache.oozie.service.SparkConfigurationService

2 Core engines

BaseEngine

DagEngine (runs workflows)

CoordinatorEngine (runs coordinators)

BundleEngine (runs bundles)

3 Workflow submission and execution

DagEngine.submitJob | submitJobFromCoordinator (submits the workflow)

SubmitXCommand.call

execute

LiteWorkflowAppService.parseDef (parses the definition into a WorkflowApp)

LiteWorkflowLib.parseDef

LiteWorkflowAppParser.validateAndParse

parse

WorkflowLib.createInstance (creates the WorkflowInstance)

BatchQueryExecutor.executeBatchInsertUpdateDelete (saves the WorkflowJobBean to wf_jobs)

StartXCommand.call

SignalXCommand.call

execute

WorkflowInstance.start

LiteWorkflowInstance.start

signal

NodeHandler.enter

ActionNodeHandler.enter

start

LiteWorkflowStoreService.liteExecute (adds the WorkflowActionBean to ACTIONS_TO_START)

WorkflowStoreService.getActionsToStart (takes the actions from ACTIONS_TO_START)

ActionStartXCommand.call

ActionExecutor.start

WorkflowNotificationXCommand.call

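BatchQueryExecutor.executeBatchInsertUpdateDelete (saves the WorkflowActionBean to wf_actions)

ActionExecutor.start is asynchronous, so the action's status still has to be checked in order to move the workflow forward. There are two cases:

Case 1, the Oozie server is running normally: the job-end notification (JobEndNotification) is used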

CallbackServlet.doGet

DagEngine.processCallback

CompletedActionXCommand.call

ActionCheckXCommand.call

ActionExecutor.check

ActionEndXCommand.call

SignalXCommand.call

Case 2, the Oozie server has been restarted: ActionCheckerService is used

ActionCheckerService.init

ActionCheckRunnable.run

runWFActionCheck (GET_RUNNING_ACTIONS, oozie.service.ActionCheckerService.action.check.delay=600)

ActionCheckXCommand.call

ActionExecutor.check

ActionEndXCommand.call

SignalXCommand.call

runCoordActionCheck
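Both cases end in the same check, end, signal sequence; they differ only in what triggers the check. The sketch below illustrates that idea with a callback entry point and a polling entry point sharing one code path. All names here (`Action`, `check_action`, `on_callback`, `poll_running`) are hypothetical, and the status strings are simplified; only the 600-second delay is taken from the trace above.

```python
class Action:
    """Hypothetical, simplified running action."""

    def __init__(self, name):
        self.name = name
        self.status = "RUNNING"
        self.last_check = 0.0  # time of the last poll, in seconds


def check_action(action, external_status, signalled):
    """Shared path: check the external job, end the action, signal the workflow."""
    if action.status != "RUNNING":
        return  # already finished; a late callback or poll does nothing
    if external_status in ("SUCCEEDED", "FAILED"):
        action.status = "OK" if external_status == "SUCCEEDED" else "ERROR"
        signalled.append(action.name)  # stands in for signalling the workflow


def on_callback(action, external_status, signalled):
    """Case 1: the cluster notifies the server when the job ends."""
    check_action(action, external_status, signalled)


def poll_running(actions, lookup_status, now, delay, signalled):
    """Case 2: periodically check running actions not checked for `delay` seconds."""
    for action in actions:
        if action.status == "RUNNING" and now - action.last_check >= delay:
            action.last_check = now
            check_action(action, lookup_status(action.name), signalled)
```

Because `check_action` ignores actions that are no longer running, a callback and a later poll for the same action signal the workflow only once in this sketch.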

4 Coordinator submission and execution

CoordinatorEngine.submitJob (submits the coordinator)

CoordSubmitXCommand.call

submit

submitJob

storeToDB

CoordJobQueryExecutor.insert (saves the CoordinatorJobBean to coord_jobs)

queueMaterializeTransitionXCommand

CoordMaterializeTransitionXCommand.call

execute

materialize

materializeActions

CoordCommandUtils.materializeOneInstance (creates the CoordinatorActionBean)

storeToDB

performWrites

BatchQueryExecutor.executeBatchInsertUpdateDelete (saves the CoordinatorActionBean to coord_actions)

CoordActionInputCheckXCommand.call

CoordActionReadyXCommand.call

CoordActionStartXCommand.call

DagEngine.submitJobFromCoordinator

A periodic task triggers materialization:

CoordMaterializeTriggerService.init

CoordMaterializeTriggerRunnable.run

CoordMaterializeTriggerService.runCoordJobMatLookup

materializeCoordJobs (GET_COORD_JOBS_OLDER_FOR_MATERIALIZATION)

CoordMaterializeTransitionXCommand.call
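Materialization means turning a coordinator's schedule into concrete action instances. The sketch below shows one way to picture it: each periodic run creates the nominal times that fall inside a lookahead window and resumes from the last one created. The function name, the fixed frequency in minutes and the window parameter are assumptions made for illustration; this is not Oozie's algorithm.

```python
from datetime import datetime, timedelta


def materialize(start, end, frequency_minutes, last_materialized, window_end):
    """Return nominal times of the actions to create before min(end, window_end)."""
    step = timedelta(minutes=frequency_minutes)
    # Resume after the last created action, or begin at the coordinator start.
    t = start if last_materialized is None else last_materialized + step
    limit = min(end, window_end)
    created = []
    while t < limit:
        created.append(t)
        t += step
    return created


start = datetime(2024, 1, 1, 0, 0)
end = datetime(2024, 1, 1, 3, 0)

# First periodic run: only look ahead to 02:00.
first = materialize(start, end, 60, None, datetime(2024, 1, 1, 2, 0))
# Second run: continue after the last action created in the first run.
second = materialize(start, end, 60, first[-1], datetime(2024, 1, 1, 4, 0))
```

Materializing in windows keeps the number of rows created per run bounded, even for a coordinator with a very distant end time.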

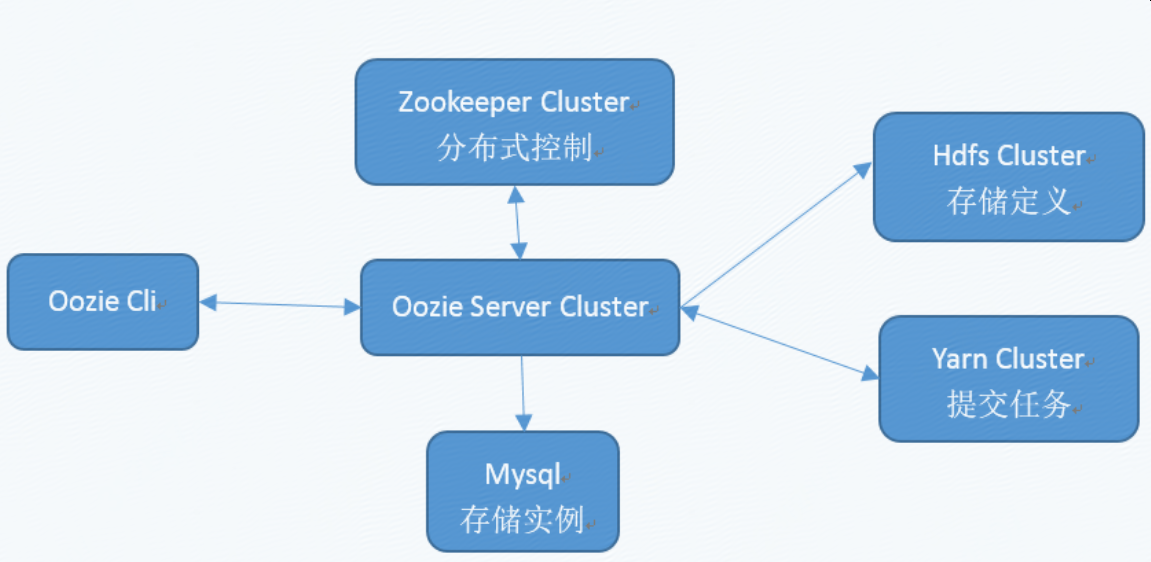

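5 Distributed mode

Some internal tasks must run as a single instance only. In a single-server deployment Oozie guarantees this with MemoryLocksService; in a multi-server deployment it uses ZKLocksService. To enable ZooKeeper, the following services need to be enabled: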

org.apache.oozie.service.ZKLocksService,

org.apache.oozie.service.ZKXLogStreamingService,

org.apache.oozie.service.ZKJobsConcurrencyService,

org.apache.oozie.service.ZKUUIDService

oozie.zookeeper.connection.string must also be configured
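The "only one instance may run" guarantee amounts to taking a named lock before running the task and skipping the run if the lock is already held. The sketch below shows the single-server flavour with an in-process lock table. `InMemoryLockService` and `run_exclusively` are hypothetical names for this illustration; a multi-server setup would need the same interface backed by a shared coordinator such as ZooKeeper, which is not shown here.

```python
import threading


class InMemoryLockService:
    """Named locks held in one process; only sufficient for a single server."""

    def __init__(self):
        self._guard = threading.Lock()  # protects the set of held names
        self._held = set()

    def try_acquire(self, name):
        with self._guard:
            if name in self._held:
                return False
            self._held.add(name)
            return True

    def release(self, name):
        with self._guard:
            self._held.discard(name)


def run_exclusively(locks, name, task):
    """Run task only if the named lock is free; return None when skipped."""
    if not locks.try_acquire(name):
        return None
    try:
        return task()
    finally:
        locks.release(name)
```

With several servers, each process would have its own `_held` set, so this in-memory version could no longer prevent two servers from running the same task at once.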

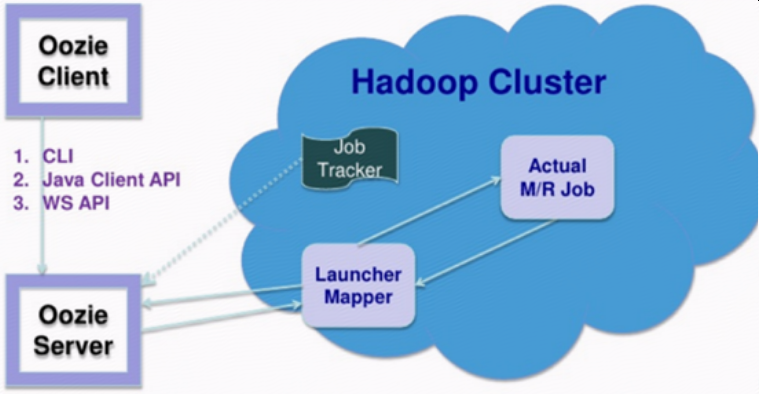

6 Action execution

ActionExecutor is the core abstract base class for action execution; every concrete action type is a subclass of it

ActionExecutor

JavaActionExecutor

SshActionExecutor

FsActionExecutor

SubWorkflowActionExecutor

JavaActionExecutor is the most important of these subclasses; many other action types extend it (for example HiveActionExecutor and SparkActionExecutor)

JavaActionExecutor.start

prepareActionDir

submitLauncher

JobClient.getJob

injectLauncherCallback

ActionExecutor.Context.getCallbackUrl

job.end.notification.url

createLauncherConf

LauncherMapperHelper.setupLauncherInfo

JobClient.submitJob

check
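The start and check steps traced above can be sketched as an abstract base class with one concrete subclass. This is a simplified illustration, not the real API: `FakeCluster`, `JavaLikeExecutor`, the dictionary-based context and action, and the `launcher.main.class` key are all hypothetical. Only the `job.end.notification.url` property name comes from the trace.

```python
from abc import ABC, abstractmethod


class ActionExecutor(ABC):
    """Simplified base class: start an action, later check its status."""

    @abstractmethod
    def start(self, context, action): ...

    @abstractmethod
    def check(self, context, action): ...


class FakeCluster:
    """Hypothetical stand-in for the job client; records submitted jobs."""

    def __init__(self):
        self.jobs = {}

    def submit(self, conf):
        job_id = f"job_{len(self.jobs) + 1}"
        self.jobs[job_id] = {"conf": dict(conf), "state": "RUNNING"}
        return job_id

    def state(self, job_id):
        return self.jobs[job_id]["state"]


class JavaLikeExecutor(ActionExecutor):
    def __init__(self, cluster):
        self.cluster = cluster

    def start(self, context, action):
        # Build the launcher configuration (hypothetical key for the main class).
        conf = {"launcher.main.class": action["main_class"]}
        # Inject the callback so the server is notified when the job ends.
        conf["job.end.notification.url"] = context["callback_url"]
        action["external_id"] = self.cluster.submit(conf)
        action["status"] = "RUNNING"  # start returns without waiting for the job

    def check(self, context, action):
        state = self.cluster.state(action["external_id"])
        if state == "SUCCEEDED":
            action["status"] = "OK"
        elif state in ("FAILED", "KILLED"):
            action["status"] = "ERROR"
```

Because `start` only submits the job and returns, something else has to call `check` later, which is why the callback and the periodic checker exist.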

When JavaActionExecutor runs, it submits a single map task to YARN, namely LauncherMapper:

LauncherMapper.map

LauncherMain.main

LauncherMain is the class that actually runs the concrete action:

LauncherMain

JavaMain

HiveMain

Hive2Main

SparkMain

ShellMain

SqoopMain
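The relationship between the launcher's map task and the per-type main classes can be pictured as a lookup followed by a call. In the sketch below, the registry, the decorator, the `action.type` and `args` keys, and the return values are all invented for illustration; it does not reproduce how the real launcher locates and invokes its main class.

```python
# Hypothetical registry mapping an action type to its "main" function.
LAUNCHER_MAINS = {}


def launcher_main(action_type):
    """Decorator that registers a main function for one action type."""
    def register(fn):
        LAUNCHER_MAINS[action_type] = fn
        return fn
    return register


@launcher_main("java")
def java_main(args):
    return "java:" + " ".join(args)


@launcher_main("shell")
def shell_main(args):
    return "shell:" + " ".join(args)


def launcher_map(conf):
    """What the single map task does here: pick the main for the action and run it."""
    main = LAUNCHER_MAINS[conf["action.type"]]
    return main(conf.get("args", []))


result = launcher_map({"action.type": "shell", "args": ["echo", "hello"]})
```

The point of the indirection is that the server only ever submits one kind of job (the launcher), while the work that differs per action type lives in the main that the launcher runs on the cluster.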
