Applying the TensorFlow Object Detection API

The previous post covered installing and configuring the TensorFlow Object Detection API. Now let's use the API to build our own object detection model.

I. Preparing the Dataset

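This post is about face recognition. I downloaded 120 pictures of Zhang Junning (张钧甯) from Baidu Images and stored them in a new images folder under /models/research/object_detection. Inside images I created two folders, train and test, and split the 120 pictures into 100 for train and 20 for test.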

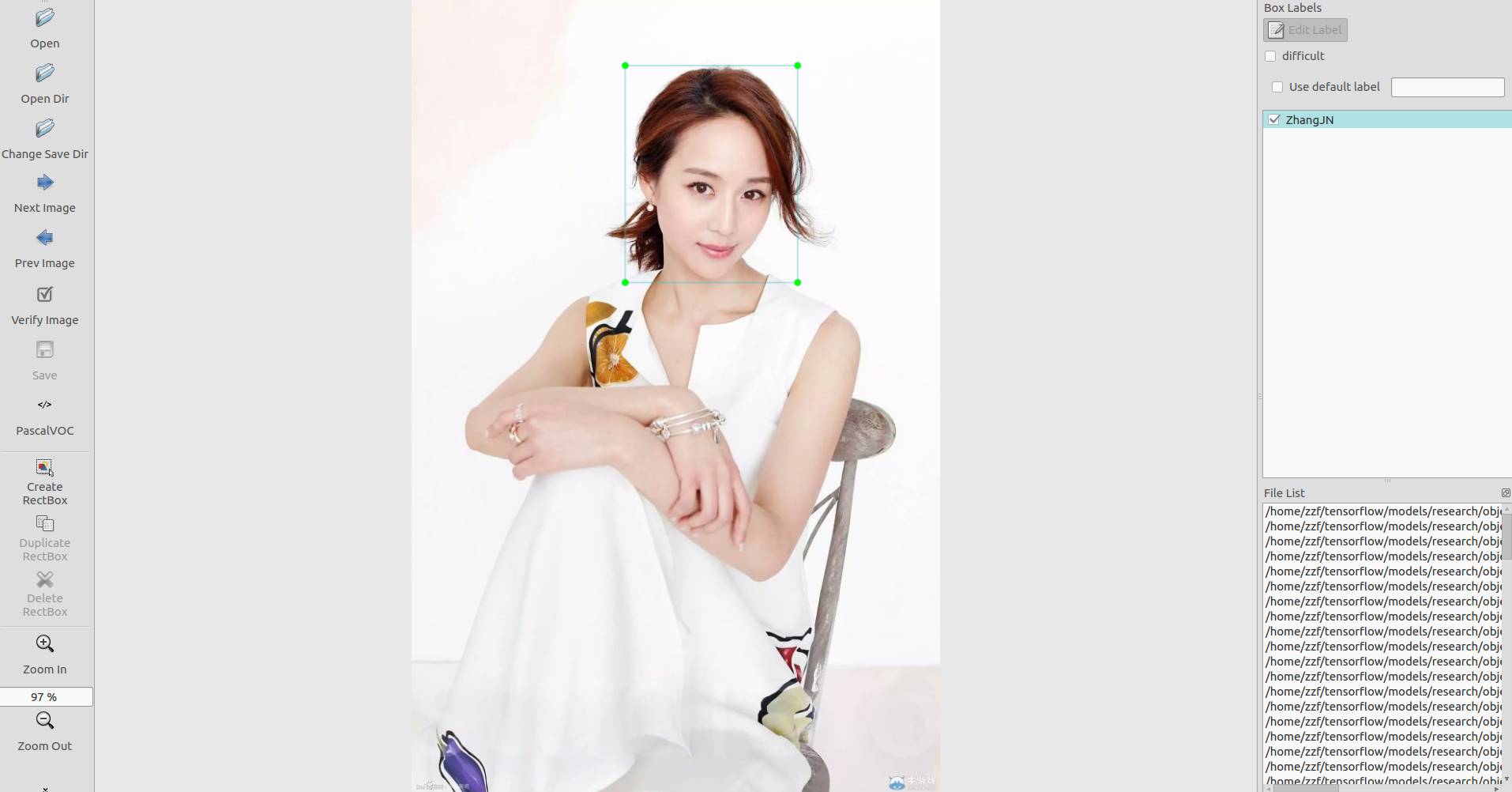
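The 100/20 split above was done by hand. If you want to script it, here is a minimal sketch that assumes all the downloaded pictures are `.jpg` files in a single source folder. The helper `split_images`, its arguments and the fixed seed are hypothetical and not part of the Object Detection API.

```python
import os
import random
import shutil


def split_images(src_dir, dst_root, n_test=20, seed=0):
    """Hypothetical helper: randomly move n_test .jpg files into dst_root/test
    and the rest into dst_root/train. Returns (train_names, test_names)."""
    names = sorted(f for f in os.listdir(src_dir) if f.lower().endswith('.jpg'))
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(names)
    test_names, train_names = names[:n_test], names[n_test:]
    for subset, subset_names in (('train', train_names), ('test', test_names)):
        out_dir = os.path.join(dst_root, subset)
        os.makedirs(out_dir, exist_ok=True)
        for name in subset_names:
            shutil.move(os.path.join(src_dir, name), os.path.join(out_dir, name))
    return train_names, test_names
```

For example, calling `split_images('downloads', 'images', n_test=20)` on a hypothetical `downloads` folder holding 120 pictures moves 100 of them into images/train and 20 into images/test.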

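Next, use the small tool LabelImg (see its installation instructions) to annotate the pictures in train and test by hand, as shown in the figure below. If you have the time, annotate as many as you can.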

After annotating a picture, save the annotation as an XML file with the same name, in the same folder.
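LabelImg saves annotations in the Pascal VOC XML layout. The sketch below uses a trimmed, made-up annotation (the filename and coordinates are invented for illustration) to show which fields xml2csv.py reads for each box. LabelImg writes `<bndbox>` as the fifth child of `<object>`, which is why indexing with `member[4]` works. Looking fields up by tag name, as done here, does not depend on that order.

```python
import xml.etree.ElementTree as ET

# A trimmed example of a LabelImg (Pascal VOC) annotation; all values are made up.
SAMPLE_XML = """<annotation>
  <filename>zjn_001.jpg</filename>
  <size><width>500</width><height>375</height><depth>3</depth></size>
  <object>
    <name>ZhangJN</name>
    <pose>Unspecified</pose>
    <truncated>0</truncated>
    <difficult>0</difficult>
    <bndbox><xmin>120</xmin><ymin>40</ymin><xmax>260</xmax><ymax>210</ymax></bndbox>
  </object>
</annotation>"""


def boxes_from_xml(xml_text):
    """Return one (filename, width, height, class, xmin, ymin, xmax, ymax) tuple per <object>."""
    root = ET.fromstring(xml_text)
    size = root.find('size')
    rows = []
    for obj in root.findall('object'):
        box = obj.find('bndbox')
        rows.append((root.find('filename').text,
                     int(size.find('width').text),
                     int(size.find('height').text),
                     obj.find('name').text,
                     *(int(box.find(tag).text) for tag in ('xmin', 'ymin', 'xmax', 'ymax'))))
    return rows


print(boxes_from_xml(SAMPLE_XML))
# [('zjn_001.jpg', 500, 375, 'ZhangJN', 120, 40, 260, 210)]
```

Each tuple corresponds to one row of the CSV that xml2csv.py writes.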

TensorFlow expects its input in the dedicated TFRecord format.

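So we write two small Python scripts. The first collects the information from all the XML files in a folder into one .csv table. The second creates a TFRecord file from that .csv table.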

Here is the code:

```python
# xml2csv.py
import os
import glob
import pandas as pd
import xml.etree.ElementTree as ET

# Change both to your own folder (run once for images/train, once for images/test)
os.chdir('/home/zzf/tensorflow/models/research/object_detection/images/test')
path = '/home/zzf/tensorflow/models/research/object_detection/images/test'


def xml_to_csv(path):
    """Collect every bounding box from the LabelImg XML files in `path` into a DataFrame."""
    xml_list = []
    for xml_file in glob.glob(path + '/*.xml'):
        tree = ET.parse(xml_file)
        root = tree.getroot()
        size = root.find('size')
        for member in root.findall('object'):
            bndbox = member.find('bndbox')
            value = (root.find('filename').text,
                     int(size.find('width').text),
                     int(size.find('height').text),
                     member.find('name').text,
                     int(bndbox.find('xmin').text),
                     int(bndbox.find('ymin').text),
                     int(bndbox.find('xmax').text),
                     int(bndbox.find('ymax').text))
            xml_list.append(value)
    column_name = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']
    xml_df = pd.DataFrame(xml_list, columns=column_name)
    return xml_df


def main():
    image_path = path
    xml_df = xml_to_csv(image_path)
    # Change the output name for each split, e.g. train_labels.csv / test_labels.csv
    xml_df.to_csv('zhangjn_train.csv', index=None)
    print('Successfully converted xml to csv.')


if __name__ == '__main__':
    main()
```

```python
# generate_tfrecord.py
# -*- coding: utf-8 -*-
"""
Usage:
  # From tensorflow/models/
  # Create train data:
  python generate_tfrecord.py --csv_input=data/tv_vehicle_labels.csv --output_path=train.record

  # Create test data:
  python generate_tfrecord.py --csv_input=data/test_labels.csv --output_path=test.record
"""
import os
import io
import pandas as pd
import tensorflow as tf

from PIL import Image
from object_detection.utils import dataset_util
from collections import namedtuple, OrderedDict

# Change to your own object_detection directory
os.chdir('/home/zzf/tensorflow/models/research/object_detection')

flags = tf.app.flags
flags.DEFINE_string('csv_input', '', 'Path to the CSV input')
flags.DEFINE_string('output_path', '', 'Path to output TFRecord')
FLAGS = flags.FLAGS


# TO-DO replace this with label map
def class_text_to_int(row_label):
    # Change to your own label(s); ids must match the .pbtxt label map and start at 1
    if row_label == 'ZhangJN':
        return 1
    else:
        return None


def split(df, group):
    """Group the CSV rows by image: one (filename, rows) record per image."""
    data = namedtuple('data', ['filename', 'object'])
    gb = df.groupby(group)
    return [data(filename, gb.get_group(x)) for filename, x in zip(gb.groups.keys(), gb.groups)]


def create_tf_example(group, path):
    with tf.gfile.GFile(os.path.join(path, '{}'.format(group.filename)), 'rb') as fid:
        encoded_jpg = fid.read()
    encoded_jpg_io = io.BytesIO(encoded_jpg)
    image = Image.open(encoded_jpg_io)
    width, height = image.size

    filename = group.filename.encode('utf8')
    image_format = b'jpg'
    xmins = []
    xmaxs = []
    ymins = []
    ymaxs = []
    classes_text = []
    classes = []

    for index, row in group.object.iterrows():
        # Box coordinates are stored normalized to [0, 1] by the image size
        xmins.append(row['xmin'] / width)
        xmaxs.append(row['xmax'] / width)
        ymins.append(row['ymin'] / height)
        ymaxs.append(row['ymax'] / height)
        classes_text.append(row['class'].encode('utf8'))
        classes.append(class_text_to_int(row['class']))

    tf_example = tf.train.Example(features=tf.train.Features(feature={
        'image/height': dataset_util.int64_feature(height),
        'image/width': dataset_util.int64_feature(width),
        'image/filename': dataset_util.bytes_feature(filename),
        'image/source_id': dataset_util.bytes_feature(filename),
        'image/encoded': dataset_util.bytes_feature(encoded_jpg),
        'image/format': dataset_util.bytes_feature(image_format),
        'image/object/bbox/xmin': dataset_util.float_list_feature(xmins),
        'image/object/bbox/xmax': dataset_util.float_list_feature(xmaxs),
        'image/object/bbox/ymin': dataset_util.float_list_feature(ymins),
        'image/object/bbox/ymax': dataset_util.float_list_feature(ymaxs),
        'image/object/class/text': dataset_util.bytes_list_feature(classes_text),
        'image/object/class/label': dataset_util.int64_list_feature(classes),
    }))
    return tf_example


def main(_):
    writer = tf.python_io.TFRecordWriter(FLAGS.output_path)
    path = os.path.join(os.getcwd(), 'images/test')  # change to images/train for the training set
    examples = pd.read_csv(FLAGS.csv_input)
    grouped = split(examples, 'filename')
    for group in grouped:
        tf_example = create_tf_example(group, path)
        writer.write(tf_example.SerializeToString())

    writer.close()
    output_path = os.path.join(os.getcwd(), FLAGS.output_path)
    print('Successfully created the TFRecords: {}'.format(output_path))


if __name__ == '__main__':
    tf.app.run()
```

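In xml2csv.py, change the os.chdir() and path directories to your own, and also the name of the CSV file written in main(). Do the same in generate_tfrecord.py: change the paths to your own, and in class_text_to_int() replace the label check with your own labels. I have only one.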
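create_tf_example() stores one Example per image, and it normalizes box coordinates to [0, 1] by the image width and height. The stdlib-only sketch below mirrors what split() and the loop in create_tf_example() do to the CSV rows. The helper name and the sample rows are hypothetical. One difference: the real script takes width and height from the decoded image, not from the CSV. The two agree as long as the sizes in the XML files are correct.

```python
from collections import OrderedDict


def group_and_normalize(rows):
    """rows: dicts with the xml2csv.py columns.
    Returns {filename: [(class, xmin, ymin, xmax, ymax), ...]} with coordinates divided
    by the image width/height, grouped per image like split() does."""
    per_image = OrderedDict()
    for r in rows:
        w, h = r['width'], r['height']
        per_image.setdefault(r['filename'], []).append(
            (r['class'], r['xmin'] / w, r['ymin'] / h, r['xmax'] / w, r['ymax'] / h))
    return per_image


rows = [
    {'filename': 'a.jpg', 'width': 500, 'height': 400, 'class': 'ZhangJN',
     'xmin': 100, 'ymin': 40, 'xmax': 300, 'ymax': 200},
    {'filename': 'a.jpg', 'width': 500, 'height': 400, 'class': 'ZhangJN',
     'xmin': 0, 'ymin': 0, 'xmax': 250, 'ymax': 400},
    {'filename': 'b.jpg', 'width': 640, 'height': 480, 'class': 'ZhangJN',
     'xmin': 64, 'ymin': 48, 'xmax': 320, 'ymax': 240},
]
print(group_and_normalize(rows)['a.jpg'][0])  # ('ZhangJN', 0.2, 0.1, 0.6, 0.5)
```

Two boxes in a.jpg end up in a single record, which is why the resulting TFRecord holds one entry per annotated image rather than one per box.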

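Run the scripts once for the training set and once for the test set, switching the directories each time. This produces train.record and test.record.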
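You can check how many examples ended up in train.record and test.record without TensorFlow. This is also useful later when setting the evaluation count. The sketch assumes an uncompressed file, which is the TFRecordWriter default, and follows the TFRecord framing: an 8-byte little-endian length, a 4-byte CRC of the length, the payload, then a 4-byte CRC of the payload. It skips the CRCs instead of verifying them, so it only counts records. `count_tfrecords` is a hypothetical helper.

```python
import struct


def count_tfrecords(path):
    """Count the records in an uncompressed TFRecord file by following its length headers.

    CRCs are skipped, not verified: this counts records but does not validate them.
    """
    count = 0
    with open(path, 'rb') as f:
        while True:
            header = f.read(8)
            if not header:
                return count  # clean end of file
            if len(header) < 8:
                raise ValueError('truncated record header')
            (length,) = struct.unpack('<Q', header)
            rest = f.read(4 + length + 4)  # length CRC + payload + payload CRC
            if len(rest) < length + 8:
                raise ValueError('truncated record')
            count += 1
```

For example, `count_tfrecords('data/test.record')` should return one record per annotated test image.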

II. Configuration Files and Model

For convenience, I moved the CSV and record files for train and test out of images and into object_detection/data. The object_detection folder now has the following structure:

```
Object-Detection
-data/
--test_labels.csv
--test.record
--train_labels.csv
--train.record
-images/
--test/
---testingimages.jpg
--train/
---testingimages.jpg
--...yourimages.jpg
-training/   # newly created; used for training the model shortly
```

Next, set up the config file. Under object_detection/samples/configs, find the config file for the model you want.

You can also download pretrained models from the official model zoo. We use ssd_mobilenet_v1_coco, so download it first.

In the object_detection folder, extract ssd_mobilenet_v1_coco_2017_11_17.tar.gz.

Copy ssd_mobilenet_v1_coco.config into the training folder and open it in a text editor (I used Sublime Text 3). Make the following changes:

1. Search for PATH_TO_BE_CONFIGURED and replace each occurrence with your own path. Be careful not to swap the test and train files.

Note that label_map_path must be the same in train_input_reader and eval_input_reader.

2. Set num_classes to your actual number of classes. In my example it is 1.

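3. batch_size was originally 24. I ran out of GPU memory with that, so to be safe I changed it to 1. If you still run out of memory at 1, consider getting a better computer.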

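4. Set the fine-tuning checkpoint. The path must point at model.ckpt inside the folder you extracted, which here is ssd_mobilenet_v1_coco_2017_11_17:

```
fine_tune_checkpoint: "ssd_mobilenet_v1_coco_2017_11_17/model.ckpt"
from_detection_checkpoint: true
```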

This fine-tunes the already-trained model weights, which is much faster than training from scratch.
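The edits above can also be scripted. Here is a minimal sketch that treats the config as plain text and edits it with regular expressions rather than with the API's protobuf tooling. The helpers `set_field` and `edit_pipeline_config` are hypothetical. They only know the handful of fields listed above. They also assume the eval reader block starts on a line beginning with `eval_input_reader:`, and they split there so the train and test records cannot be swapped.

```python
import re


def set_field(text, key, value):
    """Replace the value of every `key: value` occurrence; strings are quoted, numbers are not."""
    new = '"%s"' % value if isinstance(value, str) else str(value)
    pattern = r'(\b%s:\s*)("[^"]*"|[\w.\-]+)' % re.escape(key)
    # A function replacement keeps backslashes in paths from being treated as escapes
    return re.sub(pattern, lambda m: m.group(1) + new, text)


def edit_pipeline_config(text, num_classes, batch_size, checkpoint, label_map,
                         train_record, test_record):
    """Hypothetical helper applying the config changes described above to the config text."""
    text = set_field(text, 'num_classes', num_classes)
    text = set_field(text, 'batch_size', batch_size)
    text = set_field(text, 'fine_tune_checkpoint', checkpoint)
    # The same label map must be used by the train and eval input readers
    text = set_field(text, 'label_map_path', label_map)
    # Split at the line that opens the eval reader block (comments mentioning it are ignored)
    m = re.search(r'^\s*eval_input_reader\s*[:{]', text, re.M)
    if m is None:
        raise ValueError('no eval_input_reader block found')
    head, tail = text[:m.start()], text[m.start():]
    return set_field(head, 'input_path', train_record) + set_field(tail, 'input_path', test_record)
```

To use it, read training/ssd_mobilenet_v1_coco.config and pass its text with num_classes=1, batch_size=1 and your own paths. Write the result back, then review the file by hand.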

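Next, create a text file named zhangjn.pbtxt in the data directory. You can copy another .pbtxt file and edit it in a text editor. Write our labels into it; my example has only one. Each id must match the number that class_text_to_int() in generate_tfrecord.py assigns to that label, starting from 1: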

```
item {
  id: 1
  name: 'ZhangJN'
}
```
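With more than one class, the ids in the .pbtxt file and in class_text_to_int() can easily drift apart. One way to avoid that is to derive both from a single ordered list of class names, with ids starting at 1 because id 0 is reserved for the background class. `CLASS_NAMES`, `label_map_text` and this list-based `class_text_to_int` are a hypothetical sketch, not the API's own code.

```python
CLASS_NAMES = ['ZhangJN']  # hypothetical: list your labels here, in a fixed order


def label_map_text(class_names):
    """Render a label map in the .pbtxt item format shown above."""
    items = []
    for idx, name in enumerate(class_names, start=1):  # ids start at 1
        items.append("item {\n  id: %d\n  name: '%s'\n}\n" % (idx, name))
    return '\n'.join(items)


def class_text_to_int(row_label, class_names=CLASS_NAMES):
    """Same mapping as the .pbtxt: position in class_names + 1, or None if unknown."""
    return class_names.index(row_label) + 1 if row_label in class_names else None


print(label_map_text(CLASS_NAMES))
```

You could then write the output of label_map_text(CLASS_NAMES) to data/zhangjn.pbtxt and use the same list inside generate_tfrecord.py.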

With that, all the data is ready and we can start training.

III. Training the Model

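I train on my local GPU (Ubuntu 16.04 LTS). In a terminal, go to the object_detection directory. The latest version trains with model_main.py, but you can also use the older train.py, covered later. When I trained with model_main.py, the cooling fan was already roaring, but the terminal printed no step or loss information, which made me a bit nervous. A few changes are needed first: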

- Add tf.logging.set_verbosity(tf.logging.INFO) after the imports in model_main.py. It then prints the loss every 100 steps. That is better than nothing, since at least you know it is running.

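- If you train with Python 3, wrap category_index.values() in list() at roughly line 390 of model_lib.py, so that it reads list(category_index.values()). Otherwise you get a can't pickle dict_values error.

- Another issue: model_main.py combines the old train.py and eval.py. If the number of evaluation examples is set badly, you get warnings like: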

WARNING:tensorflow:Ignoring ground truth with image id since it was previously added

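This happens when either num_examples in the eval section of the config file (below) or the --num_eval_steps argument is larger than the number of images in your evaluation set, because there are not that many images to evaluate. Simply set both values to the number of evaluation (test) images.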

```
eval_config: {
  num_examples:
  # Note: The below line limits the evaluation process to evaluations.
  # Remove the below line to evaluate indefinitely.
  max_evals:
}
```
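The can't pickle dict_values error mentioned in the Python 3 fix above is easy to reproduce in plain Python 3. A dict's values() view cannot be pickled, while a list built from it can, which is why wrapping it in list() helps. The category_index below is a hand-written stand-in for the one the API builds from the label map.

```python
import pickle

# Hand-written stand-in for the category_index the API builds from the label map
category_index = {1: {'id': 1, 'name': 'ZhangJN'}}

try:
    pickle.dumps(category_index.values())
except TypeError as err:
    print('values() view:', err)  # the dict_values view itself cannot be pickled

data = pickle.dumps(list(category_index.values()))  # a plain list pickles fine
print(pickle.loads(data))  # [{'id': 1, 'name': 'ZhangJN'}]
```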

Then run this in the terminal:

```shell
python3 model_main.py \
    --pipeline_config_path=training/ssd_mobilenet_v1_coco.config \
    --model_dir=training \
    --num_train_steps= \
    --num_eval_steps= \
    --alsologtostderr
```

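If everything is fine, after a moment you will hear the fan speed up, and training is under way. model_main.py also creates an export folder, which even contains a saved_model.pb. I have not checked whether that is the file we need later, so try it if you are interested.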

If you would rather not modify anything, use the old train.py in legacy/train.py instead and run it the same way:

```shell
python3 legacy/train.py --logtostderr --train_dir=training/ --pipeline_config_path=training/ssd_mobilenet_v1_coco.config
```

Training then starts.

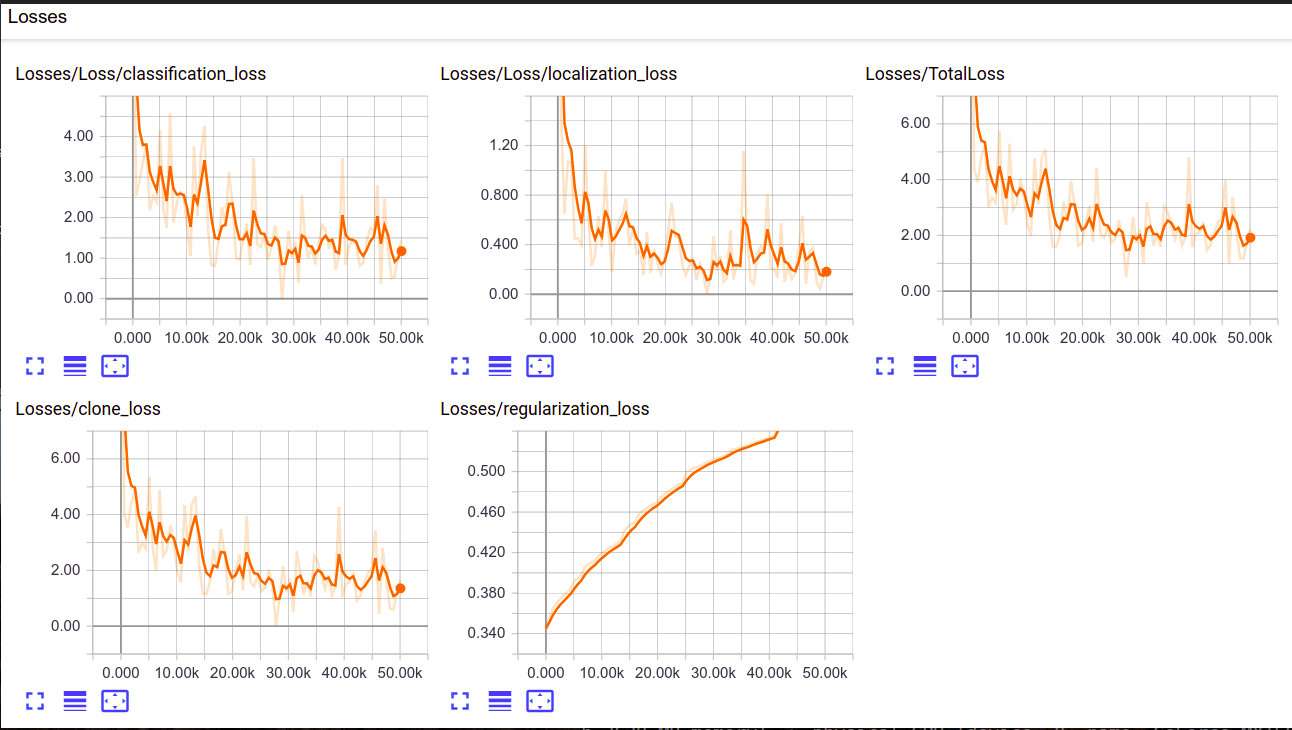

Open another terminal, go to the object_detection directory, and enter:

```shell
tensorboard --logdir=training
```

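You can now follow training progress in a browser (TensorBoard serves at http://localhost:6006 by default). It keeps loading new data as training proceeds.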

After training has run for a while, checkpoints appear in the training folder, and we can generate the model file we need. In a terminal in the object_detection directory, enter:

```shell
python3 export_inference_graph.py \
    --input_type image_tensor \
    --pipeline_config_path training/ssd_mobilenet_v1_coco.config \
    --trained_checkpoint_prefix training/model.ckpt- \
    --output_directory zhangjn_detction
```

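Append the step number of your own checkpoint to the trained checkpoint prefix, and set the output directory to wherever you want to store the model. I created a new folder named zhangjn_detction. When the command finishes, zhangjn_detction contains several files, including saved_model, checkpoint and frozen_inference_graph.pb. The .pb file is the most important one. It is the frozen model, the same kind of file the small demo in the previous post used, and it is what we test with next.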
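--trained_checkpoint_prefix needs the step number of a checkpoint that actually exists in training/. Here is a sketch of a hypothetical helper that picks the newest one by step number. It assumes the usual model.ckpt-<step>.index / .data / .meta file naming. TensorFlow's own tf.train.latest_checkpoint(train_dir), which reads the checkpoint bookkeeping file, is the more reliable choice when TensorFlow is at hand. This version looks only at file names.

```python
import os
import re


def latest_checkpoint_prefix(train_dir):
    """Return e.g. 'training/model.ckpt-12345' for the highest step found, or None."""
    steps = []
    for name in os.listdir(train_dir):
        m = re.fullmatch(r'model\.ckpt-(\d+)\.index', name)
        if m:
            steps.append(int(m.group(1)))  # compare steps as numbers, not strings
    if not steps:
        return None
    return os.path.join(train_dir, 'model.ckpt-%d' % max(steps))
```

Pass the returned string as the --trained_checkpoint_prefix argument of export_inference_graph.py.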

IV. Testing the Model

Open object_detection_tutorial.ipynb in the object_detection directory, or convert it to a Python file, object_detection_tutorial.py. After a few changes it can test our model.

```python
# coding: utf-8
# # Object Detection Demo
# Welcome to the object detection inference walkthrough! This notebook will walk you step by step
# through the process of using a pre-trained model to detect objects in an image. Make sure to follow the
# [installation instructions](https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/installation.md)
# before you start.
from distutils.version import StrictVersion
import numpy as np
import os
import six.moves.urllib as urllib
import sys
import tarfile
import tensorflow as tf
import zipfile

from collections import defaultdict
from io import StringIO
from matplotlib import pyplot as plt
from PIL import Image

# This is needed since the notebook is stored in the object_detection folder.
sys.path.append("..")
from object_detection.utils import ops as utils_ops

# if StrictVersion(tf.__version__) < StrictVersion('1.9.0'):
#     raise ImportError('Please upgrade your TensorFlow installation to v1.9.* or later!')

# ## Env setup
# This is needed to display the images.
# get_ipython().magic(u'matplotlib inline')

# ## Object detection imports
# Here are the imports from the object detection module.
from utils import label_map_util
from utils import visualization_utils as vis_util

# # Model preparation
# Any model exported using the `export_inference_graph.py` tool can be loaded here simply by
# changing `PATH_TO_FROZEN_GRAPH` to point to a new .pb file.
# See the [detection model zoo](https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/detection_model_zoo.md)
# for a list of other models that can be run out-of-the-box with varying speeds and accuracies.

# Our own exported model (no download needed)
MODEL_NAME = 'zhangjn_detction'
# MODEL_FILE = MODEL_NAME + '.tar.gz'
# DOWNLOAD_BASE = 'http://download.tensorflow.org/models/object_detection/'

# Path to frozen detection graph. This is the actual model that is used for the object detection.
PATH_TO_FROZEN_GRAPH = MODEL_NAME + '/frozen_inference_graph.pb'

# List of the strings that is used to add correct label for each box.
PATH_TO_LABELS = os.path.join('data', 'zhangjn.pbtxt')
NUM_CLASSES = 1

# ## Download Model (disabled: we use our own exported model)
# opener = urllib.request.URLopener()
# opener.retrieve(DOWNLOAD_BASE + MODEL_FILE, MODEL_FILE)
# tar_file = tarfile.open(MODEL_FILE)
# for file in tar_file.getmembers():
#     file_name = os.path.basename(file.name)
#     if 'frozen_inference_graph.pb' in file_name:
#         tar_file.extract(file, os.getcwd())

# ## Load a (frozen) Tensorflow model into memory.
detection_graph = tf.Graph()
with detection_graph.as_default():
    od_graph_def = tf.GraphDef()
    with tf.gfile.GFile(PATH_TO_FROZEN_GRAPH, 'rb') as fid:
        serialized_graph = fid.read()
        od_graph_def.ParseFromString(serialized_graph)
        tf.import_graph_def(od_graph_def, name='')

# ## Loading label map
# Label maps map indices to category names, so that when our convolution network predicts `5`,
# we know that this corresponds to `airplane`.
label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
categories = label_map_util.convert_label_map_to_categories(
    label_map, max_num_classes=NUM_CLASSES, use_display_name=True)
category_index = label_map_util.create_category_index(categories)


# ## Helper code
def load_image_into_numpy_array(image):
    (im_width, im_height) = image.size
    return np.array(image.getdata()).reshape(
        (im_height, im_width, 3)).astype(np.uint8)


# # Detection
# Test images: test_images/image3.jpg to image7.jpg (adjust the range to your own numbering).
PATH_TO_TEST_IMAGES_DIR = 'test_images'
TEST_IMAGE_PATHS = [os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i)) for i in range(3, 8)]

# Size, in inches, of the output images.
IMAGE_SIZE = (12, 8)


def run_inference_for_single_image(image, graph):
    with graph.as_default():
        with tf.Session() as sess:
            # Get handles to input and output tensors
            ops = tf.get_default_graph().get_operations()
            all_tensor_names = {output.name for op in ops for output in op.outputs}
            tensor_dict = {}
            for key in ['num_detections', 'detection_boxes', 'detection_scores',
                        'detection_classes', 'detection_masks']:
                tensor_name = key + ':0'
                if tensor_name in all_tensor_names:
                    tensor_dict[key] = tf.get_default_graph().get_tensor_by_name(tensor_name)
            if 'detection_masks' in tensor_dict:
                # The following processing is only for single image
                detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
                detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
                # Reframe is required to translate mask from box coordinates to image coordinates.
                real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)
                detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])
                detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])
                detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(
                    detection_masks, detection_boxes, image.shape[0], image.shape[1])
                detection_masks_reframed = tf.cast(
                    tf.greater(detection_masks_reframed, 0.5), tf.uint8)
                # Follow the convention by adding back the batch dimension
                tensor_dict['detection_masks'] = tf.expand_dims(detection_masks_reframed, 0)
            image_tensor = tf.get_default_graph().get_tensor_by_name('image_tensor:0')

            # Run inference
            output_dict = sess.run(tensor_dict,
                                   feed_dict={image_tensor: np.expand_dims(image, 0)})

            # all outputs are float32 numpy arrays, so convert types as appropriate
            output_dict['num_detections'] = int(output_dict['num_detections'][0])
            output_dict['detection_classes'] = output_dict['detection_classes'][0].astype(np.uint8)
            output_dict['detection_boxes'] = output_dict['detection_boxes'][0]
            output_dict['detection_scores'] = output_dict['detection_scores'][0]
            if 'detection_masks' in output_dict:
                output_dict['detection_masks'] = output_dict['detection_masks'][0]
    return output_dict


for image_path in TEST_IMAGE_PATHS:
    image = Image.open(image_path)
    # The array based representation of the image is used later to draw boxes and labels on it.
    image_np = load_image_into_numpy_array(image)
    # Expand dimensions since the model expects images to have shape: [1, None, None, 3]
    image_np_expanded = np.expand_dims(image_np, axis=0)
    # Actual detection.
    output_dict = run_inference_for_single_image(image_np, detection_graph)
    # Visualization of the results of a detection.
    vis_util.visualize_boxes_and_labels_on_image_array(
        image_np,
        output_dict['detection_boxes'],
        output_dict['detection_classes'],
        output_dict['detection_scores'],
        category_index,
        instance_masks=output_dict.get('detection_masks'),
        use_normalized_coordinates=True,
        line_thickness=8)
    plt.figure(figsize=IMAGE_SIZE)
    plt.imshow(image_np)
    plt.show()  # needed when running as a .py script rather than a notebook
```

1. We do not need to download a model, so delete the download-related code (the whole Download Model part). Then change MODEL_NAME, PATH_TO_LABELS and NUM_CLASSES to your own values.

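2. Put a few test pictures into the test_images folder, named image<number>.jpg. Then the only code change needed is the number range in this line:

```python
TEST_IMAGE_PATHS = [os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i)) for i in range(3, 8)]
```

My pictures are numbered 3 to 7, hence range(3, 8).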
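Instead of renaming pictures to image3.jpg through image7.jpg and editing range(), you could build TEST_IMAGE_PATHS from every JPEG in the folder. Here is a sketch of that swap; the helper name is hypothetical. It matches only .jpg/.jpeg by default because load_image_into_numpy_array() assumes 3-channel RGB images, and on Linux the glob patterns are case-sensitive.

```python
import glob
import os


def list_test_images(directory='test_images', patterns=('*.jpg', '*.jpeg')):
    """Return the sorted paths of all files in `directory` matching `patterns`."""
    paths = []
    for pattern in patterns:
        paths.extend(glob.glob(os.path.join(directory, pattern)))
    return sorted(paths)


# Drop-in replacement for the range-based list in the tutorial script:
# TEST_IMAGE_PATHS = list_test_images(PATH_TO_TEST_IMAGES_DIR)
```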

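When running it as a Python file instead of a notebook, add the following after plotting so the images are actually displayed (the listing above already includes it):

```python
plt.show()
```

Then just run it: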

```shell
python3 object_detection_tutorial.py
```
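The script only draws boxes. If you also want the detections as numbers, you can post-process the arrays returned by run_inference_for_single_image(). Each row of detection_boxes is a normalized [ymin, xmin, ymax, xmax] box. The helper below is hypothetical and uses only the standard library. Its 0.5 default threshold is chosen to match the default min_score_thresh of visualize_boxes_and_labels_on_image_array.

```python
def detections_to_pixels(boxes, scores, classes, category_index, width, height,
                         min_score=0.5):
    """Convert normalized [ymin, xmin, ymax, xmax] boxes to pixel (left, top, right, bottom)
    tuples, keeping only detections whose score is at least min_score."""
    results = []
    for box, score, cls in zip(boxes, scores, classes):
        if score < min_score:
            continue
        ymin, xmin, ymax, xmax = box
        name = category_index.get(int(cls), {}).get('name', 'N/A')
        results.append((name, round(float(score), 3),
                        (round(xmin * width), round(ymin * height),
                         round(xmax * width), round(ymax * height))))
    return results


category_index = {1: {'id': 1, 'name': 'ZhangJN'}}
boxes = [[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 1.0, 1.0]]
print(detections_to_pixels(boxes, [0.92, 0.1], [1, 1], category_index, 500, 400))
# [('ZhangJN', 0.92, (100, 40, 300, 200))]
```

In the tutorial loop you would call it with output_dict['detection_boxes'], output_dict['detection_scores'], output_dict['detection_classes'], category_index and the width and height from image.size.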

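That is the whole training process, and once you are familiar with it, it is fairly simple. You may run into various problems along the way, many of them caused by version differences. The most annoying thing about TensorFlow is that it updates quickly with large changes, and some versions are not compatible with each other. So don't panic when something breaks. Search Google or Baidu and you can usually find the answer. If it is a version problem and you cannot upgrade right away, find which difference between your version and the latest one causes it, and change the method calls in the code to match your version.

When I used 1.4, I often ran into such differences. For example, the newer tf.contrib.data.parallel_interleave() method does not exist in tf.contrib.data in 1.4. Likewise, in 1.10 the tf.keras.Model() class can also be accessed as tf.keras.models.Model(), but 1.4 supports only the latter, so a program that uses the former must be modified to run on 1.4. After a while I upgraded TensorFlow to 1.10 anyway, because I had to change too much and could not stand it any more. Upgrading is a bit of a hassle, since the NVIDIA driver, CUDA and cuDNN all have to be updated too. Fortunately I knew the routine this time, and the upgrade took only three or four hours.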
