Torch vs Theano

Recently we took a look at Torch 7 and found its data ingestion facilities less than impressive. Torch’s biggest competitor seems to be Theano, a popular deep-learning framework for Python.

It seems that these two have been engaged in a “who is faster” competition for a few years now. It has been documented in the following papers:

[1] J. Bergstra, O. Breuleux, F. Bastien, P. Lamblin, R. Pascanu, G. Desjardins, J. Turian, Y. Bengio - Theano: a CPU and GPU Math Expression Compiler (PDF)

[2] Ronan Collobert, Koray Kavukcuoglu, Clement Farabet - Torch7: A Matlab-like Environment for Machine Learning (PDF)

[3] Frédéric Bastien, Pascal Lamblin, Razvan Pascanu, James Bergstra, Ian Goodfellow, Arnaud Bergeron, Nicolas Bouchard, David Warde-Farley, Yoshua Bengio - Theano: new features and speed improvements (arXiv)

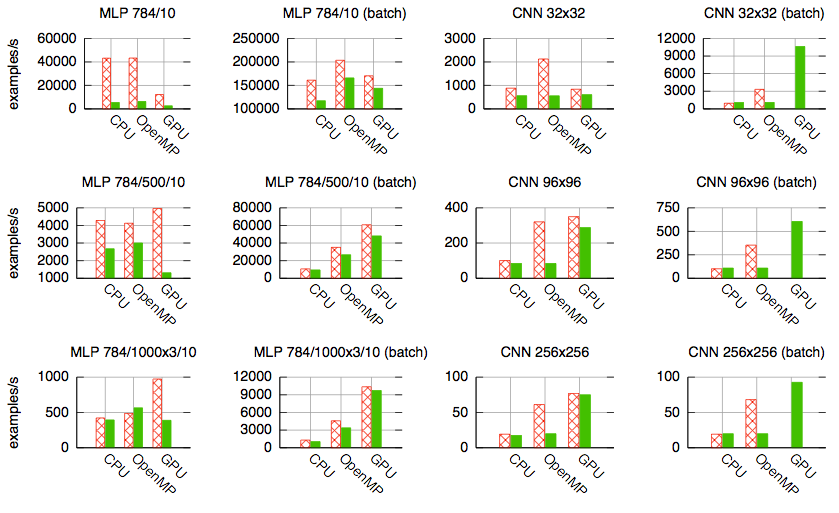

A figure from the Torch7 paper [2]. Torch - red, Theano - green. Higher is better.

And a quote from [3]:

Bergstra et al. (2010) showed that Theano was faster than many other tools available at the time, including Torch5. The following year, Collobert et al. (2011) showed that Torch7 was faster than Theano on the same benchmarks.

The results in the last paper are mixed, if you’re wondering.

The latest act in this friendly competition, which can be seen as one between Bengio’s and LeCun’s groups, appears to be about FFT convolutions, first available in Theano and recently open-sourced by Facebook in Torch.

As a side note, the press really jumped at this second event with headlines about turbo-charging deep learning and the like. Probably the allure of Facebook and deep learning in the same sentence.

Let’s look at the convnet benchmarks by Soumith Chintala. He is a Facebook/Torch guy, and yet Theano’s convolution layer is reported to be the fastest at the time of writing. We’re still waiting for those fbfft results.

Anyway, speed isn’t everything and there’s more to life than FFT convolutions. From a developer’s perspective, minor differences in speed matter less than other factors, like ease of use. Which leads us to what Soumith had to say about Torch, according to VentureBeat:

It’s like building some kind of electronic contraption or, like, a Lego set. You just can plug in and plug out all these blocks that have different dynamics and that have complex algorithms within them.

At the same time Torch is actually not extremely difficult to learn — unlike, say, the Theano library.

We’ve made it incredibly easy to use. We introduce someone to Torch, and they start churning out research really fast.

Well, you already know our opinion about the “incredibly easy” bit. Torch is not really a Matlab-like environment. Matlab, with all its shortcomings, is a very well polished piece of software with exemplary documentation. Torch, on the other hand, is rather rough around the edges.

Besides the language gap, that’s one of the reasons you don’t see much Torch usage outside Facebook and DeepMind. At the same time, libraries using Theano have been springing up like mushrooms after rain (you might want to take a look at Sander Dieleman’s Lasagne and at Blocks). It is hard to beat the familiar and rich Python ecosystem.

Theano tutorials

- The official tutorial

- Alec Radford’s talk and corresponding code

- Colin Raffel’s tutorial notebook

- The Portrait of a Machine Learning Priestess

- Best framework for Deep Neural Nets thread at Reddit

P.S. What about Caffe?

Caffe is a fine and very popular piece of software. How does it compare with Torch and Theano? Here’s sieisteinmodel’s answer from Reddit:

Caffe has a pretty different target. More mass market, for people who want to use deep learning for applications. Torch and Theano are more tailored towards people who want to use it for research on DL itself.
