DeepLearning - Regularization

I have finished the first course in the DeepLearning.ai series. The assignments are relatively easy, but they do provide many interesting insights. You can find summary notes on the first course in my previous 2 posts.

Now let's move on to the second course, Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization. The second course mainly focuses on the details of model tuning, including regularization, batching, optimization, and some other techniques. Let's start with regularization.

Regularization is used to fight model overfitting. Almost every model overfits to some extent, because the distributions of your training and test sets can't be exactly the same, so your model will always learn something unique to the training set. That's why we need regularization. It tries to make your model generalize better without sacrificing too much performance: the trade-off between bias (performance) and variance (generalization).

Any feedback is welcome, and please correct me if I got anything wrong.

1. Parameter Regularization - L2

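L2 regularization is also popular outside neural networks; it is frequently used in regression, among other models. With L2 regularization, the loss function of the NN becomes the following, where \(L(a, y)\) is the original loss and \(\alpha\) is the regularization strength:

\[ \tilde{L}(w) = L(a, y) + \frac{\alpha}{2}\sum_{l}\|w^{[l]}\|_F^2 \]

For layer \(l\), the regularization term above can be calculated as follows:

\[ \|w^{[l]}\|_F^2 = \sum_{i}\sum_{j}\left(w_{ij}^{[l]}\right)^2 \]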
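As a rough illustration of the penalty term, here is a minimal sketch. It assumes the penalty has the form \(\frac{\alpha}{2}\sum_l \|w^{[l]}\|_F^2\); the function name `l2_penalty`, the two toy weight matrices, and the value of `alpha` are all made up for this example.

```python
import numpy as np

def l2_penalty(weights, alpha):
    """Return (alpha / 2) * sum of squared Frobenius norms of the weight matrices."""
    return 0.5 * alpha * sum(np.sum(np.square(w)) for w in weights)

# Two hypothetical layers with small hand-picked weights.
w1 = np.array([[1.0, -2.0], [0.5, 0.0]])
w2 = np.array([[3.0], [-1.0]])

# Squared norms are 5.25 and 10.0, so the penalty is 0.05 * 15.25.
penalty = l2_penalty([w1, w2], alpha=0.1)
```

The penalty is added to the data loss before computing gradients, so every weight contributes to the loss in proportion to its square.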

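But why does adding L2 regularization help reduce overfitting? We can get a rough idea from its other name: weight decay. Basically, L2 works by pushing the weights close to 0. This is more obvious from the gradient descent update, where \(\epsilon\) is the learning rate and \(\alpha\) is the regularization strength:

\[ \nabla_w \tilde{L}(w) = \nabla_w L(a, y) + \alpha w \]

\[ w \leftarrow w - \epsilon\left(\nabla_w L(a, y) + \alpha w\right) \]

Cleaning it up further, we get the following:

\[ w \leftarrow (1 - \alpha\epsilon)\,w - \epsilon\,\nabla_w L(a, y) \]

Compared with the original update, we can see that with L2 regularization the parameter \(w\) is shrunk by a factor of \((1- \alpha\epsilon)\) in each iteration.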
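To see the shrinkage in isolation, here is a small sketch of the update with weight decay. The function name `sgd_step_with_weight_decay` and the values of `alpha` and `epsilon` are illustrative assumptions; the gradient of the data loss is set to zero on purpose so that only the decay factor acts on the weights.

```python
import numpy as np

def sgd_step_with_weight_decay(w, grad, alpha, epsilon):
    """One gradient descent step on loss + (alpha / 2) * ||w||^2."""
    return (1.0 - alpha * epsilon) * w - epsilon * grad

w0 = np.array([2.0, -4.0])
zero_grad = np.zeros_like(w0)  # pretend the data loss is flat here
alpha, epsilon = 0.5, 0.1      # illustrative values only

w = w0.copy()
for _ in range(3):
    w = sgd_step_with_weight_decay(w, zero_grad, alpha, epsilon)
# Each step multiplies w by (1 - 0.05), so after 3 steps w equals w0 * 0.95**3.
```

With a non-zero data gradient, the same factor is applied first and the usual gradient step is then taken on top of it.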

You may have the same confusion as I did: why would shrinking the weights help reduce overfitting? I found 2 ways to convince myself. Let's go with the intuitive one first.

(1). Some intuition into weight decay

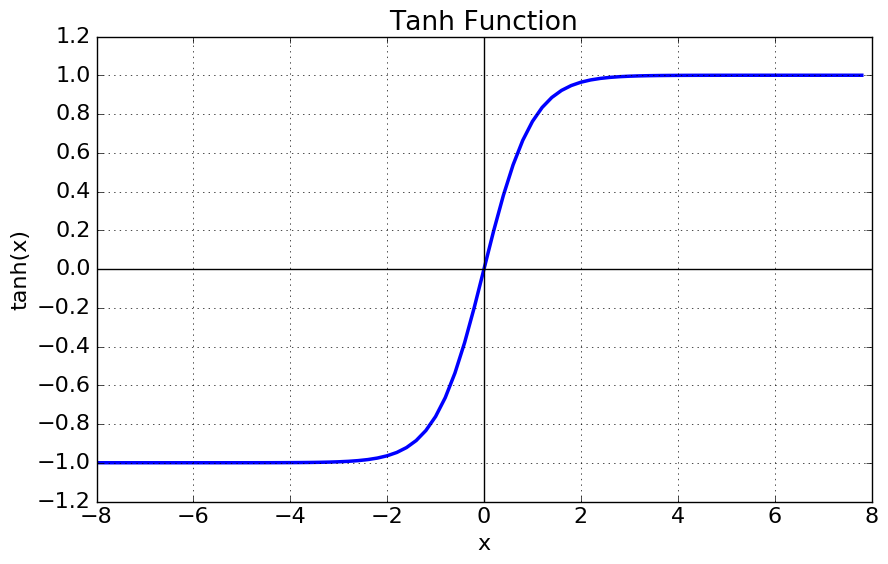

Andrew Ng gives the following intuition, which is simple but very convincing. The figure shows the tanh function, which is frequently used as the activation function in hidden layers.

We can see that when the input is around 0, the function is almost linear. The non-linearity becomes more significant as the input gets bigger. By pushing the weights close to 0, we keep the input to the activation small, so the activation function behaves more linearly. Therefore weight decay can lead to a less complicated model and less overfitting.
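A quick numerical check of this intuition, as a sketch: it compares tanh with the identity function on a narrow and a wide input range. The ranges (plus or minus 0.1 and plus or minus 3) are arbitrary choices for illustration.

```python
import numpy as np

z_small = np.linspace(-0.1, 0.1, 5)  # inputs close to 0
z_large = np.linspace(-3.0, 3.0, 5)  # inputs far from 0

# Largest gap between tanh(z) and the straight line y = z on each range.
err_small = np.max(np.abs(np.tanh(z_small) - z_small))
err_large = np.max(np.abs(np.tanh(z_large) - z_large))
```

Near zero the gap is tiny, so the unit is effectively linear there, while on the wide range the gap is large because tanh saturates.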

(2). Some math of weight decay

Of course, we can also show the effect of weight decay mathematically.

Let's use \(w^*\) to denote the optimal weights of the original model without regularization: \(w^* = \arg\min_w L(a, y)\).

\(H\) is the Hessian matrix at \(w^*\), where \(H_{ij} = \frac{\partial^2 L}{\partial w_i \partial w_j}\).

\(J\) is the Jacobian (gradient) at \(w^*\), where \(J_i = \frac{\partial L}{\partial w_i}\).

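We can use a second-order Taylor expansion around \(w^*\) to get an approximate form of the loss function:

\[ \hat{L}(w) = L(w^*) + J^T(w - w^*) + \frac{1}{2}(w - w^*)^T H (w - w^*) \]

Because \(w^*\) is optimal, \(J=0\) and \(H\) is positive semi-definite. So the above can be simplified as below:

\[ \hat{L}(w) = L(w^*) + \frac{1}{2}(w - w^*)^T H (w - w^*) \]

Adding the L2 term \(\frac{\alpha}{2}w^Tw\), the gradient of the regularized loss must be zero at the new optimum \(\tilde{w}\):

\[ \alpha\tilde{w} + H(\tilde{w} - w^*) = 0 \]

And we get the new optimum:

\[ \tilde{w} = (H + \alpha I)^{-1} H w^* \]

Because \(H\) is real, symmetric, and positive semi-definite, we can decompose it into \(H = Q\Lambda{Q^T}\), where the columns of \(Q\) are orthonormal eigenvectors and \(\Lambda\) is the diagonal matrix of eigenvalues. Each eigenvalue \(\lambda_i\) can be interpreted as the importance of the corresponding direction in weight space. So the above becomes:

\[ \tilde{w} = Q(\Lambda + \alpha I)^{-1}\Lambda Q^T w^* \]

The component of \(w^*\) along the \(i\)-th eigenvector is scaled by \(\frac{\lambda_i}{\lambda_i+\alpha}\). If \(\lambda_i\) is much bigger than \(\alpha\), regularization has little impact; if \(\lambda_i\) is much smaller than \(\alpha\), that component is shrunk almost to 0. Basically, L2 shrinks the weights that are not important to the model.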
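The scaling can be checked numerically on a toy problem. This is only a sketch under the quadratic approximation: the Hessian `H`, the unregularized optimum `w_star`, and `alpha` are hypothetical values chosen so that one direction matters a lot (eigenvalue 10) and the other very little (eigenvalue 0.1).

```python
import numpy as np

H = np.array([[10.0, 0.0],
              [0.0, 0.1]])     # hypothetical Hessian at w_star
w_star = np.array([1.0, 1.0])  # hypothetical unregularized optimum
alpha = 1.0                    # illustrative regularization strength

# Eigen-decomposition H = Q diag(eigvals) Q^T (eigh is meant for symmetric matrices).
eigvals, Q = np.linalg.eigh(H)
scale = eigvals / (eigvals + alpha)
w_tilde = Q @ np.diag(scale) @ Q.T @ w_star

# The same optimum, obtained directly from (H + alpha I) w = H w_star.
w_direct = np.linalg.solve(H + alpha * np.eye(2), H @ w_star)
```

Here the important direction keeps about 91% of its weight (10/11), while the unimportant one keeps only about 9% (0.1/1.1).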

2. Dropout

Dropout is a very simple yet very powerful regularization technique. It works by randomly setting the outputs of neurons in a hidden layer to 0. It can be easily understood using the following code:

import numpy as np

keep_probs = 0.8  # probability of keeping each neuron (example value)

a = np.random.randn(4, 5)  # example hidden-layer activations

drop = np.random.rand(*a.shape) < keep_probs  # boolean mask, True means keep

a = np.multiply(drop, a)  # randomly turn off neurons

When keep_probs gets lower, more neurons are shut down. Here are a few ways to understand why dropout can reduce overfitting.
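The snippet above only applies the mask. A commonly used variant, often called inverted dropout, also divides the surviving activations by the keep probability so that their expected value stays the same and nothing needs to change at test time. The sketch below assumes that variant; the function name `dropout_forward` and its `training` flag are hypothetical, not something from the course code.

```python
import numpy as np

def dropout_forward(a, keep_prob, rng, training=True):
    """Apply inverted dropout to activations `a`; return (output, mask)."""
    if not training:
        return a, None  # at test time the activations pass through unchanged
    mask = rng.random(a.shape) < keep_prob  # True means the neuron is kept
    return a * mask / keep_prob, mask       # rescale to preserve the expected value

rng = np.random.default_rng(0)
a = np.ones((1000, 50))  # toy activations, all equal to 1
a_drop, mask = dropout_forward(a, keep_prob=0.8, rng=rng)
```

With all-ones input, each output is either 0 or 1.25, and the mean stays close to 1, which is the point of the rescaling.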

(1). Intuition 1 - spread out weight

One way to understand dropout is that it helps spread out the weights across neurons in each hidden layer.

It is possible that the original model puts high weight on a few neurons and much lower weight on the others. With dropout, the lower-weighted neurons will end up with relatively higher weights, because the network cannot rely on any single neuron being present.

A similar method is also used in Random Forest. In each iteration, we randomly select a subset of columns to build the tree, so that the less important columns have a higher probability of getting picked.

(2). Intuition 2 - Bagging

Bagging (bootstrap aggregating) is used to reduce the variance of a model by averaging across several models. It is very popular in Kaggle competitions; many first-place models are actually an average of several models.

It is just like how investing in a portfolio is generally less risky than investing in a single asset. Because the assets are not perfectly correlated, the variance of the portfolio is smaller than the weighted average of the variances of the individual assets.
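The portfolio argument can be made concrete with a small calculation. This sketch assumes an idealized setting that is not in the original notes: `k` models whose errors all have the same variance `sigma2` and the same pairwise correlation `rho`, combined with equal weights. The function name `variance_of_average` is made up for this example.

```python
import numpy as np

def variance_of_average(k, sigma2, rho):
    """Variance of the equal-weight average of k equally correlated variables."""
    # Covariance matrix: sigma2 on the diagonal, rho * sigma2 elsewhere.
    cov = sigma2 * (rho * np.ones((k, k)) + (1.0 - rho) * np.eye(k))
    weights = np.full(k, 1.0 / k)
    return weights @ cov @ weights

single = variance_of_average(1, sigma2=1.0, rho=0.3)     # one model on its own
averaged = variance_of_average(10, sigma2=1.0, rho=0.3)  # average of 10 models
```

In this setting the variance of the average is sigma2 * (rho + (1 - rho) / k), so it drops from 1.0 to 0.37 here, and it can never go below rho * sigma2 no matter how many models are averaged.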

To some extent, dropout is also bagging. It randomly shuts down neurons during forward propagation, leading to a slightly different neural network (a sub-network) in each iteration.

The difference is that in bagging all the models are independent, while with dropout all the sub-networks share parameters. We can view the final neural network as an aggregation of all the sub-networks.

(3). Intuition 3 - Noise injection

Dropout can also be viewed as injecting noise into the hidden layers.

It multiplies each hidden neuron by a randomly generated indicator (0/1). Multiplicative noise is sometimes regarded as better than additive noise, because with additive noise the model can easily undo it by learning bigger weights, which makes the added noise less significant relative to the signal.
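A small simulation can illustrate why scaling up helps against additive noise but not against a multiplicative mask. This is only a sketch: the noise standard deviation of 1, the keep probability of 0.8, and the helper `relative_spread` (standard deviation divided by the absolute mean) are assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
noise = rng.normal(0.0, 1.0, size=10_000)  # additive Gaussian noise
mask = rng.random(10_000) < 0.8            # multiplicative 0/1 dropout mask

def relative_spread(values):
    """Standard deviation relative to the mean, a rough noise-to-signal ratio."""
    return np.std(values) / np.abs(np.mean(values))

results = {}
for scale in (1.0, 100.0):
    h = np.full(noise.shape, scale)  # a constant activation of size `scale`
    results[scale] = (relative_spread(h + noise), relative_spread(h * mask))
```

Making the activation 100 times larger shrinks the relative effect of the additive noise by roughly the same factor, while the relative effect of the multiplicative mask stays the same.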

3. Early Stopping

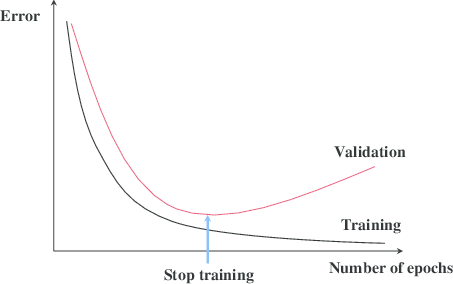

This technique is widely used because it is very easy to implement and very efficient. You just need to stop training after a certain threshold, which is a hyperparameter to tune.

The best part of this method is that it doesn't change anything in the model training, and it can easily be combined with other methods.

Because the final goal of the model is better performance on the test set, the stopping threshold is set on the validation set. Basically, we should stop training when the validation error has not decreased for N iterations.
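The stopping rule can be sketched as follows. This is a minimal illustration, not a full training loop: the function name `early_stopping_epoch`, the `patience` parameter (the N above), and the list of validation errors are all made up for the example.

```python
def early_stopping_epoch(val_errors, patience):
    """Return (stop_epoch, best_epoch) for a sequence of validation errors."""
    best_error = float("inf")
    best_epoch = -1
    for epoch, error in enumerate(val_errors):
        if error < best_error:
            # New best validation error: remember it and keep training.
            best_error, best_epoch = error, epoch
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` epochs in a row: stop here.
            return epoch, best_epoch
    return len(val_errors) - 1, best_epoch

# Hypothetical validation errors: improving until epoch 3, then getting worse.
val_errors = [0.9, 0.7, 0.6, 0.55, 0.56, 0.57, 0.58, 0.6]
stop_epoch, best_epoch = early_stopping_epoch(val_errors, patience=3)
```

In practice you would also save the model parameters at `best_epoch` and restore them after stopping, since the last epochs were trained past the best point.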

4. Other methods

There are many other techniques, such as data augmentation, noise robustness, and multi-task learning. They are mainly used in more specific areas. We will go through them later.

Reference

- Ian Goodfellow, Yoshua Bengio, Aaron Courville, "Deep Learning"

- Deeplearning.ai https://www.deeplearning.ai/
