DeepLearning Intro - sigmoid and shallow NN

This is a series of Machine Learning summary note. I will combine the deep learning book with the deeplearning open course . Any feedback is welcomed!

First let's go through some basic NN concept using Bernoulli classification problem as an example.

Activation Function

1.Bernoulli Output

1. Definition

When dealing with Binary classification problem, what activavtion function should we use in the output layer?

Basically given \(x \in R^n\), How to get \(P(y=1|x)\) ?

2. Loss function

Let $\hat{y} = P(y=1|x) $, We would expect following output

\[P(y|x) = \begin{cases}

\hat{y} & \quad when & y= 1\\

1-\hat{y} & \quad when & y= 0\\

\end{cases}

\]

Above can be simplified as

\[P(y|x)= \hat{y}^{y}(1-\hat{y})^{1-y}\]

Therefore, the maximum likelihood of m training samples will be

\[\theta_{ML} = argmax\prod^{m}_{i=1}P(y^i|x^i)\]

As ususal we take the log of above function and get following. Actually for gradient descent log has other advantages, which we will discuss later.

\[log(\theta_{ML}) = argmax\sum^{m}_{i=1} ylog(\hat{y})+(1-y)log(1-\hat{y}) \]

And the cost function for optimization is following

\[J(w,b) = \sum ^{m}_{i=1}L(y^i,\hat{y}^i)= -\sum^{m}_{i=1}ylog(\hat{y})+(1-y)log(1-\hat{y})\]

The cost function is the sum of loss from m training samples, which measures the performance of classification algo.

And yes here it is exactly the negative of log likelihood. While Cost function can be different from negative log likelihood, when we apply regularization. But here let's start with simple version.

So here comes our next problem, how can we get 1-dimension $ log(\hat{y})$, given input \(x\), which is n-dimension vector ?

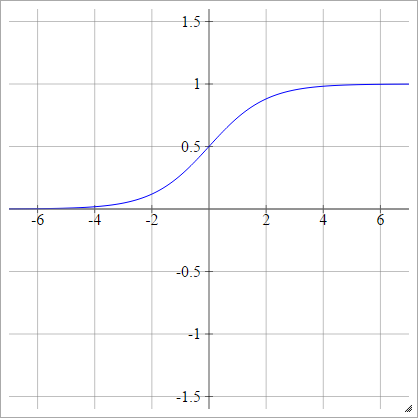

3. Activtion function - Sigmoid

Let \(h\) denotes the output from the previous hidden layer that goes into the final output layer. And a linear transformation is applied to \(h\) before activation function.

Let \(z = w^Th +b\)

The assumption here is

\[log(\hat{y}) = \begin{cases}

z & \quad when & y= 1\\

0 & \quad when & y= 0\\

\end{cases}

\]

Above can be simplified as

\[log(\hat{y}) = yz\quad \to \quad \hat{y} = exp(yz)\]

This is an unnormalized distribution of \(\hat{y}\). Because \(y\) denotes probability, we need to further normalize it to $ [0,1]$.

\[\hat{y} = \frac{exp(yz)} {\sum^1_{y=0}exp(yz)} \\

=\frac{exp(z)}{1+exp(z)}\\

\quad \quad \quad \quad = \frac{1}{1+exp(-z)} = \sigma(z)

\]

Bingo! Here we go - Sigmoid Function: \(\sigma(z) = \frac{1}{1+exp(-z)}\)

\[p(y|x) = \begin{cases}

\sigma(z) & \quad when & y= 1\\

1-\sigma(z) & \quad when & y= 0\\

\end{cases}

\]

Sigmoid function has many pretty cool features like following:

\[ 1- \sigma(x) = \sigma(-x) \\

\frac{d}{dx} \sigma(x) = \sigma(x)(1-\sigma(x)) \\

\quad \quad = \sigma(x)\sigma(-x)

\]

Using the first feature above, we can further simply the bernoulli output into following:

\[p(y|x) = \sigma((2y-1)z)\]

4. gradient descent and back propagation

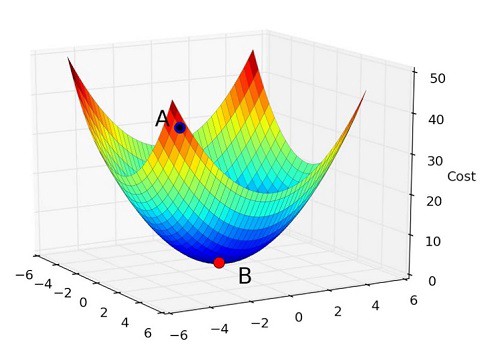

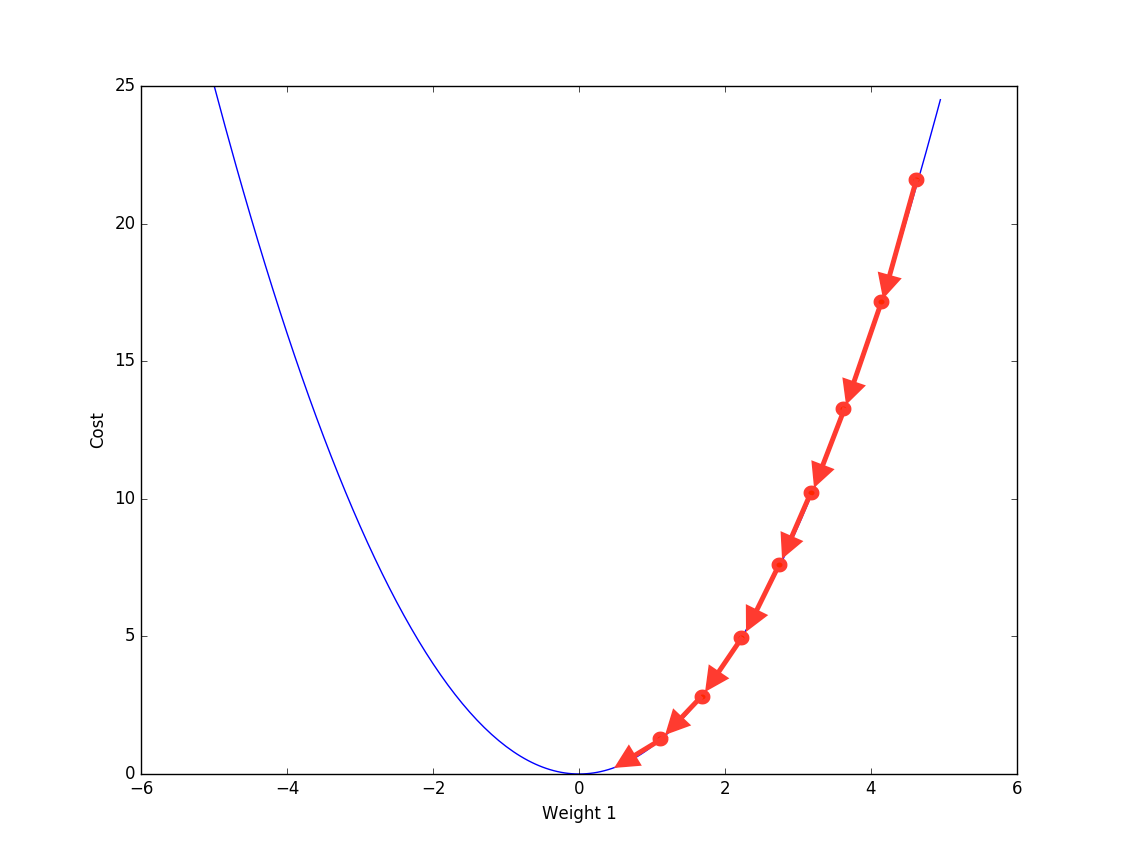

Now we have target cost fucntion to optimize. How does the NN learn from training data? The answer is -- Back Propagation.

Actually back propagation is not some fancy method that is designed for Neural Network. When training sample is big, we can use back propagation to train linear regerssion too.

Back Propogation is iteratively using the partial derivative of cost function to update the parameter, in order to reach local optimum.

$ \

Looping \quad m \quad samples :\

w= w - \frac{\partial J(w,b)}{\partial w} \

b= b - \frac{\partial J(w,b)}{\partial b}

$

Bascically, for each training sample \((x,y)\), we compare the \(y\) with \(\hat{y}\) from output layer. Get the difference, and compute which part of difference is from which parameter( by partial derivative). And then update the parameter accordingly.

And the derivative of sigmoid function can be calcualted using chaining method:

For each training sample, let \(\hat{y}=a = \sigma(z)\)

\[ \frac{\partial L(a,y)}{\partial w} =

\frac{\partial L(a,y)}{\partial a} \cdot

\frac{\partial a}{\partial z} \cdot

\frac{\partial z}{\partial w}\]

Where

1.$\frac{\partial L(a,y)}{\partial a}

=-\frac{y}{a} + \frac{1-y}{1-a} $

Given loss function is

\(L(a,y) = -(ylog(a) + (1-y)log(1-a))\)

2.\(\frac{\partial a}{\partial z} = \sigma(z)(1-\sigma(z)) = a(1-a)\).

See above for sigmoid features.

3.\(\frac{\partial z}{\partial w} = x\)

Put them together we get :

\[ \frac{\partial L(a,y)}{\partial w} = (a-y)x\]

This is exactly the update we will have from each training sample \((x,y)\) to the parameter \(w\).

5. Entire work flow.

Summarizing everything. A 1-layer binary classification neural network is trained as following:

- Forward propagation: From \(x\), we calculate \(\hat{y}= \sigma(z)\)

- Calculate the cost function \(J(w,b)\)

- Back propagation: update parameter \((w,b)\) using gradient descent.

- keep doing above until the cost function stop improving (improment < certain threshold)

6. what's next?

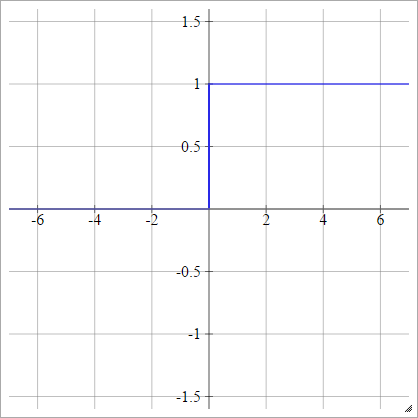

When NN has more than 1 layer, there will be hidden layers in between. And to get non-linear transformation of x, we also need different types of activation function for hidden layer.

However sigmoid is rarely used as hidden layer activation function for following reasons

- vanishing gradient descent

the reason we can't use [left] as activation function is because the gradient is 0 when \(z>1 ,z <0\).

Sigmoid only solves this problem partially. Becuase \(gradient \to 0\), when \(z>1 ,z <0\).

| \(p(y=1\|x)= max\{0,min\{1,z\}\}\) | \(p(y=1\|x)= \sigma(z)\) |

|---|---|

|

|

- non-zero centered

To be continued

Reference

- Ian Goodfellow, Yoshua Bengio, Aaron Conrville, "Deep Learning"

- Deeplearning.ai https://www.deeplearning.ai/

DeepLearning Intro - sigmoid and shallow NN的更多相关文章

- Sigmoid function in NN

X = [ones(m, ) X]; temp = X * Theta1'; t = size(temp, ); temp = [ones(t, ) temp]; h = temp * Theta2' ...

- Deeplearning - Overview of Convolution Neural Network

Finally pass all the Deeplearning.ai courses in March! I highly recommend it! If you already know th ...

- DeepLearning - Regularization

I have finished the first course in the DeepLearnin.ai series. The assignment is relatively easy, bu ...

- DeepLearning - Forard & Backward Propogation

In the previous post I go through basic 1-layer Neural Network with sigmoid activation function, inc ...

- Pytorch_第六篇_深度学习 (DeepLearning) 基础 [2]---神经网络常用的损失函数

深度学习 (DeepLearning) 基础 [2]---神经网络常用的损失函数 Introduce 在上一篇"深度学习 (DeepLearning) 基础 [1]---监督学习和无监督学习 ...

- 0802_转载-nn模块中的网络层介绍

0802_转载-nn 模块中的网络层介绍 目录 一.写在前面 二.卷积运算与卷积层 2.1 1d 2d 3d 卷积示意 2.2 nn.Conv2d 2.3 转置卷积 三.池化层 四.线性层 五.激活函 ...

- Neural Networks and Deep Learning

Neural Networks and Deep Learning This is the first course of the deep learning specialization at Co ...

- 关于BP算法在DNN中本质问题的几点随笔 [原创 by 白明] 微信号matthew-bai

随着deep learning的火爆,神经网络(NN)被大家广泛研究使用.但是大部分RD对BP在NN中本质不甚清楚,对于为什这么使用以及国外大牛们是什么原因会想到用dropout/sigmoid ...

- Pytorch实现UNet例子学习

参考:https://github.com/milesial/Pytorch-UNet 实现的是二值汽车图像语义分割,包括 dense CRF 后处理. 使用python3,我的环境是python3. ...

随机推荐

- Django开发BUG汇总

使用版本知悉 limengjiedeMacBook-Pro:~ limengjie$ python --version Python :: Anaconda, Inc. limengjiedeMacB ...

- JasperReport4.6生成PDF中文

Web项目中PDF显示中文 本人无奈使用JasperReport4.6,因为这本书(好像也是唯一的一本国内的介绍JasperReport的书), 选择"文件"→New命令,弹出一个 ...

- 一点一点看JDK源码(六)java.util.LinkedList前篇之链表概要

一点一点看JDK源码(六)java.util.LinkedList前篇之链表概要 liuyuhang原创,未经允许禁止转载 本文举例使用的是JDK8的API 目录:一点一点看JDK源码(〇) 1.什么 ...

- nopCommerce电子商务平台 安装教程(图文)

nopCommerce是一个通用的电子商务平台,适合每个商家的需要:它强大的企业和小型企业网站遍布世界各地的公司销售实体和数字商品.nopCommerce是一个透明且结构良好的解决方案,它结合了开源和 ...

- vue 样式渲染,添加删除元素

<template> <div> <ul> <li v-for="(item,index) in cartoon" :key=" ...

- JavaScript手绘风格的图形库RoughJS使用指南

RoughJS是一个轻量级的JavaScript图形库(压缩后约9KB),可以让你在网页上绘制素描风格.手绘样式般的图形.RoughJS定义了绘制直线,曲线,圆弧,多边形,圆和椭圆的图元,同时它还支持 ...

- MAC系统 输入管理员账户密码 登录不上

mac新系统改密码~管理员 升级10.13.2后,很多不会操作了, 那天把系统管理员设置成了普通管理,就不能打开个别软件了, 贼尴尬~~~ 后来找blog才解决,现在分享下~~ http://www. ...

- mysql的docker化安装

mysql版本有很多,先看下各类版本号说明: 3.X至5.1.X:这是早期MySQL的版本.常见早期的版本有:4.1.7.5.0.56等. 5.4.X到5.7.X:这是为了整合MySQL AB公司社区 ...

- 能够还原jQuery1.8的toggle的功能的插件

下面这个jQuery插件能够还原1.8的toggle的功能,如果你需要,可以直接把下面这段代码拷贝到你的jQuery里面,然后跟平时一样使用toggle的功能即可. //toggle plugin f ...

- 【Keil】Keil5的安装和破...

档案的话网上很多的,另外要看你开发的是哪种内核的芯片 如果是STC的,就安装C51 如果是STM的,就安装MDK 当然市面上有很多芯片的,我也没用过那么多种,这里也就不列举了 至于注册机,就是...恩 ...