吴裕雄 python 机器学习——模型选择数据集切分

import numpy as np

from sklearn.model_selection import train_test_split,KFold,StratifiedKFold,LeaveOneOut,cross_val_score #模型选择数据集切分train_test_split模型

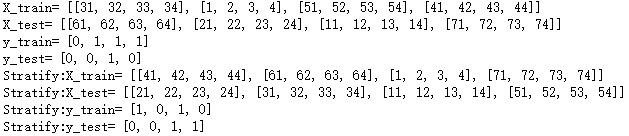

def test_train_test_split():

X=[[1,2,3,4],

[11,12,13,14],

[21,22,23,24],

[31,32,33,34],

[41,42,43,44],

[51,52,53,54],

[61,62,63,64],

[71,72,73,74]]

y=[1,1,0,0,1,1,0,0]

# 切分,测试集大小为原始数据集大小的 40%

X_train, X_test, y_train, y_test = train_test_split(X, y,test_size=0.4, random_state=0)

print("X_train=",X_train)

print("X_test=",X_test)

print("y_train=",y_train)

print("y_test=",y_test)

# 分层采样切分,测试集大小为原始数据集大小的 40%

X_train, X_test, y_train, y_test = train_test_split(X, y,test_size=0.4,random_state=0,stratify=y)

print("Stratify:X_train=",X_train)

print("Stratify:X_test=",X_test)

print("Stratify:y_train=",y_train)

print("Stratify:y_test=",y_test) test_train_test_split()

#模型选择数据集切分KFold模型

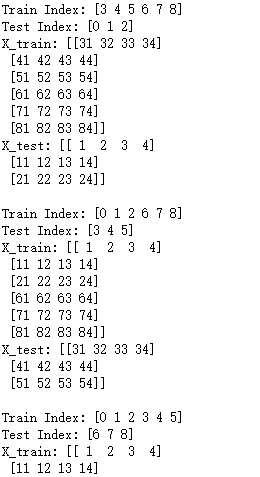

def test_KFold():

X=np.array([[1,2,3,4],

[11,12,13,14],

[21,22,23,24],

[31,32,33,34],

[41,42,43,44],

[51,52,53,54],

[61,62,63,64],

[71,72,73,74],

[81,82,83,84]])

y=np.array([1,1,0,0,1,1,0,0,1])

# 切分之前不混洗数据集

folder=KFold(n_splits=3,random_state=0,shuffle=False)

for train_index,test_index in folder.split(X,y):

print("Train Index:",train_index)

print("Test Index:",test_index)

print("X_train:",X[train_index])

print("X_test:",X[test_index])

print("")

# 切分之前混洗数据集

shuffle_folder=KFold(n_splits=3,random_state=0,shuffle=True)

for train_index,test_index in shuffle_folder.split(X,y):

print("Shuffled Train Index:",train_index)

print("Shuffled Test Index:",test_index)

print("Shuffled X_train:",X[train_index])

print("Shuffled X_test:",X[test_index])

print("") test_KFold()

#模型选择数据集切分StratifiedKFold模型

def test_StratifiedKFold():

X=np.array([[1,2,3,4],

[11,12,13,14],

[21,22,23,24],

[31,32,33,34],

[41,42,43,44],

[51,52,53,54],

[61,62,63,64],

[71,72,73,74]]) y=np.array([1,1,0,0,1,1,0,0]) folder=KFold(n_splits=4,random_state=0,shuffle=False)

stratified_folder=StratifiedKFold(n_splits=4,random_state=0,shuffle=False)

for train_index,test_index in folder.split(X,y):

print("Train Index:",train_index)

print("Test Index:",test_index)

print("y_train:",y[train_index])

print("y_test:",y[test_index])

print("") for train_index,test_index in stratified_folder.split(X,y):

print("Stratified Train Index:",train_index)

print("Stratified Test Index:",test_index)

print("Stratified y_train:",y[train_index])

print("Stratified y_test:",y[test_index])

print("") test_StratifiedKFold()

#模型选择数据集切分LeaveOneOut模型

def test_LeaveOneOut():

X=np.array([[1,2,3,4],

[11,12,13,14],

[21,22,23,24],

[31,32,33,34]])

y=np.array([1,1,0,0])

lo=LeaveOneOut()

for train_index,test_index in lo.split(X):

print("Train Index:",train_index)

print("Test Index:",test_index)

print("X_train:",X[train_index])

print("X_test:",X[test_index])

print("") test_LeaveOneOut()

#模型选择数据集切分cross_val_score模型

def test_cross_val_score():

from sklearn.datasets import load_digits

from sklearn.svm import LinearSVC

digits=load_digits() # 加载用于分类问题的数据集

X=digits.data

y=digits.target

# 使用 LinearSVC 作为分类器

result=cross_val_score(LinearSVC(),X,y,cv=10)

print("Cross Val Score is:",result) test_cross_val_score()

吴裕雄 python 机器学习——模型选择数据集切分的更多相关文章

- 吴裕雄 python 机器学习——模型选择验证曲线validation_curve模型

import numpy as np import matplotlib.pyplot as plt from sklearn.svm import LinearSVC from sklearn.da ...

- 吴裕雄 python 机器学习——模型选择学习曲线learning_curve模型

import numpy as np import matplotlib.pyplot as plt from sklearn.svm import LinearSVC from sklearn.da ...

- 吴裕雄 python 机器学习——模型选择回归问题性能度量

from sklearn.metrics import mean_absolute_error,mean_squared_error #模型选择回归问题性能度量mean_absolute_error模 ...

- 吴裕雄 python 机器学习——模型选择分类问题性能度量

import numpy as np import matplotlib.pyplot as plt from sklearn.svm import SVC from sklearn.datasets ...

- 吴裕雄 python 机器学习——模型选择参数优化暴力搜索寻优GridSearchCV模型

import scipy from sklearn.datasets import load_digits from sklearn.metrics import classification_rep ...

- 吴裕雄 python 机器学习——模型选择参数优化随机搜索寻优RandomizedSearchCV模型

import scipy from sklearn.datasets import load_digits from sklearn.metrics import classification_rep ...

- 吴裕雄 python 机器学习——模型选择损失函数模型

from sklearn.metrics import zero_one_loss,log_loss def test_zero_one_loss(): y_true=[1,1,1,1,1,0,0,0 ...

- 吴裕雄 python 机器学习——KNN回归KNeighborsRegressor模型

import numpy as np import matplotlib.pyplot as plt from sklearn import neighbors, datasets from skle ...

- 吴裕雄 python 机器学习——KNN分类KNeighborsClassifier模型

import numpy as np import matplotlib.pyplot as plt from sklearn import neighbors, datasets from skle ...

随机推荐

- HDU1069 Monkey and Banana(dp)

链接:http://acm.hdu.edu.cn/showproblem.php?pid=1069 题意:给定n种类型的长方体,每个类型长方体无数个,要求长方体叠放在一起,且上面的长方体接触面积要小于 ...

- 使用docker布署wordpress

环境准备 本博客使用docker环境搭建,从而做到布署简单 centos7环境(centos6跑docker要升级内核,也不建议这样做) 在centos7上安装好docker环境docker安装文档 ...

- input如何上传文件

1)绑定input[type='file']的change事件 <input @change="uploadPhoto($event)" type="file&qu ...

- spring(四):Resource

Resource Spring的Resource接口代表底层外部资源,提供了对底层外部资源的一致性访问接口. public interface Resource extends InputStream ...

- 题解【洛谷P5483】[JLOI2011]小A的烦恼

我们可以灵活运用\(C++\)的语法来解决此题. 解释一下代码中会出现的语法: \(string::iterator\ it\)表示定义了一个\(string\)类型的迭代器\(it\),\(^*it ...

- 解决linux 中文乱码

解决办法是在文件/etc/profile末尾添加一行 echo 'export LC_ALL="en_US.UTF-8"' >> /etc/profile source ...

- jenkins pipline 几个注意细节

新建jenkins pipline 1)pipeline的脚本语法要正确,sonarqube的projectKey需要做相应的修改 2)先执行一次构建,会报错 3)进到jenkins workspac ...

- Python记:通用的序列操作之成员资格(听起来倒是有些抽象的!)

______________________________永远守护这一尘不染的真心! 要检查特定的值是否包含在序列中,可使用运算符in.它检查是否满足指定的条件,并返回相应的值:满足时返回True, ...

- codeforces C. Primes and Multiplication(快速幂 唯一分解定理)

题目链接:http://codeforces.com/contest/1228/problem/C 题解:给定一个函数f,g,题目有描述其中的表达式含义和两者之间的关系. 然后计算: 首先把给定的x用 ...

- Seekbar扩大点击区域

//扩大点击区域private void enlargeSeekBar() { mContentView.setOnTouchListener(new OnTouchListener() {//mCo ...