Installing Apache Spark on Ubuntu 16.04

Santosh Srinivas

on 07 Nov 2016, tagged on Apache Spark, Analytics, Data Mining

I've finally gotten to a long-pending to-do item: playing with Apache Spark.

The following installation steps worked for me on Ubuntu 16.04.

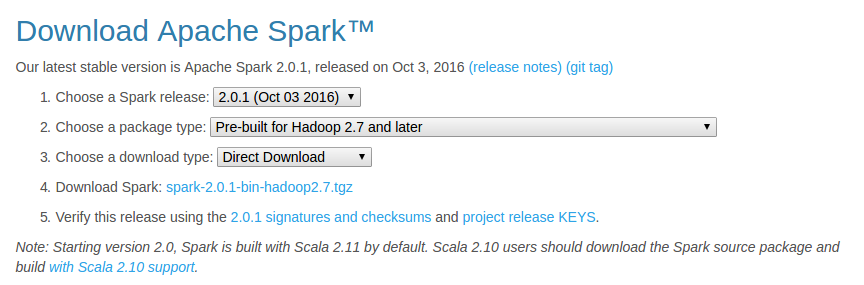

- Download the latest pre-built version from http://spark.apache.org/downloads.html

The below options worked for me:
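Before extracting the archive, it is worth comparing it with the checksum linked from the downloads page. The sketch below is a minimal Python version of that check. It assumes the published checksum is a SHA-512 hex digest that you paste in by hand, and the helper names sha512_of and matches_published_checksum are hypothetical, not part of any Spark tooling.

```python
import hashlib
from pathlib import Path


def sha512_of(path, chunk_size=1 << 20):
    """Return the hex SHA-512 digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha512()
    with open(path, "rb") as fh:
        # Stream the file so the whole tarball never has to sit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path, expected_hex):
    """Compare a file against a SHA-512 digest copied from the downloads page.

    Whitespace is stripped and case is ignored, because some checksum files
    print the digest in upper-case, space-separated groups (assumption).
    """
    expected = "".join(expected_hex.split()).lower()
    return sha512_of(Path(path)) == expected
```

For example, matches_published_checksum(Path.home() / "Downloads" / "spark-2.0.1-bin-hadoop2.7.tgz", "<published digest>") should return True for an intact download.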

- Extract and move Spark

```bash
cd ~/Downloads/
tar xzvf spark-2.0.1-bin-hadoop2.7.tgz
mv spark-2.0.1-bin-hadoop2.7/ spark
sudo mv spark/ /usr/lib/
```
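The same extract-and-move step can also be scripted. This is a minimal Python sketch using the standard tarfile and shutil modules. install_spark_tarball is a hypothetical helper; it assumes the archive unpacks into a single top-level folder, as the mv command above implies, and that the destination is writable, so run it with sudo when targeting /usr/lib.

```python
import shutil
import tarfile
import tempfile
from pathlib import Path


def install_spark_tarball(tarball, dest_parent, name="spark"):
    """Extract a Spark .tgz and install its top-level folder as dest_parent/name.

    Mirrors: tar xzvf ...; mv spark-... spark; sudo mv spark/ /usr/lib/
    """
    dest = Path(dest_parent) / name
    if dest.exists():
        # shutil.move would otherwise nest the new folder inside the old one.
        raise FileExistsError(f"{dest} already exists")
    with tempfile.TemporaryDirectory() as staging:
        with tarfile.open(tarball, "r:gz") as tar:
            # Only extract archives you trust (e.g. after a checksum check).
            tar.extractall(staging)
        entries = list(Path(staging).iterdir())
        if len(entries) != 1 or not entries[0].is_dir():
            raise ValueError("expected a single top-level directory in the archive")
        shutil.move(str(entries[0]), str(dest))
    return dest
```

For example, install_spark_tarball(Path.home() / "Downloads" / "spark-2.0.1-bin-hadoop2.7.tgz", "/usr/lib") would leave the files in /usr/lib/spark. Extracting into a temporary staging directory first means a failed extraction does not leave a half-populated /usr/lib/spark behind.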

- Install SBT

As described on the sbt download page:

```bash
echo "deb https://dl.bintray.com/sbt/debian /" | sudo tee -a /etc/apt/sources.list.d/sbt.list
sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 2EE0EA64E40A89B84B2DF73499E82A75642AC823
sudo apt-get update
sudo apt-get install sbt
```

- Make sure Java is installed

Running java -version shows whether it is. If it is not, install Oracle Java 8:

```bash
sudo apt-add-repository ppa:webupd8team/java
sudo apt-get update
sudo apt-get install oracle-java8-installer
```
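To script the "is Java installed?" check, note that java -version writes its banner to stderr, and that Java 8 reports itself as 1.8.x while Java 9 and later report 9, 11, 17 and so on. A minimal Python sketch under those assumptions (java_major_version and installed_java_major are hypothetical helper names):

```python
import re
import shutil
import subprocess


def java_major_version(version_output):
    """Extract the major Java version from `java -version` output.

    Handles the legacy "1.8.0_111" scheme (Java 8 and earlier) and the newer
    "11.0.2" / "17" scheme. Returns None if no version string is found.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first, second = int(match.group(1)), match.group(2)
    # Java 8 and earlier report "1.<major>...", so the major is the second field.
    if first == 1 and second is not None:
        return int(second)
    return first


def installed_java_major():
    """Run `java -version` and parse it; return None if java is not on PATH."""
    if shutil.which("java") is None:
        return None
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    # The banner goes to stderr; stdout is included just in case.
    return java_major_version(result.stderr + result.stdout)
```

Once oracle-java8-installer has finished, installed_java_major() should return 8.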

- Configure Spark

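```bash
cd /usr/lib/spark/conf/
cp spark-env.sh.template spark-env.sh
vi spark-env.sh
```

Add the following lines. JAVA_HOME points Spark at the Oracle JDK installed above, and SPARK_WORKER_MEMORY sets the total memory a standalone-mode worker can give to its executors:

```bash
JAVA_HOME=/usr/lib/jvm/java-8-oracle
SPARK_WORKER_MEMORY=4g
```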
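If you would rather not edit spark-env.sh by hand, the two settings can be written idempotently, so running the step twice does not duplicate lines. This is a hypothetical Python helper, not a Spark tool; it assumes simple KEY=value lines like the ones above and leaves commented-out template lines untouched.

```python
import re
from pathlib import Path


def set_env_line(path, key, value):
    """Set KEY=value in a shell env file such as conf/spark-env.sh.

    An existing uncommented `KEY=...` line (optionally prefixed by `export`)
    is replaced in place; otherwise the assignment is appended at the end.
    """
    path = Path(path)
    lines = path.read_text().splitlines() if path.exists() else []
    # Matches "KEY=" or "export KEY=" at line start; "# ..." lines never match.
    pattern = re.compile(rf"^\s*(?:export\s+)?{re.escape(key)}=")
    new_line = f"{key}={value}"
    for i, line in enumerate(lines):
        if pattern.match(line):
            lines[i] = new_line
            break
    else:
        lines.append(new_line)
    path.write_text("\n".join(lines) + "\n")
```

For example, set_env_line("/usr/lib/spark/conf/spark-env.sh", "SPARK_WORKER_MEMORY", "4g") adds the memory setting, and calling it again with "2g" rewrites that same line instead of adding a second one.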

- Configure IPv6

Disable IPv6 by opening /etc/sysctl.conf (sudo vi /etc/sysctl.conf) and adding the following lines:

```
net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv6.conf.lo.disable_ipv6 = 1
```

Apply the settings with sudo sysctl -p, or reboot.
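Once the settings are applied, the kernel exposes each one under /proc/sys/net/ipv6/conf/<interface>/disable_ipv6, where 1 means IPv6 is disabled on that interface. A minimal Python sketch that reads the three interfaces configured above; ipv6_disabled is a hypothetical helper, and conf_root is a parameter only so the function can be pointed at a test directory.

```python
from pathlib import Path

INTERFACES = ("all", "default", "lo")


def ipv6_disabled(conf_root="/proc/sys/net/ipv6/conf"):
    """Return {interface: True/False/None} for the disable_ipv6 sysctl keys.

    True means the file reads "1" (IPv6 disabled), False means any other
    value, and None means the file is missing (e.g. no IPv6 support at all).
    """
    status = {}
    for iface in INTERFACES:
        flag = Path(conf_root) / iface / "disable_ipv6"
        try:
            status[iface] = flag.read_text().strip() == "1"
        except FileNotFoundError:
            status[iface] = None
    return status
```

On a machine where the lines above are active, ipv6_disabled() should report True for all three interfaces.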

- Configure .bashrc

I edited ~/.bashrc in Sublime Text (subl ~/.bashrc) and added the following lines. SBT_HOME points at the sbt launcher directory, so that $SBT_HOME/bin is a real directory on PATH:

```bash
export JAVA_HOME=/usr/lib/jvm/java-8-oracle
export SBT_HOME=/usr/share/sbt-launcher-packaging
export SPARK_HOME=/usr/lib/spark
export PATH=$PATH:$JAVA_HOME/bin
export PATH=$PATH:$SBT_HOME/bin:$SPARK_HOME/bin:$SPARK_HOME/sbin
```
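To confirm that a new terminal picked up these exports, a small check can help. This is a hypothetical Python helper that only tests what the lines above set up: SPARK_HOME and JAVA_HOME exist as directories, and $SPARK_HOME/bin, where pyspark and run-example live, is on PATH.

```python
import os
from pathlib import Path


def check_spark_env(environ=None):
    """Return a list of problems with the Spark-related environment variables.

    Pass a dict to check a hypothetical environment; by default the current
    process environment (os.environ) is inspected.
    """
    env = os.environ if environ is None else environ
    problems = []
    for var in ("SPARK_HOME", "JAVA_HOME"):
        value = env.get(var)
        if not value:
            problems.append(f"{var} is not set")
        elif not Path(value).is_dir():
            problems.append(f"{var}={value} is not a directory")
    spark_home = env.get("SPARK_HOME")
    if spark_home:
        spark_bin = str(Path(spark_home) / "bin")
        # Normalise trailing slashes so "/usr/lib/spark/bin/" also counts.
        path_dirs = [d.rstrip("/") for d in env.get("PATH", "").split(os.pathsep)]
        if spark_bin not in path_dirs:
            problems.append(f"{spark_bin} is not on PATH")
    return problems
```

In a fresh shell (or after source ~/.bashrc), check_spark_env() should return an empty list.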

- Configure fish (optional, but I love the fish shell)

Edit config.fish (subl ~/.config/fish/config.fish) and add the following lines:

```fish
# Credit: http://fishshell.com/docs/current/tutorial.html#tut_startup
set -x PATH $PATH /usr/lib/spark
set -x PATH $PATH /usr/lib/spark/bin
set -x PATH $PATH /usr/lib/spark/sbin
```

- Test Spark (this should work in both fish and bash)

Run pyspark (it lives in /usr/lib/spark/bin/) and try it out. For example:

```
>>> a = 5
>>> b = 3
>>> a+b
8
>>> print("Welcome to Spark")
Welcome to Spark
```

Type Ctrl-D to exit the shell.

Also try one of the bundled examples with run-example org.apache.spark.examples.SparkPi; among the log output it should print a line beginning with "Pi is roughly".
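SparkPi estimates pi by Monte Carlo sampling: it throws random points into a square and counts how many land inside the inscribed circle. Below is a single-machine Python sketch of the same idea, with no Spark involved, just to show what the example computes; estimate_pi is a hypothetical name.

```python
import random


def estimate_pi(num_samples, seed=None):
    """Monte Carlo estimate of pi.

    Points are drawn uniformly from the square [-1, 1] x [-1, 1]; the fraction
    that lands inside the unit circle approaches pi / 4 as num_samples grows.
    """
    rng = random.Random(seed)  # seeded generator makes runs reproducible
    inside = 0
    for _ in range(num_samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / num_samples
```

With 100,000 samples the estimate is typically within about 0.01 of pi. In PySpark the loop would become a distributed count, roughly sc.parallelize(range(n)).filter(lambda _: inside_unit_circle()).count(), where inside_unit_circle is a hypothetical function that samples one point; SparkPi does the equivalent in Scala with a map and reduce over an RDD.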

That's it! You're ready to rock with Apache Spark!

Next, I plan to check out data analysis with R (SparkR), as described in http://www.milanor.net/blog/wp-content/uploads/2016/11/interactiveDataAnalysiswithSparkR_v5.pdf
