吴裕雄 Python Deep Learning and Practice (18)

# coding: utf-8
import time
import pickle

import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt


def unpickle(filename):
    # Load one pickled CIFAR-10 batch file into a dict
    with open(filename, 'rb') as fo:
        data = pickle.load(fo, encoding='latin1')
    return data


def onehot(labels):
    # Convert integer class labels into one-hot rows
    n_sample = len(labels)
    n_class = max(labels) + 1
    onehot_labels = np.zeros((n_sample, n_class))
    onehot_labels[np.arange(n_sample), labels] = 1
    return onehot_labels


# Training dataset

data1 = unpickle('F:\\TensorFlow_deep_learn\\cifar-10-batches-py\\data_batch_1')
data2 = unpickle('F:\\TensorFlow_deep_learn\\cifar-10-batches-py\\data_batch_2')
data3 = unpickle('F:\\TensorFlow_deep_learn\\cifar-10-batches-py\\data_batch_3')
data4 = unpickle('F:\\TensorFlow_deep_learn\\cifar-10-batches-py\\data_batch_4')
data5 = unpickle('F:\\TensorFlow_deep_learn\\cifar-10-batches-py\\data_batch_5')

X_train = np.concatenate((data1['data'], data2['data'], data3['data'], data4['data'], data5['data']), axis=0)
y_train = np.concatenate((data1['labels'], data2['labels'], data3['labels'], data4['labels'], data5['labels']), axis=0)
y_train = onehot(y_train)

# Test dataset (first 5000 samples of the test batch)
test = unpickle('F:\\TensorFlow_deep_learn\\cifar-10-batches-py\\test_batch')
X_test = test['data'][:5000, :]

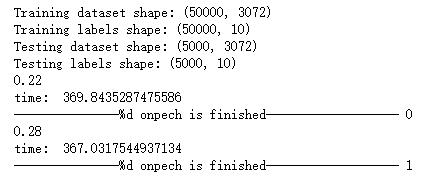

y_test = onehot(test['labels'])[:5000, :]

print('Training dataset shape:', X_train.shape)
print('Training labels shape:', y_train.shape)
print('Testing dataset shape:', X_test.shape)
print('Testing labels shape:', y_test.shape)

with tf.device('/cpu:0'):
    # Model parameters

    learning_rate = 1e-3
    training_iters = 200
    batch_size = 50
    display_step = 5
    n_features = 3072  # 32*32*3
    n_classes = 10
    n_fc1 = 384
    n_fc2 = 192

    # Build the model

    x = tf.placeholder(tf.float32, [None, n_features])
    y = tf.placeholder(tf.float32, [None, n_classes])

    # Weights for the two convolutional and three fully connected layers
    W_conv = {
        'conv1': tf.Variable(tf.truncated_normal([5, 5, 3, 32], stddev=0.0001)),
        'conv2': tf.Variable(tf.truncated_normal([5, 5, 32, 64], stddev=0.01)),
        'fc1': tf.Variable(tf.truncated_normal([8*8*64, n_fc1], stddev=0.1)),
        'fc2': tf.Variable(tf.truncated_normal([n_fc1, n_fc2], stddev=0.1)),
        'fc3': tf.Variable(tf.truncated_normal([n_fc2, n_classes], stddev=0.1))
    }

    # Biases for the same layers
    b_conv = {
        'conv1': tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[32])),
        'conv2': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[64])),
        'fc1': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[n_fc1])),
        'fc2': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[n_fc2])),
        'fc3': tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[n_classes]))
    }

    # CIFAR-10 rows are stored channel-first (1024 R, 1024 G, 1024 B values),
    # so reshape to NCHW first and then transpose to the NHWC layout conv2d expects
    x_image = tf.transpose(tf.reshape(x, [-1, 3, 32, 32]), [0, 2, 3, 1])

    # Convolutional layer 1
    conv1 = tf.nn.conv2d(x_image, W_conv['conv1'], strides=[1, 1, 1, 1], padding='SAME')
    conv1 = tf.nn.bias_add(conv1, b_conv['conv1'])
    conv1 = tf.nn.relu(conv1)

    # Pooling layer 1
    pool1 = tf.nn.avg_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME')

    # LRN layer (Local Response Normalization)
    norm1 = tf.nn.lrn(pool1, 4, bias=1.0, alpha=0.001/9.0, beta=0.75)

    # Convolutional layer 2
    conv2 = tf.nn.conv2d(norm1, W_conv['conv2'], strides=[1, 1, 1, 1], padding='SAME')
    conv2 = tf.nn.bias_add(conv2, b_conv['conv2'])
    conv2 = tf.nn.relu(conv2)

    # LRN layer (Local Response Normalization)
    norm2 = tf.nn.lrn(conv2, 4, bias=1.0, alpha=0.001/9.0, beta=0.75)

    # Pooling layer 2
    pool2 = tf.nn.avg_pool(norm2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME')

    # Flatten the feature maps, then fully connected layer 1
    reshape = tf.reshape(pool2, [-1, 8*8*64])
    fc1 = tf.add(tf.matmul(reshape, W_conv['fc1']), b_conv['fc1'])
    fc1 = tf.nn.relu(fc1)

    # Fully connected layer 2
    fc2 = tf.add(tf.matmul(fc1, W_conv['fc2']), b_conv['fc2'])
    fc2 = tf.nn.relu(fc2)

    # Fully connected layer 3 (classification layer); outputs raw logits
    fc3 = tf.add(tf.matmul(fc2, W_conv['fc3']), b_conv['fc3'])

    # Define the loss. softmax_cross_entropy_with_logits applies softmax
    # internally, so it must receive unnormalized logits
    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=fc3, labels=y))
    optimizer = tf.train.GradientDescentOptimizer(learning_rate=learning_rate).minimize(loss)

    # Evaluate the model
    correct_pred = tf.equal(tf.argmax(fc3, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

    init = tf.global_variables_initializer()

with tf.Session() as sess:

    sess.run(init)
    c = []  # accuracy on the last batch of each epoch
    total_batch = int(X_train.shape[0] / batch_size)
    start_time = time.time()
    for i in range(training_iters):
        for batch in range(total_batch):
            batch_x = X_train[batch*batch_size : (batch+1)*batch_size, :]
            batch_y = y_train[batch*batch_size : (batch+1)*batch_size, :]
            sess.run(optimizer, feed_dict={x: batch_x, y: batch_y})
        acc = sess.run(accuracy, feed_dict={x: batch_x, y: batch_y})
        print(acc)
        c.append(acc)
        end_time = time.time()
        print('time: ', (end_time - start_time))
        start_time = end_time
        print("---------------%d epoch is finished-------------------" % i)
    print("Optimization Finished!")

    # Test

    test_acc = sess.run(accuracy, feed_dict={x: X_test, y: y_test})
    print("Testing Accuracy:", test_acc)

    # c holds accuracy values, so label the y axis accordingly
    plt.plot(c)
    plt.xlabel('Iter')
    plt.ylabel('Accuracy')
    plt.title('lr=%f, ti=%d, bs=%d, acc=%f' % (learning_rate, training_iters, batch_size, test_acc))
    plt.tight_layout()
    plt.savefig('F:\\cnn-tf-cifar10-%s.png' % test_acc, dpi=200)
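
The hard-coded `8*8*64` used when flattening `pool2` follows from the strides in the network: with `padding='SAME'`, the output size along each spatial dimension is ceil(input / stride), regardless of kernel size. Below is a minimal sketch of that arithmetic in plain Python. The helper name `same_output_size` is hypothetical and introduced only for illustration; it is not part of TensorFlow.

```python
import math


def same_output_size(size, stride):
    # Spatial output size of a conv/pool op with padding='SAME':
    # ceil(size / stride), independent of the kernel size.
    return math.ceil(size / stride)


side = 32                          # CIFAR-10 images are 32x32
side = same_output_size(side, 1)   # conv1, stride 1
side = same_output_size(side, 2)   # pool1, stride 2
side = same_output_size(side, 1)   # conv2, stride 1
side = same_output_size(side, 2)   # pool2, stride 2

channels = 64                      # output channels of conv2
flat_features = side * side * channels
print(side, flat_features)
```

If the strides or the number of pooling layers change, the first dimension of the `fc1` weight matrix has to change with them, so computing it this way is less error-prone than hard-coding the product.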
