吴裕雄 Python Machine Learning: KNN Algorithm (1)

import numpy as np

import operator as op

from os import listdir def classify0(inX, dataSet, labels, k):

dataSetSize = dataSet.shape[0]

diffMat = np.tile(inX, (dataSetSize,1)) - dataSet

sqDiffMat = diffMat**2

sqDistances = sqDiffMat.sum(axis=1)

distances = sqDistances**0.5

sortedDistIndicies = distances.argsort()

classCount={}

for i in range(k):

voteIlabel = labels[sortedDistIndicies[i]]

classCount[voteIlabel] = classCount.get(voteIlabel,0) + 1

sortedClassCount = sorted(classCount.items(), key=op.itemgetter(1), reverse=True)

return sortedClassCount[0][0] def createDataSet():

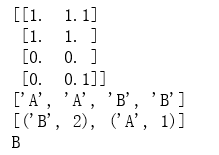

group = np.array([[1.0,1.1],[1.0,1.0],[0,0],[0,0.1]])

labels = ['A','A','B','B']

return group, labels data,labels = createDataSet()

print(data)

print(labels) test = np.array([[0,0.5]])

result = classify0(test,data,labels,3)

print(result)
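The steps in `classify0` (distance, sort, vote) can also be written with NumPy broadcasting and `collections.Counter`. The following is a minimal sketch, not the author's code: the name `knn_classify` is hypothetical, and it assumes the same toy data set as `createDataSet`.

```python
from collections import Counter

import numpy as np


def knn_classify(query, train_x, train_y, k):
    """Return the majority label among the k training rows nearest to query."""
    # Broadcasting subtracts the query from every training row (no np.tile needed).
    diffs = np.asarray(train_x, dtype=float) - np.asarray(query, dtype=float)
    distances = np.sqrt((diffs ** 2).sum(axis=1))
    # Indices of the k smallest distances.
    nearest = np.argsort(distances)[:k]
    # Majority vote over the labels of those rows.
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]


group = np.array([[1.0, 1.1], [1.0, 1.0], [0.0, 0.0], [0.0, 0.1]])
labels = ['A', 'A', 'B', 'B']
prediction = knn_classify([0, 0.5], group, labels, k=3)
print(prediction)  # B
```

For the query `[0, 0.5]` the three nearest rows are `[0, 0.1]`, `[0, 0]` and `[1.0, 1.0]`, so the vote is two `B` against one `A`.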

import numpy as np

import operator as op

from os import listdir def classify0(inX, dataSet, labels, k):

dataSetSize = dataSet.shape[0]

diffMat = np.tile(inX, (dataSetSize,1)) - dataSet

sqDiffMat = diffMat**2

sqDistances = sqDiffMat.sum(axis=1)

distances = sqDistances**0.5

sortedDistIndicies = distances.argsort()

classCount={}

for i in range(k):

voteIlabel = labels[sortedDistIndicies[i]]

classCount[voteIlabel] = classCount.get(voteIlabel,0) + 1

sortedClassCount = sorted(classCount.items(), key=op.itemgetter(1), reverse=True)

return sortedClassCount[0][0] def file2matrix(filename):

fr = open(filename)

returnMat = []

classLabelVector = [] #prepare labels return

for line in fr.readlines():

line = line.strip()

listFromLine = line.split('\t')

returnMat.append([float(listFromLine[0]),float(listFromLine[1]),float(listFromLine[2])])

classLabelVector.append(int(listFromLine[-1]))

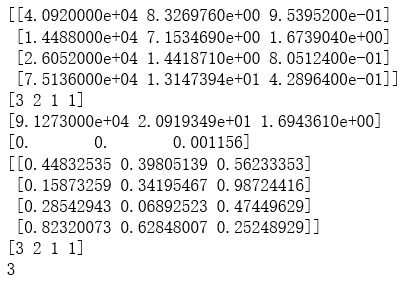

return np.array(returnMat),np.array(classLabelVector) trainData,trainLabel = file2matrix("D:\\LearningResource\\machinelearninginaction\\Ch02\\datingTestSet2.txt")

print(trainData[0:4])

print(trainLabel[0:4]) def autoNorm(dataSet):

minVals = dataSet.min(0)

maxVals = dataSet.max(0)

ranges = maxVals - minVals

normDataSet = np.zeros(np.shape(dataSet))

m = dataSet.shape[0]

normDataSet = dataSet - np.tile(minVals, (m,1))

normDataSet = normDataSet/np.tile(ranges, (m,1)) #element wise divide

return normDataSet, ranges, minVals normDataSet, ranges, minVals = autoNorm(trainData)

print(ranges)

print(minVals)

print(normDataSet[0:4])

print(trainLabel[0:4]) testData = np.array([[0.5,0.3,0.5]])

result = classify0(testData, normDataSet, trainLabel, 5)

print(result)
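`autoNorm` applies min-max scaling, `(x - min) / (max - min)`, column by column. Here is a small worked sketch of that formula using broadcasting instead of `np.tile`. The function name `min_max_scale`, the zero-range guard, and the three sample rows are assumptions for illustration and are not taken from `datingTestSet2.txt`.

```python
import numpy as np


def min_max_scale(data):
    """Scale each column of data to [0, 1]; also return the ranges and minimums."""
    data = np.asarray(data, dtype=float)
    min_vals = data.min(axis=0)
    ranges = data.max(axis=0) - min_vals
    # Assumption: a constant column is divided by 1 instead of 0.
    safe_ranges = np.where(ranges == 0, 1.0, ranges)
    return (data - min_vals) / safe_ranges, ranges, min_vals


# Made-up rows with very different column scales.
sample = np.array([[40000.0, 8.0, 1.0],
                   [10000.0, 2.0, 0.5],
                   [25000.0, 5.0, 0.0]])
scaled, ranges, min_vals = min_max_scale(sample)
print(ranges)    # column ranges: 30000, 6 and 1
print(min_vals)  # column minimums: 10000, 2 and 0
print(scaled)
```

Without this step the first column would dominate the Euclidean distance, because its values are thousands of times larger than the others.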

import numpy as np

import operator as op

from os import listdir def classify0(inX, dataSet, labels, k):

dataSetSize = dataSet.shape[0]

diffMat = np.tile(inX, (dataSetSize,1)) - dataSet

sqDiffMat = diffMat**2

sqDistances = sqDiffMat.sum(axis=1)

distances = sqDistances**0.5

sortedDistIndicies = distances.argsort()

classCount={}

for i in range(k):

voteIlabel = labels[sortedDistIndicies[i]]

classCount[voteIlabel] = classCount.get(voteIlabel,0) + 1

sortedClassCount = sorted(classCount.items(), key=op.itemgetter(1), reverse=True)

return sortedClassCount[0][0] def file2matrix(filename):

fr = open(filename)

returnMat = []

classLabelVector = [] #prepare labels return

for line in fr.readlines():

line = line.strip()

listFromLine = line.split('\t')

returnMat.append([float(listFromLine[0]),float(listFromLine[1]),float(listFromLine[2])])

classLabelVector.append(listFromLine[-1])

return np.array(returnMat),np.array(classLabelVector) def autoNorm(dataSet):

minVals = dataSet.min(0)

maxVals = dataSet.max(0)

ranges = maxVals - minVals

normDataSet = np.zeros(np.shape(dataSet))

m = dataSet.shape[0]

normDataSet = dataSet - np.tile(minVals, (m,1))

normDataSet = normDataSet/np.tile(ranges, (m,1)) #element wise divide

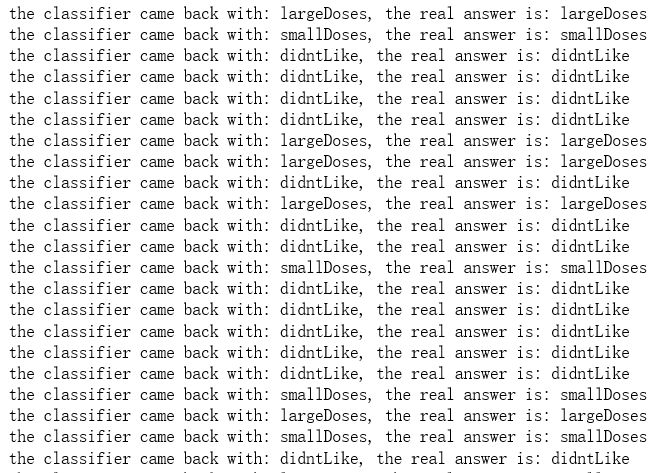

return normDataSet, ranges, minVals normDataSet, ranges, minVals = autoNorm(trainData) def datingClassTest():

hoRatio = 0.10 #hold out 10%

datingDataMat,datingLabels = file2matrix("D:\\LearningResource\\machinelearninginaction\\Ch02\\datingTestSet.txt")

normMat, ranges, minVals = autoNorm(datingDataMat)

m = normMat.shape[0]

numTestVecs = int(m*hoRatio)

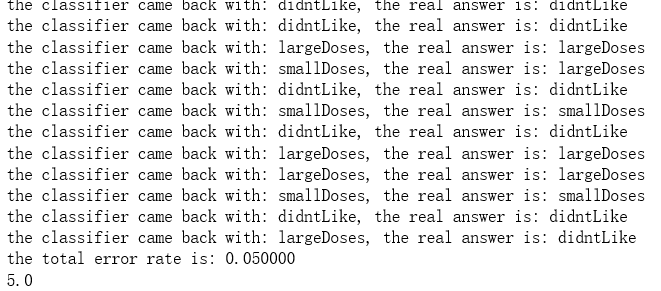

errorCount = 0.0

for i in range(numTestVecs):

classifierResult = classify0(normMat[i,:],normMat[numTestVecs:m,:],datingLabels[numTestVecs:m],3)

print(('the classifier came back with: %s, the real answer is: %s') % (classifierResult, datingLabels[i]))

if (classifierResult != datingLabels[i]):

errorCount += 1.0

print(('the total error rate is: %f') % (errorCount/float(numTestVecs)))

print(errorCount) datingClassTest()
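`datingClassTest` holds out the first 10% of the rows as a test set and classifies each of them against the remaining 90%. The sketch below shows the same hold-out procedure on synthetic data, since the dating file is not included here. The helper names, the two Gaussian clusters and the labels `classA`/`classB` are all assumptions for illustration.

```python
from collections import Counter

import numpy as np


def knn_predict(query, train_x, train_y, k=3):
    """Majority label among the k training rows nearest to query."""
    distances = np.sqrt(((train_x - query) ** 2).sum(axis=1))
    nearest = np.argsort(distances)[:k]
    return Counter(train_y[nearest].tolist()).most_common(1)[0][0]


def holdout_error_rate(features, labels, ho_ratio=0.10, k=3):
    """Test on the first ho_ratio of the rows, train on the rest."""
    num_test = int(len(features) * ho_ratio)
    errors = 0
    for i in range(num_test):
        predicted = knn_predict(features[i], features[num_test:], labels[num_test:], k)
        if predicted != labels[i]:
            errors += 1
    return errors / num_test


# Synthetic data: two well-separated clusters, 50 points each.
rng = np.random.default_rng(0)
features = np.vstack([rng.normal(0.0, 0.5, size=(50, 2)),
                      rng.normal(6.0, 0.5, size=(50, 2))])
labels = np.array(['classA'] * 50 + ['classB'] * 50)

# Shuffle so the held-out rows contain both classes.
order = rng.permutation(len(features))
features, labels = features[order], labels[order]

error_rate = holdout_error_rate(features, labels)
print("hold-out error rate: %f" % error_rate)
```

Taking the first rows as the test set only gives a fair estimate when the rows are in random order, which is why the synthetic data is shuffled first.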


import numpy as np

import operator as op

from os import listdir def classify0(inX, dataSet, labels, k):

dataSetSize = dataSet.shape[0]

diffMat = np.tile(inX, (dataSetSize,1)) - dataSet

sqDiffMat = diffMat**2

sqDistances = sqDiffMat.sum(axis=1)

distances = sqDistances**0.5

sortedDistIndicies = distances.argsort()

classCount={}

for i in range(k):

voteIlabel = labels[sortedDistIndicies[i]]

classCount[voteIlabel] = classCount.get(voteIlabel,0) + 1

sortedClassCount = sorted(classCount.items(), key=op.itemgetter(1), reverse=True)

return sortedClassCount[0][0] def file2matrix(filename):

fr = open(filename)

returnMat = []

classLabelVector = [] #prepare labels return

for line in fr.readlines():

line = line.strip()

listFromLine = line.split('\t')

returnMat.append([float(listFromLine[0]),float(listFromLine[1]),float(listFromLine[2])])

classLabelVector.append(int(listFromLine[-1]))

return np.array(returnMat),np.array(classLabelVector) def autoNorm(dataSet):

minVals = dataSet.min(0)

maxVals = dataSet.max(0)

ranges = maxVals - minVals

normDataSet = np.zeros(np.shape(dataSet))

m = dataSet.shape[0]

normDataSet = dataSet - np.tile(minVals, (m,1))

normDataSet = normDataSet/np.tile(ranges, (m,1)) #element wise divide

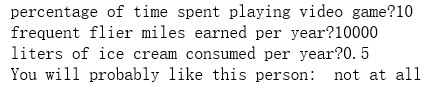

return normDataSet, ranges, minVals def classifyPerson():

resultList = ["not at all", "in samll doses", "in large doses"]

percentTats = float(input("percentage of time spent playing video game?"))

ffMiles = float(input("frequent flier miles earned per year?"))

iceCream = float(input("liters of ice cream consumed per year?"))

testData = np.array([percentTats,ffMiles,iceCream])

trainData,trainLabel = file2matrix("D:\\LearningResource\\machinelearninginaction\\Ch02\\datingTestSet2.txt")

normDataSet, ranges, minVals = autoNorm(trainData)

result = classify0((testData-minVals)/ranges, normDataSet, trainLabel, 3)

print("You will probably like this person: ",resultList[result-1]) classifyPerson()
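`classifyPerson` scales the new sample with the minimums and ranges computed from the training data, then maps the 1-based class label to a description. The sketch below walks through those two steps with three made-up training rows and a single nearest neighbour in place of `classify0`. All values and the snake_case names are assumptions for illustration.

```python
import numpy as np

result_list = ["not at all", "in small doses", "in large doses"]

# Made-up training rows: flier miles, video game time (%), ice cream (liters).
train = np.array([[40000.0, 8.0, 1.0],
                  [10000.0, 2.0, 0.5],
                  [25000.0, 5.0, 0.0]])
train_labels = np.array([3, 1, 2])  # 1-based class labels

# Scaling parameters come from the training data only.
min_vals = train.min(axis=0)
ranges = train.max(axis=0) - min_vals
norm_train = (train - min_vals) / ranges

# The query is scaled with the same parameters, in the same column order.
query = np.array([38000.0, 7.5, 0.9])
norm_query = (query - min_vals) / ranges

# Single nearest neighbour, to keep the sketch short.
distances = np.sqrt(((norm_train - norm_query) ** 2).sum(axis=1))
nearest_label = train_labels[np.argmin(distances)]
description = result_list[nearest_label - 1]
print("You will probably like this person:", description)
```

If the query were left unscaled, or its columns were in a different order from the training columns, the distances would compare unrelated quantities.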


import numpy as np

import operator as op

from os import listdir def classify0(inX, dataSet, labels, k):

dataSetSize = dataSet.shape[0]

diffMat = np.tile(inX, (dataSetSize,1)) - dataSet

sqDiffMat = diffMat**2

sqDistances = sqDiffMat.sum(axis=1)

distances = sqDistances**0.5

sortedDistIndicies = distances.argsort()

classCount={}

for i in range(k):

voteIlabel = labels[sortedDistIndicies[i]]

classCount[voteIlabel] = classCount.get(voteIlabel,0) + 1

sortedClassCount = sorted(classCount.items(), key=op.itemgetter(1), reverse=True)

return sortedClassCount[0][0] def img2vector(filename):

returnVect = []

fr = open(filename)

for i in range(32):

lineStr = fr.readline()

for j in range(32):

returnVect.append(int(lineStr[j]))

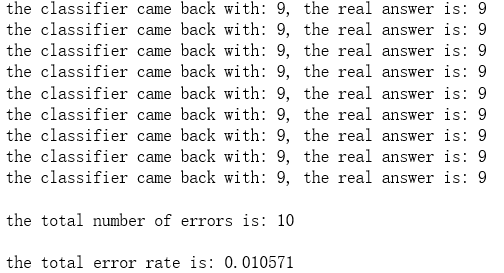

return np.array([returnVect]) def handwritingClassTest():

hwLabels = []

trainingFileList = listdir('D:\\LearningResource\\machinelearninginaction\\Ch02\\trainingDigits') #load the training set

m = len(trainingFileList)

trainingMat = np.zeros((m,1024))

for i in range(m):

fileNameStr = trainingFileList[i]

fileStr = fileNameStr.split('.')[0] #take off .txt

classNumStr = int(fileStr.split('_')[0])

hwLabels.append(classNumStr)

trainingMat[i,:] = img2vector('D:\\LearningResource\\machinelearninginaction\\Ch02\\trainingDigits\\%s' % fileNameStr)

testFileList = listdir('D:\\LearningResource\\machinelearninginaction\\Ch02\\testDigits') #iterate through the test set

mTest = len(testFileList)

errorCount = 0.0

for i in range(mTest):

fileNameStr = testFileList[i]

fileStr = fileNameStr.split('.')[0] #take off .txt

classNumStr = int(fileStr.split('_')[0])

vectorUnderTest = img2vector('D:\\LearningResource\\machinelearninginaction\\Ch02\\testDigits\\%s' % fileNameStr)

classifierResult = classify0(vectorUnderTest, trainingMat, hwLabels, 3)

print("the classifier came back with: %d, the real answer is: %d" % (classifierResult, classNumStr))

if (classifierResult != classNumStr):

errorCount += 1.0

print("\nthe total number of errors is: %d" % errorCount)

print("\nthe total error rate is: %f" % (errorCount/float(mTest))) handwritingClassTest()
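`img2vector` reads 32 lines of 32 characters and flattens them into one 1x1024 row, and `handwritingClassTest` takes the class label from the part of the file name before the underscore. The following sketch shows both steps on an in-memory image so it runs without the digit folders. The helper names, the fake image and the file name `9_45.txt` are hypothetical; the file name only follows the pattern that the `split` calls above imply.

```python
import io

import numpy as np


def text_image_to_vector(handle, size=32):
    """Read size lines of size 0/1 characters and return a 1 x (size*size) array."""
    rows = [[int(ch) for ch in handle.readline()[:size]] for _ in range(size)]
    return np.array(rows).reshape(1, size * size)


def label_from_filename(name):
    """Take the digit before the underscore, e.g. 9 from a name like 9_45.txt."""
    return int(name.split('.')[0].split('_')[0])


# Fake 32x32 image: a vertical bar of ones, 8 columns wide, on every row.
row = "0" * 12 + "1" * 8 + "0" * 12
fake_image = io.StringIO("\n".join([row] * 32) + "\n")

vector = text_image_to_vector(fake_image)
print(vector.shape)                     # (1, 1024)
print(int(vector.sum()))                # 256
print(label_from_filename("9_45.txt"))  # 9
```

Each flattened image is then just one point in a 1024-dimensional space, which is why the same `classify0` distance vote can be reused for digits.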
