吴裕雄 Python Neural Networks: Flower Image Recognition (9)

import os

import numpy as np

import matplotlib.pyplot as plt

from PIL import Image, ImageChops

from skimage import color,data,transform,io

# Get the names of all data folders (one folder per flower class)

fileList = os.listdir("F:\\data\\flowers")

trainDataList = []

trianLabel = []

testDataList = []

testLabel = []

for j in range(len(fileList)):
    data = os.listdir("F:\\data\\flowers\\"+fileList[j])
    # Hold out 25% of the files in each class as test data
    testNum = int(len(data)*0.25)
    # A single shuffle is enough to randomize the split
    np.random.shuffle(data)
    trainData = np.array(data[:-testNum])
    testData = np.array(data[-testNum:])
    for i in range(len(trainData)):
        if(trainData[i][-3:]=="jpg"):
            image = io.imread("F:\\data\\flowers\\"+fileList[j]+"\\"+trainData[i])
            image=transform.resize(image,(64,64))
            trainDataList.append(image)
            trianLabel.append(int(j))
            # Augmentation: also store a copy rotated by a random angle
            angle = np.random.randint(-90,90)
            image =transform.rotate(image, angle)
            image=transform.resize(image,(64,64))
            trainDataList.append(image)
            trianLabel.append(int(j))
    for i in range(len(testData)):
        if(testData[i][-3:]=="jpg"):
            image = io.imread("F:\\data\\flowers\\"+fileList[j]+"\\"+testData[i])
            image=transform.resize(image,(64,64))
            testDataList.append(image)
            testLabel.append(int(j))

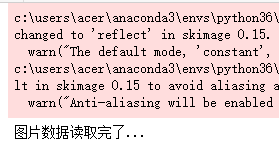

print("Finished reading image data...")

print(np.shape(trainDataList))

print(np.shape(trianLabel))

print(np.shape(testDataList))

print(np.shape(testLabel))

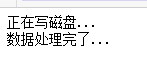

print("Writing to disk...")

np.save("G:\\trainDataList",trainDataList)

np.save("G:\\trianLabel",trianLabel)

np.save("G:\\testDataList",testDataList)

np.save("G:\\testLabel",testLabel)

print("Data processing finished...")
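The per-class 75/25 split in the script above can be isolated into a small helper. The following is a minimal sketch rather than the author's code: `split_file_names` and its `seed` parameter are hypothetical names introduced for illustration, and only the standard library is used.

```python
import random

def split_file_names(names, test_fraction=0.25, seed=None):
    """Shuffle a copy of `names` and return (train_names, test_names)."""
    rng = random.Random(seed)
    shuffled = list(names)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    # Slice from the front so that n_test == 0 keeps every file in the training set
    return shuffled[n_test:], shuffled[:n_test]

train_names, test_names = split_file_names(["img%d.jpg" % i for i in range(8)], seed=0)
print(len(train_names), len(test_names))  # 6 2
```

Slicing from the front avoids an edge case of negative slicing: when a folder holds fewer than four files, `int(len(data)*0.25)` is 0 and `data[:-0]` is an empty list, so the training set for that class would be empty.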

import numpy as np

from keras.utils import to_categorical

trainLabel = np.load("G:\\trianLabel.npy")

testLabel = np.load("G:\\testLabel.npy")

trainLabel_encoded = to_categorical(trainLabel)

testLabel_encoded = to_categorical(testLabel)

np.save("G:\\trianLabel",trainLabel_encoded)

np.save("G:\\testLabel",testLabel_encoded)

print("One-hot encoded labels written to disk...")

import random

import numpy as np

trainDataList = np.load("G:\\trainDataList.npy")

trianLabel = np.load("G:\\trianLabel.npy")

print("Data loaded...")

trainIndex = [i for i in range(len(trianLabel))]

random.shuffle(trainIndex)

trainData = []

trainClass = []

# Reorder samples and labels with the same shuffled index list
for i in range(len(trainIndex)):
    trainData.append(trainDataList[trainIndex[i]])
    trainClass.append(trianLabel[trainIndex[i]])

print("Training data shuffled...")

np.save("G:\\trainDataList",trainData)

np.save("G:\\trianLabel",trainClass)

print("Training data written to disk...")

import random

import numpy as np

testDataList = np.load("G:\\testDataList.npy")

testLabel = np.load("G:\\testLabel.npy")

testIndex = [i for i in range(len(testLabel))]

random.shuffle(testIndex)

testData = []

testClass = []

for i in range(len(testIndex)):

testData.append(testDataList[testIndex[i]])

testClass.append(testLabel[testIndex[i]])

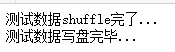

print("测试数据shuffle完了...")

np.save("G:\\testDataList",testData)

np.save("G:\\testLabel",testClass)

print("Test data written to disk...")
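The two shuffle scripts above follow the same pattern: shuffle a list of indices, then reorder samples and labels with it so each sample keeps its label. A minimal sketch of that pattern as one reusable function is shown here; `shuffle_together` and `seed` are hypothetical names, not part of the original scripts.

```python
import random

def shuffle_together(samples, labels, seed=None):
    """Return samples and labels reordered by one shared random permutation."""
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")
    order = list(range(len(samples)))
    random.Random(seed).shuffle(order)
    return [samples[k] for k in order], [labels[k] for k in order]

images = ["rose_a", "rose_b", "tulip_a", "daisy_a"]
classes = [0, 0, 1, 2]
shuffled_images, shuffled_classes = shuffle_together(images, classes, seed=42)

# Every image is still paired with its original class
print(set(zip(shuffled_images, shuffled_classes)) == set(zip(images, classes)))  # True
```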

# coding: utf-8

import tensorflow as tf

from random import shuffle

INPUT_NODE = 64*64

OUT_NODE = 5

IMAGE_SIZE = 64

NUM_CHANNELS = 3

NUM_LABELS = 5

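# Filter size and depth of the first convolutional layer
CONV1_DEEP = 16
CONV1_SIZE = 5

# Filter size and depth of the second convolutional layer
CONV2_DEEP = 32
CONV2_SIZE = 5

# Number of nodes in the first fully connected layer
FC_SIZE = 512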

def inference(input_tensor, train, regularizer):
    # Convolution layer 1
    with tf.variable_scope('layer1-conv1'):
        conv1_weights = tf.Variable(tf.random_normal([CONV1_SIZE,CONV1_SIZE,NUM_CHANNELS,CONV1_DEEP],stddev=0.1),name='weight')
        tf.summary.histogram('convLayer1/weights1', conv1_weights)
        conv1_biases = tf.Variable(tf.random_normal([CONV1_DEEP]),name="bias")
        tf.summary.histogram('convLayer1/bias1', conv1_biases)
        conv1 = tf.nn.conv2d(input_tensor,conv1_weights,strides=[1,1,1,1],padding='SAME')
        tf.summary.histogram('convLayer1/conv1', conv1)
        relu1 = tf.nn.relu(tf.nn.bias_add(conv1,conv1_biases))
        tf.summary.histogram('ConvLayer1/relu1', relu1)

    # Pooling layer 1
    with tf.variable_scope('layer2-pool1'):
        pool1 = tf.nn.max_pool(relu1,ksize=[1,2,2,1],strides=[1,2,2,1],padding='SAME')
        tf.summary.histogram('ConvLayer1/pool1', pool1)

    # Convolution layer 2
    with tf.variable_scope('layer3-conv2'):
        conv2_weights = tf.Variable(tf.random_normal([CONV2_SIZE,CONV2_SIZE,CONV1_DEEP,CONV2_DEEP],stddev=0.1),name='weight')
        tf.summary.histogram('convLayer2/weights2', conv2_weights)
        conv2_biases = tf.Variable(tf.random_normal([CONV2_DEEP]),name="bias")
        tf.summary.histogram('convLayer2/bias2', conv2_biases)
        # Forward pass of the convolution
        conv2 = tf.nn.conv2d(pool1,conv2_weights,strides=[1,1,1,1],padding='SAME')
        tf.summary.histogram('convLayer2/conv2', conv2)
        relu2 = tf.nn.relu(tf.nn.bias_add(conv2,conv2_biases))
        tf.summary.histogram('ConvLayer2/relu2', relu2)

    # Pooling layer 2
    with tf.variable_scope('layer4-pool2'):
        pool2 = tf.nn.max_pool(relu2,ksize=[1,2,2,1],strides=[1,2,2,1],padding='SAME')
        tf.summary.histogram('ConvLayer2/pool2', pool2)

    # Reshape
    pool_shape = pool2.get_shape().as_list()
    # Number of values per example after the last pooling layer (height * width * depth)
    nodes = pool_shape[1]*pool_shape[2]*pool_shape[3]
    # Flatten pool2 into a matrix with one row per example and `nodes` columns
    reshaped = tf.reshape(pool2,[-1,nodes])

    # Fully connected layer 1
    with tf.variable_scope('layer5-fc1'):
        fc1_weights = tf.Variable(tf.random_normal([nodes,FC_SIZE],stddev=0.1),name='weight')
        # `regularizer` is only used as a flag; the L2 term goes into the 'losses' collection
        if regularizer is not None:
            tf.add_to_collection('losses',tf.contrib.layers.l2_regularizer(0.03)(fc1_weights))
        fc1_biases = tf.Variable(tf.random_normal([FC_SIZE]),name="bias")
        # Forward pass
        fc1 = tf.nn.relu(tf.matmul(reshaped,fc1_weights)+fc1_biases)
        if(train):
            fc1 = tf.nn.dropout(fc1,0.5)

    # Fully connected layer 2
    with tf.variable_scope('layer6-fc2'):
        fc2_weights = tf.Variable(tf.random_normal([FC_SIZE,64],stddev=0.1),name="weight")
        if regularizer is not None:
            tf.add_to_collection('losses',tf.contrib.layers.l2_regularizer(0.03)(fc2_weights))
        fc2_biases = tf.Variable(tf.random_normal([64]),name="bias")
        # Forward pass
        fc2 = tf.nn.relu(tf.matmul(fc1,fc2_weights)+fc2_biases)
        if(train):
            fc2 = tf.nn.dropout(fc2,0.5)

    # Fully connected layer 3 (output layer)
    with tf.variable_scope('layer7-fc3'):
        fc3_weights = tf.Variable(tf.random_normal([64,NUM_LABELS],stddev=0.1),name="weight")
        if regularizer is not None:
            tf.add_to_collection('losses',tf.contrib.layers.l2_regularizer(0.03)(fc3_weights))
        fc3_biases = tf.Variable(tf.random_normal([NUM_LABELS]),name="bias")
        # Output logits (softmax is applied in the loss)
        logit = tf.matmul(fc2,fc3_weights)+fc3_biases

    return logit

import time

import keras

import numpy as np

from keras.utils import np_utils

X = np.load("G:\\trainDataList.npy")

Y = np.load("G:\\trianLabel.npy")

print(np.shape(X))

print(np.shape(Y))

print(np.shape(testData))

print(np.shape(testLabel))

batch_size = 10

n_classes=5

epochs=16  # number of training epochs

learning_rate=1e-4

batch_num=int(np.shape(X)[0]/batch_size)

dropout=0.75

x=tf.placeholder(tf.float32,[None,64,64,3])

y=tf.placeholder(tf.float32,[None,n_classes])

# keep_prob = tf.placeholder(tf.float32)

# Load the test data set

test_X = np.load("G:\\testDataList.npy")

test_Y = np.load("G:\\testLabel.npy")

back = 64

ro = int(len(test_X)/back)

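# Build the network graph
# Note: train=1 builds the dropout ops into the graph, so dropout is also active during testing
pred=inference(x,1,"regularizer")

# Note: the L2 terms stored in the 'losses' collection are not added to this cost
cost=tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred,labels=y))

# Pick one of the three optimizers below
optimizer=tf.train.AdamOptimizer(1e-4).minimize(cost)
# train_step = tf.train.GradientDescentOptimizer(0.001).minimize(cost)
# train_step = tf.train.MomentumOptimizer(0.001,0.9).minimize(cost)

# Compare the predicted labels with the true labels
correct_pred=tf.equal(tf.argmax(pred,1),tf.argmax(y,1))

# Compute the accuracy
accuracy=tf.reduce_mean(tf.cast(correct_pred,tf.float32))

merged=tf.summary.merge_all()

# Op that initializes all TensorFlow variables
init=tf.global_variables_initializer()

start_time = time.time()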

with tf.Session() as sess:
    sess.run(init)
    # Write the graph and summaries for TensorBoard
    writer=tf.summary.FileWriter('F:/Flower_graph', sess.graph)
    for i in range(epochs):
        for j in range(batch_num):
            offset = (j * batch_size) % (Y.shape[0] - batch_size)
            # Prepare the batch
            batch_data = X[offset:(offset + batch_size), :]
            batch_labels = Y[offset:(offset + batch_size), :]
            sess.run(optimizer, feed_dict={x:batch_data,y:batch_labels})
        # Log summaries and report loss/accuracy on the last batch of the epoch
        result=sess.run(merged, feed_dict={x:batch_data,y:batch_labels})
        writer.add_summary(result, i)
        loss,acc = sess.run([cost,accuracy],feed_dict={x:batch_data,y:batch_labels})
        print("Epoch:", '%04d' % (i+1),"cost=", "{:.9f}".format(loss),"Training accuracy","{:.5f}".format(acc*100))
    writer.close()

    print("######################## Training finished, starting test ###################")
    test_accuracies = []
    for i in range(ro):
        s = i*back
        e = s+back
        test_accuracy = sess.run(accuracy,feed_dict={x:test_X[s:e],y:test_Y[s:e]})
        test_accuracies.append(test_accuracy)
        print("step:%d test accuracy = %.4f%%" % (i,test_accuracy*100))
    # Report the mean over all test batches rather than only the last batch
    print("Final test accuracy = %.4f%%" % (np.mean(test_accuracies)*100))

end_time = time.time()
print('Times:',(end_time-start_time))
print('Optimization Completed')
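The test loop above evaluates only full batches of `back` samples, so the last `len(test_X) % back` samples are never scored. One possible way to cover them is to include the shorter final batch and weight each batch accuracy by its size. The sketch below shows only the batching arithmetic in plain Python; `batch_slices` and `weighted_mean` are hypothetical helper names, and the accuracy values are made up to demonstrate the calculation.

```python
def batch_slices(n_samples, batch_size):
    """Yield (start, end) index pairs that cover all samples, including a short last batch."""
    for start in range(0, n_samples, batch_size):
        yield start, min(start + batch_size, n_samples)

def weighted_mean(values, weights):
    """Mean of `values` where each value counts in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

slices = list(batch_slices(150, 64))
print(slices)  # [(0, 64), (64, 128), (128, 150)]

# Illustrative per-batch accuracies (not real results), weighted by batch size
sizes = [end - start for start, end in slices]
overall = weighted_mean([0.75, 0.5, 1.0], sizes)
print(round(overall, 4))
```

In the session loop, each `(start, end)` pair would replace `s` and `e`, and the accuracy returned for each batch would be collected together with `end - start`.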

........................................

import os

import numpy as np

from scipy import ndimage

from skimage import color,data,transform,io

move=np.arange(-3,3,1)

moveIndex = np.random.randint(len(move))

lightStrong=np.arange(0.01,3,0.1)

lightStrongIndex = np.random.randint(len(lightStrong))

moveImage=ndimage.shift(transImageGray,move[moveIndex],cval=lightStrong[lightStrongIndex])

moveImage[moveImage>1.0]=1.0

from numpy import array

from numpy import argmax

from keras.utils import to_categorical

from sklearn.preprocessing import LabelEncoder

# One-hot encode integer values

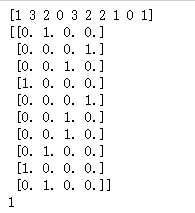

data=[1, 3, 2, 0, 3, 2, 2, 1, 0, 1]

data=array(data)

print(data)

encoded = to_categorical(data)

print(encoded)

inverted = argmax(encoded[0])

print(inverted)

import numpy as np

from numpy import argmax

data = 'hello world'

print(len(data))

alphabet = 'abcdefghijklmnopqrstuvwxyz '

char_to_int = dict((c, i) for i, c in enumerate(alphabet))

print(char_to_int)

int_to_char = dict((i, c) for i, c in enumerate(alphabet))

print(int_to_char)

integer_encoded = [char_to_int[char] for char in data]

print(integer_encoded)

onehot_encoded = list()

for value in integer_encoded:
    letter = [0 for _ in range(len(alphabet))]
    letter[value] = 1
    onehot_encoded.append(letter)

print(np.shape(onehot_encoded))

print(onehot_encoded)

inverted = int_to_char[argmax(onehot_encoded[0])]

print(inverted)

from numpy import array

from numpy import argmax

from sklearn.preprocessing import LabelEncoder

from sklearn.preprocessing import OneHotEncoder

data = ['cold', 'cold', 'warm', 'cold', 'hot', 'hot', 'warm', 'cold', 'warm', 'hot']

values = array(data)

print(values)

label_encoder = LabelEncoder()

integer_encoded = label_encoder.fit_transform(values)

print(integer_encoded)

integer_encoded = integer_encoded.reshape(len(integer_encoded), 1)

print(integer_encoded)

onehot_encoder = OneHotEncoder(sparse=False)  # scikit-learn >= 1.2 renames this argument to sparse_output

onehot_encoded = onehot_encoder.fit_transform(integer_encoded)

print(onehot_encoded)

inverted = label_encoder.inverse_transform([argmax(onehot_encoded[0, :])])

print(inverted)

from numpy import array

from numpy import argmax

from keras.utils import to_categorical

from sklearn.preprocessing import LabelEncoder

data = ['cold', 'cold', 'warm', 'cold', 'hot', 'hot', 'warm', 'cold', 'warm', 'hot']

values = array(data)

print(values)

label_encoder = LabelEncoder()

integer_encoded = label_encoder.fit_transform(values)

print(integer_encoded)

## Integer values

#data=[1, 3, 2, 0, 3, 2, 2, 1, 0, 1]

#data=array(data)

#print(data)

# one hot encode

encoded = to_categorical(integer_encoded)

print(encoded)

inverted = argmax(encoded[0])

print(inverted)

import os

import numpy as np

import matplotlib.pyplot as plt

from scipy import ndimage

from skimage import color,data,transform,io

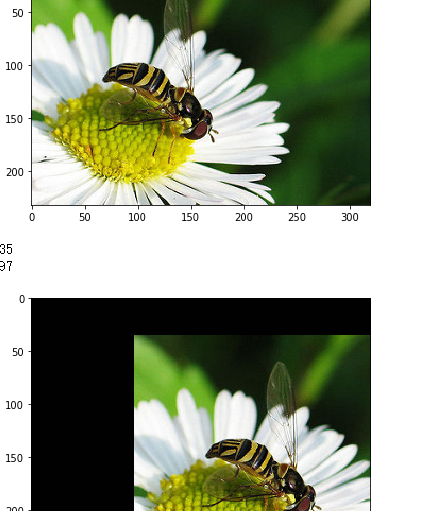

image = io.imread("F:\\data\\flowers\\daisy\\5547758_eea9edfd54_n.jpg")

io.imshow(image)

plt.show()

x = np.random.randint(-100,100)

print(x)

y = np.random.randint(-100,100)

print(y)

moveImage=ndimage.shift(image,(x,y,0),cval=0.5)

io.imshow(moveImage)

plt.show()
